A Drug Launch Case Study in the Amazing Efficiency of a Data Team Using DataOps

How a Small Team Powered the Multi-Billion Dollar Acquisition of a Pharma Startup

When launching a groundbreaking pharmaceutical product, the stakes and the rewards couldn’t be higher. This blog dives into the remarkable journey of a data team that achieved unparalleled efficiency using DataOps principles and software that transformed their analytics and data teams into a hyper-efficient powerhouse. This team built data assets with best-in-class productivity and quality through an iterative, automated approach. We will cover four parts: establishing the infrastructure, getting the data, iterating and automating, and using small, empowered teams.

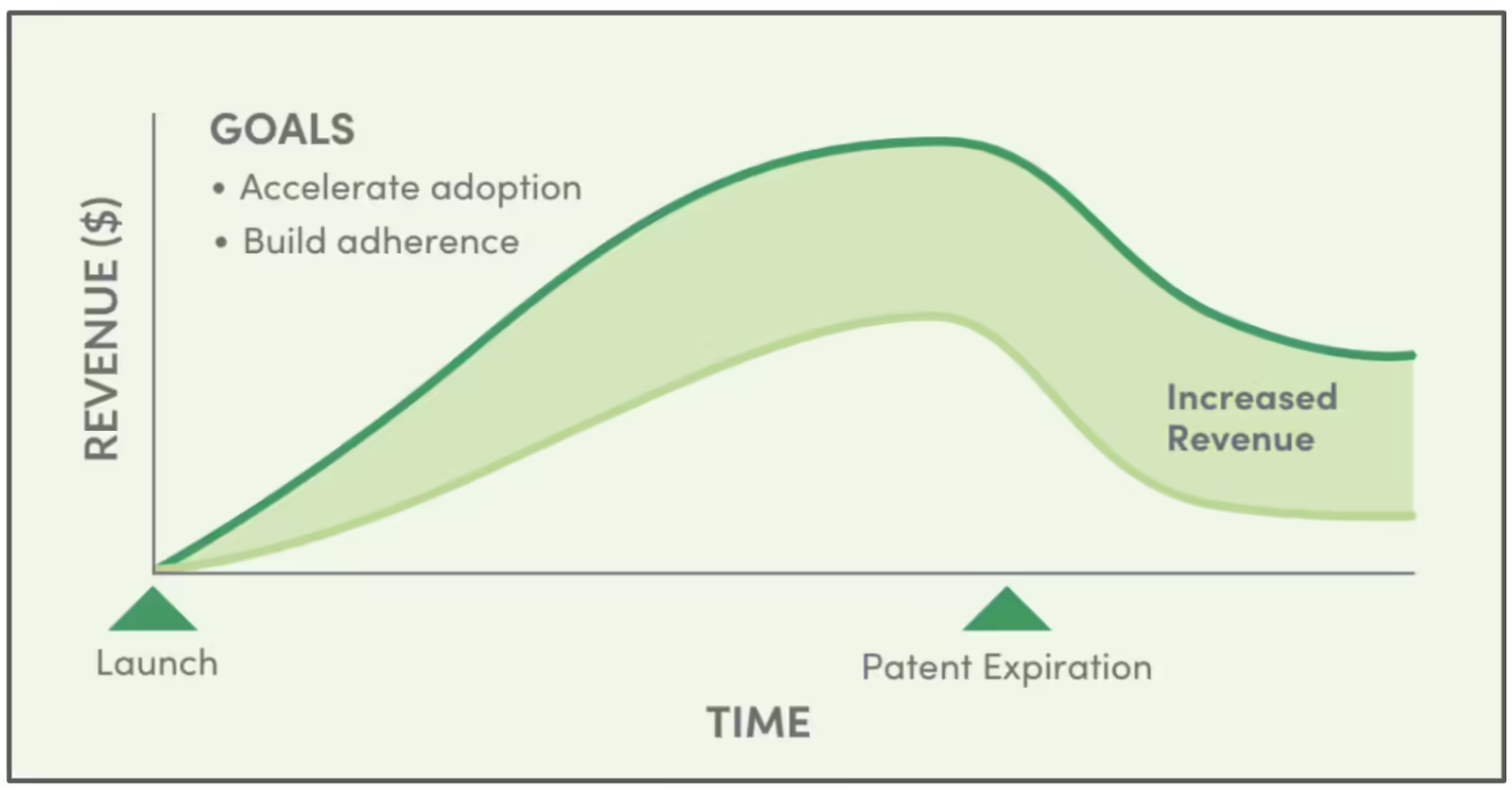

Since the speed at which a product grows during a launch determines the overall lifetime revenue (see graphic below), this team knew it had just one chance for a successful launch.

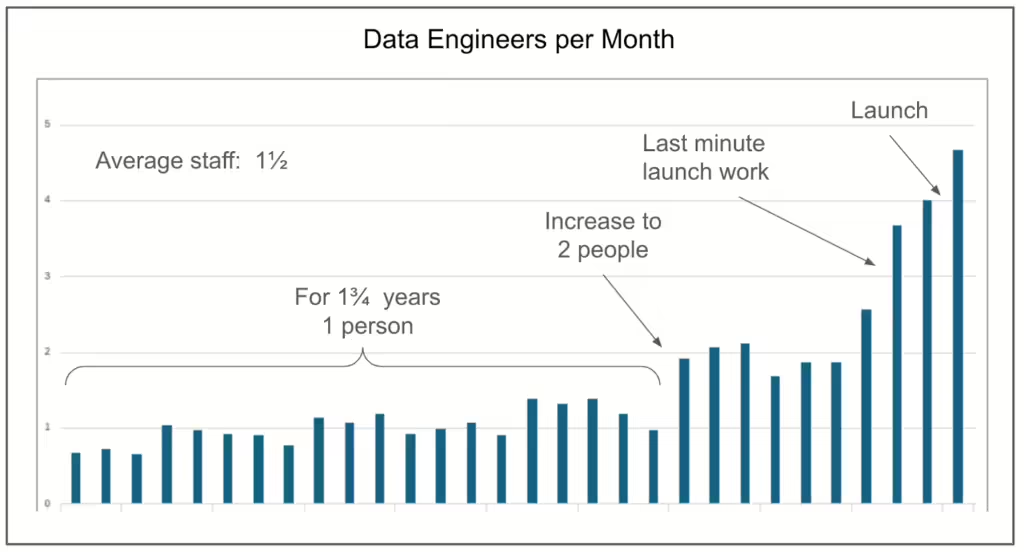

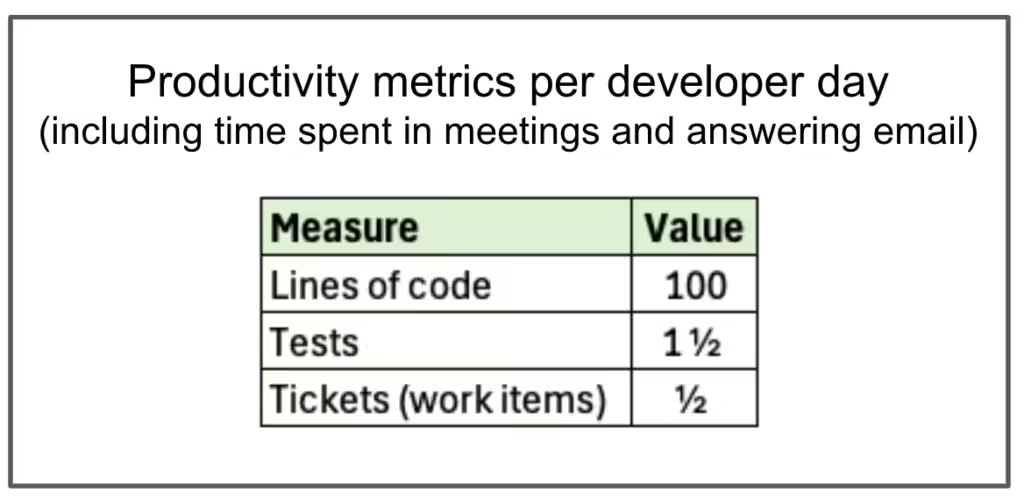

Their efforts culminated in more than just a successful drug launch—it fueled the acquisition of their company for billions of dollars. The numbers speak for themselves: working towards the launch, an average of 1.5 data engineers delivered over 100 lines of code and 1.5 data quality tests every day to support a cast of analysts and customers. Whether you’re curious about the infrastructure, the productivity, or the mindset that made this success story possible, you’ll want to keep reading to uncover the steps that turned a vision into a multi-billion-dollar reality.

Guiding Principles

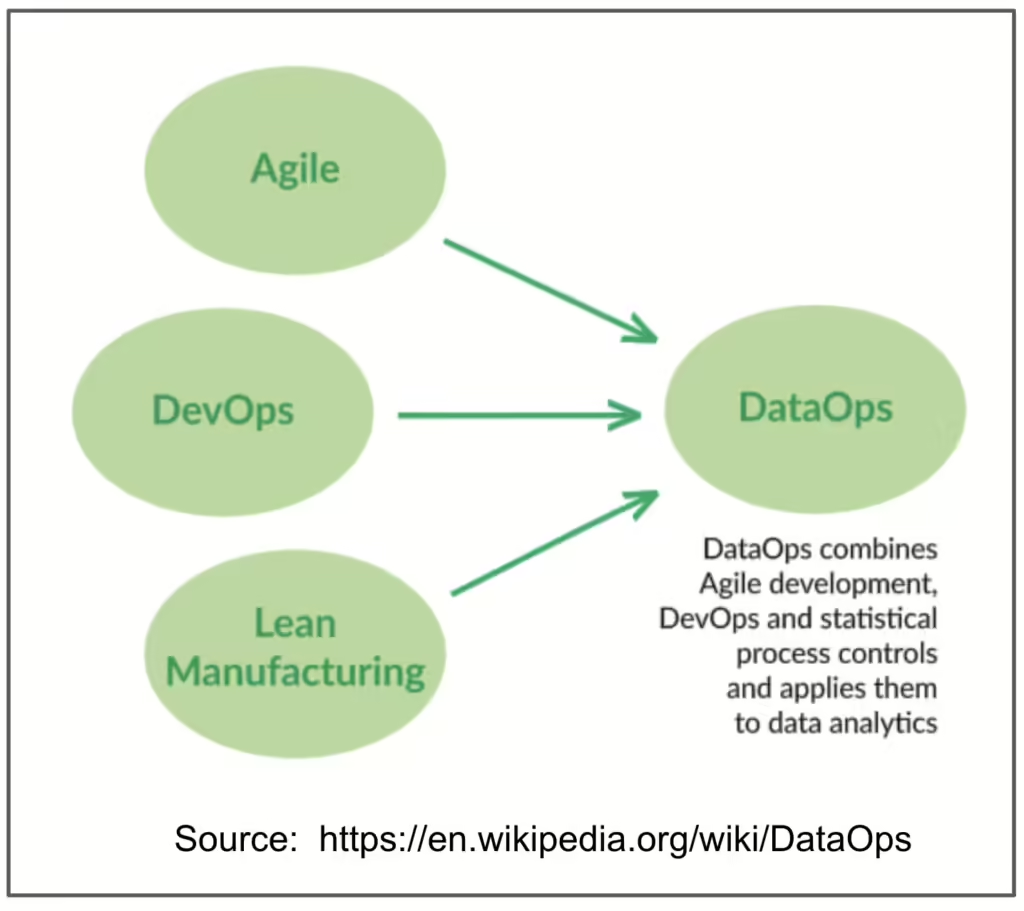

The foundation of the success relied on DataOps principles. Applying DataOps — an Agile, automation-focused approach to data engineering — ensured a dynamic feedback loop where data delivery and insights could evolve rapidly.

This agility translated into faster, more reliable data for stakeholders and empowered the team to iterate. The focus was on delivering value.

The project showed that smaller, empowered teams achieve higher impact than larger ones. These small, cross-functional teams ensured that members were deeply involved in the project operations, the technical setup, and the feedback cycle, leading to fewer delays, fewer bottlenecks, and faster decision-making. The company focused on delivering small increments of customer value – data sets, reports, and other items as their guiding principle. They selected best-in-class partners, tools, and vendors with that guiding principle in mind. Small, manageable increments marked the project’s delivery cadence. Starting simply and iterating quickly gave the team time to build foundational processes before adding complexity and scaling. Finally, embracing a ‘don’t be a hero, automate’ culture, the project emphasized owning and improving their work process rather than relying on extraordinary individual effort, ensuring sustainable progress and a stable, repeatable workflow.

For the data engineering team, these principles focus on building data assets that analysts trust, can control, and easily understand.

Establish the Infrastructure

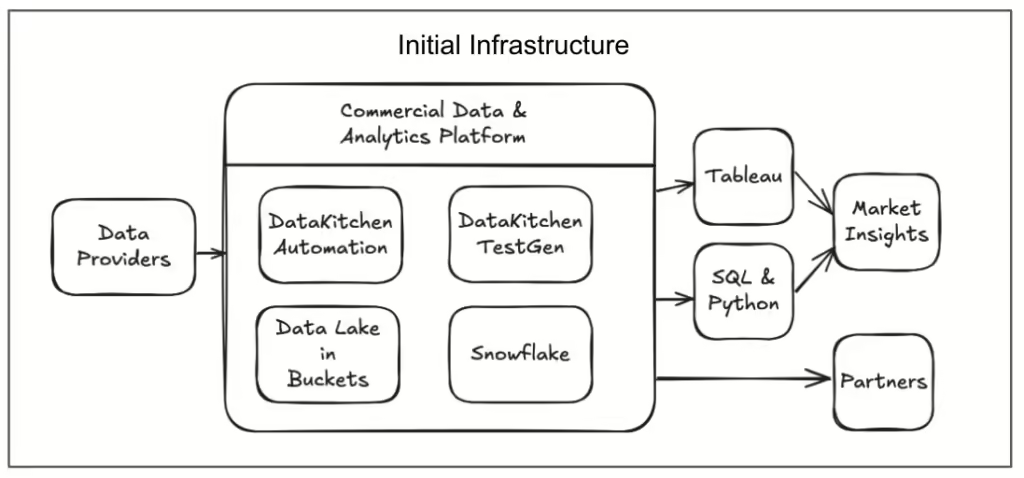

The project began with establishing the data infrastructure. They opted for Snowflake, a cloud-native data platform ideal for SQL-based analysis. AWS Redshift, GCP Big Query, or Azure Synapse work well, too. The team landed the data in a Data Lake implemented with cloud storage buckets and then loaded into Snowflake, enabling fast access and smooth integrations with analytical tools. Once the foundation was set, the team brought in the first one-time data cut, an essential step for early-stage analysis that established the company’s understanding of available insights. This cloud resource setup ensured the team could easily spin up environments, process large volumes of data, and adjust configurations quickly.

It is necessary to have more than a data lake and a database. A software system where processes can be developed and shared is required. The team used DataKitchen’s DataOps Automation Software, which provided one place to collaborate and orchestrate source code, data quality, and deliver features into production. The following diagram shows what the initial infrastructure looked like.

Get the Data

Securing data was another critical phase. A project of this scale required high-quality, historical data that could offer insights into market behavior over time. The company’s analysts, guided by DataKitchen engineers, worked with over a decade of claims data, which consisted of tens of billions of rows. DataKitchen loaded this data and implemented data tests to ensure integrity and data quality via statistical process control (SPC) from day one. By publishing a data dictionary to a wiki, analysts could quickly familiarize themselves with the data sets, providing a common language and understanding of the data, a valuable resource for new and seasoned team members. This centralization reduced confusion and established data standards early on, setting up a solid foundation for future analysis and a single source of truth.

Iterate and Automate to Analyze the Market and Establish Goals

The journey continued with data iterations. The company experimented with various data from sources such as IQVIA and Symphony Health, both well-known providers of pharmaceutical sales data, but they didn’t stop there. They explored claims data options from Komodo Health, MMIT, and Fingertip, evaluating the strengths of each set. The diverse range of data on NRx, TRx, sales force alignment, and zip code-to-territory mappings. EDI data, including 852, 844, and 867, added further depth to the project, enabling the team to glean additional insights. This variety was essential because the company’s data needs were both complex and nuanced. Even with a highly skilled team, forecasting the unique dynamics of a new drug required continuous validation of assumptions against these different data sources, ensuring a robust, well-rounded analytical approach.

The company was able to do this exploration cost-effectively. One data engineer was able to support the project for the first 1¾ years due to the best practices employed, including DataOps principles and the DataKitchen software to support the process. It wasn’t until nine months before launch that staff was increased to two people.

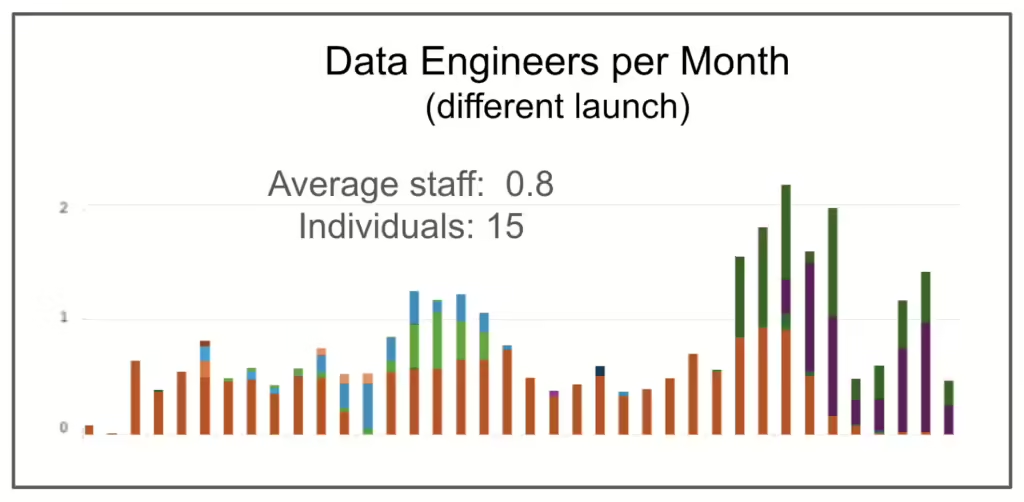

In a different launch, the company using DataKitchen’s Automation software were able to have 15 individuals contribute to the pre-launch analytics (over 36 months) with an average of 0.8 FTE. Here is a graph of that staffing (each color is a different individual).

Establish Other Key Systems and Capabilities

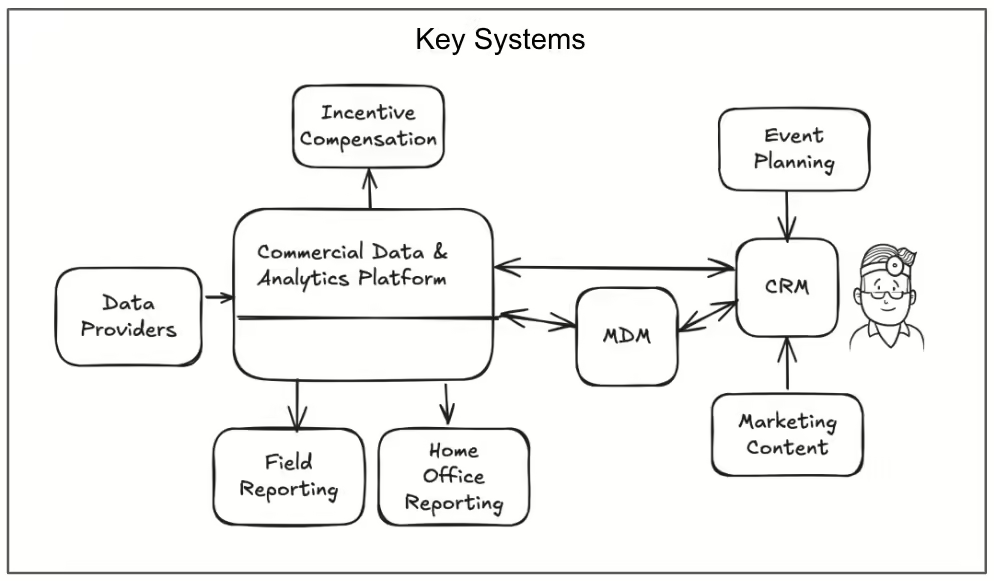

Besides syndicated data, the team needed a variety of specialized systems to support different areas of their commercial operations. A Master Data Management (MDM) system was vital for managing the list of physicians, with options considered, including IQVIA OneKey, Reltio, and Veeva Network. The company evaluated Constant Contact, Hubspot, and Salesforce Marketing Cloud for customer relationship management. Beghou, ZS, and KMK were contenders for call planning and salesforce design, while Hybrid Health and CVENT were considered for marketing content and event planning. Furthermore, an incentive compensation system was implemented to manage reward structures based on the data in Snowflake that was transformed and quality checked by DataKitchen software. The collaboration of these systems established a comprehensive digital ecosystem for the company’s commercial operations, ensuring every aspect of the marketing and sales journey was data-informed and optimized.

The following diagram shows the relationships between the key systems.

Start Early

The project culture fostered a balanced approach to time and expectations. Ample time to complete tasks reduced mistakes, allowed thorough documentation, testing, and automation, and ultimately enhanced the quality of the entire operation. Thousands of data tests were developed, and this extensive testing infrastructure enabled the deployment of analytics updates without fear of regression. Mistakes were seen as learning opportunities, and the anti-hero culture meant no individual bore the weight of project success. Systems were set up to allow smooth transitions and reduce the need for overreliance on individual effort. The long-term, sustainable success of the project reflected a “marathon, not a sprint” mentality, with strong systems supporting reliable execution over the project lifecycle.

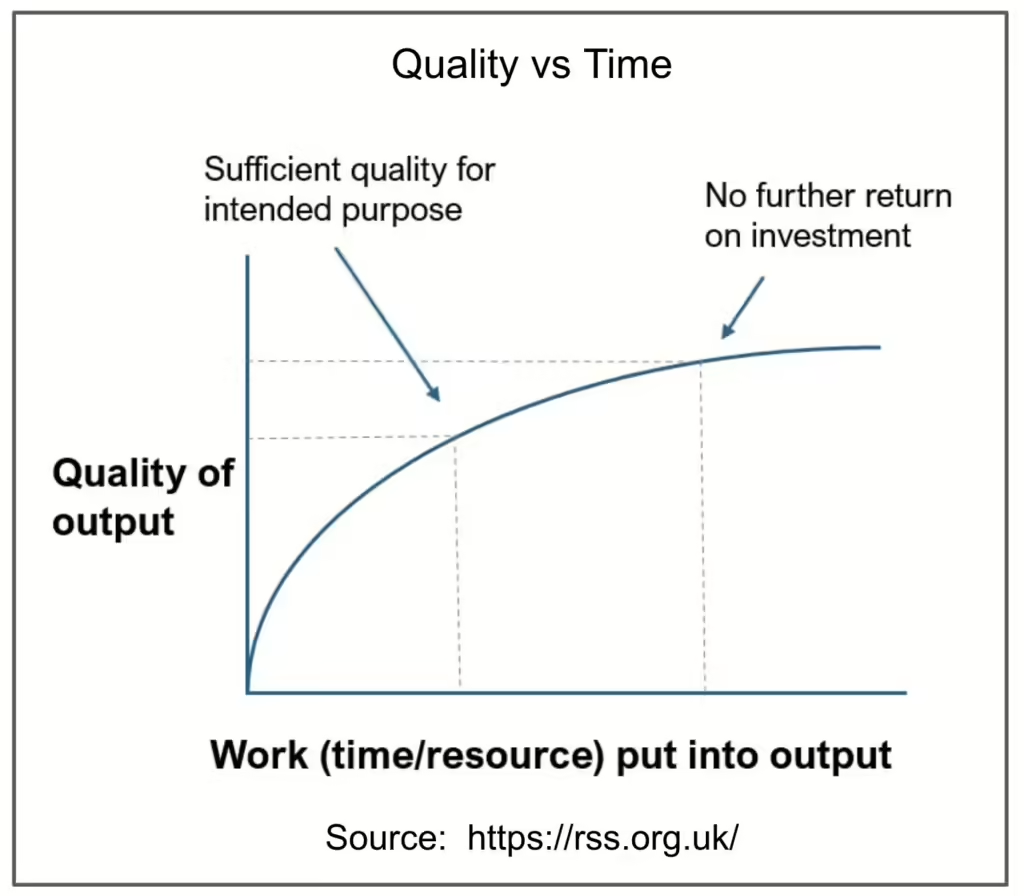

A paper by the Royal Statistical Society shows that as more time is given to a task, quality goes up, and after a point, you hit diminishing returns. The managers of this project were able to hit that sweet spot. See the graph below.

Use Small Empowered Data Teams

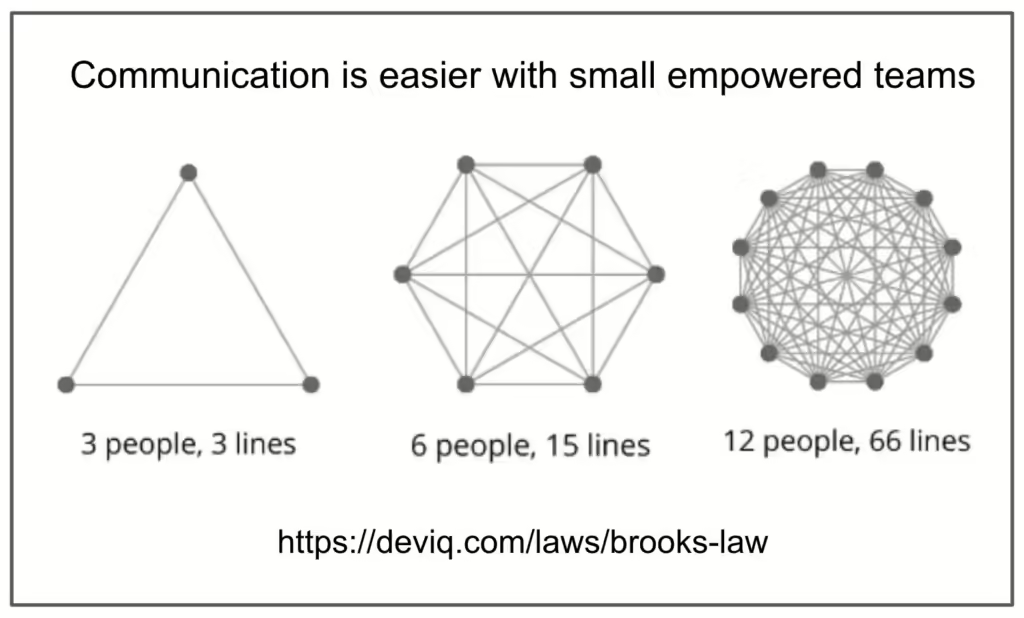

A key success factor was the emphasis on small, empowered teams. Communication was streamlined, which reduced the need for frequent meetings and excessive coordination. Team members knew who to approach for specific tasks or clarifications, enhancing productivity and accountability. This environment encouraged ownership of tasks and was conducive to proactive problem-solving. Remember that communication increases exponentially, (N2 – N)/2, with the number of individuals involved.

Results

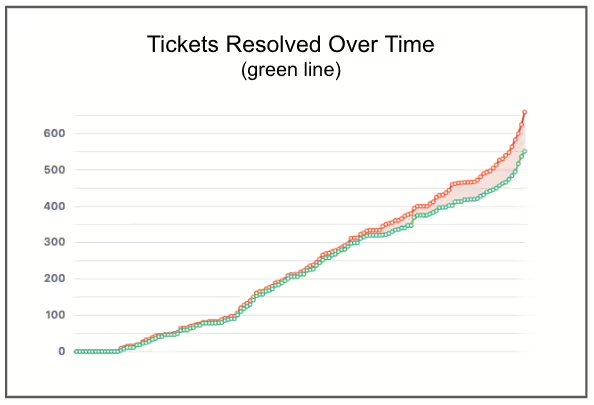

In the end, the company team’s data infrastructure and analytics environment were well-prepared for the launch, with key functions such as field reporting ready six months before the expected launch. Reports for incentive compensation, claims, Rx data, payer plans, marketing automation, and more were prepared and fully functional, positioning the company for success. The ability to deploy reliable data pipelines across various analytics functions instilled confidence and paved the way for efficient, effective operations within the broader organization.

The team was able to achieve excellent technical productivity results.

Conclusion

The combination of DataKitchen’s Automation software, a committed team, and agile, scalable processes culminated in a well-prepared, data-rich environment that was invaluable during the launch preparation. The company’s story exemplifies how rigorous DataOps principles, a strong cultural foundation, and a clear roadmap can transform complex data analytics into a strategic asset, enabling agility and sustained impact in a highly competitive, data-driven industry.