A New, More Effective Approach To Data Quality Assessments

In DataKitchen’s recent webinar, CEO Christopher Bergh offered a compelling new approach to a timeless problem: how do we improve data quality in organizations where no one truly owns it, everyone needs it, and no one has the authority to enforce change? With clarity and a bit of dry humor, Bergh laid out the uncomfortable truth that improving data quality is less about perfect metrics and more about influence, agility, and a bit of personal grit.

Bergh’s message was refreshingly direct for those stuck in meetings debating whether accuracy is a subset of validity or vice versa. Traditional data quality assessments, he explained, too often fall into the trap of abstract rigor—enormous scope, manual checks, detailed taxonomies—and ultimately fail to produce actionable outcomes. Instead, Bergh proposed an iterative, influence-first method focused on solving the real needs of data consumers using open-source automation.

Data Quality Assessment: It Isn’t About Analysis – It’s About Advocacy

The core problem, he noted, is a widespread misalignment between those who produce data and those who use it. Data owners often believe their datasets are good enough for their needs, so they aren’t particularly motivated to fix issues that don’t affect them directly. Meanwhile, consumers of that data (marketing, sales, finance) often find it unfit for purpose. One vivid example Bergh shared is a marketing team trying to run a campaign only to discover that half of the customer records have valid emails, half have valid street addresses, and only 20% have both. In short, the campaign can’t run, and the data quality team is blamed.
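The arithmetic behind that example is worth seeing once: each field can look acceptable on its own while the combination the campaign needs is rare. The sketch below is illustrative only. The records, the `has_good_email` and `has_good_address` checks, and the `coverage` helper are all hypothetical and were not shown in the webinar.

```python
# Hypothetical toy records: (email, street_address). None marks a missing value.
records = [
    ("ann@example.com", "1 Main St"),
    ("bob@example.com", "2 Oak Ave"),
    ("cat@example.com", None),
    ("dan@example.com", None),
    ("eve@example.com", None),
    (None, "6 Pine Rd"),
    (None, "7 Elm St"),
    (None, "8 Lake Dr"),
    ("not-an-email", None),
    (None, None),
]


def has_good_email(email):
    # Deliberately crude check (assumption): present and contains "@".
    return email is not None and "@" in email


def has_good_address(address):
    # Assumption: any non-blank string counts as a usable address.
    return bool(address and address.strip())


def coverage(records):
    """Share of records with a good email, a good address, and both."""
    total = len(records)
    email_ok = sum(has_good_email(e) for e, _ in records)
    address_ok = sum(has_good_address(a) for _, a in records)
    both_ok = sum(has_good_email(e) and has_good_address(a) for e, a in records)
    return {
        "email": email_ok / total,
        "address": address_ok / total,
        "both": both_ok / total,
    }


print(coverage(records))
```

A data owner who checks only one column sees 50% coverage. The marketing team, which needs both columns populated, sees 20%.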

Bergh argued that this disconnect mirrors the ecological concept of the “tragedy of the commons,” where shared resources are depleted because individuals act in their own short-term interest. In data quality, that shared resource is clean, usable data, and no one wants to take responsibility for fixing problems that benefit others more than themselves.

Data Quality Assessment: It Isn’t A Science Problem – It’s A Sales Problem

Bergh’s key insight is that data quality leaders have little formal power. They cannot enforce standards or mandate process changes. What they can do is influence. And that means rethinking their role—not as enforcers of rules but as advocates, even salespeople, for quality improvement. He joked that reading Dale Carnegie might help more than reading ISO 8000. In his view, assessments are only useful if they lead to improvement—and improvement only comes from convincing others to act.

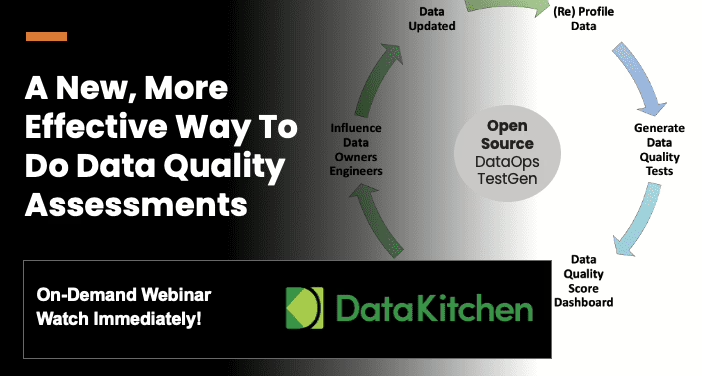

To that end, Bergh introduced a new, more effective approach to data quality assessments, enabled by DataKitchen’s free open-source software. Rather than starting with a massive inventory or an abstract framework of dimensions, he advised beginning with a single, urgent business need. If data issues block a department, that’s your entry point. Assess only the data relevant to that specific task. Profile it. Run quality tests. Score it. Then show the impacted stakeholders how their business goals are being undermined—and offer a clear, lightweight plan to fix it.
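The profile, test, and score steps can be sketched in a few lines of plain Python to make the sequence concrete. This is not TestGen’s API or output. The sample rows, the three checks, and the unweighted scoring rule are assumptions chosen only for illustration.

```python
from collections import Counter

# Hypothetical rows from the one dataset the blocked team depends on.
rows = [
    {"customer_id": 1, "email": "ann@example.com", "country": "US"},
    {"customer_id": 2, "email": None, "country": "US"},
    {"customer_id": 3, "email": "cat@example.com", "country": "us"},
    {"customer_id": 3, "email": "dan@example", "country": None},
]


def profile(rows):
    """Step 1: per-column null count and distinct non-null value count."""
    return {
        col: {
            "nulls": sum(r[col] is None for r in rows),
            "distinct": len({r[col] for r in rows if r[col] is not None}),
        }
        for col in rows[0].keys()
    }


def run_tests(rows):
    """Step 2: fraction of rows passing each (hypothetical) quality check."""
    n = len(rows)
    id_counts = Counter(r["customer_id"] for r in rows)
    return {
        "customer_id_unique": sum(id_counts[r["customer_id"]] == 1 for r in rows) / n,
        "email_present": sum(r["email"] is not None for r in rows) / n,
        "country_uppercase": sum(
            r["country"] is not None and r["country"].isupper() for r in rows
        ) / n,
    }


def score(test_results):
    """Step 3: unweighted mean pass rate on a 0-100 scale (an assumed rule)."""
    return round(100 * sum(test_results.values()) / len(test_results), 1)


results = run_tests(rows)
print(profile(rows))
print(results)
print(score(results))
```

The single number matters less than the per-check pass rates behind it, since those are what show a stakeholder exactly which problem blocks their task.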

The open-source tool, DataOps Data Quality TestGen, supports all of this (profiling, test generation, scoring, and assessment dashboards) and doesn’t require writing SQL or Python. Automation, Bergh stressed, is crucial. Most data quality teams don’t have time to write thousands of tests by hand or manage an ever-growing spreadsheet of issues. Intelligent automation closes that gap, enabling teams to focus on high-impact, human work: aligning stakeholders, advocating for fixes, and tracking progress through scorecards.

Data Quality Assessment: It Isn’t A Slow Project – It’s An Agile, Iterative Method

What emerged from the webinar was a powerful reframe of the data quality role: it’s not about policing tables or perfecting dimensions. It’s about advocacy. It’s about choosing a narrow scope, delivering quick wins, and using that success to build momentum and trust. It’s about turning assessments into action, not through command but persuasion.

For data professionals who feel stuck in endless cleanup loops or sidelined by organizational apathy, Bergh’s message was more than a tutorial—it was a call to arms. Improving data quality isn’t going to get easier. But it can become more effective, focused, and even more fulfilling if we remember that leadership in this space isn’t just about knowing the data. It’s about connecting with the people who use it.

The full webinar, including a live demo of the open-source assessment tool, is available here.

Whether you’re a data engineer, analyst, or quality lead, it’s worth a watch. Just don’t expect a lecture on dimensions. Expect a blueprint for influence.