Is Your Team in Denial about Data Quality?

Here’s How to Tell

In many organizations, data quality problems fester in the shadows: ignored, rationalized, or swept aside with confident-sounding statements that mask a deeper dysfunction. The cost of poor data quality is well documented (wasted time, lost trust, and flawed decisions), yet teams often operate in collective denial. The problem isn’t that they don’t care; it’s that they’ve built a culture of rationalization, where signs of trouble are misread as proof of success.

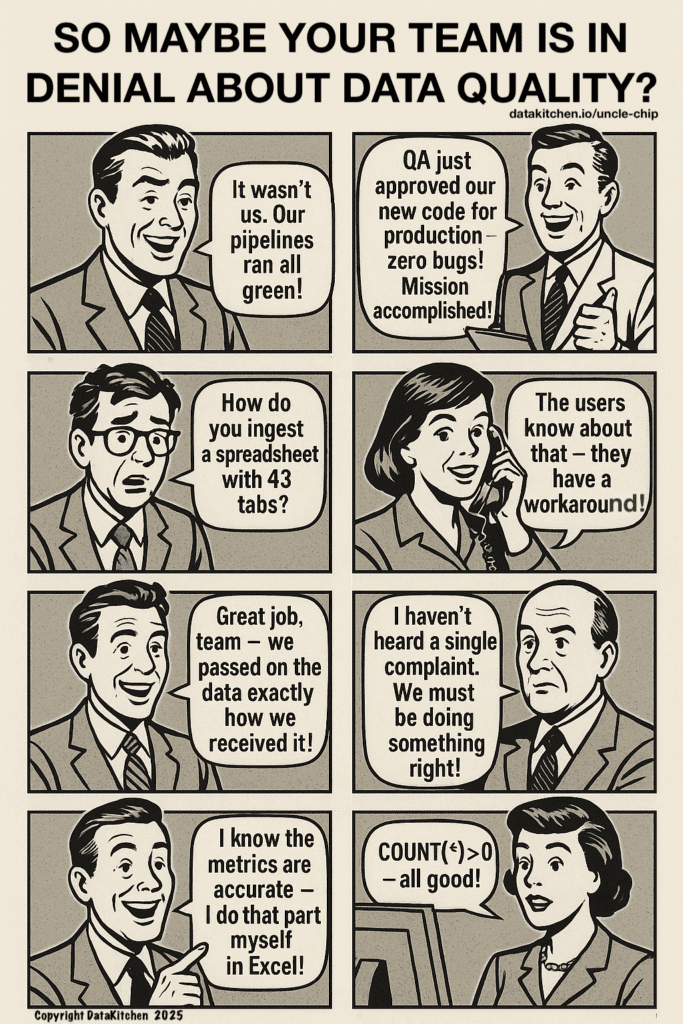

Take a closer look at the illustration Uncle Chip created above. It’s a snapshot of common justifications that data teams use to avoid grappling with the real condition of their data. These aren’t caricatures; they’re alarmingly familiar echoes from meetings, Slack threads, and status updates across the industry. A pipeline ran “all green”? That doesn’t mean the data inside was correct. QA passed the code? Great, but that doesn’t mean the business logic downstream was valid. “We passed the data exactly as we received it”? That’s not a defense—that’s a surrender of responsibility.

The woman on the phone saying, “The users know about that: they have a workaround!” might be trying to help, but she’s normalizing failure. Workarounds don’t fix broken data; they institutionalize it. When someone claims, “I haven’t heard a single complaint,” it usually means users have stopped expecting anything better or have worked around the data team entirely. And the engineer proudly pointing to COUNT(*) as validation? A matching row count says nothing about whether the values in those rows are complete, unique, or correct. That’s not testing; that’s wishful thinking.
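To make the COUNT(*) point concrete, here is a minimal sketch using Python’s built-in `sqlite3` module. The tables `source_orders` and `loaded_orders` and the three content checks are hypothetical, chosen only for illustration; they are not taken from any particular tool. The row counts match, yet the loaded data is wrong in three ways a count cannot see.

```python
import sqlite3

# Hypothetical example: a source table and the table a pipeline loaded from it.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE source_orders (order_id INTEGER, customer_id INTEGER, amount REAL);
CREATE TABLE loaded_orders (order_id INTEGER, customer_id INTEGER, amount REAL);
INSERT INTO source_orders VALUES (1, 10, 25.0), (2, 11, 40.0), (3, 12, 15.5);
INSERT INTO loaded_orders VALUES (1, 10, 25.0), (2, NULL, 40.0), (2, NULL, 40.0);
""")
# The simulated load duplicated order 2, dropped order 3, and lost customer_id values.


def scalar(sql):
    """Run a query that returns a single value and return that value."""
    return conn.execute(sql).fetchone()[0]


# The "validation" from the bingo card: compare row counts only.
count_check_passes = (
    scalar("SELECT COUNT(*) FROM source_orders")
    == scalar("SELECT COUNT(*) FROM loaded_orders")
)

# Illustrative content checks that a row count cannot see.
content_checks = {
    "no_null_customer_id": scalar(
        "SELECT COUNT(*) FROM loaded_orders WHERE customer_id IS NULL"
    ) == 0,
    "order_id_unique": scalar(
        "SELECT COUNT(*) - COUNT(DISTINCT order_id) FROM loaded_orders"
    ) == 0,
    "all_source_orders_loaded": scalar(
        "SELECT COUNT(*) FROM source_orders "
        "WHERE order_id NOT IN (SELECT order_id FROM loaded_orders)"
    ) == 0,
}

print("COUNT(*) check passes:", count_check_passes)
for name, passed in content_checks.items():
    print(f"{name}: {'PASS' if passed else 'FAIL'}")
```

Run as written, the count comparison reports `True` while all three content checks fail. Because each check is a query rather than a manual spreadsheet step, it can be rerun on every load.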

One of the most telling lines comes from the self-assured analyst who declares that metrics must be accurate because he calculated them in Excel. Confidence is not a substitute for verification. Manual processes, however well-intentioned, are rarely repeatable, scalable, or trustworthy. That’s not data quality; that’s data folklore.

Denial about data quality doesn’t always look like laziness or malice. More often, it’s baked into team culture, shaped by tools that don’t make quality visible, roles that lack clear ownership, and the absence of a shared language for even talking about what’s broken. When success is measured by the absence of red flags instead of the presence of verified data quality, teams fool themselves into thinking everything is fine, until the report goes live. Then someone important finds a problem in the data, and the team spends all night in a ‘war room’ poring over log files and firing off ad hoc queries.

So, how do you break the cycle?

Start by playing Data Quality Denial Bingo with your team. Print out the image above or share it during a team meeting. Ask each team member to check off every statement they’ve heard (or said themselves) in the past month. If someone gets five in a row, they win… or the whole team loses. Use the laughter and mild embarrassment that follow as a gateway to a serious conversation about your data quality testing practices, data assessment, and data observability. Ask your team what it means to take ownership of data quality.

Because the first step to fixing the problem is admitting you have one. Is your team ready?

We built DataOps Data Quality TestGen as the first tool for data teams looking to break the cycle of surrender, failure normalization, wishful thinking, and unverified confidence. Those teams need a data quality tool that is both open source and intelligent. DataKitchen’s TestGen lets you start testing, profiling, and assessing thousands of tables immediately. It’s AI-driven, so you can get valuable results with just a few clicks.

It profiles your data, builds a catalog, instantly generates hundreds of valuable data quality checks, and lets you create data quality assessment dashboards. Interested? Install here: https://info.datakitchen.io/testgen