When Timing Goes Wrong: How Latency Issues Cascade Into Data Quality Nightmares

As data engineers, we’ve all been there. A dashboard shows anomalous metrics, a machine learning model starts producing bizarre predictions, or stakeholders complain about inconsistent reports. We dive deep into data validation, check our transformations, and examine our schemas, only to discover the real culprit was something far more subtle: timing.

Process latency – the delays and misalignments when data moves through our systems – is one of the most underestimated threats to data quality in modern architectures. While we’ve gotten excellent at building robust pipelines and implementing data quality checks, we often treat timing as someone else’s problem. This is a dangerous oversight.

The Hidden Nature of Latency Problems

Unlike schema violations or null value issues that surface immediately in our monitoring dashboards, latency problems are insidious. They don’t break your pipelines – they corrupt your insights. A report might run successfully every morning, but if it’s pulling yesterday’s transactions against today’s customer dimensions, you’re serving up beautifully formatted garbage.

The challenge intensifies because latency issues rarely manifest as obvious timing problems. Instead, they masquerade as:

- Data inconsistencies when upstream sources haven’t synchronized properly

- Missing records that appear to be data loss, but are delayed deliveries

- Stale dimensions that make fact tables look wrong

- Integration conflicts between systems operating on different schedules

The Four Faces of Latency-Induced Data Quality Issues

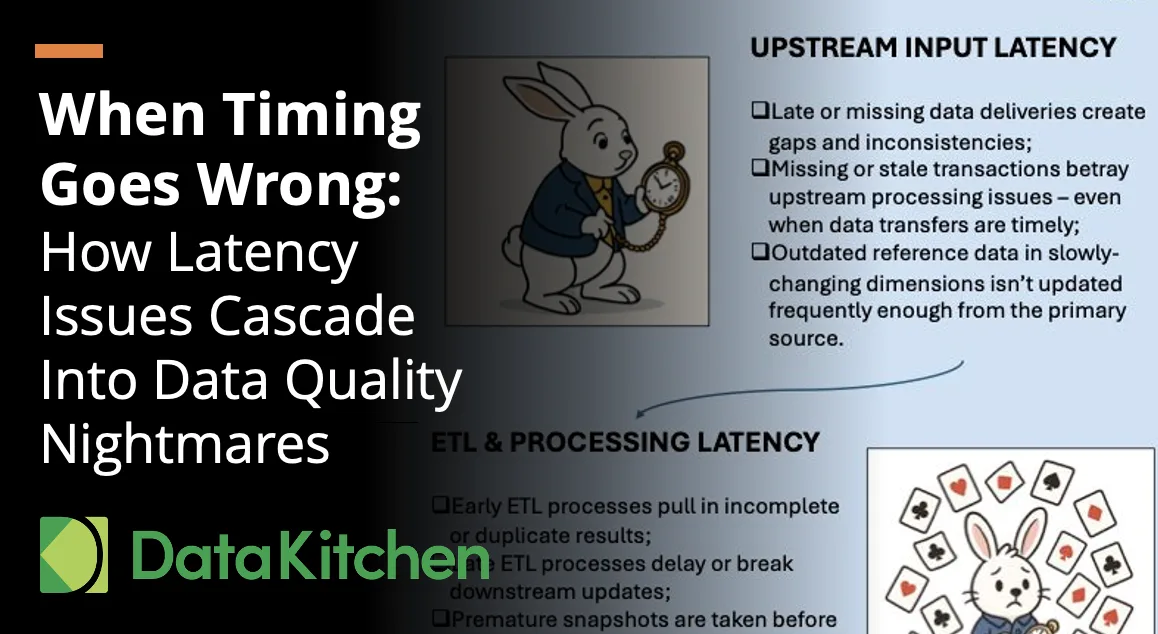

Upstream Input Latency

This is where data quality problems often begin. When source systems deliver data late or inconsistently, it creates gaps that propagate downstream. Missing transactions, stale reference data, and delayed dimension updates all stem from this root cause. Your perfectly architected data warehouse becomes unreliable not because of design flaws, but because the data it depends on arrives unpredictably.

ETL & Processing Latency

Even when source data arrives on time, processing delays create their own quality issues. Early ETL jobs might pull incomplete datasets, while late-running processes create cascading delays throughout your pipeline. Premature snapshots capture partial states, and overlapping batch windows intermingle different versions of the same data, creating temporal chaos.

Integration Drift

The most complex challenge emerges when different systems maintain separate versions of shared entities. Customer records updated in your CRM don’t immediately reflect in your analytics warehouse. Product catalogs in your e-commerce platform are updated, while your recommendation engine still references the old version. These synchronization gaps create a fragmented view of reality across your data ecosystem.

Delivery & Availability Gaps

The final manifestation occurs when downstream consumers expect fresh data that isn’t available. Reports are run on schedule, but they reflect outdated information. Machine learning models retrain on outdated features. Business users make decisions based on metrics that are hours or days behind reality, and they often remain unaware of this discrepancy.

Why We Ignore the Timing Problem

There’s a reason latency issues persist despite their impact: they’re fundamentally collaboration problems disguised as technical ones. It’s much easier to focus on what’s within our direct control – our schemas, our transformations, our infrastructure – than to coordinate schedules across teams, systems, and vendors.

We build our monitoring around what we can measure easily: pipeline success rates, data volumes, and schema compliance. But measuring cross-system timing dependencies? That requires visibility into other teams’ processes, understanding of business logic we didn’t design, and ongoing coordination that extends far beyond our immediate technical domain.

The Data Mesh Dilemma

As organizations embrace data mesh architectures, latency challenges multiply exponentially. When data ownership becomes distributed across multiple product teams, timing coordination becomes everyone’s responsibility, which often means it becomes no one’s responsibility.

Each domain team optimizes its data products independently. Sales refreshes customer segments nightly, marketing updates campaign performance hourly, and finance reconciles transactions on a weekly basis. These decisions make perfect sense within each domain, but the interactions between these schedules create systematic quality issues that no single team owns or can easily detect.

Taking Ownership of Time

The solution isn’t to abandon modern data architectures, but to explicitly own the timing aspects of data quality. This means:

Treating schedules as first-class design artifacts. Document not just what data moves where, but when it moves and what depends on that timing. Make schedule dependencies as visible as data lineage.

Building latency into your data quality framework. Monitor not just whether data arrived, but whether it arrived when expected relative to its dependencies. Alert on timing anomalies before they become data quality incidents.

Establishing cross-domain timing contracts. In data mesh implementations, service level agreements should cover temporal aspects: not just “we’ll provide customer data,” but “we’ll provide customer data by 2 AM EST, reflecting all transactions processed by midnight.” Make these explicit as part of your Data Journey.

Designing for timing failures. Build graceful degradation into your systems. When fresh data isn’t available, how does your system behave? Can it serve stale data with appropriate caveats rather than serving incorrect data silently?

Beyond Technical Solutions

Ultimately, addressing latency-induced data quality issues requires us to expand our definition of data engineering beyond purely technical concerns. We need to become coordinators of temporal complexity, building systems that not only move data efficiently but also do so predictably and transparently.

This means having uncomfortable conversations about cross-team dependencies. It means pushing back when unrealistic timing expectations create quality trade-offs. And it means designing systems that make timing problems visible rather than hiding them behind successful pipeline metrics.

The next time you’re debugging a mysterious data quality issue, don’t just look at the data – look at the clock. The problem might not be in your transformations or your infrastructure. It might be in time itself.

Timing issues in data systems are often the invisible root cause of visible data quality problems. By treating process latency as a first-class concern in our data architecture, we can build more reliable systems and prevent quality issues before they impact business decisions.

Want to fix these types of issues? Try our open-source tools