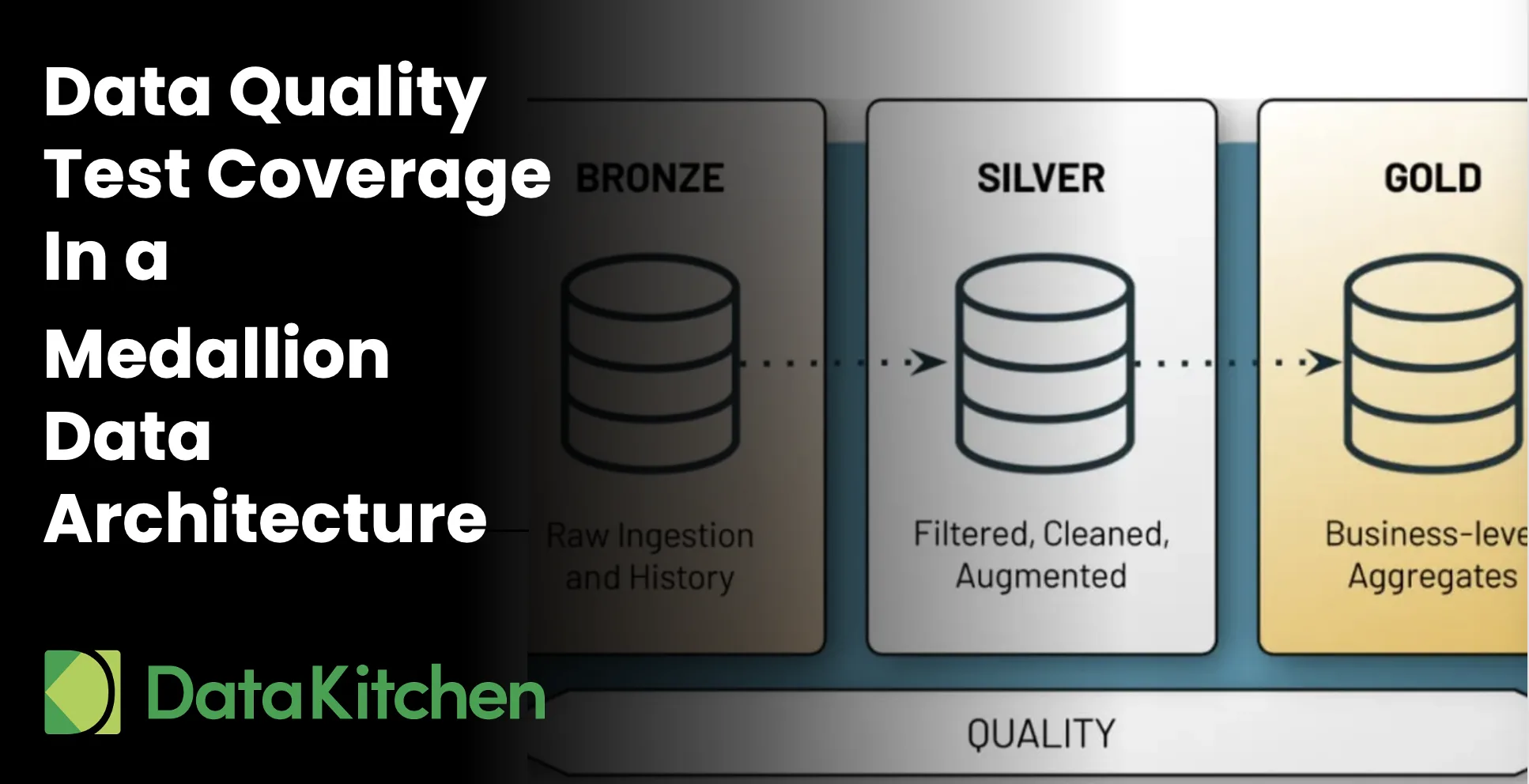

Data quality test coverage has become one of the most critical challenges facing modern data engineering teams, particularly as organizations adopt the increasingly popular Medallion data architecture. While this multi-layered approach to data processing offers significant advantages in organizing and refining data, it also introduces complexity that demands rigorous testing strategies to ensure data integrity across all layers.

Understanding the Medallion Data Architecture

The Medallion architecture represents a structured approach to data processing, organizing data into distinct layers that serve specific purposes in the data transformation journey. This architecture has gained tremendous popularity in data lakehouse implementations, where organizations need to balance raw data storage with analytical readiness.

The Bronze layer serves as the initial landing zone for all incoming raw data, capturing it in its unprocessed, original form. This layer maintains the complete fidelity of source data, preserving every detail that might be needed for future analysis or compliance requirements. Data engineers often refer to this as the “source of truth” layer, where data remains unchanged from its original state.

Moving up the architecture, the Silver layer represents the processing stage where data undergoes “just enough” cleaning and transformation to provide a unified, enterprise-wide view of core business entities. This layer focuses on standardization, deduplication, and basic quality checks while maintaining the granular detail needed for various downstream applications. The Silver layer essentially creates a cleansed, normalized version of the Bronze data without heavy aggregation.

Finally, the Gold layer represents the pinnacle of the Medallion architecture, housing fully refined, aggregated, and analysis-ready data. Data in this layer is typically organized into project-specific schemas optimized for business intelligence and advanced analytics. The Gold layer delivers the final data products that business users interact with through dashboards, reports, and analytical applications.

Some organizations extend this pattern with a Platinum layer beyond Gold, while others prefer the simplicity of L1, L2, and L3 designations. Regardless of naming conventions, the fundamental challenge remains consistent across all implementations: data quality becomes increasingly complex as data moves through these layers, making comprehensive test coverage essential for maintaining trust and reliability.

The Critical Need for Test Coverage

Test coverage in data engineering shares fundamental principles with software testing but addresses unique challenges inherent to data systems. Just as software teams would never consider releasing partially tested code, data teams must adopt equally rigorous standards for their data products. The consequences of insufficient testing in data systems can be far-reaching, affecting business decisions, regulatory compliance, and organizational trust.

The primary motivation for comprehensive test coverage centers on two fundamental concerns that every data engineering team faces: preventing production breaks when deploying changes and avoiding the embarrassment of customers discovering data quality issues before internal teams do. These scenarios represent not just technical failures but organizational credibility challenges that can have lasting impacts on stakeholder confidence.

Data test coverage refers to the extent to which automated quality checks cover your data, pipelines, transformations, and outputs. The higher your coverage, the more confident you can be that the data you produce is accurate, reliable, and trustworthy. Many organizations mistakenly assume that occasional spot checks or ad-hoc validations provide sufficient protection against data quality issues. In practice, manual, partial testing leaves gaps in visibility, and problems surface at the worst possible moments.

The analogy to software development proves particularly relevant here. Imagine releasing software that has only been partially tested—no development team would accept such risk. The same standards should apply to data systems, where incomplete testing can lead to faulty business decisions, compliance violations, and eroded trust in data-driven insights.

Strategic Placement of Test Coverage

Effective test coverage in a Medallion architecture requires understanding both where and when to implement testing strategies. The placement of tests follows two primary patterns: development test coverage and production test coverage, each serving distinct but complementary purposes in maintaining data quality.

Development test coverage operates within what data engineers refer to as the Innovation Pipeline, where new features, transformations, and data sources are developed and validated before being promoted to production. During this phase, both new and existing tests run against changes to provide high confidence that modifications haven’t broken existing functionality. This represents a critical checkpoint where code changes while data remains static, allowing teams to validate transformations against known datasets.

Production test coverage functions within the Value Pipeline, ensuring that data quality remains high and error rates stay low during active data processing. In this environment, code remains static while data constantly changes, requiring different testing approaches that focus on data consistency, freshness, and adherence to expected patterns. These production tests often mirror development tests but operate against live, flowing data rather than static test datasets.

The beauty of this dual approach lies in the fact that development and production tests can often be identical, providing consistency across environments while addressing the different dynamics of static versus flowing data. This consistency enables teams to build confidence in their testing strategies and ensures that validation logic remains synchronized across the entire data lifecycle.
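A minimal sketch of that idea, assuming a hypothetical `customer_id` column and in-memory rows rather than a real warehouse connection: the same check function runs once against a fixed development fixture and once against a batch standing in for live production data.

```python
from typing import Iterable, Mapping

Row = Mapping[str, object]


def check_no_null_ids(rows: Iterable[Row], id_column: str = "customer_id") -> bool:
    """Pass only if every row has a non-null value in id_column."""
    return all(row.get(id_column) is not None for row in rows)


# Development: the code is changing, the data is a fixed fixture.
static_fixture = [{"customer_id": 1}, {"customer_id": 2}]
dev_passed = check_no_null_ids(static_fixture)

# Production: the code is fixed, the data is whatever arrived in the latest batch.
latest_batch = [{"customer_id": 7}, {"customer_id": None}]  # hypothetical batch
prod_passed = check_no_null_ids(latest_batch)

print(dev_passed, prod_passed)  # True False
```

Because the validation logic lives in one function, a change to the rule applies to both pipelines at once.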

Comprehensive test coverage must extend beyond just data validation to encompass every step and tool in your value pipeline. Whether you’re working with Python code for data access, SQL transformations in ETL processes, R code for modeling, Tableau workbooks for visualization, or online reporting platforms, each component requires specific validation approaches. The goal is to ensure that data inputs remain free from issues, business logic continues operating correctly, and outputs maintain consistency across all downstream applications.

Implementing Shift Left and Shift Down Testing

Modern data engineering practices emphasize two complementary testing philosophies that significantly improve data quality while reducing costs and complexity. Shift Left testing involves executing data quality tests earlier in the data pipeline lifecycle, ensuring that issues are identified and resolved before they propagate downstream. This approach recognizes that early detection dramatically reduces the effort and cost required to address data quality problems.

Shift Down testing embraces integration and end-to-end testing methodologies, validating not just isolated components but also the interactions and cumulative impacts within the entire data ecosystem. Rather than testing individual transformations in isolation, Shift Down approaches validate the complete data journey from source to consumption, ensuring that the entire system functions cohesively.
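The two philosophies can be sketched on a toy pipeline. Everything here is an illustrative assumption (the `order_id` and `amount` fields, the transformation rules, the function names); the point is only where each check sits: one runs on raw input before any transformation, the other runs the whole journey and inspects the final output.

```python
def to_silver(bronze_rows):
    """Deduplicate on order_id and drop rows with no amount."""
    seen, silver = set(), []
    for row in bronze_rows:
        if row["amount"] is None or row["order_id"] in seen:
            continue
        seen.add(row["order_id"])
        silver.append(row)
    return silver


def to_gold(silver_rows):
    """Aggregate cleaned rows into a single business metric."""
    return {"total_revenue": sum(row["amount"] for row in silver_rows)}


def shift_left_check(bronze_rows):
    """Run at the source: every raw row must carry the expected fields."""
    return all("order_id" in row and "amount" in row for row in bronze_rows)


def end_to_end_check(bronze_rows, expected_total):
    """Shift Down: run source to consumption and compare with a known answer."""
    return to_gold(to_silver(bronze_rows)) == {"total_revenue": expected_total}


bronze = [
    {"order_id": 1, "amount": 10},
    {"order_id": 1, "amount": 10},    # duplicate
    {"order_id": 2, "amount": None},  # missing amount
    {"order_id": 3, "amount": 5},
]
print(shift_left_check(bronze), end_to_end_check(bronze, expected_total=15))  # True True
```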

The economic benefits of these approaches become clear when considering the cost implications of data quality issues discovered at different stages. The industry-standard 1:10:100 rule, developed by George Labovitz and Yu Sang Chang in 1992, demonstrates that the cost of preventing poor data quality at the source equals approximately one dollar per record, while remediation after creation costs ten dollars per record, and the cost of failure—doing nothing until customers discover issues—reaches one hundred dollars per record.

This cost structure makes a compelling case for comprehensive testing at the earliest possible stages. Under the rule, it is ten times cheaper to catch a problem at the source or raw layer than to fix it later in integrated data or tool-modified outputs. The financial impact extends beyond immediate remediation costs to include reputation damage, regulatory penalties, and the opportunity cost of incorrect business decisions based on faulty data.
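As a worked example of the 1:10:100 arithmetic, the sketch below applies the rule's per-record costs to a hypothetical batch of 500 bad records. The record count and the stage names used as dictionary keys are assumptions for illustration.

```python
# Per-record cost in dollars at each stage, as stated by the 1:10:100 rule.
COST_PER_RECORD = {"prevention": 1, "remediation": 10, "failure": 100}


def cost_of_bad_records(bad_records: int, stage: str) -> int:
    """Total cost of handling bad_records at the given stage."""
    return bad_records * COST_PER_RECORD[stage]


for stage in COST_PER_RECORD:
    print(stage, cost_of_bad_records(500, stage))
# prevention 500
# remediation 5000
# failure 50000
```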

Measuring Test Coverage in Medallion Architecture

Effective measurement of test coverage requires systematic approaches that ensure comprehensive validation across all layers and components of your Medallion architecture. The measurement framework operates on multiple levels, each targeting different aspects of data quality and system reliability.

At the foundational level, every table within your architecture should have dedicated tests. This includes tables in Bronze, Silver, and Gold layers, ensuring that no data repository operates without quality validation. The minimum standard calls for at least two tests per table, typically focusing on consistency checks that validate volume, freshness, and schema adherence.
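The three table-level consistency checks named here could look roughly like the following. The 20% volume tolerance, the 24-hour freshness window, and the column names are illustrative assumptions; in practice the table metadata would come from the warehouse rather than literals.

```python
from datetime import datetime, timedelta, timezone


def check_volume(row_count: int, expected: int, tolerance: float = 0.2) -> bool:
    """Pass if row_count is within +/- tolerance of the expected count."""
    return abs(row_count - expected) <= tolerance * expected


def check_freshness(last_loaded: datetime, now: datetime, max_age: timedelta) -> bool:
    """Pass if the table was loaded no longer ago than max_age."""
    return now - last_loaded <= max_age


def check_schema(actual_columns: list[str], expected_columns: list[str]) -> bool:
    """Pass if column names and their order match the expected schema."""
    return actual_columns == expected_columns


now = datetime(2024, 1, 2, 6, 0, tzinfo=timezone.utc)
last_loaded = datetime(2024, 1, 1, 23, 0, tzinfo=timezone.utc)
results = {
    "volume": check_volume(row_count=9_800, expected=10_000),
    "freshness": check_freshness(last_loaded, now, max_age=timedelta(hours=24)),
    "schema": check_schema(["id", "amount"], ["id", "amount"]),
}
print(results)  # {'volume': True, 'freshness': True, 'schema': True}
```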

Diving deeper, every column in every table requires its own testing regime. Column-level testing extends beyond simple existence checks to validate data types, value ranges, null constraints, and adherence to patterns. The recommended minimum of two tests per column ensures that both basic integrity and business-specific validation occur for each data element.
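A sketch of column-level checks for null constraints, value ranges, and patterns follows. The sample columns, the age bounds, and the deliberately loose email pattern are assumptions chosen for illustration.

```python
import re


def check_not_null(values) -> bool:
    """Pass if no value in the column is None."""
    return all(v is not None for v in values)


def check_in_range(values, low, high) -> bool:
    """Pass if every non-null value lies in [low, high]."""
    return all(low <= v <= high for v in values if v is not None)


def check_pattern(values, pattern: str) -> bool:
    """Pass if every non-null value fully matches the regular expression."""
    compiled = re.compile(pattern)
    return all(compiled.fullmatch(v) is not None for v in values if v is not None)


ages = [34, 51, None, 27]
emails = ["a@example.com", "not-an-email"]

print(check_not_null(ages))                              # False
print(check_in_range(ages, 0, 120))                      # True
print(check_pattern(emails, r"[^@\s]+@[^@\s]+\.[^@\s]+"))  # False
```

Pairing a basic integrity check (such as not-null) with a business-specific one (such as a range or pattern) is one way to reach the two-tests-per-column minimum.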

Test Coverage Rule Of Thumb: In All Levels/Zones In Your Medallion Architecture

- Every Table Should Have Tests: Minimum 2 Tests Per Table

- Every Column In Every Table Should Have Tests: Minimum 2 Tests Per Column

- Every Significant Business Metric Should Have Tests: Minimum 1 Custom Test Per Metric

Test Coverage Rule Of Thumb: Every Tool That Uses Data Should Be Checked For Errors and Timing

- Minimum 1 Check Per Tool: Logs For Errors

- Optional: Check Metrics

- Minimum 1 Check Per Tool Per Job: Task Status and Substatus Results

- Minimum 1 Check Per Tool Per Job: Timing and Duration

Business-specific metrics represent another crucial dimension of test coverage. Every significant business or domain-specific metric should have custom tests that validate not just technical correctness but business logic accuracy. These tests often require in-depth domain knowledge and represent the highest-value validations in terms of business impact.
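One common shape for a custom metric test is a reconciliation: the reported Gold value must equal the figure recomputed from Silver-level detail. The sketch below assumes a hypothetical daily revenue metric and made-up order amounts.

```python
import math


def check_metric_reconciles(gold_value: float, silver_amounts, rel_tol: float = 1e-9) -> bool:
    """Pass if the Gold metric equals the sum of its Silver-level inputs."""
    return math.isclose(gold_value, math.fsum(silver_amounts), rel_tol=rel_tol)


silver_order_amounts = [19.99, 5.01, 100.0]  # hypothetical Silver rows
print(check_metric_reconciles(125.0, silver_order_amounts))  # True
print(check_metric_reconciles(120.0, silver_order_amounts))  # False
```

The comparison uses a relative tolerance rather than `==` so that floating-point rounding does not cause false failures.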

Treat data quality tests like first-class citizens. Tests aren’t just QA; they’re living proof of what the system should do. And by ‘tests,’ we mean many tests: hundreds or thousands, covering every table.

Tool-level testing completes the coverage framework by ensuring that every tool in your data processing pipeline operates correctly. This includes checking logs for errors, monitoring performance metrics, validating task status and substatus results, and tracking timing and duration patterns. Each tool should have at least one error check, with additional monitoring for metrics, status validation, and performance tracking.
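The tool-level checks can be sketched as three small functions over a job run record. The `JobRun` fields, the `"ERROR"` log marker, and the 600-second limit are assumptions; real tools such as Airflow or dbt expose this information through their own logs and metadata, which this sketch does not attempt to reproduce.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class JobRun:
    tool: str
    job: str
    status: str
    duration_seconds: float


def log_has_errors(log_lines: Iterable[str]) -> bool:
    """True if any log line contains the (assumed) ERROR marker."""
    return any("ERROR" in line for line in log_lines)


def status_ok(run: JobRun, ok_statuses=("success",)) -> bool:
    """True if the run finished with an accepted status."""
    return run.status in ok_statuses


def duration_ok(run: JobRun, max_seconds: float) -> bool:
    """True if the run finished within the allowed time."""
    return run.duration_seconds <= max_seconds


log_lines = ["INFO job started", "ERROR connection reset", "INFO retrying"]
run = JobRun(tool="airflow", job="load_orders", status="success", duration_seconds=840.0)

print(log_has_errors(log_lines), status_ok(run), duration_ok(run, max_seconds=600))
# True True False
```

In this example the job reports success, yet the log and timing checks both flag a problem, which is why status alone is not enough.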

Practical Test Coverage Implementation

To illustrate the scale of comprehensive test coverage, consider a typical Medallion architecture implementation with three database levels (L1, L2, L3), three primary tools (StreamSets, Airflow, dbt), and six distinct jobs or workflows requiring monitoring.

In the L1 Bronze layer, assuming 100 tables with an average of 10 columns each, the minimum testing requirement includes 200 table-level tests and 2,000 column-level tests. The L2 Silver layer, with similar table and column counts, requires another 200 table tests and 2,000 column tests. The L3 Gold layer, which contains fewer tables (perhaps 10) with more columns (averaging 30), requires 20 table tests and 600 column tests, in addition to domain-specific business metric tests.

Tool-level monitoring adds another layer of validation, with three tools requiring error checking and six jobs needing 12 separate checks for various problems, including status validation and performance monitoring. This example demonstrates how comprehensive test coverage quickly scales to thousands of individual tests across a moderately complex Medallion implementation.

The Medallion architecture in this example has three database levels (L1, L2, L3), three tools (StreamSets, Airflow, dbt), and six jobs/workflows to monitor:

- Bronze/L1: 100 tables × 10 columns: minimum 200 table tests and 2,000 column tests

- Silver/L2: 100 tables × 10 columns: minimum 200 table tests and 2,000 column tests

- Gold/L3: 10 tables × 30 columns: minimum 20 table tests and 600 column tests, plus a handful of domain-specific business metric tests

- 3 tools: 3 checks for errors

- 6 jobs: 12 checks for problems
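The table and column counts in this example follow directly from the two-tests-per-table and two-tests-per-column minimums. A short sketch of the arithmetic, with the `Layer` class as an illustrative helper:

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    tables: int
    columns_per_table: int


def minimum_tests(layer: Layer, per_table: int = 2, per_column: int = 2):
    """Return (table_tests, column_tests) under the rule-of-thumb minimums."""
    table_tests = layer.tables * per_table
    column_tests = layer.tables * layer.columns_per_table * per_column
    return table_tests, column_tests


layers = [
    Layer("Bronze/L1", tables=100, columns_per_table=10),
    Layer("Silver/L2", tables=100, columns_per_table=10),
    Layer("Gold/L3", tables=10, columns_per_table=30),
]

for layer in layers:
    print(layer.name, minimum_tests(layer))

total = sum(sum(minimum_tests(layer)) for layer in layers)
print(total)  # 5020
```

The total covers table and column tests only; business metric tests and the tool and job checks come on top of it.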

Creating test suites this large by hand does not scale to enterprise requirements. Writing the 5,020 table and column tests from this example manually, at 30 minutes per test, would require 2,510 hours of work, or roughly 14 months of full-time effort with no time for meetings, breaks, or vacations. Automated test generation tools, such as DataOps TestGen, can achieve the same result in minutes, making comprehensive coverage practically achievable.
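The effort estimate can be checked with a few lines of arithmetic. The 40-hour work week and the 52-weeks-per-year conversion are assumptions added here; the article states only the test count, the minutes per test, and the result.

```python
TESTS = 5_020
MINUTES_PER_TEST = 30
HOURS_PER_WEEK = 40        # assumption: standard full-time week
WEEKS_PER_MONTH = 52 / 12  # assumption: average weeks in a month

hours = TESTS * MINUTES_PER_TEST / 60
weeks = hours / HOURS_PER_WEEK
months = weeks / WEEKS_PER_MONTH

print(hours)             # 2510.0
print(round(months, 1))  # 14.5
```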

Manually Writing Data Quality Tests Is Time-Consuming

The economic argument for comprehensive test coverage becomes compelling when considering the actual cost of data quality issues. Every problem discovered earlier in the development and processing pipeline costs significantly less to resolve than issues found in production or by customers. Tests represent gifts that data engineers give to their future selves, providing early warning systems that prevent costly downstream problems.

Ensuring coverage for every table and tool creates a comprehensive safety net that protects both data quality and organizational resources. However, manually writing (or configuring) those tests is time-consuming. In the example above, it could take months to code all the necessary tests by hand.

Open-source tools for automated test generation make comprehensive coverage economically feasible, even for resource-constrained teams. Rather than requiring massive manual investment in test creation, automated tools enable junior operators to generate thousands of tests with minimal effort, democratizing access to enterprise-grade data quality assurance. This is why we created open-source DataOps Data Quality TestGen.
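To show why generation scales where hand-writing does not, here is a toy illustration of schema-driven test generation. It is not how DataOps TestGen works internally; the schema dictionary, the test names, and the `generate_tests` function are all hypothetical.

```python
def generate_tests(schema: dict[str, dict[str, str]]) -> list[dict]:
    """Emit two test specs per table and two per column from a schema mapping."""
    tests = []
    for table, columns in schema.items():
        tests.append({"table": table, "test": "row_count_in_expected_range"})
        tests.append({"table": table, "test": "schema_matches_baseline"})
        for column, dtype in columns.items():
            tests.append({"table": table, "column": column, "test": "null_rate_within_baseline"})
            tests.append({"table": table, "column": column, "test": f"values_are_{dtype}"})
    return tests


# Hypothetical schema: table name -> {column name -> data type}
schema = {"orders": {"order_id": "integer", "amount": "decimal", "placed_at": "timestamp"}}

generated = generate_tests(schema)
print(len(generated))  # 8
```

A loop like this costs the same to run over one table or one thousand, which is the property that makes full coverage affordable.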

Test Coverage + Data Journeys Provide Precision

Understanding the distinction between data lineage and data journeys is crucial for implementing adequate test coverage. Data lineage provides valuable insights into where problems might spread throughout your system, much like a building blueprint shows potential paths for fire spread. However, data journeys with comprehensive test coverage reveal where problems are actually occurring, much like a fire alarm control panel indicating active emergencies.

Data lineage represents educated guesses about impact, showing dependencies and relationships between data elements. While valuable for understanding system architecture, lineage alone cannot definitively identify which components are experiencing actual problems. Complete test coverage, as displayed through data journeys, provides clarity on the real-time health of the system and the status of data quality.

The practical difference becomes apparent during incident response. Data lineage might indicate that an upstream issue could impact five tables, but comprehensive test coverage reveals that only one table actually contains errors. This precision enables faster resolution and prevents unnecessary investigation of unaffected components.
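The incident-response scenario can be sketched by combining a lineage graph with test results. The table names, the graph, and the set of failing tables are made up for illustration: lineage yields the five tables that could be affected, and test results narrow that down to the one that is.

```python
# Hypothetical lineage: table -> tables that read from it directly.
lineage = {
    "orders_raw": ["orders_clean", "customers_clean"],
    "orders_clean": ["daily_revenue", "order_funnel"],
    "customers_clean": ["customer_360"],
}


def downstream(table: str, graph: dict[str, list[str]]) -> set[str]:
    """All tables reachable from `table` by following lineage edges."""
    seen, stack = set(), [table]
    while stack:
        for child in graph.get(stack.pop(), []):
            if child not in seen:
                seen.add(child)
                stack.append(child)
    return seen


possibly_impacted = downstream("orders_raw", lineage)  # what lineage suggests
tables_with_failing_tests = {"daily_revenue"}          # what test results show
actually_impacted = possibly_impacted & tables_with_failing_tests

print(len(possibly_impacted), sorted(actually_impacted))  # 5 ['daily_revenue']
```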

Conclusion and Future Considerations

Comprehensive test coverage in Medallion data architecture represents more than just technical best practice—it embodies a commitment to data reliability that enables organizational trust and effective decision-making. Having customers uncover data errors creates embarrassment and potential damage that rigorous quality testing can prevent.

Quality testing at every stage of the Medallion architecture—Bronze, Silver, and Gold—catches issues early while minimizing the risk of data inconsistencies surfacing in customer-facing insights. Prioritizing thorough automated testing and continuous monitoring enables data teams to uphold data integrity and foster trust across the organization.

The future of data engineering depends on treating data quality, at every level, with the same rigor applied to software quality.

Automatically testing both the data and the tools that act upon data creates comprehensive protection against quality degradation. Since nobody has time to write the thousands of tests required for comprehensive coverage manually, open-source enablement becomes crucial for the widespread adoption of best practices.

Test coverage and quality dashboards foster organizational best practices by providing visibility into data health and enabling proactive quality management. As Medallion architectures continue to gain popularity in data lakehouse implementations, teams that master comprehensive test coverage will deliver more reliable data products and maintain competitive advantages through superior data quality assurance.

The investment in comprehensive test coverage pays dividends through reduced firefighting, improved stakeholder confidence, and more reliable, data-driven decision-making. For data engineering teams serious about delivering production-grade data products, implementing systematic test coverage across their Medallion architecture represents not only a technical improvement but a fundamental shift toward sustainable and trustworthy data operations.