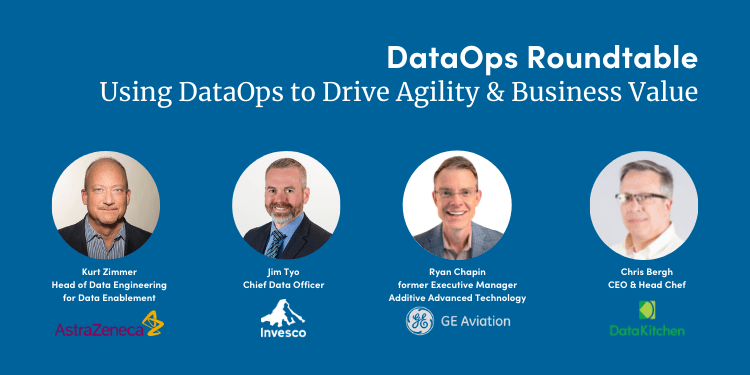

In May 2021 at the CDO & Data Leaders Global Summit, DataKitchen sat down with the following data leaders to learn how to use DataOps to drive agility and business value.

- Kurt Zimmer, Head of Data Engineering for Data Enablement at AstraZeneca

- Ryan Chapin, Former Executive Manager, Advanced Additive Design, Chief Product and Portfolio Manager, GE Aviation

- Jim Tyo, Chief Data Officer, Invesco

- Chris Bergh, CEO & Head Chef, DataKitchen

You can listen to the entire discussion here.

DataOps is a Key Enabler of Business Agility

DataOps can mean different things to different organizations. Each panelist described how DataOps is defined and used at their organization.

At AstraZeneca, Kurt Zimmer explained that data “provides a massive opportunity to drive all sorts of levers, such as to lower cost and to drive things like speed of execution, which has a tremendous impact on the ability to bring life-saving medicines to the marketplace.”

Kurt continued, “I see DataOps as an absolutely critical piece of our operation. Here’s a quick example. If you were buying a piece of furniture via any channel, you expect that piece of furniture to show up at your house in really good shape. You expect it to work. You expect it not to be marred, etc. If you think about the supply chain for that or any other thing, like a car, it starts with raw materials and at any point in the cycle of moving raw materials through the chain to your home, a lot of things can happen. Quality and speed and automation, in many cases, are point solutions or point capabilities along that chain.

“Ultimately you as a consumer only care about one thing. You want a quality piece of furniture to show up in your home, the first time, and only once. That doesn’t happen that often in terms of data. Data takes a long journey. It’s often wrong. It takes a circuitous route to our end users. That’s unacceptable so when we think about moving from simple automation to large-scale process improvement across the entire ecosystem, things like DataOps, in particular, can bring absolutely significant and game-changing effects to that chain.”

Jim Tyo added that in the financial services world, agility is critical. “Previously we would have a very laborious data warehouse or data mart initiative and it may take a very long time and have a large price tag. That’s no longer the way that we can operate because that is not going to move at the speed of business anymore. Agility is absolutely the cornerstone of what DataOps presents in the build and in the run aspects of our data products.”

He added, “Most organizations are well-versed in software and application development. There are a lot of great principles and practices around DevOps, DevSecOps, and Agile disciplines. Using Kurt’s analogy, those processes and practices are really meant to build an application, so the piece of furniture is an application or software, whereas data becomes a component of that, a leg or a bolt, or something that’s within that software application. DataOps is a complementary process. The first-class citizen is data and the product that you’re manufacturing is a data solution. And that I think is the fundamental change.”

Ryan Chapin explained that at GE Aviation the main products such as jet engines generated tons and tons of data. GE’s goal was to leverage this data to drive faster turnaround times and quicker speed to market. “GE followed agile processes and saw tremendous value in co-location. We really believed in having both the data scientists, as well as the engineers who are responsible for complex design all in the same place. And ultimately we formed something we called the Digital League. The Digital League was meant to drive a very clear focus on the business and customer outcomes that are most essential for us to deliver. We took over an entire building where we co-located all the resources to ensure very high speeds, and high effectiveness of the team.” GE also worked hard to democratize data. “We had around 10,000 plus design engineers for our jet engine products. We needed to get the data from a centralized place into their hands so that they could get in the game of digital transformation.”

Chris Bergh came to DataOps because his life in data and analytics “sucked.” “People would call me up when things were late, they would yell at me when things were wrong, and even when we did a good job there were 5, 10, or 15 follow-up questions that would take months to answer.”

“So I became focused on how to get data and analytic teams to be successful. How do you make it so that they can get the ideas from their heads into production quicker? How do you create a process like a factory that produces Toyotas and not AMC Pacers? How can you get these teams who work all over the company to collaborate better? Those are the problems that DataOps solves.”

Identify and Evangelize the Opportunity First

The need for DataOps at each organization was clear after considering the complexity of the organization and past failures. Helping others to understand the opportunity is a critical first step.

Zimmer explained that unfortunately until you get underneath the surface, most organizations don’t always understand the type of challenges they have. “At AstraZeneca, we found that we have incredibly complex pipelines that service our global businesses, and when you start looking underneath those pipelines and start seeing how many places that things can go wrong and do go wrong, you start to get a better feel for the opportunity and thus the reason to bring in tooling that allows you to look across people, process and technology. It is without a doubt, challenging until you start to see those results. Now we are demonstrating much faster cycle time and quality, frankly by doing things like benchmarking. That’s when organizations start to get a quick and powerful feel for what the opportunity is. We’re early on but have had some good early successes.”

Tyo elaborated, “A lot of awareness for the need, unfortunately, comes from failures of the past. So there’s been a lot of investment made and massive data initiatives that had a lot of promises and in many circumstances over-promised and under-delivered. I would argue that there’s a lot of frustration in financial services from business partners about the investment that they’ve made in data programs, and really haven’t seen or been able to quantify the true return on investment. There is a necessity for a much more agile framework to start to show incremental value. And that’s where DataOps comes in because, at its core, it is about an incremental and step-based approach where you can start to show the true benefits and earn the right to have more of those deeper conversations with your business partners.”

Chapin suggested using real-life examples and stressing the importance of good-quality data, so that people can see the outcomes and are more likely to jump on board. “There’s a little bit of a culture change upfront to help people realize that maybe 80% of what they want is better than 0%.”

Chris Bergh emphasized that to get started organizations must “think about the solution from a people and process perspective. Don’t just run out and just buy a fancy new tool or hire that genius person who’s going to do everything.”

Success Requires Focus on Business Outcomes, Benchmarking

Chapin shared that even though GE had embraced agile practices since 2013, the company still struggled with massive amounts of legacy systems. GE formed its Digital League to create a data culture. “One of the keys for our success was really focusing that effort on what our key business initiatives were and what sorts of metrics mattered most to our customers. Given that our data sources were often spotty at best, we had a concerted effort upfront to focus on the most important data sources and to rank those and determine which 10 are the absolute most essential for our success and for our customer success. Let’s go aggressively after those instead of trying to swallow 1000 data sources. So we really prioritized the data that we thought had the biggest chance of delivering success in the end. And I think that really paid off for us. It allowed teams to really get to the heart of the problems much quicker.”

Chapin also mentioned that measuring cycle time and benchmarking metrics upfront was absolutely critical. “It is sad to admit this but some of our more sophisticated models, early on took somewhere from 14 to 16 months on average, and we were like, what the heck, this takes way, way, way too long for us to keep up with the demands of our customer. We actually broke down that process and began to understand that the data cleansing and gathering upfront often contributed several months of cycle time to the process. So we attacked that through our Digital League and were able to shorten it down to one month from the 14 months.”

Tyo agreed that it is critical to get clear alignment on business outcomes first. “Before we jump into a methodology or even a data strategy-based approach, what are we trying to accomplish? We went back to the basics and agreed on the business outcomes that we’re trying to drive. Fortunately, this starts to uncover the obstacles that we’ve had in the past. This led us to a methodology that will help us get there faster and an incremental approach with less of a large upfront investment. That creates that connectivity of quantifiable benefits to business outcomes.”

Zimmer added, “When we saw the amount of energy and how many people it takes to create data to drive a data scientist and the like, it was absurdly inverted towards just so much horsepower to create something for somebody somewhere. That begat the questions: What do we do about it? How do we decrease the time to make a decision?”

“Two years ago DataOps was a brand new emerging topic and people really didn’t understand what it was but once we started to peel the onion we realized that it suddenly gave us the ability to look at a large complex system and incrementalize our attack on it. You don’t have to attack the whole thing, you attack pieces of it, benchmark elements, and begin to turn knobs. This gives you very distinctive, very discrete things that you can attack such as quality, speed, reduction in people, the time it takes to deliver, and cross-functional effectiveness. We saw other metrics from some of our peers that were absolutely staggering in terms of the amount of cost they took out to deliver data in a holistic environment. Some of the numbers are pretty astounding.”

“It struck me that DataOps has the potential to be a transformative capability, and not just from a technology perspective, but from the lens it brings to how we approach these complex data environments. It takes them out of the craft world of people talking to people and praying, to one where there’s constant monitoring, constant measuring against baseline. [It provides the ability] to incrementally and constantly improve the system.”

DataOps Enables Your Data Mesh or Data Fabric

The panelists agreed that DataOps is a critical component of a Data Mesh or Data Fabric architecture. Tyo commented that companies have complicated legacy platforms and it is difficult to virtualize and create a framework that can connect across those very complex infrastructures. The Data Fabric can play an important role to help create the connective tissue between components to create a unified user experience. However, “the DataOps framework is not just an ancillary methodology, it’s actually a core component of that mesh or fabric.” Zimmer agreed. “If you don’t have DataOps in your toolkit, you ain’t going to be data meshing anything.”

Bergh added, “DataOps is part of the data fabric. You should use DataOps principles to build and iterate and continuously improve your Data Fabric. Whether it’s streaming, batch, virtualized or not, using active metadata, or just plain old regular coding, it provides a good way for the data and analytics team to add continuous value to the organization.”

Education is the Biggest Challenge

Tyo commented that the primary challenge for anything that is not readily understood and hasn’t been around for decades is “education, socialization and finding the first place to apply it. Most companies have legacy models in software development that are well-oiled machines. Introducing that parallel manufacturing path for data as a first-class citizen, for data as a product or data as a solution, is where the challenge comes in getting started.”

Zimmer wholeheartedly agreed. The education component is “monstrous. We ran into a lot of ‘not invented here’ when we talked to internal teams and tried to measure their maturity relative to DataOps. Their definition of DataOps was that we do some automation and check a record count. It was all about technical automation.

“We had to go find someone who’s willing to open their mind for five minutes to an alternative reality. And once we cracked the code on that alternative reality and they saw that we weren’t just talking about running a test but continuous testing every step or instantiating a transit environment to recreate a test environment in seconds rather than days. Initially, they didn’t understand the definition, let alone understand the potential. Half of this journey is getting people to understand the vernacular because it’s outside their purview. They think that they are doing DataOps if they automate one little thing using a piece of Python code, but we’re lightyears ahead of that, and it’s really hard to wrap your head around it.”
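The difference Zimmer draws between "running a test" and "continuous testing every step" can be illustrated with a minimal Python sketch. This is not a description of AstraZeneca's tooling; the step names, checks, and the `run_pipeline` helper are all hypothetical and exist only to show checks attached to every stage rather than a single record count at the end.

```python
from typing import Callable

Row = dict
Step = Callable[[list[Row]], list[Row]]
Check = Callable[[list[Row]], bool]


def run_pipeline(rows: list[Row], steps: list[tuple[str, Step, list[Check]]]) -> list[Row]:
    """Run each step in order and test its output before moving on."""
    for name, step, checks in steps:
        rows = step(rows)
        for check in checks:
            if not check(rows):
                # Fail fast so bad data never reaches the next step.
                raise ValueError(f"check {check.__name__!r} failed after step {name!r}")
    return rows


# Hypothetical transformation steps.
def drop_missing_amounts(rows: list[Row]) -> list[Row]:
    return [r for r in rows if r.get("amount") is not None]


def add_amount_cents(rows: list[Row]) -> list[Row]:
    return [{**r, "amount_cents": round(r["amount"] * 100)} for r in rows]


# Hypothetical data checks.
def not_empty(rows: list[Row]) -> bool:
    return len(rows) > 0


def amounts_non_negative(rows: list[Row]) -> bool:
    return all(r["amount"] >= 0 for r in rows)


def cents_present(rows: list[Row]) -> bool:
    return all("amount_cents" in r for r in rows)


STEPS = [
    ("drop_missing_amounts", drop_missing_amounts, [not_empty, amounts_non_negative]),
    ("add_amount_cents", add_amount_cents, [not_empty, cents_present]),
]
```

Calling `run_pipeline(raw_rows, STEPS)` either returns the transformed rows or raises at the first step whose output fails a check, which is what makes the failure visible where it happens instead of at the end user.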

To bring doubters along, Zimmer found people “who weren’t doubters and gave them proof points by accelerating what they did. And it’s amazing what happens when the business who you’ve helped on this journey goes on the corporate website and declares the massive change in productivity and reduction of cost, without you having to say a word. That’s plenty.”

Chapin agreed that at GE it certainly required a change in the culture. He added that at GE in particular people get tired of the initiative of the day and also have a “not invented here” mindset so it was crucial to show some actual evidence of how this new methodology and process will actually work to help deliver results. “When you show the vision and how it is going to help their analytics go from 14 months to a day, that’s when you get the most buy-in and people jumping on board saying ‘sign me up.’”

Bergh advised leaders to “Listen to your change agents. Years ago I was running software development teams and our CTO had heard about this thing called Agile and I thought ‘why in the world would we want to ship software faster than four months? That makes no sense whatsoever. It’s going to be a problem. It’s going to make me look bad.’ But I listened to him, and you know what? It is better to ship software quickly because you can learn more. And so I think we’re in the same position, where leaders are saying, ‘man, this is just going to create headaches. If I go fast I’m going to break things. If I don’t have a lot of meetings, I’m going to lose control.’ And I think you’ve got to listen to your change agents and try it out and you’re going to find that this way is better. It’s not an overnight path. You’re not going to flick a switch, but the wave is coming. Agility as a concept in business is really powerful and certainly deserves a place in every data and analytics team.”

DataOps Maximizes Your ROI

The panelists provided great advice for maximizing ROI with DataOps.

- Design for measurability. According to Zimmer, it’s hard to maximize ROI when you can’t measure it. “One of the key things that caught my attention with DataOps was its ability to put a baseline metrics lens over top of the data ecosystem. And by doing that you can very simply draw attention to areas and provide data, KPIs, and metrics that people will actually believe. It’s not you making stuff up anymore. It’s definitive and that changes the game especially with respect to senior leadership, where they don’t take lightly to people coming up with stupid ROI metrics. They want to see the real deal.”

- Clearly define the problems and expected outcomes. Chapin said, “So often people come up with a solution to what they perceive as the customer’s biggest problem without first validating that with a customer.”

- Prioritize your data sources. “Instead of going after 1000s of different sets of data and trying to weave them all together, decide on those that are top 10,” Chapin explained. “We came up with a ‘most valuable data set tool’ that allowed us really to get a clear connection to the outcomes that we’re shooting for and expert opinion on whether or not that data source would help us solve that problem.”

- Automate the data collection and cleansing process. At GE they “used tools like statistical process control to monitor the flow of data and make sure that we were getting quality data in and flagging folks when the data quality started to suffer or drift.”

- Take a show-me approach. Chapin advised teams to find the “toughest most unsolvable problem and apply the methodology. We won skeptics over by proving to them that the unsolvable could be solved.”

- Be business-centric. Tyo pointed out, “Don’t do data for data’s sake. Data with context in the hands of the business at the right time, in the right place, that’s where we generate value. There is no data strategy, it’s only a business strategy.”

- Be the provider of choice. Tyo added, “Even if you are internal to the organization, compete every day. If your business chooses an outside vendor for data work, you are doing something wrong and have to change your approach.”
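Chapin's point about using statistical process control to flag data drift can be sketched in a few lines of Python. This is only an illustration of the general idea, not GE's implementation: it assumes a single metric (for example, daily row counts for one data source), a baseline window that is known to be healthy, and classic three-sigma control limits. The function names `control_limits` and `out_of_control` are hypothetical.

```python
from statistics import mean, stdev


def control_limits(baseline: list[float], sigmas: float = 3.0) -> tuple[float, float]:
    """Compute lower and upper control limits from a healthy baseline window."""
    center = mean(baseline)
    spread = stdev(baseline)  # sample standard deviation
    return center - sigmas * spread, center + sigmas * spread


def out_of_control(values: list[float], limits: tuple[float, float]) -> list[int]:
    """Return the positions of values that fall outside the control limits."""
    lower, upper = limits
    return [i for i, v in enumerate(values) if v < lower or v > upper]
```

In practice the baseline would be refreshed periodically, and a flagged position would trigger an alert to the data owner rather than silently passing the data downstream.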

Bergh summarized the advice well. “If you focus on value for your customer and being a servant to them, then psychologically, your life is a lot better as an individual contributor. Work is more joyful, you get more satisfaction, and you’re no longer on these six-month journeys to nowhere. It can be harder because sometimes your customer says, ‘that’s not quite right.’

“[With DataOps] you’re going to be more valuable to your company, you’re going to get praise, and instead of having people laugh at you or roll their eyes when you walk into the room, you will be making your customer successful.”

For more insights, you can listen to the entire conversation here.