TL;DR: Functional, Idempotent, Tested, Two-stage (FITT) data architecture has saved our sanity—no more 3 AM pipeline debugging sessions.

Picture this: It’s 2:47 AM, your Slack is buzzing with alerts, and the CFO’s quarterly report is broken because somewhere in your seven-layer medallion architecture, the bronze data doesn’t match the gold data. Sound familiar? We lived this nightmare for years until we discovered something that changed everything about how we approach data engineering.

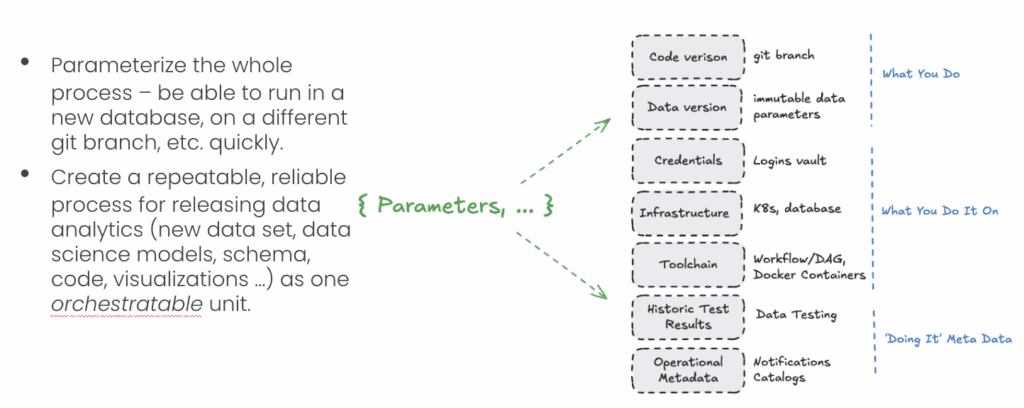

We wanted to make our lives easier, simpler, and less complicated. The cloud has made it incredibly affordable to have copies of systems, tools, pipelines, and even data. We craved a single source of truth through Git and grew tired of managing inconsistent data across environments. Most importantly, we wanted zero meaningful difference between development, production, and canary environments. The goal was simple: complete a rebuild, push a minor update, add a new dataset, or recreate last month’s results without breaking a sweat.

Data architecture should focus on maximizing data engineer productivity and simplicity while reducing complexity.

The alternative—maintaining three to five copies of data in every environment and spending entire weekends debugging why Level 1 data differs from Level 3 data—is unsustainable. If explaining a minor fix to a junior data engineer requires a PhD in your data architecture, then your architecture has become the problem.

We’ve been applying these principles in our data engineering consulting for many years, and it’s time we gave them a proper name and explained why they’ve been transformative for every team we’ve worked with. Yes, I know—another acronym in a field already drowning in them. But this isn’t just buzzword engineering; this represents how we learned to stop breaking things constantly and started working faster with less risk, fear, and late-night heroics.

What is FITT Data Architecture?

FITT represents four principles we follow religiously, born from countless production incidents and hard-learned lessons about what works in real-world data systems.

Functional – Everything is Pure Functions

No more mystery dependencies or hidden state lurking in your transformations. For every version of code, each transformation takes clearly defined inputs and produces predictable outputs, period. Same input equals same output, every single time, regardless of when or where you run it.

We have decided to treat all raw data as immutable by default. Yes, storage and compute have costs, but debugging at 2 AM is infinitely more expensive. The math is simple: data engineering time is worth more than compute costs, which are worth more than storage costs. When you embrace this hierarchy, many architectural decisions become obvious.

For every version of code, each transformation takes input and produces output, period.

This functional approach eliminates an entire class of bugs that plague traditional data systems. No more wondering if yesterday’s transformation had any impact on today’s results. No more state-dependent edge cases that only surface in production under specific conditions. Each transformation becomes a mathematical function that you can reason about, test, and trust.

Consider a typical calculation of customer lifetime value. In a traditional system, this might depend on cached aggregates or previous run results. In a functional system, the calculation receives raw transaction data and customer attributes as input and produces CLV metrics as output. Run it in January with December’s data, and you’ll get identical results to running it in December—guaranteed.
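To make the CLV example concrete, here is a minimal sketch of what "functional" could look like in plain Python. The `Transaction` type, the `customer_lifetime_value` function, and the definition of CLV as total spend up to a cutoff date are all simplifying assumptions for illustration, not a recommended CLV model. The point is that the cutoff date is passed in as an argument instead of being read from the system clock, so the result does not depend on when the code runs.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Transaction:
    customer_id: str
    amount: float
    day: date


def customer_lifetime_value(transactions, as_of):
    """Pure function: the output depends only on the arguments.

    CLV is simplified here to total spend up to `as_of` (an assumption).
    No clock reads, no cached aggregates, no mutation of the inputs.
    """
    totals = {}
    for tx in transactions:
        if tx.day <= as_of:
            totals[tx.customer_id] = totals.get(tx.customer_id, 0.0) + tx.amount
    return {cid: round(total, 2) for cid, total in sorted(totals.items())}


# Immutable raw data (hypothetical sample rows).
RAW = (
    Transaction("c1", 20.0, date(2024, 12, 1)),
    Transaction("c1", 5.5, date(2024, 12, 15)),
    Transaction("c2", 10.0, date(2024, 12, 20)),
    Transaction("c2", 99.0, date(2025, 1, 3)),
)

# "Run it in January with December's data": same arguments, same answer.
december = customer_lifetime_value(RAW, as_of=date(2024, 12, 31))
rerun = customer_lifetime_value(RAW, as_of=date(2024, 12, 31))
```

Had the function called `date.today()` internally, the January re-run would have silently included the January transaction and the two results would differ.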

Idempotent – Run it 1000 Times, Get the Same Result

This principle fundamentally changed how our teams approach data engineering. When everything is idempotent, recovery becomes trivial and stress levels plummet. Pipeline broke due to a schema change? Simply fix the transformation and re-run it. Getting weird results that don’t match expectations? Re-run the pipeline with debugging enabled. Want to test a change safely? Re-run it on yesterday’s data and compare outputs.

The psychological impact of this approach cannot be overstated. Our junior developers are now free to experiment rather than living in constant fear of breaking production systems. They’ve learned that curiosity and iteration are safe when the underlying system is designed to handle repetition gracefully.

This one changed our lives. When everything is idempotent, recovery becomes trivial. Pipeline broke? Just re-run it. Weird results? Re-run it. Want to test a change? Re-run it on yesterday’s data. Need to backfill from 80% done? Just run the substeps. The psychological impact is enormous.

Idempotency proves crucial for several scenarios that every data team faces regularly. During recovery situations, when something inevitably breaks, you can simply modify the code or parameters and re-run the affected portions rather than spending hours debugging. For consistency, development and production environments behave identically because they’re running the same idempotent processes. Most importantly, engineers gain confidence to experiment and iterate without the paralyzing fear of corrupting critical business data.

This property extends beyond individual transformations to entire pipeline orchestration. Need to backfill three months of data after fixing a bug? The process is identical whether you’re filling one day or one hundred days. Each day’s processing is self-contained and doesn’t depend on the success or failure of adjacent days.
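One common way to get this behavior is to have each run overwrite a whole partition instead of appending to it. The sketch below assumes a hypothetical layout of one JSON file per day and made-up function names (`process_day`, `backfill`); a real system would more likely write Parquet files or warehouse partitions, but the idea is the same.

```python
import json
import tempfile
from datetime import date, timedelta
from pathlib import Path


def process_day(raw_rows, day, out_dir):
    """Rebuild one day's partition from raw rows, replacing any prior output."""
    rows = [r for r in raw_rows if r["day"] == day.isoformat()]
    total = sum(r["amount"] for r in rows)
    target = out_dir / f"day={day.isoformat()}.json"
    tmp = target.with_suffix(".tmp")
    tmp.write_text(json.dumps({"day": day.isoformat(), "total": total}, sort_keys=True))
    tmp.replace(target)  # overwrite the partition; never append to it
    return target


def backfill(raw_rows, start, end, out_dir):
    """Same code path for one day or one hundred; days are independent."""
    day = start
    while day <= end:
        process_day(raw_rows, day, out_dir)
        day += timedelta(days=1)


# Hypothetical raw rows.
RAW_ROWS = [
    {"day": "2024-03-01", "amount": 10},
    {"day": "2024-03-01", "amount": 5},
    {"day": "2024-03-02", "amount": 7},
]

out_dir = Path(tempfile.mkdtemp())
backfill(RAW_ROWS, date(2024, 3, 1), date(2024, 3, 2), out_dir)
first = {p.name: p.read_text() for p in sorted(out_dir.iterdir())}

# Running the backfill again leaves the output byte-for-byte identical.
backfill(RAW_ROWS, date(2024, 3, 1), date(2024, 3, 2), out_dir)
second = {p.name: p.read_text() for p in sorted(out_dir.iterdir())}
```

Writing to a temporary file and then replacing the target means a run that dies halfway does not leave a half-written partition behind; the next run simply replaces it.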

The idempotent approach naturally encourages a “build a little, test a little, learn a lot” development rhythm. Consider implementing a complex customer segmentation model that involves multiple data sources, feature engineering, and machine learning predictions. Rather than building the entire pipeline and hoping it works, you can break it into small, idempotent chunks: first, transform raw customer data into clean features and test against known customers; then add transaction aggregations and verify the math with sample accounts; finally, integrate the ML predictions and validate outputs against business expectations. Each step can be developed, tested, and refined independently using the same historical data. When a transformation doesn’t produce expected results, you haven’t lost hours of downstream processing—you simply adjust the logic and re-run that specific chunk. This granular approach significantly accelerates development cycles, as engineers can iterate on individual components without having to rebuild entire pipelines.

Tested – Automated Tests Everywhere, Hope is Not a Strategy

We treat tests as first-class citizens in our data architecture, not afterthoughts or nice-to-haves. Every pipeline includes comprehensive data quality tests covering schema validation, null checks, and range verification. Business logic tests ensure transformation correctness by validating that known inputs produce expected outputs. Pipeline behavior tests verify that the system handles edge cases, failures, and recovery scenarios gracefully. SLA checks monitor performance and alert when processing times exceed acceptable thresholds.
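As a rough illustration of the first category, the sketch below hand-rolls the three data quality checks named above (schema, nulls, ranges) in plain Python. The table, column names, and thresholds are hypothetical; in practice you would likely generate or declare such checks with a data testing tool instead of writing each one by hand.

```python
# Hypothetical schema for an "orders" table: column name -> expected type.
ORDERS_SCHEMA = {"order_id": int, "amount": float}


def check_schema(rows, expected):
    """Return a message for every row whose columns or types deviate."""
    errors = []
    for i, row in enumerate(rows):
        if set(row) != set(expected):
            errors.append(f"row {i}: columns {sorted(row)} != {sorted(expected)}")
            continue
        for col, typ in expected.items():
            # Nulls are reported by check_not_null, not here.
            if row[col] is not None and not isinstance(row[col], typ):
                errors.append(f"row {i}: {col} is not {typ.__name__}")
    return errors


def check_not_null(rows, column):
    return [f"row {i}: {column} is null"
            for i, r in enumerate(rows) if r.get(column) is None]


def check_range(rows, column, low, high):
    return [f"row {i}: {column}={r[column]} outside [{low}, {high}]"
            for i, r in enumerate(rows)
            if r.get(column) is not None and not (low <= r[column] <= high)]


def run_checks(rows):
    """Collect every failure so one run reports all problems at once."""
    return (
        check_schema(rows, ORDERS_SCHEMA)
        + check_not_null(rows, "amount")
        + check_range(rows, "amount", 0.0, 10_000.0)
    )


good = [{"order_id": 1, "amount": 19.99}]
bad = [
    {"order_id": 2, "amount": None},    # null amount
    {"order_id": 3, "amount": -4.0},    # out of range
    {"order_id": "4", "amount": 1.0},   # wrong type for order_id
]

good_failures = run_checks(good)
bad_failures = run_checks(bad)
```

Each check returns a list of messages instead of raising on the first problem, which makes the failures readable as a report and easy to assert on in CI.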

These tests aren’t just quality assurance mechanisms; they serve as living documentation of what the system is intended to accomplish. When a new engineer joins the team, they can read the tests to understand both the expected behavior and the edge cases that the system handles. Six years ago, a VP at one of our customers boasted of having run 10,000 tests against his production data, so when someone questioned the data, he could say, “Our 10,000 tests didn’t catch it. Are you sure?”

We treat tests like first-class citizens. Tests aren’t just QA; they’re living proof of what the system should do. And by ‘tests,’ we mean a lot of tests: hundreds, even thousands, covering every table.

The key insight is that data systems fail in predictable ways, and comprehensive testing catches these failures early, when they’re cheap to fix, rather than late, when they become expensive disasters. A missing null check detected in development costs minutes to fix. The same issue discovered in production during a board presentation costs significantly more in both engineering time and business credibility.

Modern data testing frameworks enable the maintenance of thousands of tests across hundreds of tables. These tests run automatically with every deployment and provide immediate feedback when changes introduce regressions. The investment in test infrastructure pays dividends in reduced on-call burden and increased confidence in system changes.

Two-stage – Raw to Final, That’s It

This principle represents our most controversial departure from conventional wisdom, and it’s also been our most liberating change. We completely discarded our multi-stage architecture and adopted a radically simplified two-stage approach.

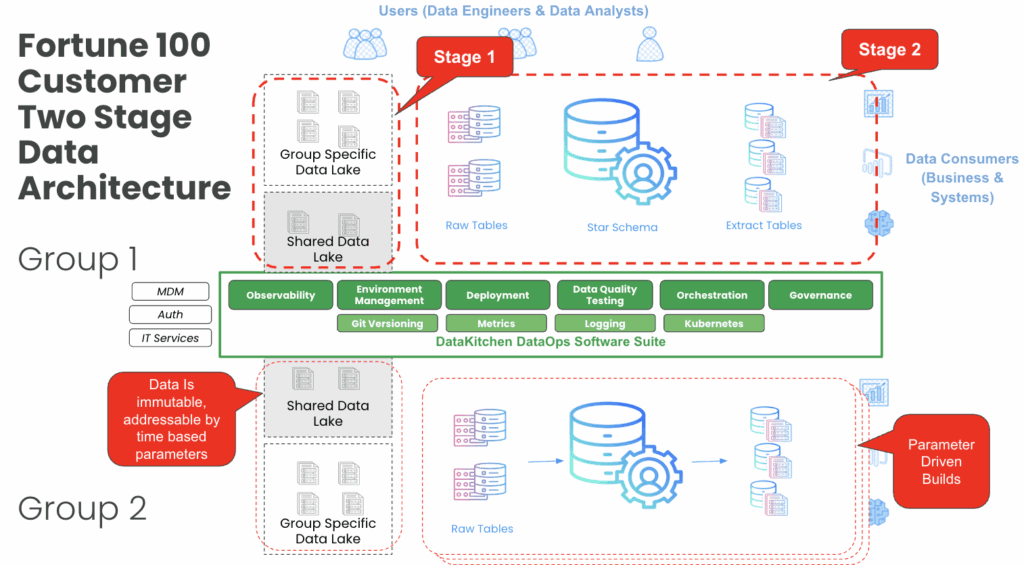

The first stage, raw data, represents exactly what we received from source systems—immutable, never modified, and always preserving the original context and timestamp of ingestion. The second stage, final data, contains information ready for consumption by downstream systems, analytics tools, and business users.

Raw: exactly what we received from source systems, immutable forever. Final: ready for consumption. Everything in between? Can be deleted.

Everything between these stages? Ephemeral, temporary, and disposable. These intermediate transformations can be deleted after processing or kept around for debugging—the choice depends on operational needs rather than architectural requirements.

“But what about bronze, silver, and gold layers?” you might ask. Through years of experience, we realized those layers often represented arbitrary complexity rather than meaningful business value. The benefits traditionally achieved through staged processing—data quality, transformation logic, and performance optimization—are now accomplished through functional composition and comprehensive testing.
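A small sketch of what "functional composition" can mean here: the work a silver layer might do becomes a chain of pure steps between raw and final, and the intermediate results exist only in memory unless you choose to keep them. The step names and sample rows are hypothetical.

```python
from functools import reduce


def drop_incomplete(rows):
    """Remove rows without an email address."""
    return [r for r in rows if r.get("email")]


def normalize_email(rows):
    """Return new rows with trimmed, lower-cased emails (inputs untouched)."""
    return [{**r, "email": r["email"].strip().lower()} for r in rows]


def deduplicate(rows):
    """Keep the first row seen for each email."""
    seen, unique = set(), []
    for r in rows:
        if r["email"] not in seen:
            seen.add(r["email"])
            unique.append(r)
    return unique


def compose(*steps):
    """Chain steps left to right into a single raw -> final function."""
    return lambda rows: reduce(lambda acc, step: step(acc), steps, rows)


build_final = compose(drop_incomplete, normalize_email, deduplicate)

# Stage 1: raw, exactly as received (hypothetical rows).
RAW = (
    {"id": 1, "email": " Ann@Example.com "},
    {"id": 2, "email": None},
    {"id": 3, "email": "ann@example.com"},
)

# Stage 2: final. Nothing in between is persisted.
final = build_final(RAW)
```

Because each step is an ordinary function, it can be tested on its own, and persisting an intermediate result for debugging is an operational choice, not a layer the architecture depends on.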

This approach creates what we refer to as a “copy-paste” data architecture. Do you want an exact copy of production for testing? Simply adjust a few configuration parameters, create a Git branch, and rebuild the environment. The raw data provides your foundation, and the functional transformations recreate everything else deterministically.

Development vs Production: A Pattern That Works

The implementation of FITT principles requires rethinking how we manage environments and deployments. Our approach employs a simple yet powerful pattern: development environments run yesterday’s data with today’s code, while production environments run today’s data with yesterday’s code.

Dev: yesterday’s data, today’s code (we can have multiple environments). Prod: today’s data, yesterday’s code (single, stable environment).

This pattern ensures we never deploy untested code to production while allowing developers to iterate safely without affecting real business data. Version control becomes the single source of truth for both code and configuration. Our Git repository essentially IS our pipeline definition.
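One way to picture this pattern is as a small, version-controlled function that decides which code and which data each environment uses. Everything in this sketch is an assumption made for illustration: the `RunConfig` fields, the `plan_run` helper, the Git ref names, and the output prefixes.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class RunConfig:
    environment: str
    code_ref: str       # Git ref to check out before running
    data_date: date     # raw partition to read
    output_prefix: str  # where this environment writes final data


def plan_run(environment, today, released_ref, candidate_ref):
    """Prod: today's data, already-released code.
    Any other environment: yesterday's data, the code under development.
    """
    if environment == "prod":
        return RunConfig("prod", released_ref, today, "final/prod")
    return RunConfig(
        environment,
        candidate_ref,
        today - timedelta(days=1),
        f"final/{environment}",
    )


today = date(2025, 6, 10)
prod = plan_run("prod", today, released_ref="v1.4.0", candidate_ref="feature/new-clv")
dev = plan_run("dev-alice", today, released_ref="v1.4.0", candidate_ref="feature/new-clv")
```

Each environment writes under its own prefix but reads the same immutable raw data, so spinning up another dev environment amounts to calling the function with a new name.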

Want to reproduce results from six months ago? Check out the commit from that date in Git and re-run the pipeline against the archived raw data. The combination of immutable raw data and version-controlled transformations makes time travel trivial. This capability proves invaluable during audit seasons, regulatory reviews, or when investigating anomalies in historical data.
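A lightweight way to make this kind of reproduction checkable is to record a small manifest with every run: which code version ran, a hash of the raw input, and a hash of the output. The manifest format, the function names, and the commit id `abc1234` below are hypothetical; the sketch only shows the idea of comparing fingerprints.

```python
import hashlib
import json


def fingerprint(rows):
    """Stable SHA-256 over a canonical JSON encoding of the rows."""
    payload = json.dumps(rows, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(payload).hexdigest()


def run_with_manifest(transform, raw_rows, code_version):
    """Run a transformation and record what went in and what came out."""
    output = transform(raw_rows)
    manifest = {
        "code_version": code_version,  # e.g. the Git commit that was checked out
        "raw_sha256": fingerprint(raw_rows),
        "output_sha256": fingerprint(output),
    }
    return output, manifest


def reproduces(transform, raw_rows, manifest):
    """True if the same raw data and code yield the recorded output."""
    return (
        fingerprint(raw_rows) == manifest["raw_sha256"]
        and fingerprint(transform(raw_rows)) == manifest["output_sha256"]
    )


def monthly_totals(rows):
    """Example transformation: total amount per month, sorted by month."""
    totals = {}
    for r in rows:
        totals[r["month"]] = totals.get(r["month"], 0) + r["amount"]
    return [{"month": m, "total": t} for m, t in sorted(totals.items())]


ARCHIVED_RAW = [
    {"month": "2024-11", "amount": 10},
    {"month": "2024-12", "amount": 7},
    {"month": "2024-11", "amount": 3},
]

output, manifest = run_with_manifest(monthly_totals, ARCHIVED_RAW, "abc1234")
same = reproduces(monthly_totals, ARCHIVED_RAW, manifest)
tampered = reproduces(monthly_totals, ARCHIVED_RAW + [{"month": "2024-12", "amount": 1}], manifest)
```

If a re-run stops reproducing, the manifest tells you whether the raw data or the output changed, which narrows the investigation considerably.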

The development pattern enables proper continuous integration for data pipelines. Each pull request can be validated against historical data, ensuring that proposed changes produce expected results across various scenarios. Code reviews become more meaningful because reviewers can test changes rather than just inspecting code.
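In practice, validating a pull request against historical data often comes down to running the released and the proposed transformation on the same raw rows and diffing the outputs. The sketch below assumes two hypothetical versions of a net-revenue calculation and a made-up `diff_outputs` helper; a reviewer would look at the diff and decide whether the changes are the intended ones.

```python
def released_transform(rows):
    """Current production logic: net = gross - discount."""
    return [{"id": r["id"], "net": r["gross"] - r["discount"]} for r in rows]


def candidate_transform(rows):
    """Proposed change in the pull request: net may never go below zero."""
    return [{"id": r["id"], "net": max(0, r["gross"] - r["discount"])} for r in rows]


def diff_outputs(baseline, candidate, key):
    """Summarize which keys were added, removed, or changed."""
    base = {r[key]: r for r in baseline}
    cand = {r[key]: r for r in candidate}
    return {
        "added": sorted(cand.keys() - base.keys()),
        "removed": sorted(base.keys() - cand.keys()),
        "changed": sorted(k for k in base.keys() & cand.keys() if base[k] != cand[k]),
    }


# Hypothetical historical raw data; the third row is the edge case.
HISTORICAL = [
    {"id": 1, "gross": 100, "discount": 10},
    {"id": 2, "gross": 50, "discount": 0},
    {"id": 3, "gross": 20, "discount": 30},
]

report = diff_outputs(
    released_transform(HISTORICAL),
    candidate_transform(HISTORICAL),
    key="id",
)
```

Here only the row with a discount larger than its gross amount changes, which is exactly what the proposed fix claims to do; any other entry in the report would be a regression worth discussing in review.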

Incremental updates still work within this framework—the crucial insight is making each increment idempotent. Whether you process today’s incremental update once or fifty times, you achieve identical results. This property eliminates an entire class of timing-related bugs that plague traditional incremental processing systems.
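The usual trick for making an increment idempotent is to merge by key instead of appending. This is a minimal in-memory sketch with hypothetical rows and a made-up `apply_increment` function; in a warehouse the equivalent would typically be a keyed merge or a partition overwrite.

```python
def apply_increment(final_rows, increment, key="id"):
    """Merge an increment into final data by key; the latest row per key wins.

    Returns a new list sorted by key and leaves both inputs untouched.
    """
    merged = {r[key]: r for r in final_rows}
    for r in increment:
        merged[r[key]] = r
    return [merged[k] for k in sorted(merged)]


final = [{"id": 1, "status": "new"}, {"id": 2, "status": "new"}]
increment = [{"id": 2, "status": "shipped"}, {"id": 3, "status": "new"}]

# Apply the increment once.
once = apply_increment(final, increment)

# Apply the same increment fifty times (for example, after repeated retries).
many = final
for _ in range(50):
    many = apply_increment(many, increment)

# For contrast: a naive append grows with every retry.
naive_after_two_runs = final + increment + increment
```

The keyed merge converges to the same three rows no matter how often it runs, while the naive append already holds six rows after two runs.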

The Awesome Results

After implementing FITT principles across dozens of client engagements, the results have been consistently remarkable. Incidents have dropped so dramatically that customers frequently comment that our systems are “too quiet”—they’ve grown accustomed to constant alerts from their previous data infrastructure.

When issues do arise, recovery follows a predictable pattern: identify the problematic component, fix the transformation logic, and re-run the affected pipeline segments. No more archaeological expeditions or complex rollback procedures that might introduce additional problems.

New team members achieve productivity within days rather than months because the system behaves predictably and follows consistent patterns. Junior engineers can confidently make changes because the comprehensive testing catches mistakes early and the idempotent nature ensures that failures don’t create lasting damage.

If you have to explain how to make a minor fix to a junior data engineer, your data architecture is the problem.

Data quality problems surface during development rather than production, thanks to comprehensive testing integrated into the development workflow. Mysterious data drift—that phenomenon where numbers slowly change over time for unknown reasons—becomes impossible because every change in output data can be traced to a specific Git commit.

Perhaps most importantly, the architecture scales with team growth. Adding new data sources, implementing additional transformations, or expanding to new use cases follows the same patterns that existing team members already understand. Knowledge transfer occurs through code and tests, rather than relying on tribal knowledge and lengthy documentation.

Addressing Common Objections

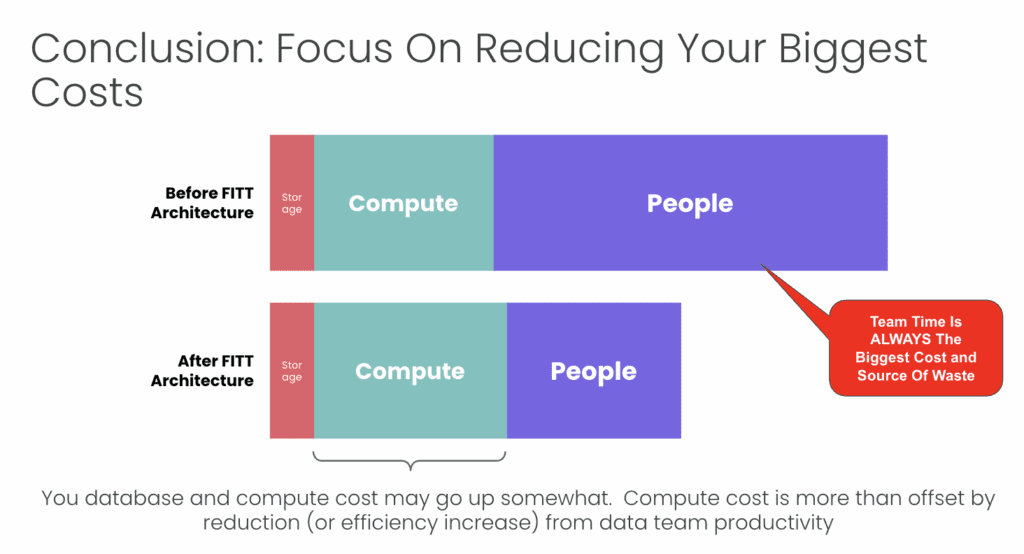

The most frequent pushback we encounter centers around cost concerns. “Storage costs will kill us!” teams often protest. However, we’re specifically talking about raw data storage, not maintaining every intermediate table indefinitely. Modern cloud storage costs have plummeted to the point where the storage expense of raw data is negligible compared to engineering time spent debugging data quality issues. FITT principles also help reduce computing costs. When pipelines are idempotent and well-tested, you eliminate the expensive computational overhead of debugging failed runs, reprocessing corrupted data, and running complex recovery procedures that often consume more resources than the original processing.

Data Engineering time >> compute costs > storage costs.

Performance concerns represent another common objection. The reality is that when engineers aren’t constantly debugging broken states and hunting down data inconsistencies, they have significantly more time to optimize the systems that impact user experience. Clean, functional architectures are inherently easier to optimize because performance bottlenecks become obvious rather than hidden behind complex interdependencies.

Getting Started with FITT Implementation

Implementing FITT doesn’t require discarding existing infrastructure or rewriting every pipeline simultaneously. The transition can happen incrementally, allowing teams to gain confidence with the approach before committing fully.

- Start by selecting one critical pipeline—ideally something that causes regular pain but isn’t so complex that failure would be catastrophic. Refactor this pipeline according to the FITT principles, treating it as a proof of concept for the broader architectural approach.

- Begin treating raw data as truly immutable, even if your current systems don’t enforce this property. This mental shift alone eliminates many classes of debugging scenarios and forces clearer thinking about transformation logic.

- Add comprehensive data tests to existing transformations before modifying them. This safety net catches regressions during refactoring and provides confidence that changes maintain expected behavior.

Start saying ‘yes’ to user requests: “I can get that done tomorrow.”

- Simplify staging by identifying and eliminating intermediate layers that don’t provide clear value. Many organizations discover that their bronze and silver layers exist primarily for historical reasons rather than current business requirements.

- Ensure that all transformation logic and data testing code are stored in version control with proper branching strategies. This step enables the development versus production patterns that make safe iteration possible.

Be Data Engineering Productivity-Centric, Not Data Architecture-Centric

Traditional data architectures focus on organizing data—determining where information lives, how it’s structured, and how it flows between systems. FITT represents a philosophical shift toward centering architecture around team processes and productivity. This isn’t merely academic; it’s a practical response to the reality that data systems fail not because of storage limitations, but because of unreliable, untestable, and irreproducible transformations.

The human element represents the largest expense on most data teams. Optimizing for engineer productivity and satisfaction creates a virtuous cycle where better systems attract top talent, which in turn builds even better systems.

Medallion data architectures are designed to maximize data vendor revenues, not data team productivity.

The core insight driving FITT is elegantly simple: data represents the byproduct of functions, not the primary design consideration. When we design around processes and transformations, we create systems that are inherently more reliable, debuggable, and maintainable.

Teams implementing FITT principles consistently report significant improvements across multiple dimensions. Developer productivity increases as engineers spend less time debugging mysterious failures and more time building valuable features. System reliability improves through comprehensive testing and idempotent operations. Data quality becomes proactive rather than reactive through testing integrated into development workflows. Operational complexity decreases through fewer moving parts and clearer failure modes.

The Honest Downsides

Transparency requires acknowledging that FITT implementation comes with genuine trade-offs. Initial setup takes longer than traditional approaches because comprehensive testing and functional design require upfront investment. The benefits compound over time, but teams must be prepared for a higher initial setup cost.

Some team members resist the constraints, particularly engineers accustomed to imperative programming styles or those who view testing as optional overhead. Change management becomes crucial for successful adoption, requiring clear communication about the long-term benefits that come from short-term discipline.

You need to have tests, lots of them. But tests are the gift you give to your future self.

Better tooling becomes necessary for writing and maintaining comprehensive data quality tests. Teams need access to modern data testing frameworks, such as DataKitchen’s TestGen, or must write a large number of tests by hand to achieve coverage.

Equally important is choosing an orchestrator that integrates testing into the process flow rather than treating it as an afterthought. Modern orchestration platforms, such as Dagster, provide native data quality testing as part of their execution model, making it natural to validate data at each step of transformation. DataKitchen’s DataOps Automation platform builds testing directly into pipeline orchestration, ensuring that data quality checks are mandatory rather than optional. Even traditional orchestrators, such as Airflow, can effectively support FITT principles when combined with the right plugins and patterns that enforce testing at each stage. The key is selecting tools that make comprehensive testing the default behavior rather than something engineers must remember to add.
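To show what "testing as part of the process flow" means independent of any particular tool, here is a deliberately tool-agnostic sketch in plain Python. It does not use the Dagster, Airflow, or DataKitchen APIs; the `run_pipeline` runner, the `DataQualityError` exception, and the steps and checks are all hypothetical. Every step declares its checks, and the runner refuses to continue when a check fails.

```python
class DataQualityError(Exception):
    """Raised when a step's output fails one of its checks."""


def run_pipeline(raw, steps):
    """Run (name, transform, checks) steps in order, gating on every check."""
    data = raw
    for name, transform, checks in steps:
        data = transform(data)
        failures = [msg for check in checks for msg in check(data)]
        if failures:
            raise DataQualityError(f"{name}: " + "; ".join(failures))
    return data


# Transformations (pure functions).
def drop_null_qty(rows):
    return [r for r in rows if r["qty"] is not None]


def add_total(rows):
    return [{**r, "total": r["qty"] * r["price"]} for r in rows]


# Checks return a list of failure messages; an empty list means "pass".
def no_null_qty(rows):
    return [f"row {i}: qty is null" for i, r in enumerate(rows) if r["qty"] is None]


def non_negative_total(rows):
    return [f"row {i}: total={r['total']} is negative"
            for i, r in enumerate(rows) if r["total"] < 0]


STEPS = [
    ("clean", drop_null_qty, [no_null_qty]),
    ("price", add_total, [non_negative_total]),
]

result = run_pipeline([{"qty": 2, "price": 3.0}, {"qty": None, "price": 1.0}], STEPS)

# A bad input is stopped at the step that produced the bad output.
try:
    run_pipeline([{"qty": -1, "price": 3.0}], STEPS)
    blocked = False
except DataQualityError:
    blocked = True
```

Because the checks live in the step definition itself, adding a step without thinking about its checks takes a conscious decision, which is the default behavior we want from an orchestrator.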

Why You Should Embrace This Approach

The data engineering field suffers from endemic burnout, constant firefighting, and teams spending disproportionate time on operational issues rather than building valuable solutions. FITT isn’t magic, but it represents the closest approach we’ve discovered to making data engineering “boring” in the best possible sense—predictable, reliable, and stress-free.

The transformation from hero-driven development, where only senior engineers dare touch production systems, to an environment where any team member can contribute confidently represents the real victory. This democratization of system ownership scales teams more effectively than hiring additional senior talent.

Junior engineers thrive in FITT environments because the system’s predictable behavior enables them to develop intuition about data engineering without fear of causing catastrophic failures. Senior engineers appreciate the reduced cognitive load, allowing them to focus on high-value coding rather than constantly firefighting.

What’s important? Process acting on data >> data itself. Why? Cognitive load.

The approach has proven successful across various industries, team sizes, and technical stacks. Whether you’re processing financial transactions, customer behavior data, or IoT sensor readings, the fundamental principles of functional design, idempotent operations, comprehensive testing, and simplified staging apply universally.

Most importantly, FITT enables data teams to focus on their core mission: transforming raw information into business value. When the infrastructure becomes reliable and predictable, engineers can focus on understanding business requirements, optimizing for user needs, and developing innovative solutions rather than maintaining fragile systems.

Most data architectures are overly complex, trend-following, and not built for rapid change, high quality, or ease of work.

The choice is ultimately simple: continue accepting the current state of data engineering chaos as inevitable, or invest in systematic approaches that make the work sustainable and enjoyable. FITT provides a proven path toward the latter, backed by years of real-world implementation across diverse environments.

Every data team deserves to experience the satisfaction of systems that just work, deployments that happen without drama, and on-call rotations that rarely interrupt personal time. FITT makes this vision achievable through the systematic application of proven principles, rather than relying on luck with the next architectural trend.

Want to learn more?

FITT vs. Fragile: SQL & Orchestration Techniques for FITT Data Architectures

Want to learn about this in a webinar format?

More reading

- https://www.dataengineeringweekly.com/p/functional-data-engineering-a-blueprint

- https://maximebeauchemin.medium.com/functional-data-engineering-a-modern-paradigm-for-batch-data-processing-2327ec32c42a

- https://github.com/sbalnojan/easy-functional-data-engineering

- https://medium.com/geekculture/idempotent-data-pipeline-ba4c962d8d8c

- https://www.youtube.com/watch?v=uev_27z3-1s