A Guide to Ops Terms and Whether We Need Them

It is challenging to coordinate a group of people working toward a shared goal. Work involving large teams and complex processes is even more complicated. Technology-driven companies face these challenges with the added difficulty of a sophisticated technical environment. It is no wonder then that the technology industry sometimes struggles to find coherent terminology to describe its own processes and workflows.

In the past couple of years, there has been a tremendous proliferation of acronyms with the “Ops” suffix. The trend started in the software space with the merger of development (Dev) and IT operations (Ops) into DevOps. Since then, people have been creating new Ops terms at a rapid pace:

- AIOps – Algorithmic IT Operations, also known as “Artificial Intelligence for IT Operations.” Replaces manual IT operations tools with an automated IT operations platform that collects IT data, identifies events and patterns, and reports or remediates issues, all without human intervention.

- AnalyticsOps – Schedule, manage, monitor and maintain models under automation.

- AppOps – The application developer is also the person responsible for operating the app in production; the operational side of application management, including release automation, remediation, error recovery, monitoring, maintenance.

- ChatOps – The use of chat clients, chatbots and real-time communication tools to facilitate how software development and operation tasks are communicated and executed.

- CloudOps – Attain zero downtime based on “continuous operations”; run cloud-based systems in such a way that there is never a need to take part or all of an application out of service. Software must be updated and placed into production without any interruption in service.

- DataOps – a collection of data-analytics technical practices, workflows, cultural norms and architectural patterns that enable: rapid innovation and experimentation; extremely high quality and very low error rates; collaboration across complex arrays of people, technology, and environments; and clear measurement, monitoring and transparency of results. In a nutshell, DataOps applies Agile development, DevOps and lean manufacturing to data-analytics (Data) development and operations (Ops).

- DataSecOps – DevSecOps for data analytics

- DevOps – a set of practices that combines software development (Dev) and information-technology operations (Ops) that aims to shorten the systems development life cycle and provide continuous delivery with high software quality.

- DevSecOps – views security as a shared responsibility integrated from end to end. Emphasizes the need to build a security foundation into DevOps initiatives.

- GitOps – use of an artifact repository that always contains declarative descriptions of the infrastructure currently desired in the production environment and an automated process to make the production environment match the described state in the repository.

- InfraOps – the layer consisting of the management of the physical and virtual environment, which may very well be within a cloud environment. On top of this layer would be Service Operations (‘SvcOps’) and Application Operations (‘AppOps’).

- MLOps – machine learning operations practices meant to standardize and streamline the lifecycle of machine learning in production; orchestrating the movement of machine learning models, data and outcomes between the systems.

- ModelOps – automate the deployment, monitoring, governance and continuous improvement of data analytics models running 24/7 within the enterprise.

- NoOps – No IT infrastructure; software and software-defined hardware provisioned dynamically
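
Of the definitions above, GitOps describes the most concrete mechanism: a declared desired state plus an automated process that makes production match it. The sketch below is a minimal illustration of that reconcile idea, not how any particular GitOps tool works. The state model (service name mapped to a version string) and the function names `plan_reconcile` and `apply_actions` are assumptions made for this example.

```python
from typing import Dict, List, Tuple

# Hypothetical state model for illustration: service name -> version string.
State = Dict[str, str]


def plan_reconcile(desired: State, actual: State) -> List[Tuple[str, str]]:
    """Compare the declared state with the running state and list the fixes."""
    actions: List[Tuple[str, str]] = []
    for name, version in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))   # declared but not running
        elif actual[name] != version:
            actions.append(("update", name))   # running the wrong version
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))   # running but no longer declared
    return actions


def apply_actions(desired: State, actual: State,
                  actions: List[Tuple[str, str]]) -> State:
    """Return the state that results from applying the planned actions."""
    new_state = dict(actual)
    for action, name in actions:
        if action == "delete":
            new_state.pop(name)
        else:  # "create" and "update" both install the declared version
            new_state[name] = desired[name]
    return new_state


# Example data (made up): what the repository declares vs. what is running.
desired = {"web": "1.4.0", "worker": "2.0.1"}
actual = {"web": "1.3.9", "cron": "0.9.0"}

actions = plan_reconcile(desired, actual)
reconciled = apply_actions(desired, actual, actions)
```

Running the plan again on the reconciled state yields no actions, which is the property that lets such a process run continuously.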

There are probably even more Ops terms out there (honestly, we got tired of googling). Naturally, people have found this confusing and have questioned whether all these acronyms are necessary. As students of management methodology and lovers of software tools, we thought we might take a stab at sorting this all out.

Taylorism

After the Industrial Revolution (~1760 to 1840), manufacturing still relied heavily on human labor. Naturally, managers looked for ways to improve efficiency. Frederick W. Taylor (1856-1915) revolutionized factories with a methodology called “scientific management” or “Taylorism.” To improve plant productivity, Taylorism timed the movements of workers and eliminated wasted motion and unnecessary steps in repetitive jobs. Applying analysis to manufacturing processes produced undeniable efficiencies and, when taken to extremes, provoked resentment among workers. Taylorism took a top-down approach to managing manufacturing and treated people as automatons.

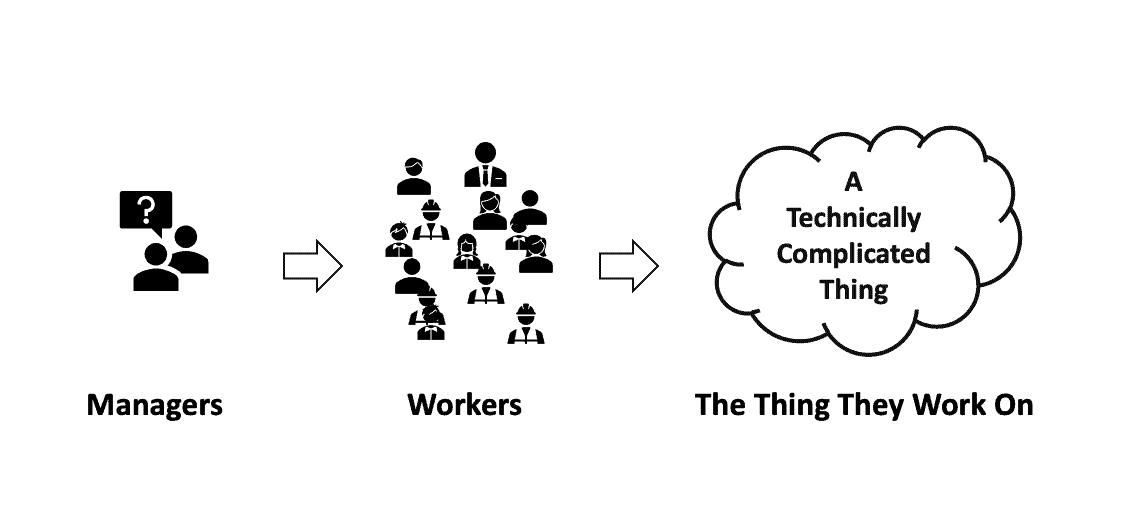

Figure 1: When trying to produce a “technically complex thing” (TCT), organizations need communication between managers and the people doing jobs.

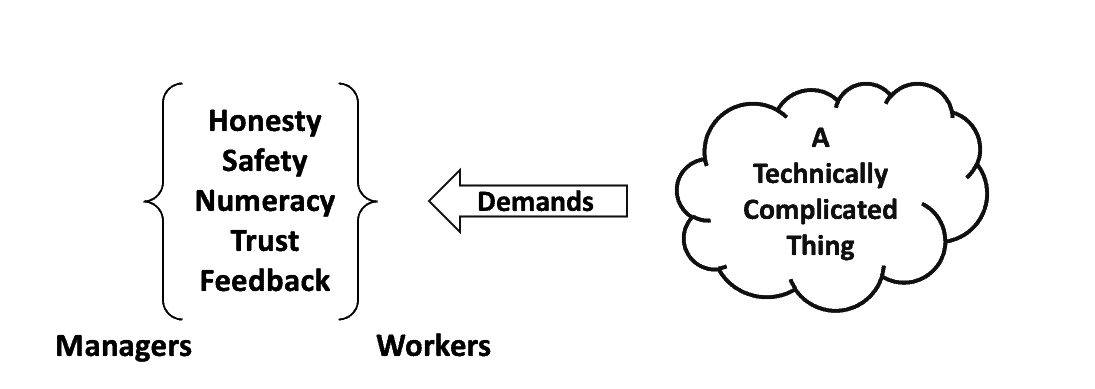

Top-down, dictatorial control rarely works in modern manufacturing endeavors, which have grown in scale and complexity. When trying to produce a “technically complex thing” (TCT), organizations need communication between managers and the people doing jobs. A TCT could be an industrial product (like ventilators), software or data analytics. These endeavors demand a culture of honesty, safety, numeracy, trust and feedback. New management methods emerged based on these requirements.

Figure 2: Production of a “technically complex thing” demands that a culture of honesty, safety, trust and feedback exist between managers and employees.

Managing Technically Complex Things

In the era of producing TCTs, we have many methodologies that organize human activity to deliver benefits to society and value to individuals while eliminating waste. We sometimes group all of these methodologies under the term “Lean Manufacturing.” Lean seeks to identify waste in manufacturing processes by eliminating errors, reducing cycle time, improving collaboration and emphasizing measurement. Lean is about self-reflection and seeking smarter, less wasteful, dynamic solutions together.

Figure 3: In the era of TCTs, manufacturing methods like Six Sigma, Total Quality Management and the Toyota Way focus on eliminating errors, reducing cycle time, improving collaboration and emphasizing measurement. These methods build upon the pioneering work of W. Edwards Deming.

Applying Lean to Software Development

As the software industry emerged, companies began to understand that lean principles could be equally transformative in the context of software development. Development organizations began to apply “lean” to their software development processes.

Figure 4: Lean manufacturing principles applied to software development took the form of Agile, Scrum, Kanban and Extreme Programming.

Lean in Data Science

More recently, data-analytics organizations have begun applying lean principles to their methodologies. Enterprises following this path find that these methods help data science, data engineering, BI and governance teams produce better results more efficiently.

Figure 5: Applying lean principles to data analytics

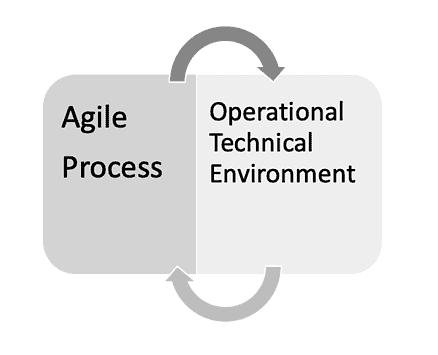

The application of lean principles in the technology space is facilitated by progress in automating the technical environment underlying the end-to-end processes. Continuous deployment, a DevOps practice, played a critical role. After all, there’s little point in performing weekly sprints if it takes months to deploy a release. The Agile management process was a step forward, but insufficient on its own. Dev teams needed the support of the technical environment to optimize management processes further.

Figure 6: The benefits of Agile development can’t be fully realized without optimizing the operational technical environment.

The Technical Environments Enabling Agile

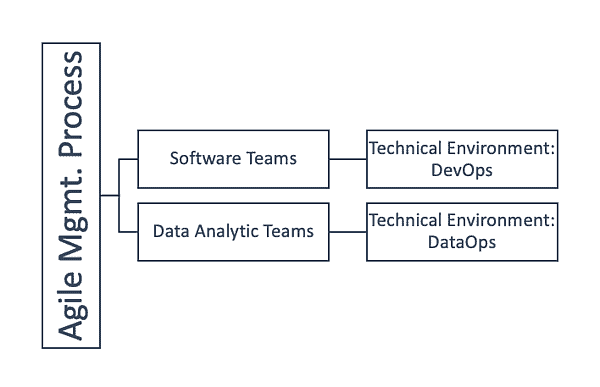

DevOps supplies the technical environment that enables Agile to be applied in software development and IT. With DevOps, dev teams create automated processes that deploy new features or bug fixes in minutes. The flexibility that DevOps and Agile enable has helped many companies attain a leadership position in their markets. However, DevOps and Agile together could not enable these same efficiencies in data analytics.

Figure 7: Software and data-analytics teams have unique technical environments, which are addressed by DevOps and DataOps respectively.

Data Analytics Requires More Than DevOps

Data analytics differs from traditional software development in significant ways. For example, when a data professional spins up a virtual sandbox environment for a new development project, they need data in addition to a clone of the production technical environment. In software development, test data is usually straightforward. In data analytics, there could be governance concerns. Data quality affects outcomes. There could also be concerns about the age of data: a model trained on data that is three months old might behave differently on data that is one day old. In addition, predictive analytics can be invalidated if the data doesn’t all originate from the same point in time. There are many issues to consider when provisioning test data in data analytics.
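
Two of the test-data concerns above, data age and point-in-time consistency, can be checked automatically before a sandbox is handed to a developer. The following is a rough sketch under stated assumptions: the `Snapshot` record, the `check_test_data` function, the table names and the thresholds are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Snapshot:
    """Hypothetical record describing one extracted table of test data."""
    table: str
    as_of: datetime  # the point in time the extract represents


def check_test_data(snapshots: List[Snapshot], now: datetime,
                    max_age: timedelta, max_skew: timedelta) -> List[str]:
    """Return a list of problems found; an empty list means the data passes."""
    problems: List[str] = []
    # Age check: flag any table whose extract is older than allowed.
    for snap in snapshots:
        if now - snap.as_of > max_age:
            problems.append(f"{snap.table}: older than allowed age")
    # Consistency check: all extracts should come from about the same time.
    times = [snap.as_of for snap in snapshots]
    if times and max(times) - min(times) > max_skew:
        problems.append("snapshots span more than the allowed skew")
    return problems


# Example data (made up): one fresh extract and one three-month-old extract.
now = datetime(2024, 1, 10)
snapshots = [
    Snapshot("orders", datetime(2024, 1, 9)),
    Snapshot("customers", datetime(2023, 10, 1)),
]
problems = check_test_data(
    snapshots, now, max_age=timedelta(days=7), max_skew=timedelta(hours=1)
)
```

Appropriate thresholds depend on the analytics involved; the values here are placeholders. Governance and data-quality checks would need their own rules and are not covered by this sketch.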

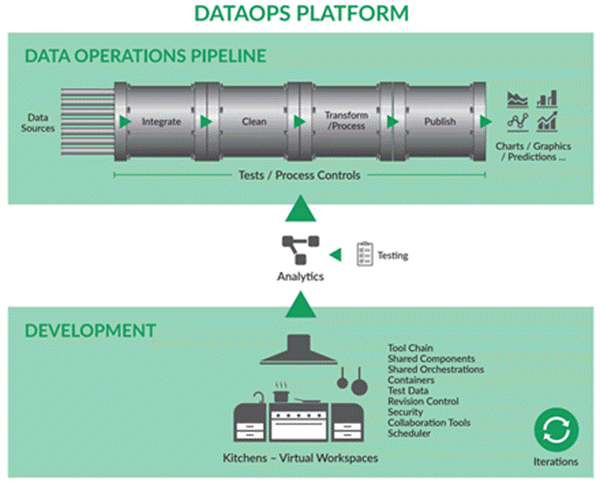

Another major difference between software development and data analytics is the data factory. Streams of data continuously flow through the data-analytics pipeline. Data analytics resembles a manufacturing process more than a software application. For these reasons (and more), DevOps by itself is insufficient to enable Agile in data organizations. Data-analytics teams created DataOps, a technical environment tuned to the needs and challenges of data teams.

Figure 8: Data analytics must orchestrate many pipelines including data operations.

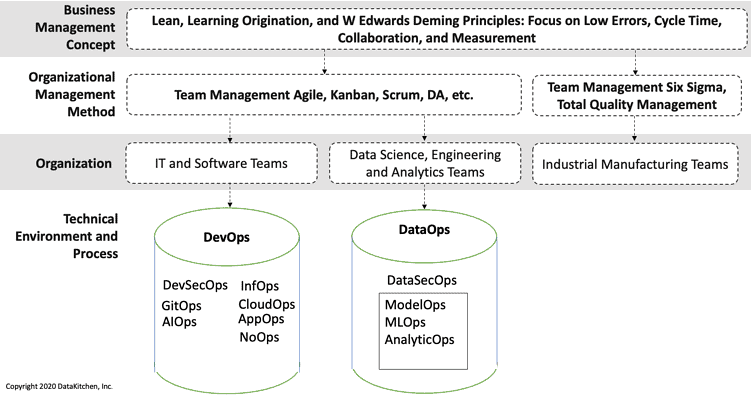

Teams and Technical Environments Define Ops

DevOps is the foundational technical environment for IT and software teams. DataOps is the technical environment of data-analytics teams. The figure below shows how DevOps and DataOps serve as the foundation for all the other Ops terms. Each of the others represents a branch off the DevOps or DataOps tree. A new Ops term may be coined for a subgroup of a team, for a requirement to use different methods or tools, or both. For example, DevSecOps emphasizes security in DevOps development. DataSecOps performs the same function for DataOps.

When terms point to the same team members and the same genre of tools, the Ops terms are synonymous. For example, ModelOps, MLOps and AnalyticsOps all focus on the unique problems of data scientists creating, deploying and maintaining models and AI-assisted analytics using ML and AI tools and methods. Maybe the industry doesn’t need all three of these terms.

Figure 9: Ops terms can be organized by team and technical environment/process.

Stay Lean

Whenever a term or acronym gains momentum, marketers go to great lengths to associate their existing offerings with whatever is being hyped. Sometimes that creates a backlash that drowns out some good ideas. You may believe that you do not need a new Ops term, or you may find that one helps to galvanize your target audience and increases focus on the technical environment critical to your projects. Either way, stay focused on the goals of lean manufacturing. Anything that eliminates errors, streamlines workflow processes, improves collaboration and enhances transparency aligns with DevOps, DataOps and all the other Ops terms out there.

To learn more about the differences between DataOps and DevOps, read our white paper, DataOps is NOT Just DevOps for Data.