Tests identify data and code errors in the analytics pipelines. Automated orchestration of tests is especially important in heterogeneous technical environments with streaming data. The DataKitchen Platform makes it easy to write tests that check and filter data across the end-to-end data pipeline.

Nodes, Recipes, Orders, and Variations

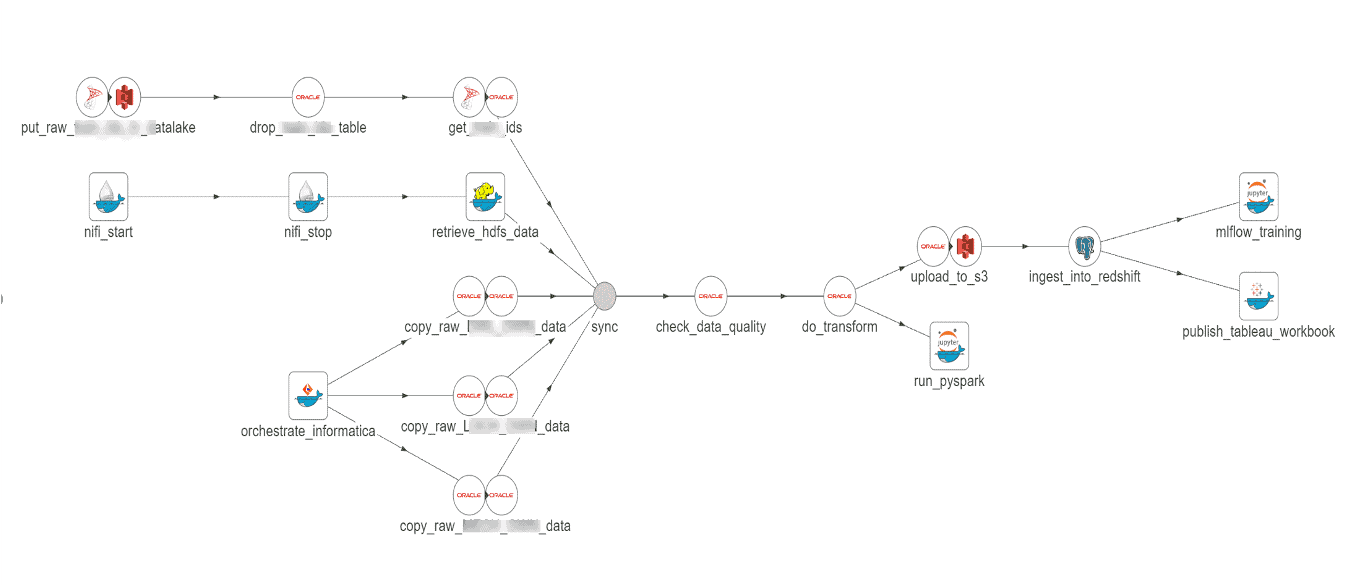

Our discussion of testing will flow more easily if we define some terminology upfront. The data pipeline is best conceptualized as a directed-acyclic graph (DAG), as shown in the diagram below. Here are some terms that are helpful to DataKitchen users:

- Node – Each Node in the DAG can represent some processing or transformation operation. A pipeline Node may contain substeps and tests. A DataOps Platform incorporates complex toolchains into a coherent work environment. When the graph spans a heterogeneous technical environment, each Node includes the appropriate tooling-specific steps.

- Recipe – defines a collection of one or more Nodes for automated orchestration

- Order – an orchestrated execution of a Recipe

- OrderRun – a specific instance of an Order execution

- Recipe-Variation (or just Variation) – a Recipe that executes based on parameters Parameters include graph definition, Order schedules, runtime resource configuration, tooling-instance connections, source-data instances, and more

Figure 1: The data pipeline illustrated as a directed-acyclic graph.

In DataOps, automated testing assures quality and verifies the absence of errors. The data team writes tests to validate each Node’s inputs and outputs. Using the DataKitchen Platform, tests can be defined to execute as part of data pipeline orchestration. Tests span a wide range of complexity, from simple metrics, like checking row counts, to evaluating results using statistical controls or sophisticated business logic. Tests may also be configured to take action. For example, a failed test may stop an OrderRun in place, transmit an alert, or simply log results.

This post discusses the step-by-step approach to writing a Node input test using the DataKitchen Platform.

Test to Verify Record Count

Step 1: Define the runtime variable

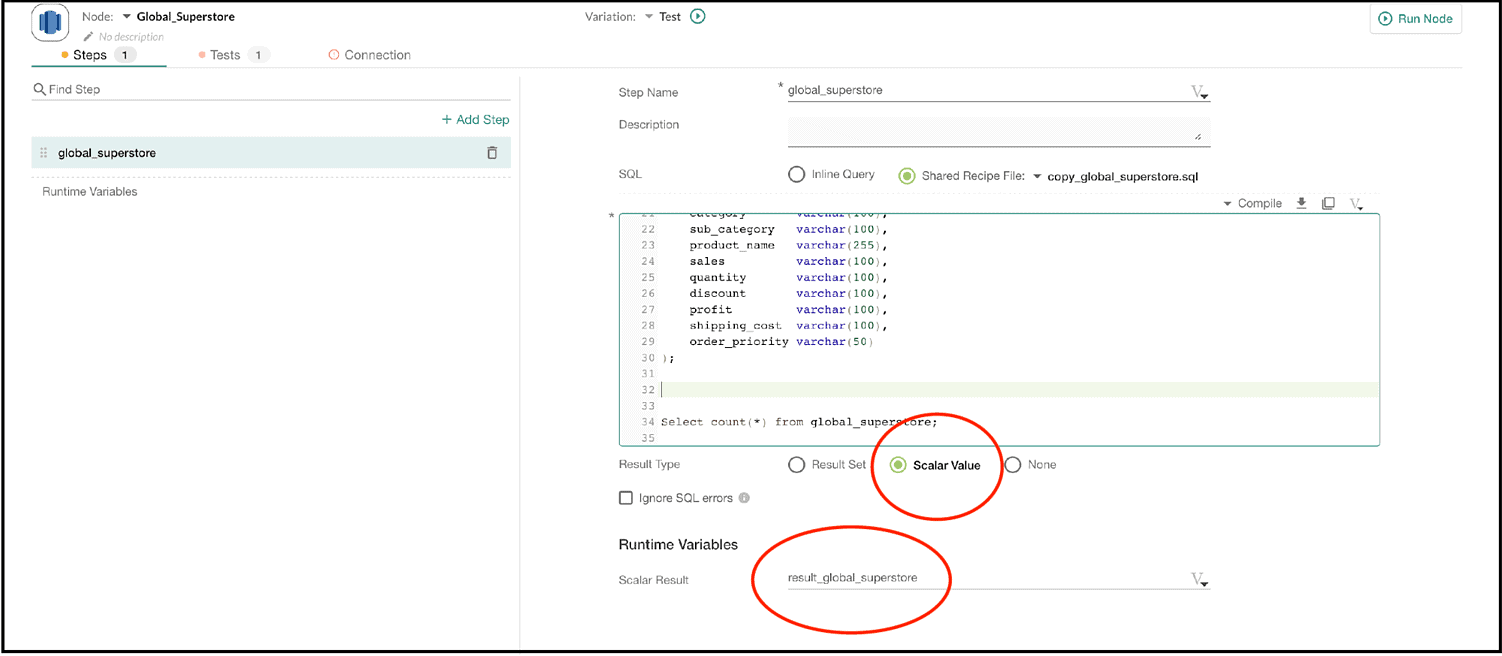

The CSV file ‘global superstore’ is ingested into a database using an SQL script. The last line in the SQL script creates a variable that stores the number of records in the data table. We would like to add a test that verifies that the record count is above a certain threshold.

The screenshot below shows the DataKitchen UI for creating a test of a Variation. Since the count is a scalar value, we select the result type as scalar value and define a runtime variable result_global_superstore.

Figure 2: The DataKitchen UI provides the user with a simple way to create tests of Variations.

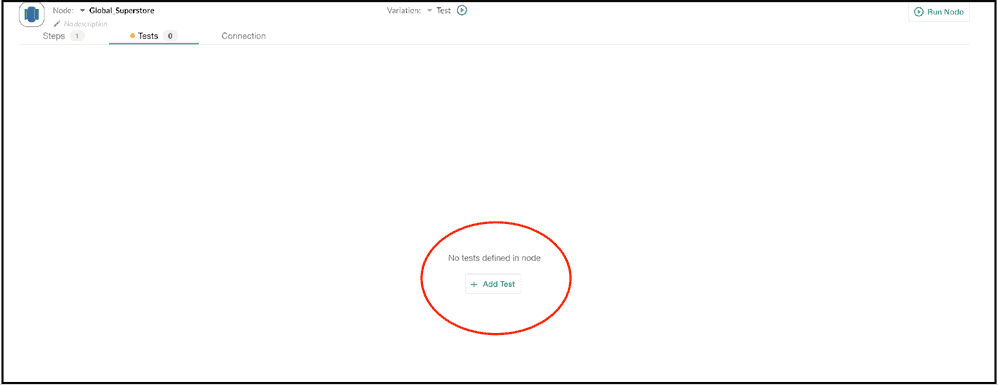

Step 2: Select the Test tab and add a test

Click on the test tab at the top of the screen and select +Add test.

Figure 3: The user adds a test of a Variation using a simple UI.

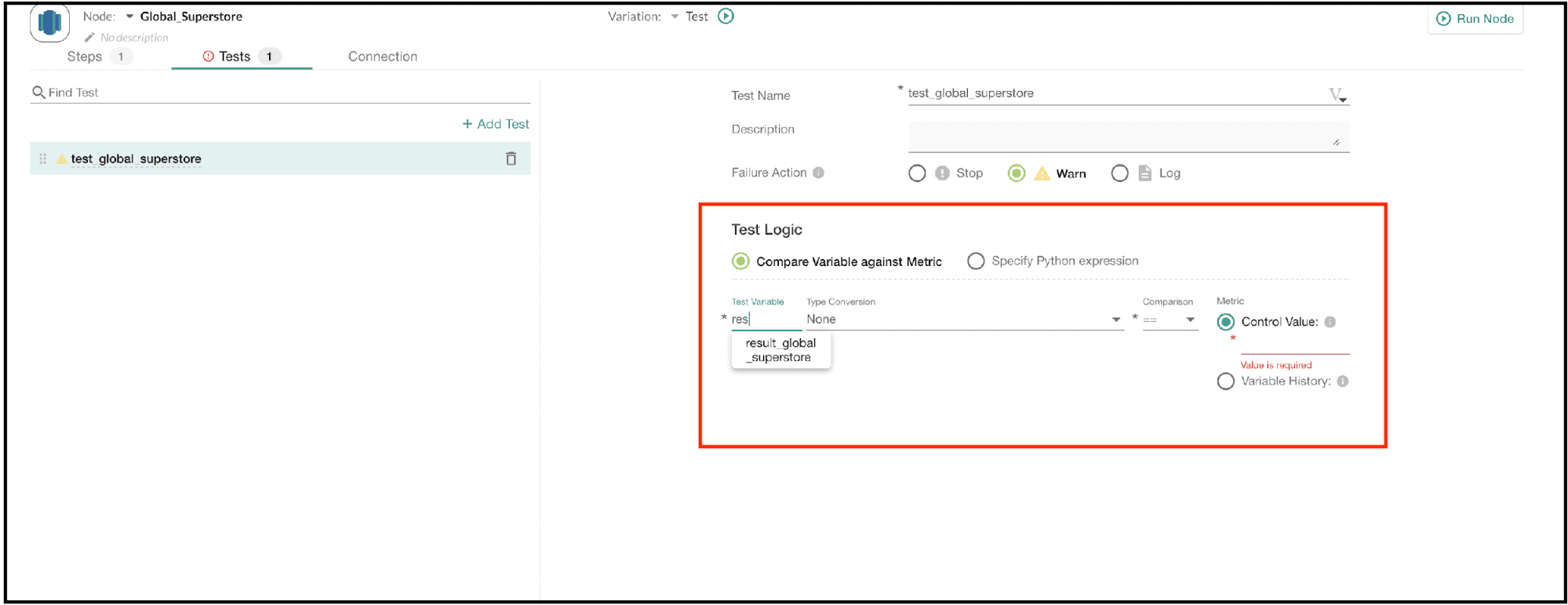

The system creates a default ‘test1’. We specify the details of the test on the right-hand side of the screen.

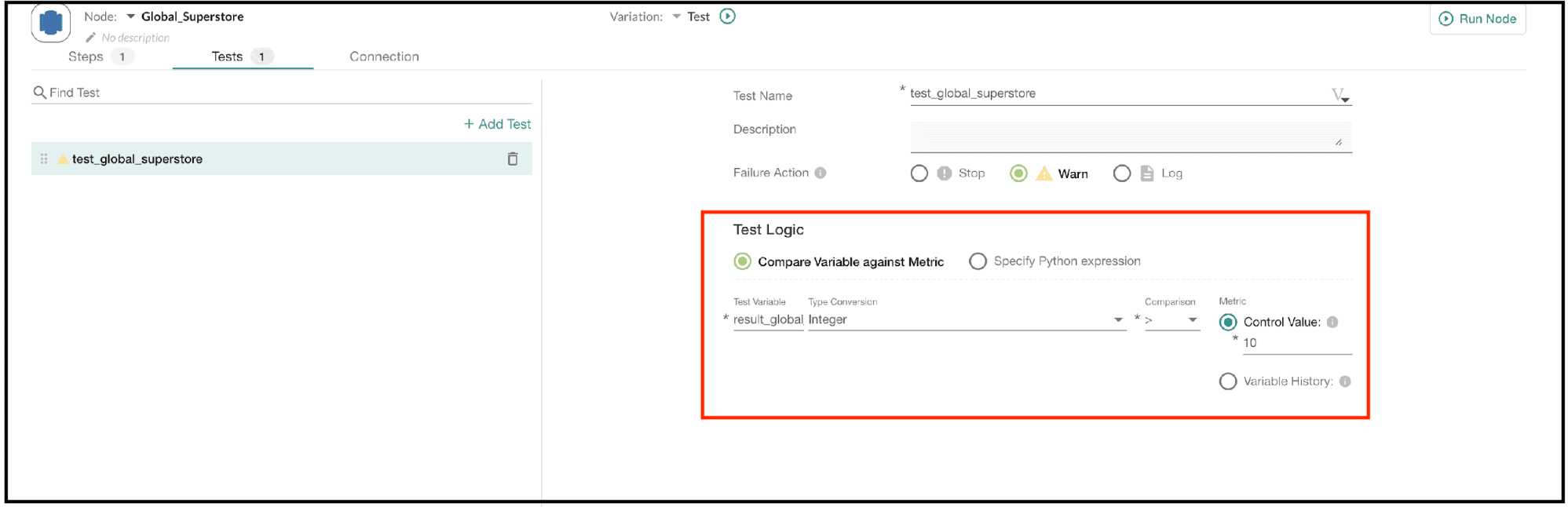

Step 3: Select the test variable, comparison, and control value

We change the test name to test_global_superstore – a more meaningful name. The description field can be used to describe the test you are performing and why it is needed. The Failure Action field determines what happens if your Node test fails. Here are the standard options:

- Stop: The OrderRun will be stopped, and subsequent Nodes will not be executed.

- Warn: The Order will continue to run, but a warning message will be displayed.

- Log: The results will be logged whether the test passes or not.

Let us select the Warn option for this test.

The Test Logic section defines the test condition:

- ‘Compare Variable against Metric’ uses the UI to build the test.

- ‘Specify Python Expression’ requires a python expression.

For this post, we create a simple test to evaluate the table row count using the option, Compare Variable against Metric.

Click on the ‘Test Variable’ field to view a list of available variables. Select result_global_superstore, which was defined earlier. Suppose that we wish to verify that the value is greater than ten. In a real-world application, this might be a historical balance test. For example, the number of YTD sales orders should never decline.

Figure 4: The test variable name, type, and comparison value are defined using the DataKitchen UI.

In the Type Conversion field select ‘integer’ from the drop-down list to assign result_global_superstore a type.

Figure 5: Test variables can be assigned to one of several basic data types.

In the comparison field, select ‘>’ from the drop-down list and set the ‘Control Value’ to ten. Click update.

Figure 6: The test compares the variable result_global_superstore to a control value.

Step 4: Run Variation and check test results

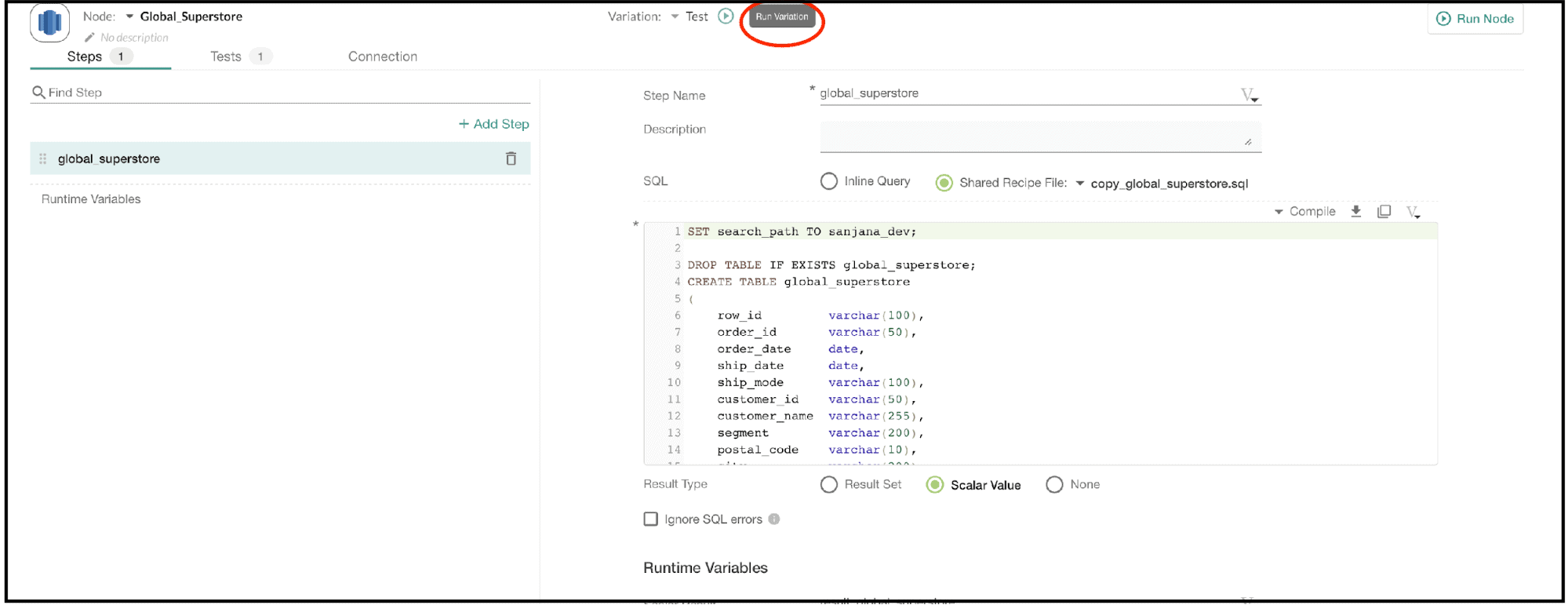

Click the Run Variation option in the DataKitchen UI. Then click the Run button in the Run Variation overlay.

Figure 7: Executing the Variation using the DataKitchen UI.

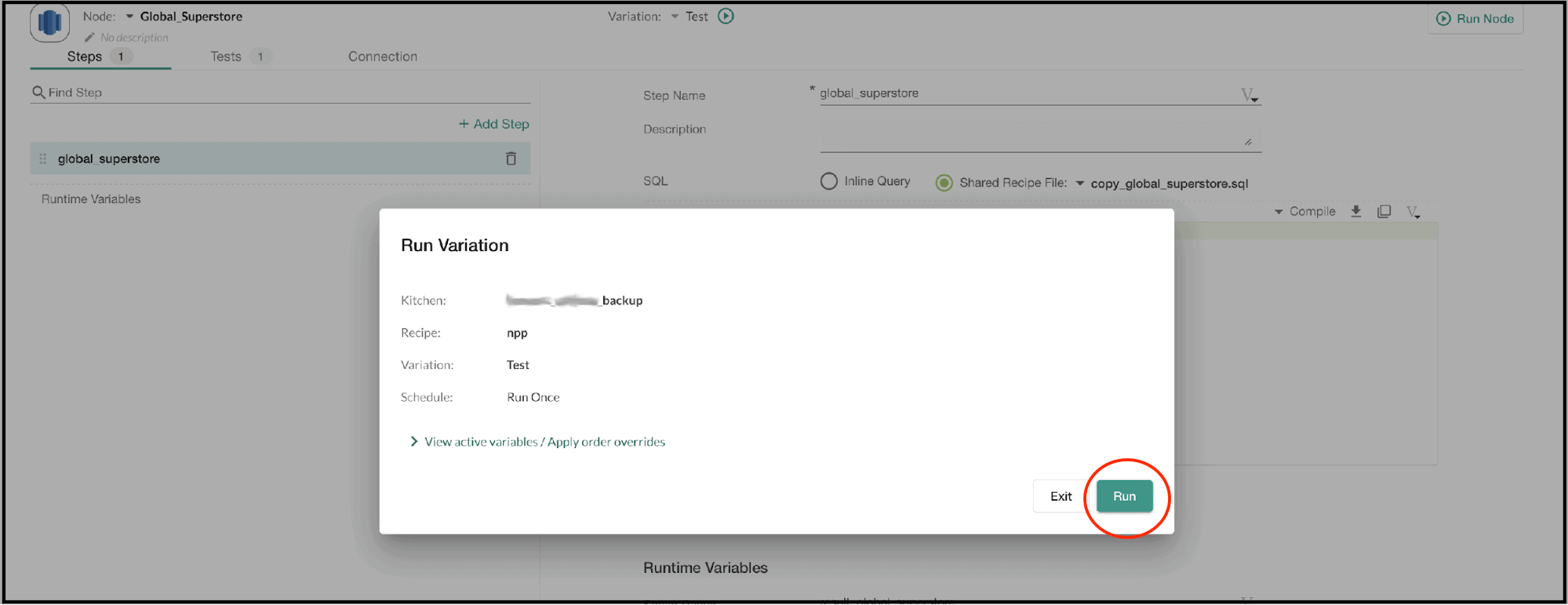

Confirm the Variation execution by clicking the Run button.

Figure 8: The Run Variation dialogue box.

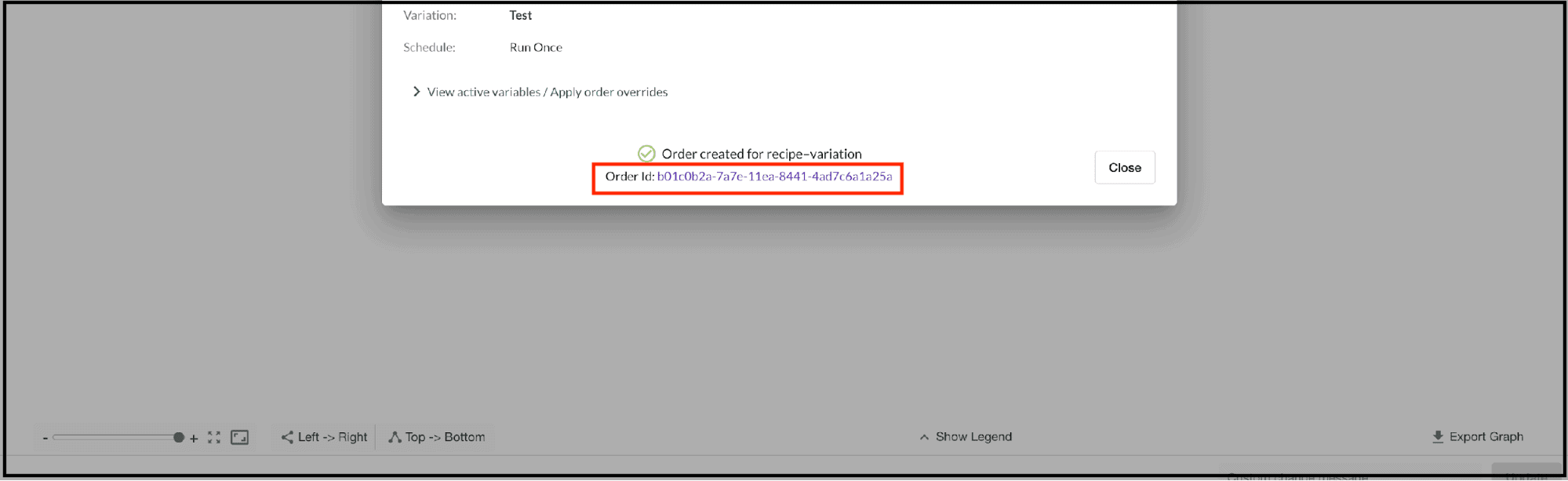

After running the Variation, view Order status by clicking on the ‘Order ID’ link to open the Orders screen.

Figure 9: After initiating an OrderRun, navigate to the Orders screen using the link shown.

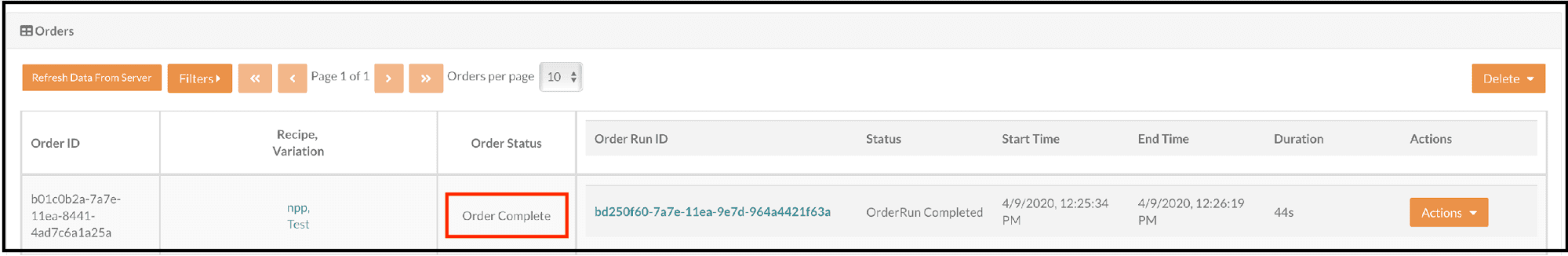

On the Orders screen, click the Refresh Data From Server button if needed to display the OrderRun ID for the Order. In this case, our Order has completed running, and the Order status is Order Complete.

Figure 10: The Orders table shows that the OrderRun is complete.

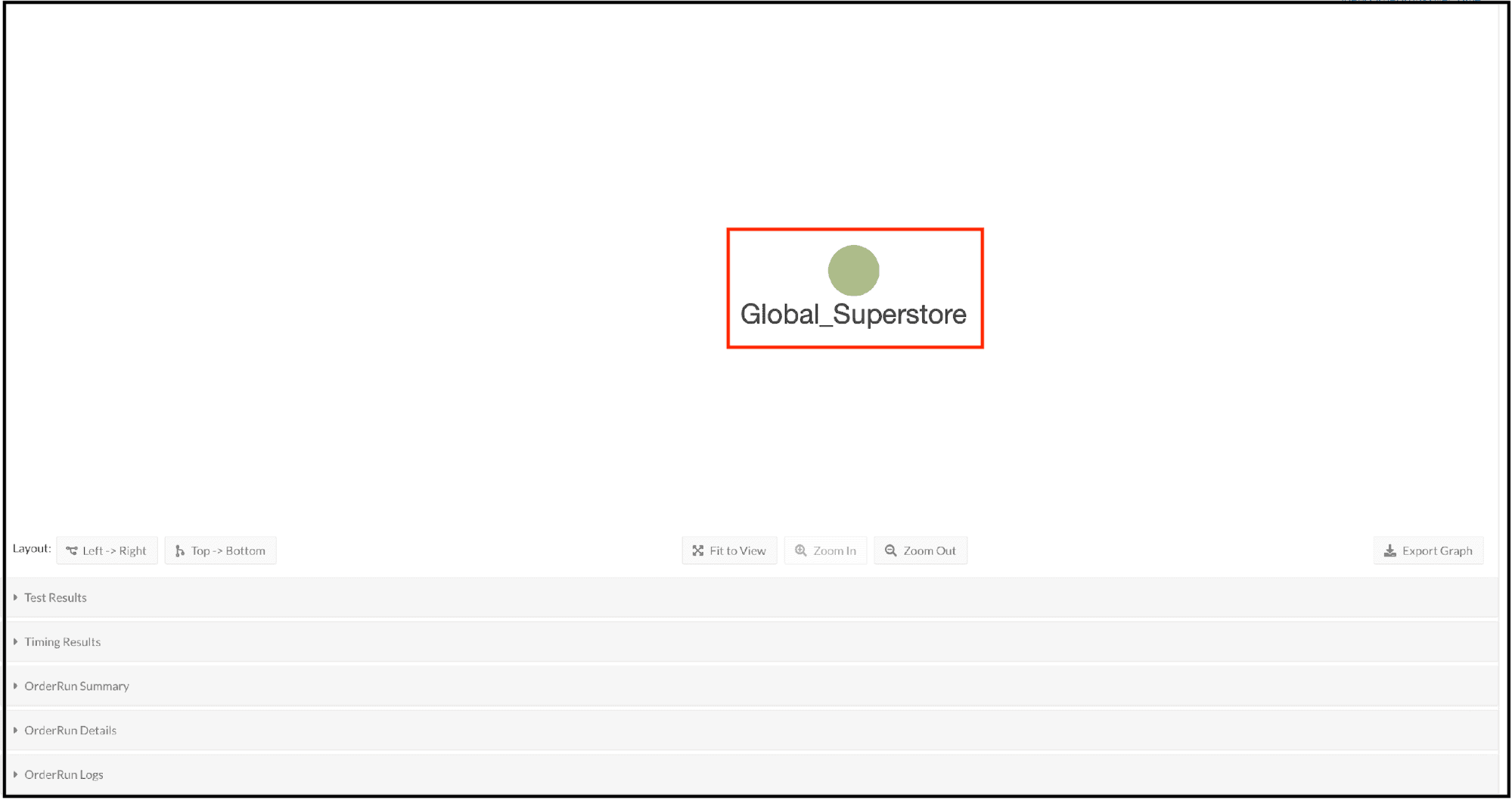

Click the linked OrderRun ID, on the Orders screen to advance to the OrderRun Details screen. The OrderRun Details screen shows the Recipe map. The color of each Node reflects its status:

- Blue – Order is still active

- Red – Error in the OrderRun

- Green – OrderRun is complete

Figure 11: The OrderRun containing one Node Global_Superstore has completed.

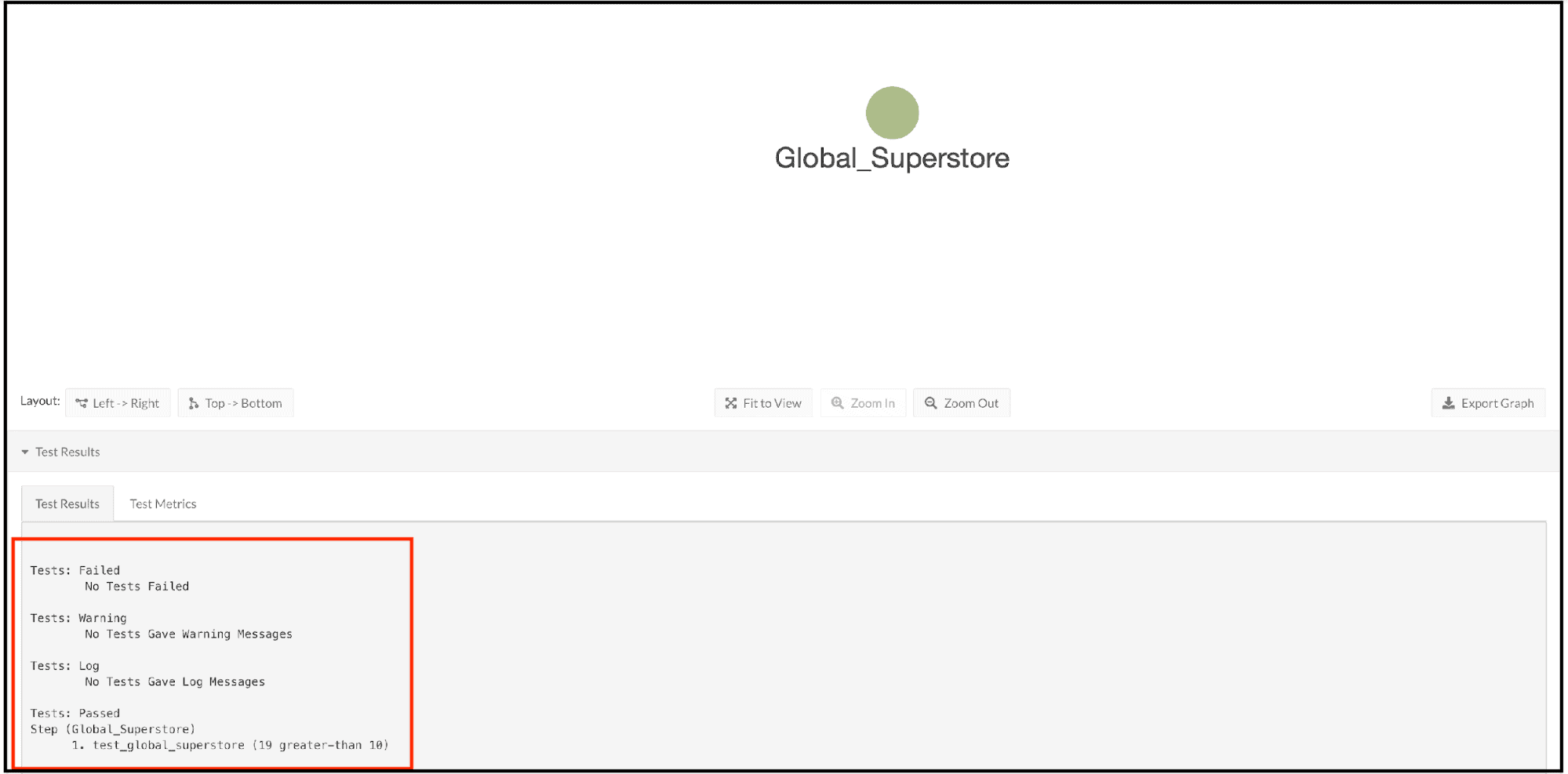

Scroll below the graph and expand the Test Results section. In the Test Results, we can see that the test did not fail, it did not produce a warning, and it did not record any messages in the log. The Tests: Passed section shows one test for the Node ‘Global_Superstore’, named ‘test_global_superstore’, and the values from that test. This is the test that we defined above. Nineteen records were counted in the data table, and this is indeed greater than our ‘Control Value’ of ten.

Figure 12: Test results for the OrderRun of Node Global_Superstore

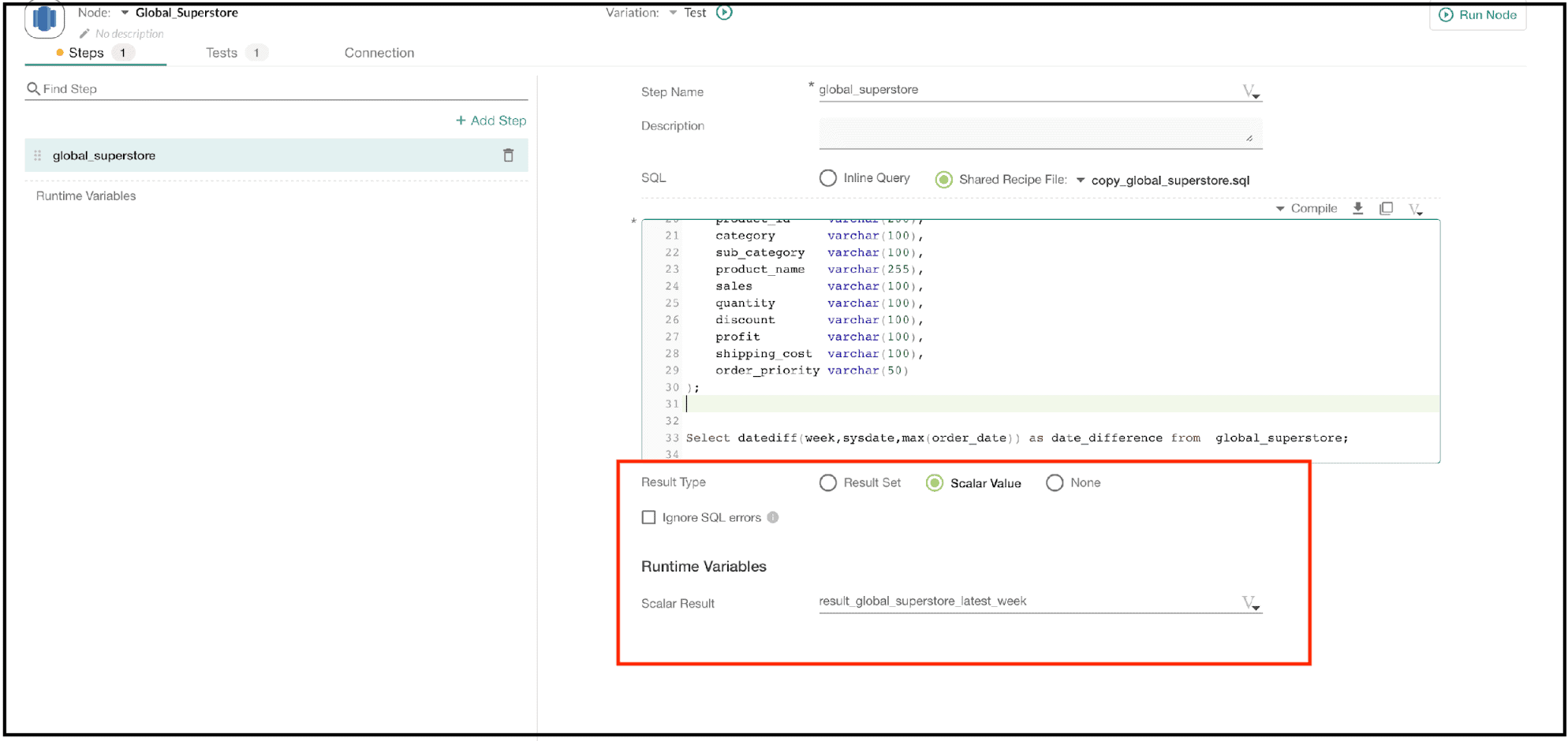

Test to Check the Latest Order Week

Let’s take a look at another example test. The test in this section verifies that we are using the latest version of the data. If we are using stale data, the difference between the date of the most recent Node and today’s date will be more than one week. In the below screenshot, we can see that the last line of the SQL script calculates the difference in weeks between the current system date and the maximum (latest) sales order date. The resultant value is assigned to the runtime variable result_global_superstore_latest_week. If the data is current, the value in result_global_superstore_latest_week will be ‘-1’. If the data hasn’t been updated this week, then the calculated value will be ‘-2’.

Figure 13: Defining a runtime variable for testing the age of the data table.

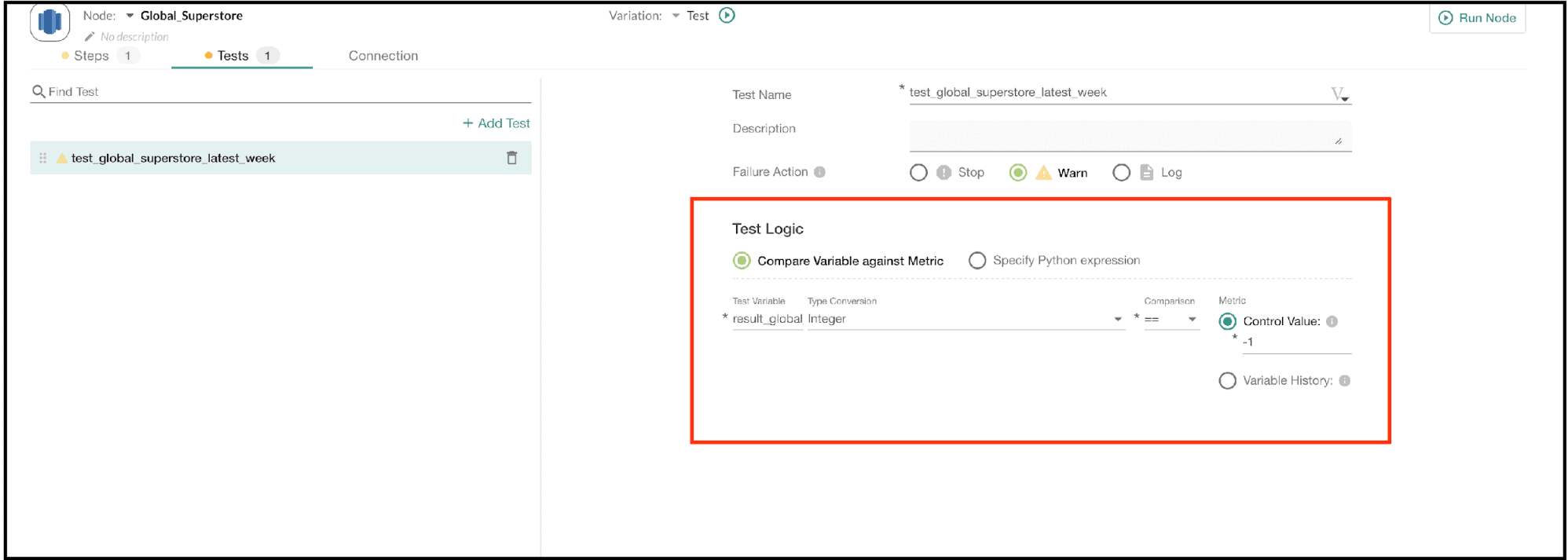

In this step we select the test variable result_global_superstore_latest_week created in the previous step, and select ‘integer’ under the ‘Type Conversion’ section. The comparison operator is ‘==’ (test if one value equals another) and ‘Control Value’ is a ‘-1’. In other words, the test verifies that result_global_superstore_latest_week is equal to ‘-1’. If it is any other value, the test will fail. Next, we update the changes and run the Variation.

Figure 14: Testing if result_global_integer is equal to ‘-1’.

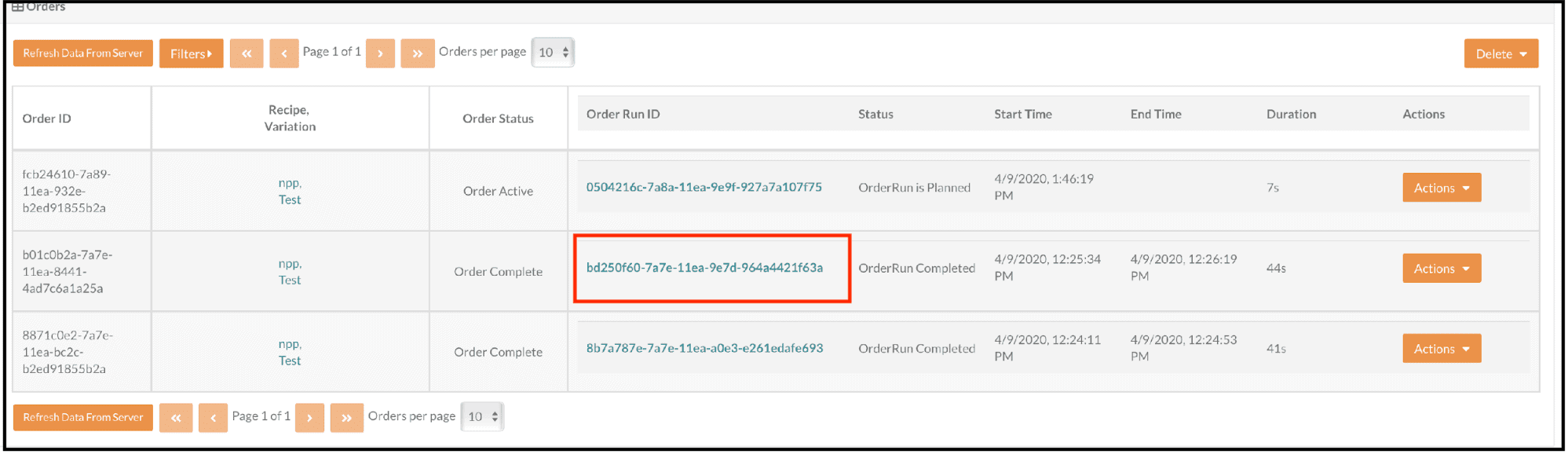

Click the linked OrderRun ID, on the Orders screen. It takes you to the OrderRun Details screen.

Figure 15: The Order results table

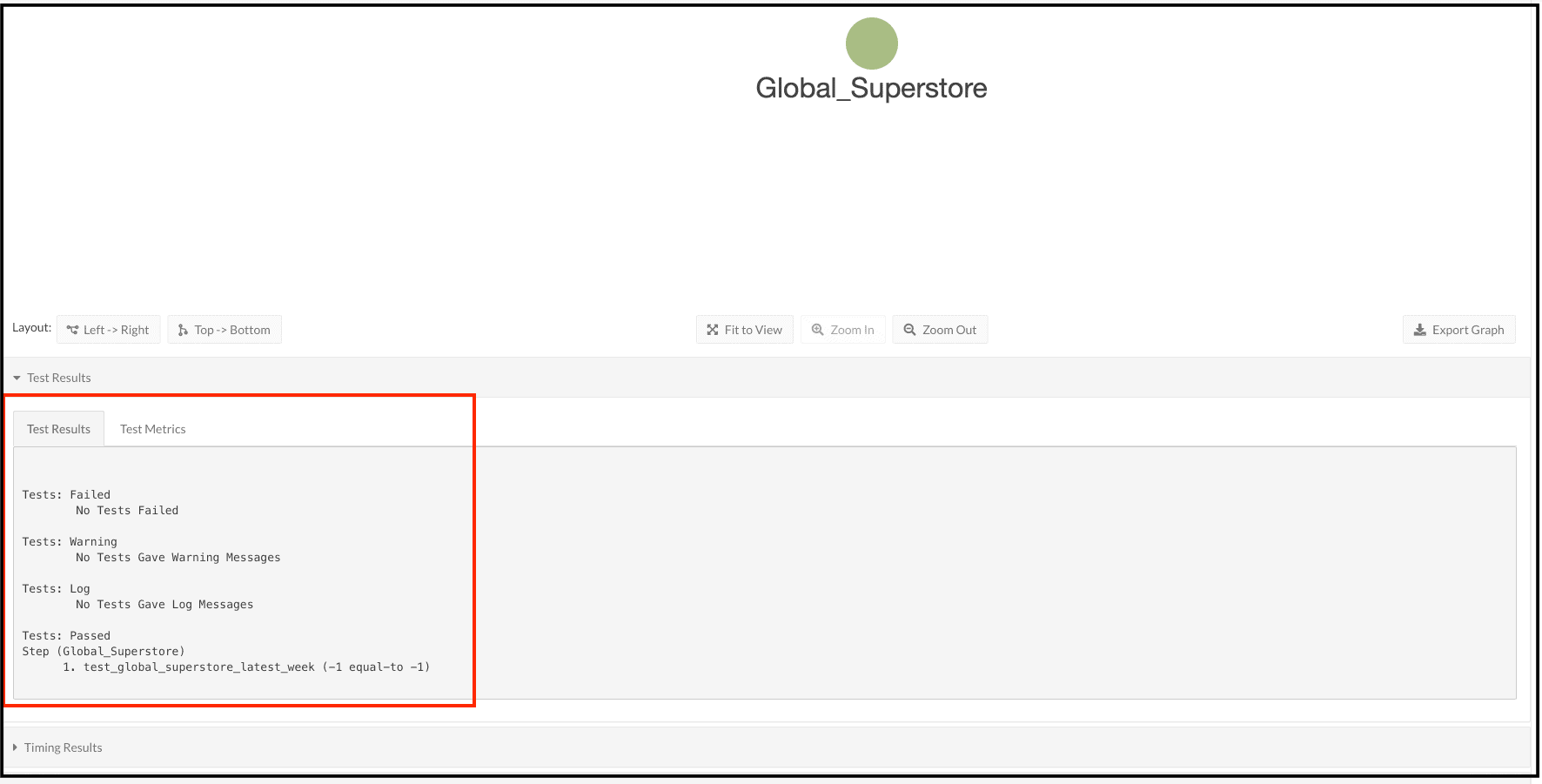

Scroll below the graph and expand the Test Results section. The Tests: Passed section shows one test for the Node ‘Global_Superstore’, named ‘test_global_superstore_latest_week’, and the values in that test. We can see that the test passed because the result_global_superstore_latest_week was equal to ‘-1’.

Figure 16: Test results after executing the Node Global_Superstore

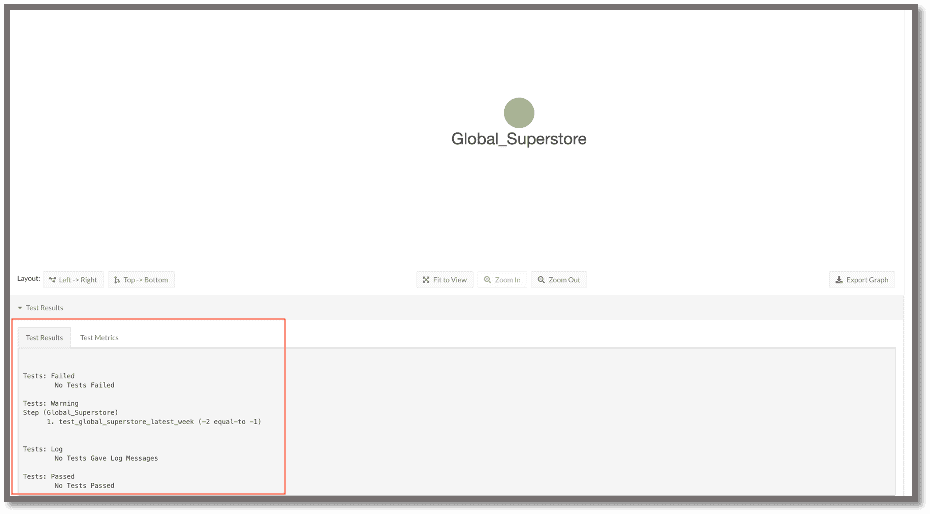

What happens if we run this test on a data table with stale data? We update the raw data file by deleting the sales orders from the most recent week. The test should produce a warning. Without making any changes to the test that is already in place, let us run the Variation and see what happens.

Figure 17: The test correctly identifies stale data and as configured, issues a warning

Recall that we selected the ‘Warn’ option in the failure action field on the test tab. In the test results section on the Order details page, we can see that the test did not fail or log results, but it did produce a warning. The Tests: Warning shows one test for the Node ‘Global_Superstore’, named ‘test_global_superstore’, and the values in that test. The value result_global_superstore_latest_week was equal to ‘-2’ which correctly triggered the warning.

Conclusion

The DataKitchen Platform provides a straightforward way to write powerful data tests that verify the validity of data. DataKitchen users interact with a straightforward UI able to abstract the complexity of a heterogeneous toolchain, characteristic of most data pipelines. The UI enables the user to easily specify test conditions, reporting and conditional actions. Tests prevent erroneous or missing data from impacting the quality of end deliverables which ultimately reduces unplanned work that diminishes productivity.

To learn more about DataOps testing, visit our blog, Add DataOps Tests for Error-Free Analytics.