Below is our third post (3 of 5) on combining data mesh with DataOps to foster greater innovation while addressing the challenges of a decentralized architecture. We’ve talked about data mesh in organizational terms (see our first post, “What is a Data Mesh?”) and how team structure supports agility. Let’s take a look at some technical aspects of data mesh so we can work our way towards a pharmaceutical industry application example.

Implementing a data mesh does not require you to throw away your existing architecture and start over. The data industry has a wide variety of approaches and philosophies for managing data: the Inmon corporate information factory, the Kimball methodology, star schemas, the data vault pattern (which can be a great way to store and organize raw data), and more. Data mesh does not replace or require any of these.

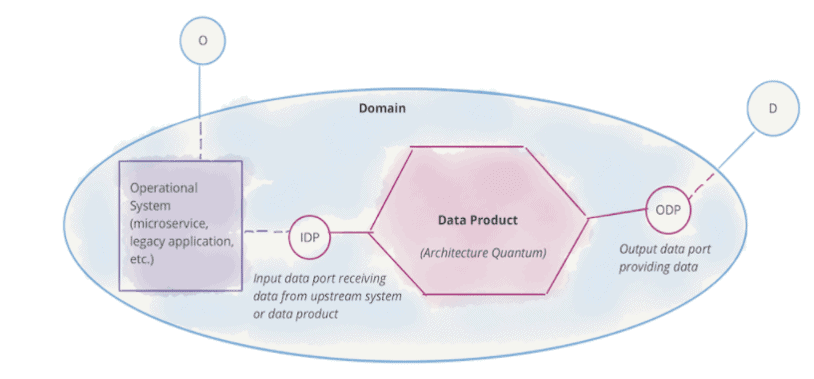

Figure 1: Looking inside a data mesh domain. Source: Thoughtworks

The data mesh is focused on building trust in data and promoting the use of data by business users who can benefit from it. In essence, a domain is an integrated data set and a set of views, reports, dashboards, and artifacts created from the data. The domain also includes code that acts upon the data, including tools, pipelines, and other artifacts that drive analytics execution. The domain requires a team that creates, updates, and runs it, and we can’t forget metadata: catalogs, lineage, test results, processing history, and so on.

Instead of having a giant, unwieldy data lake, the data mesh breaks up the data and workflow assets into controllable and composable domains with inherent interdependencies. Domains are built from raw data and/or the output of other domains. Figure 1 shows a simplified diagram of a domain receiving input data from an upstream source like an operational system (O) and supplying data (D) to a customer or consumer.

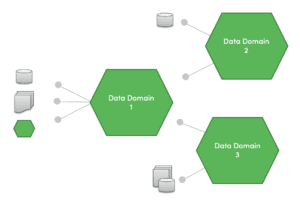

Figure 2: Interdependent domains pose a clear order-of-operations challenge in building systems.

There’s a clear order-of-operations challenge in building systems based on interdependent domains. For example, in the domain diagram in Figure 2, imagine that domain 1 has a list of mastered customers, which is utilized by domains 2 and 3. There’s an implied producer-consumer relationship between domain 1 and each of its consumers: domains 2 and 3 cannot be built correctly until domain 1 has produced its output. The system needs a way to enforce this order of operations without slowing down the domain teams.
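The producer-consumer ordering described above can be sketched as a topological sort. This is a minimal illustration, not how any particular platform does it: the `dependencies` map and the `build_order` helper are hypothetical names, and the only relationships encoded are the ones stated in the text (domains 2 and 3 consume from domain 1).

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical dependency map: each domain maps to the set of
# domains whose output it consumes.
dependencies = {
    "domain_1": set(),          # mastered customers, built from raw data
    "domain_2": {"domain_1"},   # consumes the mastered customer list
    "domain_3": {"domain_1"},   # consumes the mastered customer list
}


def build_order(deps):
    """Return one valid order in which every domain runs after its producers."""
    return list(TopologicalSorter(deps).static_order())


order = build_order(dependencies)
print(order)  # domain_1 always comes first; domains 2 and 3 may appear in either order
```

Because domains 2 and 3 do not depend on each other, either can run first (or both in parallel) once domain 1 has finished. A circular dependency between domains would raise `graphlib.CycleError` rather than produce an order.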

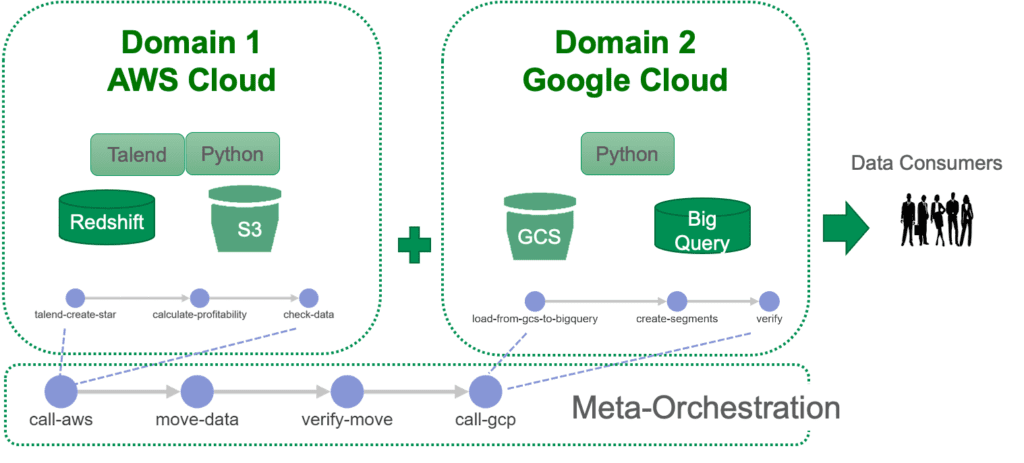

DataOps Meta-Orchestration

It’s also very important to make domains composable and controllable, and these are two of the major value propositions of DataOps automation. Meta-orchestration is a feature that contributes to both composability and control. Unlike generic orchestration tools, DataKitchen Platform orchestration connects to the existing ecosystem of data analytics, data science and data engineering tools. It can orchestrate a hierarchy of directed acyclic graphs (DAGs) that span domains, and it integrates testing at each step of processing. We call this collection of capabilities observable meta-orchestration.

In Figure 3, we show two domains in disparate locations using different toolchains. The analytics delivered to users require local orchestrations within each domain to execute with an order-of-operations dependency. A horizontal data pipeline spans the domains and delivers analytics to users. The top-level meta-orchestration manages the order of operations and the integration of domain capabilities into a coherent whole. The local domain teams can focus on their local orchestrations without worrying about how their work fits into the bigger picture. When a domain team makes a schema change or some other change that could impact other domains, DataOps automated testing, incorporated into meta-orchestration, verifies the updated analytics within the system context.

Figure 3: The DataKitchen DataOps Platform meta-orchestrates horizontal data pipelines across domains, teams, toolchains and data centers.
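To make the idea concrete, here is a minimal sketch of a top-level orchestration that runs local domain orchestrations in dependency order and applies tests after each step. It is not the DataKitchen Platform API: `DomainStep`, `meta_orchestrate`, and the example functions are hypothetical names, and each local orchestration is reduced to a plain Python callable.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Callable


@dataclass
class DomainStep:
    """One domain's local orchestration plus the tests applied to its output."""
    name: str
    run: Callable[[dict], dict]  # receives upstream outputs keyed by domain name
    tests: list[Callable[[dict], bool]] = field(default_factory=list)
    upstream: set[str] = field(default_factory=set)


def meta_orchestrate(steps: list[DomainStep]) -> dict[str, dict]:
    """Run every step after its upstream steps; stop at the first failing test."""
    by_name = {step.name: step for step in steps}
    graph = {step.name: step.upstream for step in steps}
    results: dict[str, dict] = {}
    for name in TopologicalSorter(graph).static_order():
        step = by_name[name]
        inputs = {up: results[up] for up in step.upstream}
        output = step.run(inputs)
        for test in step.tests:
            if not test(output):
                label = getattr(test, "__name__", repr(test))
                raise RuntimeError(f"test '{label}' failed in {name}")
        results[name] = output
    return results


# Hypothetical local orchestrations and tests for two domains.
def master_customers(_inputs):
    return {"customers": ["acme", "globex"]}


def build_sales_report(inputs):
    customers = inputs["domain_1"]["customers"]
    return {"rows": [{"customer": c, "orders": 0} for c in customers]}


def has_customers(output):
    return len(output["customers"]) > 0


def has_rows(output):
    return len(output["rows"]) > 0


steps = [
    DomainStep("domain_2", build_sales_report, [has_rows], {"domain_1"}),
    DomainStep("domain_1", master_customers, [has_customers]),
]
results = meta_orchestrate(steps)
```

Even though `domain_2` is listed first, it runs only after `domain_1` has produced output and passed its tests. A failing test raises before any downstream domain runs, which is the sketch's stand-in for verifying a change within the system context.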

Meta-orchestration is usually challenging to implement because each autonomous domain chooses its own toolchain, and coordination between domains is performed through meetings and impact review. The DataKitchen Platform greatly simplifies this process. It enables a data engineer to write orchestrations and tests for the respective domains within the common, unifying context of the DataKitchen superstructure. The data engineer does not have to be an expert in the local domain toolchains. DataKitchen also makes it possible to add many types of tests without writing code – through configuration using a user interface. DataKitchen makes it much easier to coordinate inter-domain dependencies and assert control with a high level of observability.

DataOps Composability

Meta-orchestration can also be used to build composable systems. Data engineers can write orchestrations that spin up Kitchens – self-service sandboxes used for domain development and inter-domain testing. Creating and closing Kitchens on demand eliminates one of the biggest bottlenecks in data analytics development: waiting for data and machine resources to be instantiated so that a project can begin. Kitchens also improve release efficiency by moving analytics from development to production with minimal manual effort.

In principle, none of the domain teams should need to be experts in the entire system, but the reality is that local changes can unintentionally impact other domains. DataOps automation makes it easy to spin up Kitchens that replicate the end-to-end system environment so that domains can test code changes in an environment that matches the production release environment. With DataKitchen’s self-service infrastructure-as-a-platform capabilities, the domain teams stay autonomous and focused on their data product.

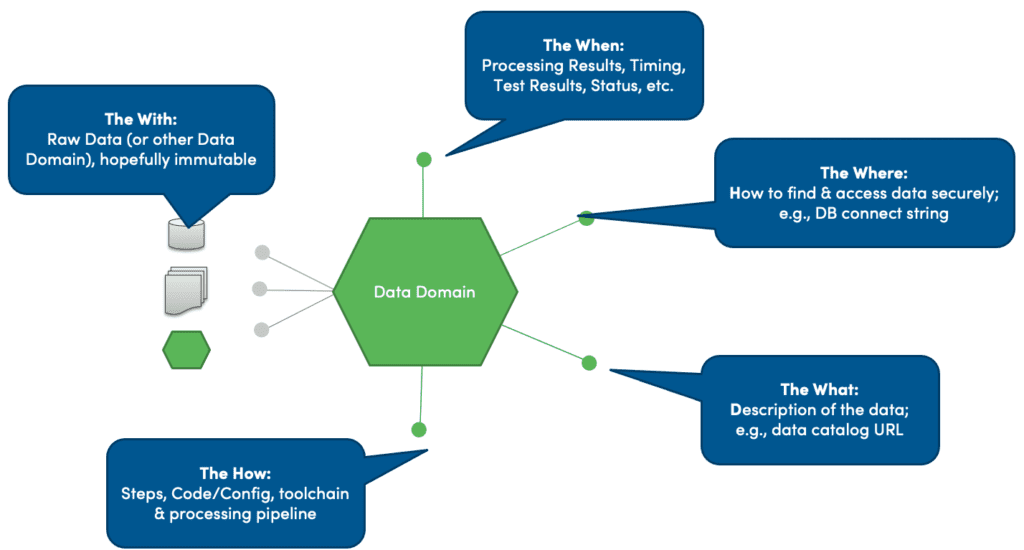

The Four “W’s” and an “H” of Domains

The consumers and customers of a domain can look at it as a black box. They don’t have to think about the domain’s internal complexity. The domain is accessed through its external interfaces, which can be easily remembered as the four “W’s” and an “H”:

Figure 4: The four “W’s” and an “H” of interfacing with a domain

- What is in the domain – description of the data, e.g., data catalog URL

- Where is the data – how to find and access the data securely, e.g., DB connect string

- When was the domain created/tested – data process lineage, including artifacts like processing results, timing, test results, status, logs, etc.

- With what components was the domain created – the set of raw data (or other data domains) used, ideally immutable

- How was the domain created – steps, code/configuration, toolchain, and processing pipeline used to build the data

Figure 5: Domain interfaces as URLs

It’s convenient to publish a set of URLs that provide access to domain-related data and services. How does one get access to a domain? How does one edit the catalog? How does a customer get status? Where is the source code that was used? A published set of URLs that access domains allows your team to better collaborate and coordinate. Figure 5 shows a domain with associated URLs that access key tools and orchestrations.
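One way to picture a published interface is as a small record with one entry per question. The sketch below is illustrative only: the `DomainInterface` class, the `missing_interfaces` helper, and every `example.com` URL are placeholders invented for this example, not real endpoints or part of any product.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class DomainInterface:
    """The four W's and an H, each published as a URL (all values hypothetical)."""
    what: str       # description of the data, e.g. data catalog
    where: str      # how to find and access the data securely
    when: str       # lineage: processing results, timing, test results, logs
    with_what: str  # raw data or upstream domains used to build this domain
    how: str        # steps, code/configuration, toolchain, pipeline


customer_domain = DomainInterface(
    what="https://example.com/domains/customers/catalog",
    where="https://example.com/domains/customers/access",
    when="https://example.com/domains/customers/runs",
    with_what="https://example.com/domains/customers/sources",
    how="https://example.com/domains/customers/source-code",
)


def missing_interfaces(domain: DomainInterface) -> list[str]:
    """List the interface questions a domain has not yet answered."""
    return [name for name, url in asdict(domain).items() if not url]


print(missing_interfaces(customer_domain))
```

A check like `missing_interfaces` could run whenever a domain is published, so that consumers never encounter a domain that leaves one of the five questions unanswered.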

It’s helpful when implementing a data mesh for each domain to leverage horizontal capabilities that address issues of order-of-operations, composability, control and inter-domain communication. Managing these processes and workflows is the primary purpose of DataOps. While data mesh is usually discussed in terms of team organization and architecture, we believe that automating domain development and operations processes is equally important. DataOps automation provides the helping hand that enables data mesh to effortlessly deliver on its promise.

At this point in our blog series, we have talked about data mesh from an organizational and technical perspective. We’ve reviewed the promises and challenges related to data mesh and made the initial case for DataOps as a data-mesh enabler. In our next post, we will dive even deeper and explore data mesh through the lens of a pharmaceutical industry data analytics system. If you missed any part of this series, you can return to the first post here: “What is a Data Mesh?”