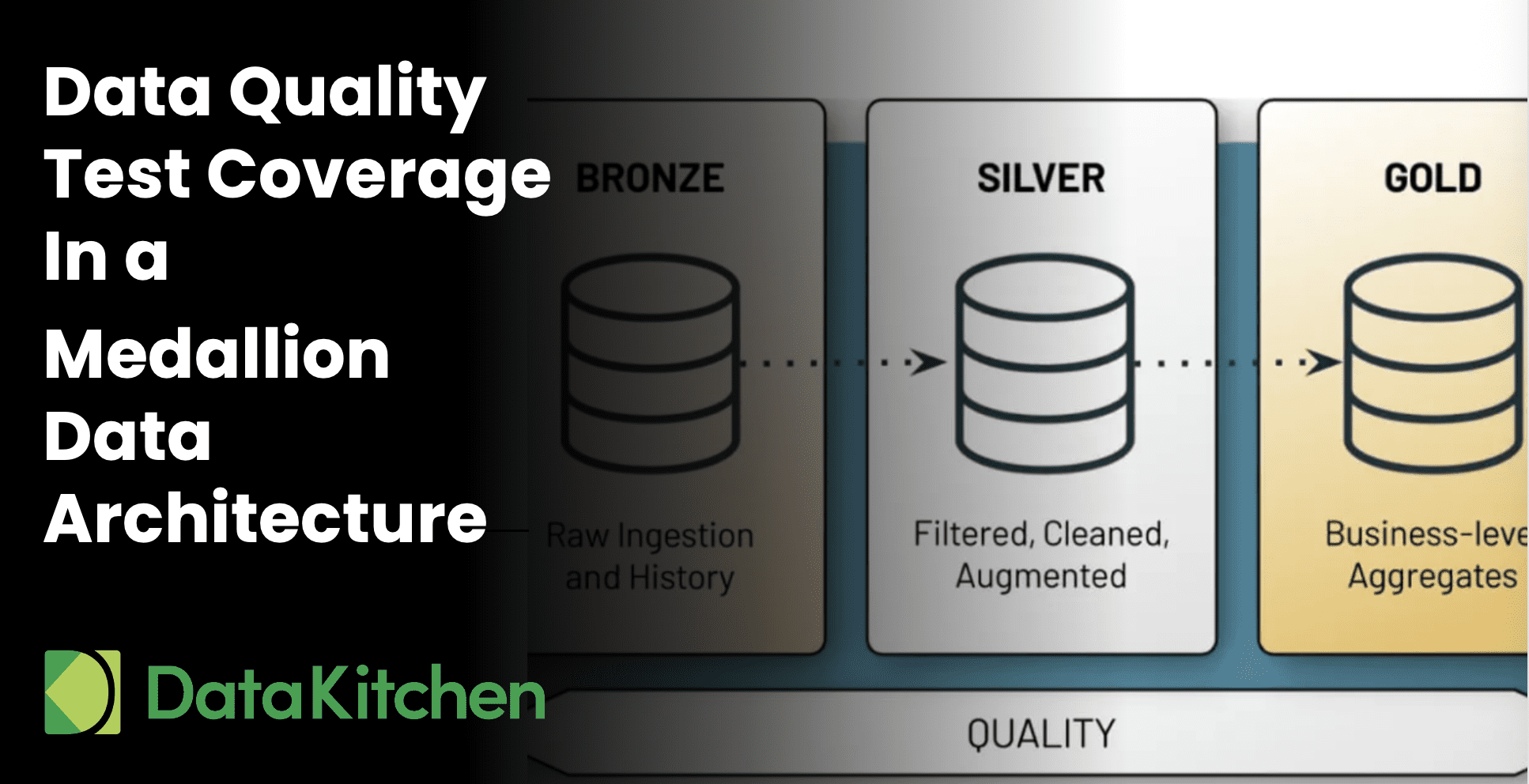

For data engineering teams serious about delivering production-grade data products, implementing systematic test coverage across their Medallion architecture represents not only a technical improvement but a fundamental shift toward sustainable and trustworthy data operations.

Critical Data Elements: Your Shortcut to Data Governance That Actually Works

💥 80% of data governance initiatives fail. Not because of tools. Not because of frameworks. But because the business isn’t involved, and no one agrees on what data truly matters. That’s where Critical Data Elements (CDEs) change everything.

Webinar: Test Coverage: The Software Development Idea That Supercharges Data Quality & Data Engineering

In an exciting webinar, we talk about the importance of having test coverage across all your tables and tools.

Scaling Data Reliability: The Definitive Guide to Test Coverage for Data Engineers

Let us show you how to implement full-coverage automatic data checks on every table, column, tool, and step in your delivery process, and how you can create thousands of tests in a minute using open source tools.

The Data Quality Revolution Starts with You

Your organization’s data quality transformation is waiting for someone to take the first step. The open source tools are available, the methodologies are proven, and the need is obvious. The revolution starts with you. What are you waiting for?

When Timing Goes Wrong: How Latency Issues Cascade Into Data Quality Nightmares

Timing is EVERYTHING. How Latency Issues Spawn Data Quality Problems

Webinar: A Guide to the Six Types of Data Quality Dashboards

In an exciting webinar, we discuss the six major types of Data Quality Dashboards.

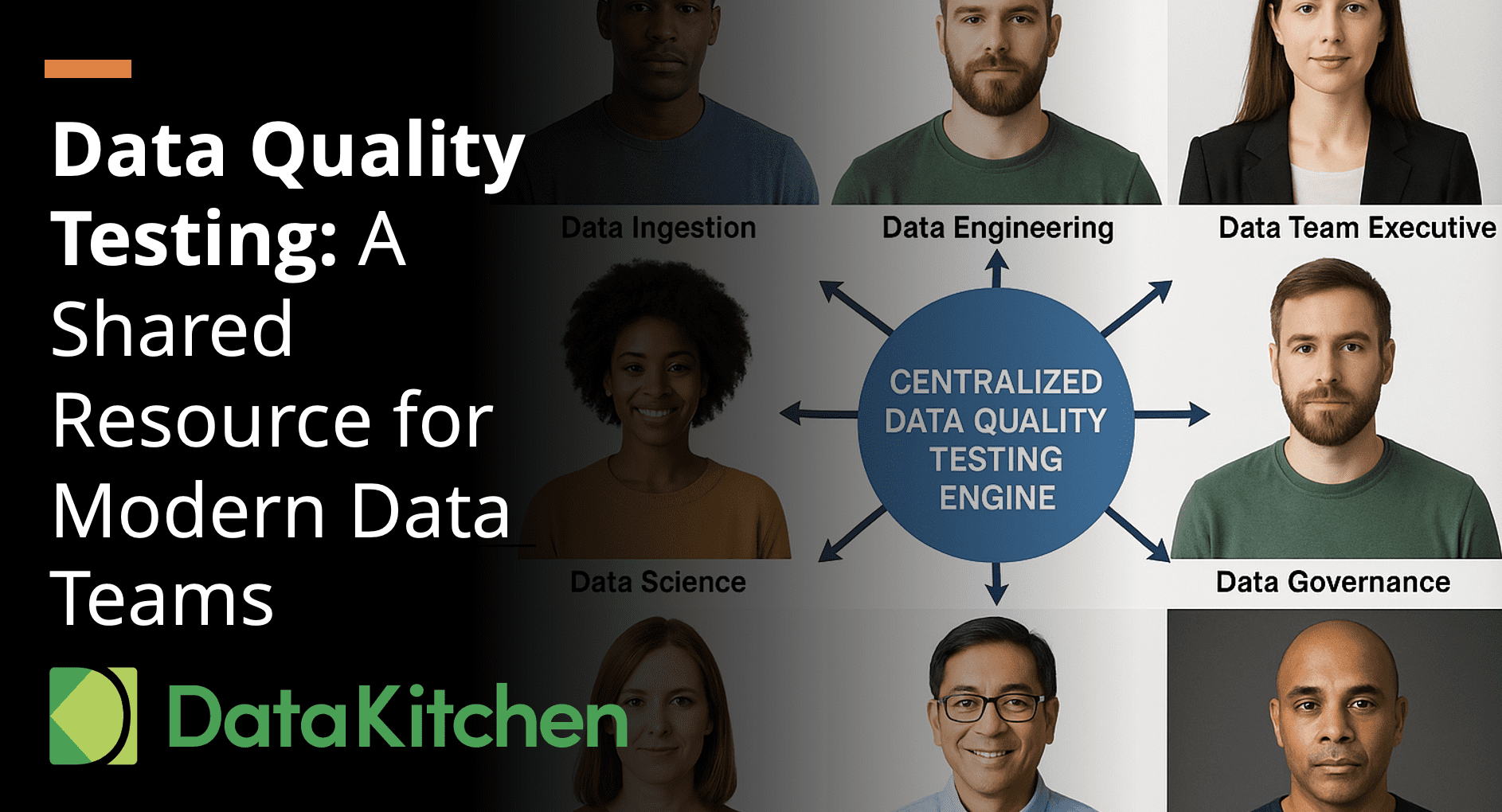

Data Quality Testing: A Shared Resource for Modern Data Teams

Data quality is not a problem any single role can solve in isolation. The complexity and scale of modern data ecosystems necessitate a collaborative approach, where quality testing serves as a shared infrastructure across all data and analytics roles.

How I Broke Our SLA and Delighted Our Customer

Failing the SLA was the price we paid for trust. And it was worth every second.

Is Your Team in Denial about Data Quality? Here’s How to Tell

Data quality problems are ignored, rationalized, or swept aside. How do you tell if your team is in denial about data quality? Uncle Chip has provided a bingo card … play with your data team!