Data Quality Testing: A Shared Resource for Modern Data Teams

In today’s AI-driven landscape, where data is king, every role in the modern data and analytics ecosystem shares one fundamental responsibility: ensuring that incorrect data never reaches business customers. Whether you’re a Data Engineer building ETL pipelines, a Data Scientist developing predictive models, or a Data Steward ensuring compliance, we all want the same outcome: data that is trustworthy, accurate, and understandable.

The reality is stark: no one in your organization wants misleading data to reach business decision-makers. Yet ironically, data quality testing often remains isolated in the hands of a few engineers or specialists, disconnected from the broader collaborative framework that defines a high-functioning data team.

That must change.

The Data Team Is Diverse—But Unified By the Need for Quality

A modern data team is a mosaic of specialized roles. According to Gartner’s breakdown of analytics and data roles, data teams now span far beyond traditional data engineers and business intelligence (BI) analysts. We have ingestion engineers, analytics engineers, stewards, governors, modelers, owners, scientists, product managers, compliance officers, and executives. This proliferation of roles is necessary because it reflects the growing complexity and business criticality of data. But it also introduces a problem.

Each role touches data differently. And each role encounters the impact of poor data quality in different ways.

Yet they are united by the same pain: quality issues are everyone’s problem, and solving them benefits everyone. Quality tests improve productivity, reduce stress, and restore the trust that’s often eroded by broken dashboards, missed alerts, or misaligned metrics.

So, how can team members with such varied responsibilities all participate in data quality without devolving into chaos or passing the buck?

Start with what they have in common. Whatever their day-to-day responsibilities and technical focus, every role feels the same consequences when data quality fails:

- Delayed project deliveries as teams spend time investigating and fixing data issues

- Reduced confidence in analytics among business stakeholders

- Increased operational overhead from manual data validation and correction

- Compliance risks when governance standards aren’t met

- Poor business decisions based on inaccurate information

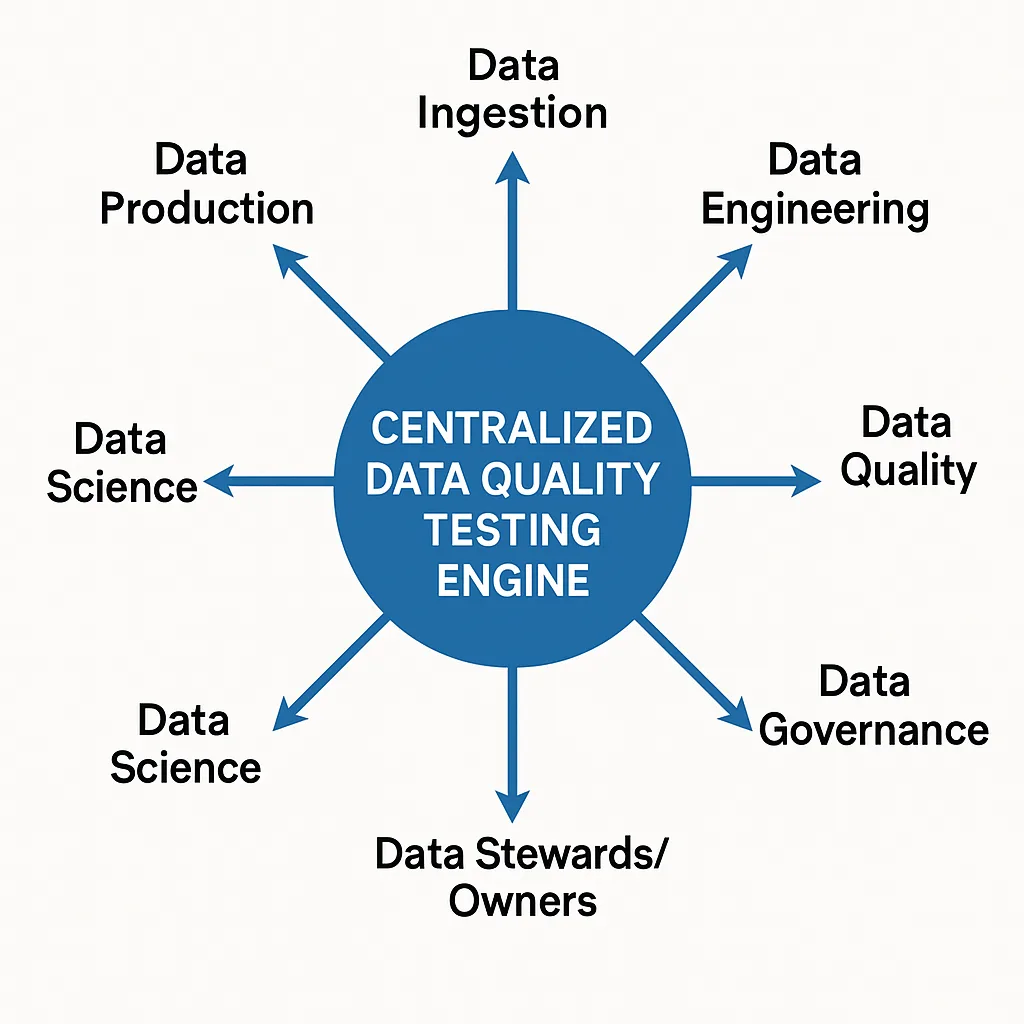

The solution isn’t for each role to develop isolated data quality processes. The answer: a centralized data quality testing engine—shared, visible, extensible, and integrated across your data lifecycle.

One Engine, Many Roles: How Different Roles Engage with Data Quality Testing

A centralized approach to data quality doesn’t mean central control—it means shared infrastructure. It provides everyone with a standard foundation, even as they utilize it in role-specific ways. Every data team member needs to interact with data quality testing, but in different ways depending on their responsibilities and expertise:

Data Ingestion Teams: Data ingestion specialists use quality tests to identify errors in source data before it propagates downstream. They configure tests to catch schema changes, missing data, and format inconsistencies at the earliest possible point in the data pipeline.

Data Engineering Teams: Data Engineers leverage quality testing to find problems in data warehouse ETL jobs both during development and in production environments. They write tests for data transformations, join operations, and aggregation logic to ensure their pipelines produce accurate results.

Data Quality Specialists: Dedicated Data Quality professionals use testing frameworks to score data sources and influence source data improvement. They establish quality metrics, set thresholds, and collaborate with upstream systems to identify and address the root causes of data issues.

Data Governance Teams: Data Governance professionals employ quality testing as a means to enhance data catalogs with high-quality metadata. Test results provide objective measures of data fitness for use and help enforce data governance policies across the organization.

Data Stewards and Data Owners: Data Stewards and Owners need to view and understand data quality test results to fulfill their accountability for data accuracy. They use quality dashboards to monitor their domains and take corrective action when issues arise.

Data Science Teams: Data Scientists use quality testing as a way to validate data for predictive models. They run tests to check for data drift, feature completeness, and statistical properties that could impact model performance.

Data Production Teams: Production support teams use quality testing as a monitoring tool for production data jobs. They set up automated quality checks that alert them to issues before they impact business users.

Data Team Executives: Leadership uses quality testing metrics to assess test coverage and organizational risk. They track quality trends, coverage gaps, and the business impact of data quality initiatives.
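To make the role descriptions above a little more concrete, here is a minimal sketch of the kind of check an ingestion specialist might configure: it flags schema changes, missing values, and format inconsistencies in a batch of incoming rows. The feed, its column names, and the `check_batch` function are hypothetical and chosen only for illustration; this is not how TestGen or any particular tool implements such tests.

```python
import re
from typing import Any

# Hypothetical expected schema for an incoming "orders" feed (an assumption).
EXPECTED_COLUMNS = {"order_id", "customer_id", "order_date", "amount"}
ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def check_batch(rows: list[dict[str, Any]]) -> list[str]:
    """Return human-readable findings for one batch of ingested rows."""
    findings: list[str] = []
    for i, row in enumerate(rows):
        # Schema change: columns added or dropped by the source system.
        missing_cols = sorted(EXPECTED_COLUMNS - set(row))
        extra_cols = sorted(set(row) - EXPECTED_COLUMNS)
        if missing_cols or extra_cols:
            findings.append(
                f"row {i}: schema change "
                f"(missing={missing_cols}, extra={extra_cols})"
            )
            continue  # value checks are meaningless on a broken schema

        # Missing data: expected columns present but empty.
        empty = sorted(c for c in EXPECTED_COLUMNS if row[c] in (None, ""))
        if empty:
            findings.append(f"row {i}: missing values in {empty}")

        # Format inconsistency: dates must look like YYYY-MM-DD.
        order_date = row["order_date"]
        if isinstance(order_date, str) and order_date:
            if not ISO_DATE.fullmatch(order_date):
                findings.append(
                    f"row {i}: order_date {order_date!r} is not YYYY-MM-DD"
                )
    return findings
```

Running checks like these at the point of arrival means a renamed column or a changed date format is reported once, at the source, rather than rediscovered separately by each downstream role.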

A centralized data quality testing engine supports use cases across the entire data lifecycle. Production support teams run continuous monitoring tests to detect problems as they occur and trigger alerts before business users are affected, while data ingestion specialists validate newly arrived data to confirm that source systems meet the expected standards and formats. Development teams integrate quality tests into their workflows as regression tests to prevent code changes from introducing new data issues, and data quality analysts generate objective quality scores that drive improvement initiatives in upstream systems. Governance and compliance teams rely on standardized testing to demonstrate adherence to regulatory requirements and data policies. Finally, operations teams run comprehensive periodic health checks to catch gradual quality degradation that might otherwise go unnoticed until it becomes a critical business problem.
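One way to picture the "objective quality scores" mentioned above is as a simple roll-up of test results. The sketch below assumes a very basic scoring rule (the percentage of checked records that passed, per table and overall); the `CheckResult` structure, the test names, and the formula are illustrative assumptions, not the scoring method of any specific product.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CheckResult:
    """Outcome of one quality test (hypothetical structure)."""
    table: str
    test_name: str
    records_checked: int
    records_failed: int


def quality_score(results: list[CheckResult]) -> float:
    """Percentage (0-100) of checked records that passed across all tests."""
    checked = sum(r.records_checked for r in results)
    if checked == 0:
        raise ValueError("no records were checked, so no score can be computed")
    failed = sum(r.records_failed for r in results)
    return round(100.0 * (checked - failed) / checked, 2)


def scores_by_table(results: list[CheckResult]) -> dict[str, float]:
    """Group results by table and score each group separately."""
    grouped: dict[str, list[CheckResult]] = defaultdict(list)
    for result in results:
        grouped[result.table].append(result)
    return {table: quality_score(group) for table, group in grouped.items()}
```

Because every role reads the same numbers, a steward looking at one table and an executive looking at the overall figure are discussing the same underlying test results.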

The Reality: Time and Resource Constraints

Despite the clear value of data quality testing, team members are often too busy with core responsibilities to write comprehensive tests. Data Engineers focus on building pipelines, Data Scientists on developing models, and Analysts on creating reports and dashboards.

Even when team members recognize the importance of quality testing, they may feel disconnected from the business context needed to write meaningful tests. A Data Engineer might know how to test for null values, but may not understand the business rules that determine whether a customer record is valid.
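The gap between a technical check and a business rule can be shown in a few lines. In this sketch, the null check is something any engineer could write without context, while the validity rule (a signup date that is not in the future and a country the business actually serves) is a made-up example of knowledge that usually lives with a Data Steward or Data Owner. All field names and rules here are assumptions.

```python
from datetime import date

# Hypothetical business knowledge: the markets this company serves.
SERVED_COUNTRIES = {"US", "CA", "GB"}


def has_no_nulls(record: dict) -> bool:
    """Generic technical check: every field is populated."""
    return all(value is not None for value in record.values())


def is_valid_customer(record: dict, today: date) -> bool:
    """Business rule (illustrative): plausible signup date, served market."""
    return (
        record["signup_date"] <= today
        and record["country"] in SERVED_COUNTRIES
    )


# A record that is technically complete but wrong for the business.
record = {
    "customer_id": "C42",
    "signup_date": date(2031, 1, 1),  # in the future relative to `today`
    "country": "US",
}
today = date(2025, 1, 1)

passes_null_check = has_no_nulls(record)
passes_business_rule = is_valid_customer(record, today)
```

Here the record passes the null check and fails the business rule, which is exactly the kind of issue that slips through when only the people closest to the pipeline write the tests.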

This creates a gap between the need for comprehensive testing and the practical ability of busy data professionals to implement it.

Overcome That Reality: Automate the 70%, Focus on the 30% That Matters Most

DataOps Data Quality TestGen addresses this challenge by providing a centralized testing engine designed for modern data teams. Rather than expecting every role to become a testing expert, TestGen automates the generation of approximately 70% of the tests most organizations need. TestGen functions as a complete DataOps tool supporting the entire quality testing lifecycle:

- Data Profiling & Catalog: Automatically discover and document data structures and relationships

- Dataset Screening: Perform initial assessments of new data sources

- AI Generation: Leverage artificial intelligence to generate 120+ comprehensive validation tests

- Custom Test Development: Support for business-specific tests addressing unique requirements

- Anomaly Detection: Execute tests at scale and identify unusual patterns

- In-Database Execution: Run tests directly within your data warehouse for maximum performance

- Quality Scoring & Dashboards: Generate scores and visualizations for different roles

- Shareable Reports: Create detailed reports integrated with other DataOps tools
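The idea behind in-database execution is that each test is expressed as a query, so only a small result (for example, a count of violating rows) leaves the database. The sketch below illustrates that pattern using Python's built-in `sqlite3` module as a stand-in for a data warehouse. The table names, test names, and SQL are hypothetical, and this is not TestGen's API or its generated SQL.

```python
import sqlite3

# Each test is a query that counts violating rows inside the database.
# Names and SQL are illustrative assumptions.
TESTS = {
    "orders_amount_not_negative": (
        "SELECT COUNT(*) FROM orders WHERE amount < 0"
    ),
    "orders_customer_exists": (
        "SELECT COUNT(*) FROM orders o "
        "LEFT JOIN customers c ON c.customer_id = o.customer_id "
        "WHERE c.customer_id IS NULL"
    ),
}


def run_tests(conn: sqlite3.Connection) -> dict[str, int]:
    """Run every test in the database and return violation counts by name."""
    return {
        name: conn.execute(sql).fetchone()[0] for name, sql in TESTS.items()
    }


def demo_connection() -> sqlite3.Connection:
    """Build a small in-memory database with two deliberate problems."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (customer_id TEXT PRIMARY KEY);
        CREATE TABLE orders (order_id INTEGER, customer_id TEXT, amount REAL);
        INSERT INTO customers VALUES ('C1'), ('C2');
        INSERT INTO orders VALUES
            (1, 'C1', 25.0),
            (2, 'C2', -5.0),   -- negative amount
            (3, 'C9', 10.0);   -- customer does not exist
        """
    )
    return conn
```

Calling `run_tests(demo_connection())` reports one violation for each test. Against a real warehouse the same pattern keeps large tables in place and moves only the counts.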

Automated generation covers the foundational tests. For the rest, TestGen offers a user interface that lets team members with business knowledge fine-tune tests without writing code. Data Stewards can adjust acceptable ranges, Data Owners can modify validation rules based on domain expertise, and Analysts can configure business-specific logic.

Benefits Across the Organization

When implemented as a shared resource, data quality testing delivers transformative benefits across the entire organization:

- Improved productivity: Teams spend less time investigating issues and more time delivering business value, with automated test generation eliminating the overhead of writing basic tests from scratch.

- Reduced stress: Data professionals gain confidence that quality issues will be caught systematically rather than discovered by frustrated business users at critical moments.

- Enhanced customer trust: Consistent, proactive testing builds stakeholder confidence in data accuracy and reliability.

- Better collaboration: A shared platform creates a common vocabulary and metrics for communicating about quality issues and improvements.

- Organizational learning: Perhaps most importantly, centralized testing creates visibility into quality patterns that inform strategic process improvements and technology investments across the data ecosystem.

Conclusion: Data Quality Is a Team Sport—Let’s Equip Everyone

Data quality is not a problem any single role can solve in isolation. The complexity and scale of modern data ecosystems necessitate a collaborative approach, where quality testing serves as a shared infrastructure across all data and analytics roles.

By implementing a centralized testing engine that automates foundational tests while providing intuitive customization capabilities, organizations ensure that every role has access to the necessary quality testing without requiring every professional to become a testing expert.

The result is higher-quality data, more productive teams, and greater trust in the analytics that drive business decisions. In an era where data is increasingly central to competitive advantage, investing in shared data quality testing infrastructure is not just a technical necessity—it’s a strategic imperative.

Data quality testing is everyone’s job. Let’s ensure that everyone has access to the shared infrastructure and tools necessary for success.

Ready to implement centralized data quality testing in your organization? DataOps Data Quality TestGen provides the automated test generation and collaborative features needed to make data quality a shared organizational strength rather than an individual burden. It’s open source: install it now!