DataOps Mission Control

Data teams often can’t answer basic questions about the many pipelines they have in production and in development. For example:

Data

- Is there a troublesome pipeline (lots of errors, intermittent errors)?

- Did my source files/data arrive on time?

- Is the data in the report I am looking at “fresh”?

- Is my output data the right quality?

- Do I have a troublesome data supplier?

Jobs

- Did Job X run after all the jobs in Group Y were completed?

- How many jobs ran yesterday, and how long did they take?

- How many jobs will run today, how long will they take, and when are they running?

- Did every job that was supposed to run, actually run?

Quality/Tests/Trust

- How many tests do I have in production?

- What are my pass/fail metrics over time in production?

Tools/Models/Dashboards

- Is my model still accurate?

- Is my dashboard displaying the correct data?

Root Cause

- Where did the problem happen?

- Is the delay because the job started late, or did it take too long to process?
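The last root-cause question can be answered mechanically once each run's schedule and actual timestamps are recorded. Below is a minimal Python sketch of that check. The `JobRun` fields, the `explain_delay` helper, and the five-minute tolerance are all illustrative assumptions, not part of any particular product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class JobRun:
    """One observed run of a job plus its schedule (hypothetical field names)."""
    name: str
    expected_start: datetime
    expected_duration: timedelta
    actual_start: datetime
    actual_end: datetime


def explain_delay(run: JobRun, tolerance: timedelta = timedelta(minutes=5)) -> str:
    """Classify a run as on time, started late, ran long, or both."""
    # How far behind schedule the job began.
    start_lag = run.actual_start - run.expected_start
    # How much longer than planned the processing itself took.
    overrun = (run.actual_end - run.actual_start) - run.expected_duration

    started_late = start_lag > tolerance
    ran_long = overrun > tolerance
    if started_late and ran_long:
        return "started late and ran long"
    if started_late:
        return "started late"
    if ran_long:
        return "ran long"
    return "on time"
```

Separating start lag from processing overrun points the team at the right place to look: an upstream dependency or scheduler in the first case, the job itself in the second.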

They also can’t answer a similar set of questions about their development process:

Deploys

- Did the deployment work?

- How many deploys of artifacts/code did we do?

- What is the average number of tests per pipeline?

- How many deploys failed in the past?

- How many models and dashboards were deployed?

Environments

- What code is in what environment?

- Looking across my entire organization, how many pipelines are in production? How many are in development?

Testing/Impact/Regressions

- How many tests ran in the QA environment? How many passed, failed, or raised a warning?

- How often do we change the production schema?

Productivity/Team/Projects

- How many tickets did we release?

- Which tickets did we release, per project?

- For a particular project, what pipelines, tests, deploys and tickets are happening?

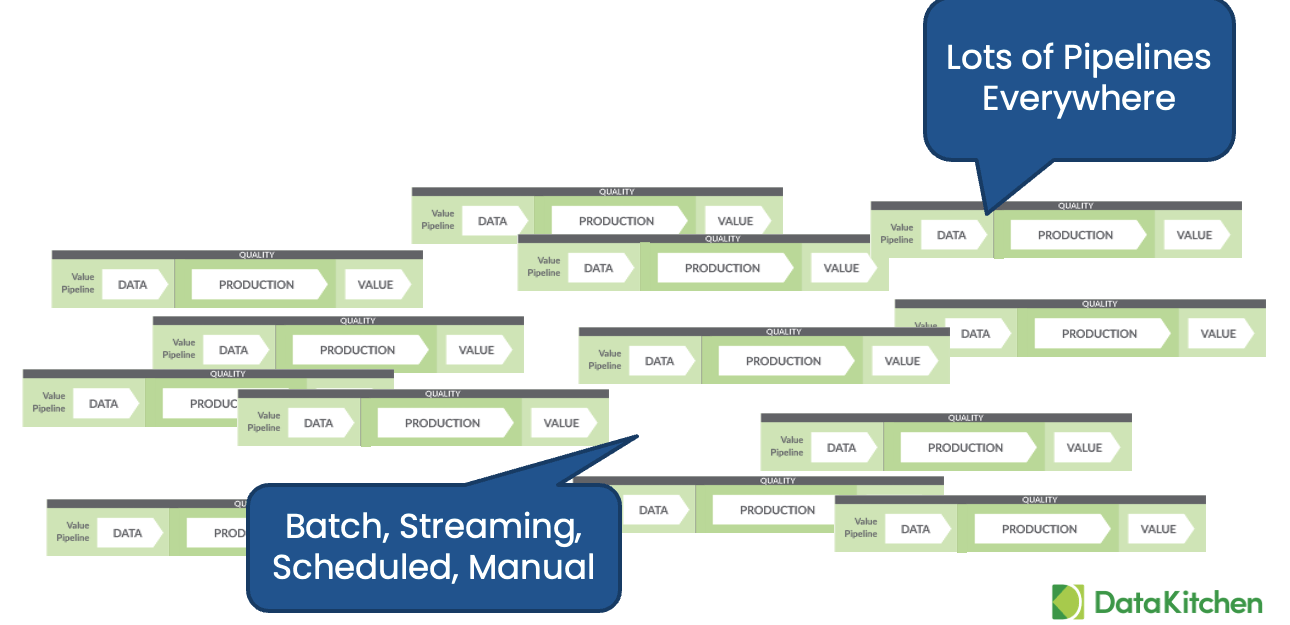

Why does this happen? Why is this problem not solved today?

- Teams Are Very Busy: teams are already busy and stressed, and they know they are not meeting their customers’ expectations

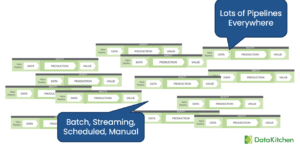

- They have a Low Change Appetite: teams have complicated data architectures and tools already in place. They fear changing what is already running.

- There is no single pane of glass: no ability to see across all tools, pipelines, data sets, and teams in one place. They have hundreds or thousands of existing pipelines, jobs, and processes running already.

- They don’t know what, where, and how to check. They need to make sure their customers are happy with the resulting analytics. But they often don’t know the salient points to check or test.

- They live with lots of blame and shame without shared context. Problems are raised after the customer has found them, with panicked teams running around trying to find who and what is responsible.

How do other organizations solve this risk problem? Space flight is among the riskiest undertakings there is. How do SpaceX and NASA manage that risk? They build a Mission Control:

- Build a UI with information about every aspect of the flight

- Use that information as the basis for making decisions and communicating to the interested parties

- Store information for after-the-fact analysis

- Automatically alert on and flag problems
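Applied to data pipelines, the same pattern can be sketched in a few lines of Python: record every event in one place, keep the history for later analysis, and flag errors the moment they arrive. The `Event` and `EventLog` names, the status values, and the rule that only `"error"` raises an alert are assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Event:
    """A single observation about a pipeline (hypothetical shape)."""
    pipeline: str
    status: str  # assumed values: "ok", "warning", "error"
    message: str
    at: datetime


@dataclass
class EventLog:
    """One shared place to store events and flag problems."""
    events: list = field(default_factory=list)
    alerts: list = field(default_factory=list)

    def record(self, pipeline: str, status: str, message: str,
               at: Optional[datetime] = None) -> Event:
        event = Event(pipeline, status, message, at or datetime.now(timezone.utc))
        self.events.append(event)      # keep everything for after-the-fact analysis
        if status == "error":
            self.alerts.append(event)  # flag errors immediately
        return event

    def error_counts(self) -> dict:
        """Number of recorded errors per pipeline."""
        return dict(Counter(e.pipeline for e in self.events if e.status == "error"))
```

A real system would route alerts to people rather than to a list, but even this much makes it possible to answer questions such as which pipeline is the troublesome one.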

A New Concept:

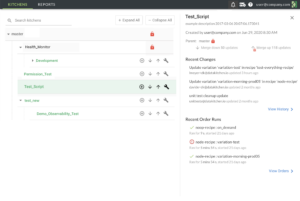

DataOps Mission Control’s goal is to provide visibility into every journey that data takes from source to customer value, across every tool, environment, data analytics team, and customer, so that problems are detected, localized, and raised immediately. How? By testing and monitoring every data analytics pipeline in an organization, from source to value, in development and production. With that visibility, teams can deliver insight to their customers with no errors and a high rate of pipeline change.

If you solve this problem, you will see:

- Less embarrassment: No more finding out from your customers, after the fact, that things are broken

- Less hassle: People can self-serve the production status, which gets them off your back

- More Space to Create: You spend your time building instead of chasing problems and answering simple questions

- A Big Step to Transformation: You can’t focus on delivering customer value if your customers don’t trust the data or your team

To learn more, watch our on-demand webinar.