How do you engineer quality into Data and Analytics Systems?

There has been considerable discussion lately about data contracts and the shift-left approach in data and analytics systems. The manufacturing industry learned decades ago that catching defects early in the production process saves exponentially more money than fixing them after products ship. Today’s data engineering teams face a strikingly similar challenge, yet many organizations continue to discover data quality issues only after their analytics have already influenced critical business decisions. The concepts of “shift-left” and “shift-down” testing, borrowed from manufacturing and software development, respectively, offer a powerful framework for fundamentally rethinking how we approach quality in data and analytics systems.

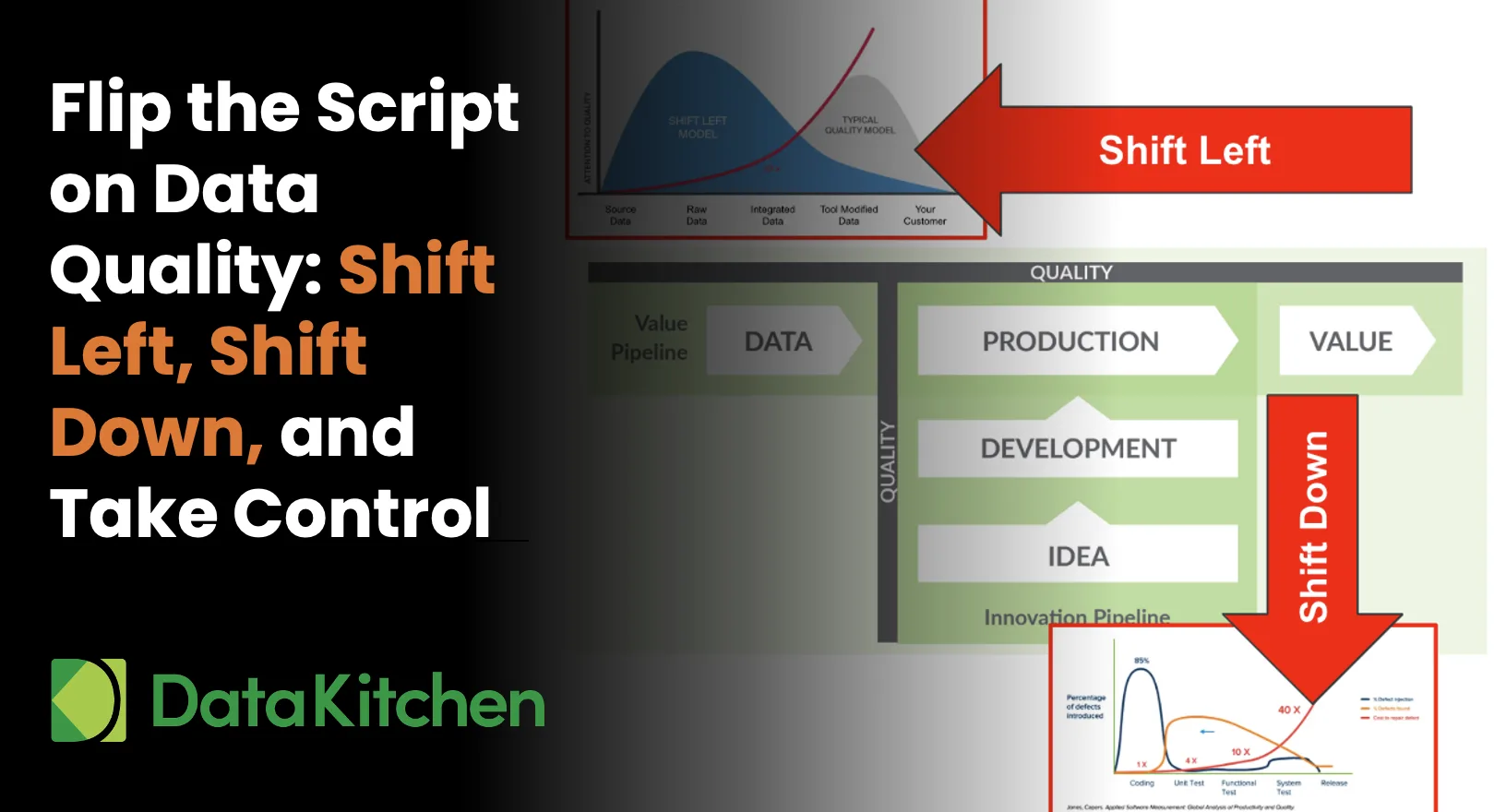

TLDR: Shift-Left, Shift-Down For Testing Data & Analytic Systems

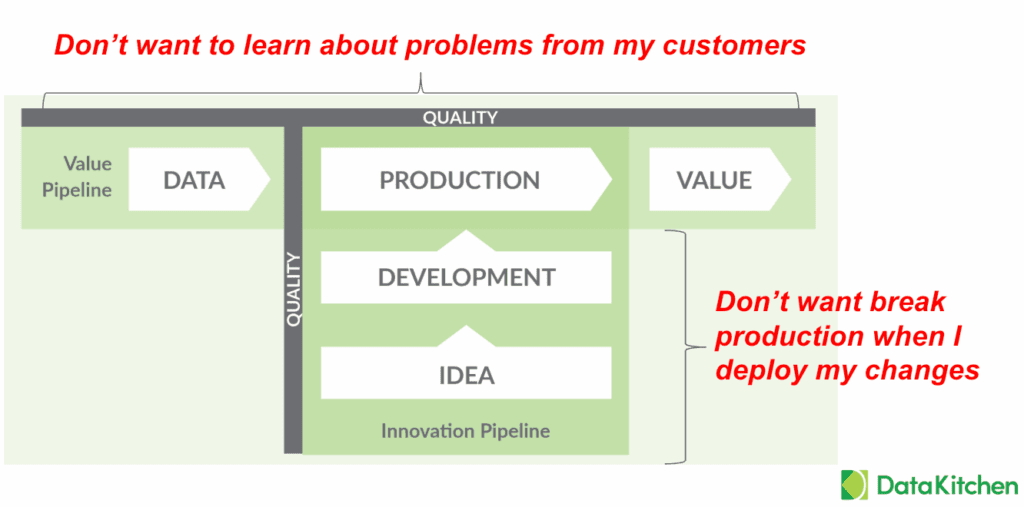

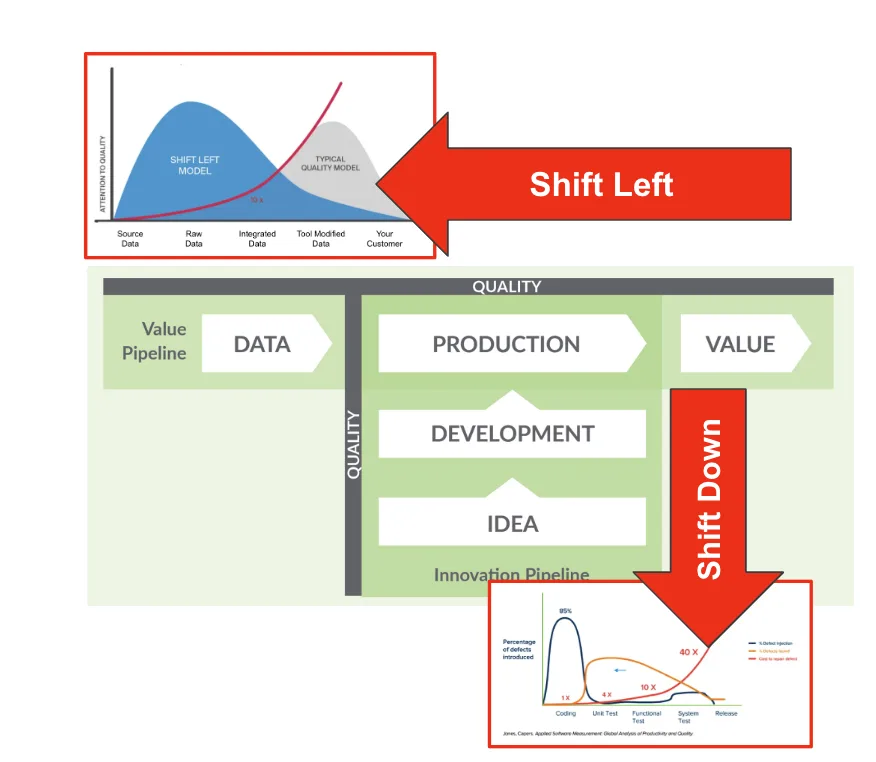

Shift left on the assembly line of data production: create an ‘Andon Cord’ of automated data tests and tool checks on each part of the assembly line to stop production when there is an error. Why? Fewer data errors in production caused by crappy data or broken tools.

Shift down on the deployment path of new code or configuration into production: create regression tests and impact analysis to check the new code before it gets into production. Why? Fewer errors in production caused by crappy code getting into production.

The Assembly Line Parallel: Understanding Data as Manufacturing

Modern data pipelines bear a remarkable resemblance to manufacturing assembly lines. Raw materials enter the system in the form of source data. These materials flow through various transformation stages, involving Python code for data access, SQL and ETL processes for transformation, and R or Python for modeling, as well as visualization tools like Tableau for creating consumable outputs. At each stage, the data undergoes processing that adds value while also introducing potential points of failure.

Just as a manufacturing line produces refined products from raw materials, data pipelines produce charts, graphs, models, and reports from raw data. The parallel extends further when we consider quality control. In manufacturing, quality checks at each stage of production prevent defective components from moving downstream. In data systems, similar checkpoints can prevent corrupted, incomplete, or incorrectly transformed data from propagating through the pipeline and ultimately reaching business users.

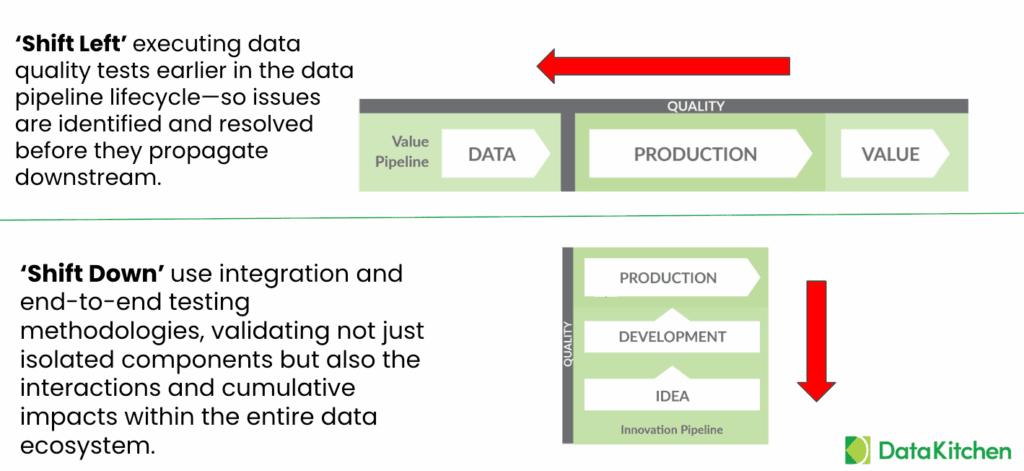

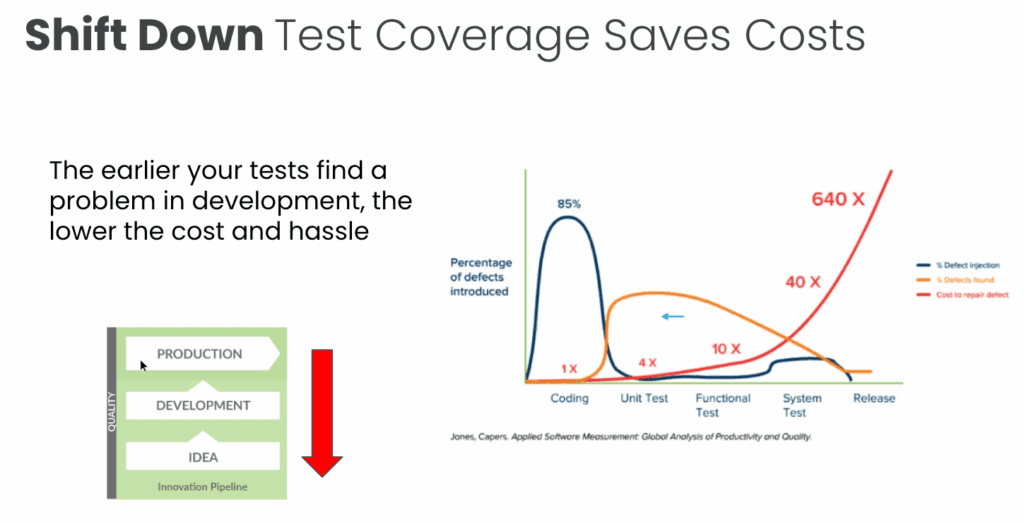

This manufacturing mindset reveals two critical dimensions along which we must shift our testing efforts. The horizontal dimension represents the flow of data through production pipelines, where “shifting left” means moving quality checks earlier in the data production lifecycle. The vertical dimension represents the flow of code and configuration changes from development to production, where “shifting down” refers to implementing comprehensive testing before code changes are deployed to production systems.

Shift Left: The Economics of Early Detection in Production

The concept of shifting left in data quality testing draws inspiration from the Japanese manufacturing concept of the “Andon Cord,” a mechanism that allows any worker on an assembly line to halt production when they detect a quality issue. In data systems, this translates to implementing automated data tests and tool checks at each stage of the pipeline, with the ability to stop data flow when errors are detected.
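As a minimal sketch of the Andon Cord idea, the runner below executes each stage, applies that stage's check, and halts the whole run at the first failure. The names `run_pipeline`, `AndonCordPulled`, and `STAGES` are hypothetical and exist only for illustration; a real orchestrator would supply its own equivalents.

```python
from typing import Callable

Rows = list[dict]
# A stage is (name, transform function, check function).
Stage = tuple[str, Callable[[Rows], Rows], Callable[[Rows], bool]]


class AndonCordPulled(Exception):
    """Raised to halt the pipeline when a stage's check fails."""


def run_pipeline(rows: Rows, stages: list[Stage]) -> Rows:
    """Run each stage in order and stop at the first failed check."""
    for name, transform, check in stages:
        rows = transform(rows)
        if not check(rows):
            # Pull the cord: nothing downstream of this stage runs.
            raise AndonCordPulled(f"check failed after stage '{name}'")
    return rows


# Hypothetical two-stage pipeline used only for illustration.
STAGES: list[Stage] = [
    ("ingest", lambda rows: rows, lambda rows: len(rows) > 0),
    (
        "clean",
        lambda rows: [r for r in rows if r["amount"] is not None],
        lambda rows: all(r["amount"] >= 0 for r in rows),
    ),
]
```

Calling `run_pipeline([{"amount": -1}], STAGES)` raises `AndonCordPulled` after the `clean` stage, so a negative amount never reaches anything downstream.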

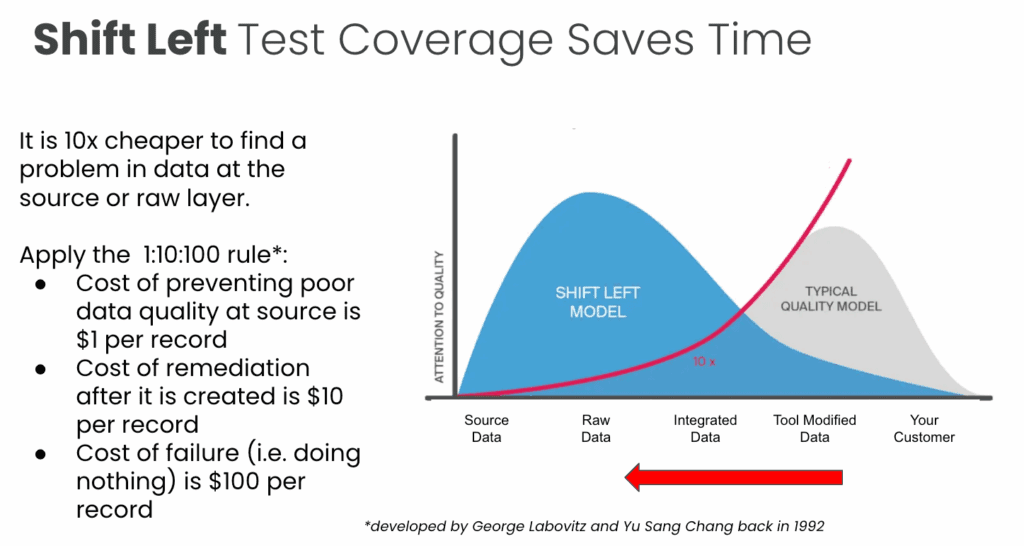

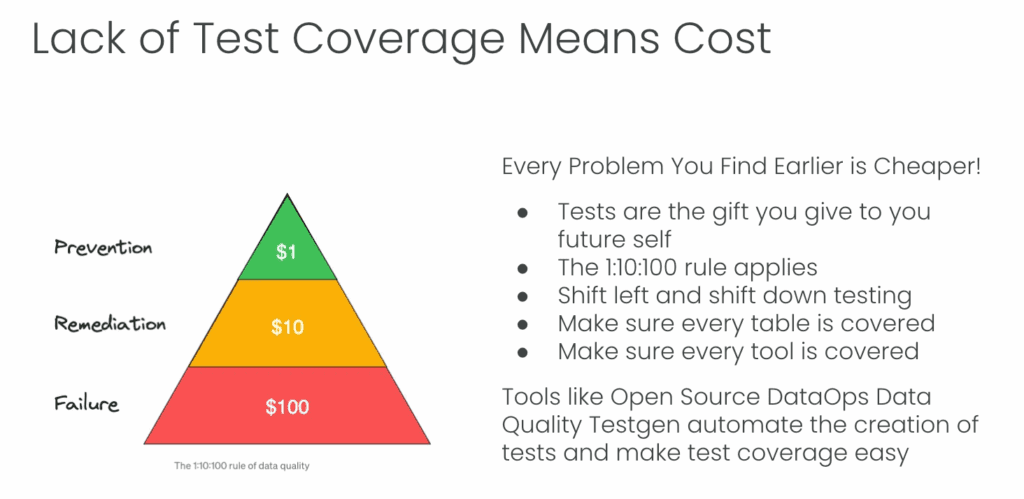

The economic argument for shift-left testing is compelling and aligns with the 1:10:100 rule, as identified by quality experts George Labovitz and Yu Sang Chang. In the context of data quality, this rule suggests that preventing poor data quality at the source costs approximately one dollar per record, remediating data quality issues after the data has been created costs ten dollars per record, and the cost of failure, when poor-quality data reaches production and influences decision-making, approaches one hundred dollars per record.
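To make the arithmetic concrete, here is a small sketch that applies the rule's per-record ratios to a batch of bad records. The dollar figures are the rule's illustrative ratios rather than measured costs, and the stage labels and function name are assumptions made for this example.

```python
# Per-record cost in dollars at each stage, taken from the 1:10:100 rule.
COST_PER_RECORD = {"prevention": 1, "remediation": 10, "failure": 100}


def cost_of_bad_records(bad_records: int, stage: str) -> int:
    """Estimated cost of handling `bad_records` at the given stage."""
    return bad_records * COST_PER_RECORD[stage]


for stage in COST_PER_RECORD:
    print(stage, cost_of_bad_records(5_000, stage))
```

For a hypothetical batch of 5,000 bad records, the estimate grows from 5,000 dollars at the source to 50,000 dollars in remediation and 500,000 dollars once the data has influenced decisions.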

This exponential cost increase occurs because, as data moves through the pipeline, it becomes increasingly integrated with other datasets, embedded in downstream calculations, and ultimately influences business decisions. A simple data type mismatch caught at ingestion might take minutes to fix. The same issue discovered after it has corrupted aggregate calculations, been cached in multiple systems, and influenced executive reports could require days of investigation, correction, and communication to resolve.

Consider a practical scenario where a source system starts sending timestamps in a different timezone without warning. If caught at the ingestion point through shift-left testing, the fix involves updating a single transformation rule. If the issue goes unnoticed in the pipeline, it could lead to incorrect daily aggregations, skewed trend analyses, and fundamentally flawed business metrics. The remediation effort increases as teams must identify all affected downstream calculations, regenerate historical aggregates, and possibly explain incorrect reports that have already been distributed.

Implementing shift-left testing requires a fundamental rethink of how we design data pipelines. Instead of viewing testing as an afterthought or a separate quality assurance phase, tests become essential parts of the data flow itself. This involves adding validation checks immediately after data ingestion to verify schema compliance, data type consistency, and adherence to business rules. It also includes performing statistical tests after transformation steps to ensure data distributions stay within expected ranges, and validating the outputs of machine learning models against baseline metrics before allowing predictions to influence downstream processes.
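Returning to the timezone scenario, an ingestion-time check might look something like the sketch below. It assumes the source sends ISO 8601 timestamps that carry an explicit UTC offset and that the agreed offset is UTC. Both are assumptions for illustration, and `unexpected_offsets` is a hypothetical helper name. If the source sends naive timestamps, this check flags them all, and a statistical check (for example, on the hour-of-day distribution) would be needed to detect a shift.

```python
from datetime import datetime, timedelta


def unexpected_offsets(
    timestamps: list[str], expected: timedelta = timedelta(0)
) -> list[str]:
    """Return timestamps whose UTC offset is missing or differs from `expected`."""
    bad = []
    for raw in timestamps:
        parsed = datetime.fromisoformat(raw)
        # utcoffset() is None for naive timestamps, so those are flagged too.
        if parsed.utcoffset() != expected:
            bad.append(raw)
    return bad


batch = [
    "2024-03-01T12:00:00+00:00",
    "2024-03-01T07:00:00-05:00",  # source silently switched timezone
    "2024-03-01T12:00:00",        # no offset at all
]
print(unexpected_offsets(batch))
```

A non-empty result at ingestion is the signal to stop the load before any daily aggregates are computed from the shifted values.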

Shift Down: Preventing Deployment Disasters in Development

While ‘shift left’ addresses quality issues in the production data flow, ‘shift down’ testing focuses on preventing code and configuration changes from introducing new errors into production systems. This concept, borrowed from software development practices, emphasizes comprehensive testing during the development phase before changes are promoted to production.

The traditional approach in many data organizations involves what might charitably be called “hope-based testing.” Developers make changes to SQL transformations, update Python scripts, or modify ETL configurations, perform cursory manual checks, and deploy to production with fingers crossed. This approach inevitably leads to a familiar pattern: urgent calls about broken dashboards, frantic debugging sessions, and hasty rollbacks that disrupt business operations.

Shift down testing replaces hope with systematic validation. It includes various testing methods, each with a specific role in quality assurance. Integration tests confirm that multiple data processing steps work correctly together, ensuring data flows smoothly between different tools and transformations. End-to-end tests simulate complete data pipelines from ingestion to final output, confirming overall correctness across the entire system. These dynamic tests run on realistic test data that reflects production characteristics, executed in development environments that closely mimic production infrastructure.
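One simple way to put this into practice is a regression comparison: run the current production code and the changed code over the same test dataset, then diff the results by key. The sketch below is a hypothetical helper, not a feature of any particular tool, and it assumes each output row has a unique key column.

```python
def diff_outputs(
    baseline: list[dict], candidate: list[dict], key: str
) -> dict[str, list]:
    """Compare two pipeline outputs row by row, matched on `key`."""
    base = {row[key]: row for row in baseline}
    cand = {row[key]: row for row in candidate}
    return {
        # Keys the new code dropped.
        "missing": sorted(base.keys() - cand.keys()),
        # Keys the new code introduced.
        "added": sorted(cand.keys() - base.keys()),
        # Keys present in both outputs but with different values.
        "changed": sorted(
            k for k in base.keys() & cand.keys() if base[k] != cand[k]
        ),
    }


baseline = [{"id": 1, "total": 10}, {"id": 2, "total": 20}, {"id": 3, "total": 30}]
candidate = [{"id": 1, "total": 10}, {"id": 2, "total": 25}, {"id": 4, "total": 40}]
print(diff_outputs(baseline, candidate, "id"))
```

An empty diff means the change preserved existing behavior on the test data. A non-empty diff is not necessarily a bug, but every entry should be explainable by the intended change before deployment.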

The economic advantages of shift-down testing follow a similar exponential pattern to shift-left testing. Research consistently indicates that defects identified during coding are relatively inexpensive to fix, typically requiring only time to correct the code and rerun local tests. In contrast, defects detected during integration testing are significantly more costly, often requiring coordination between teams and necessitating changes to multiple components. Defects that reach production can be forty to six hundred times more expensive than those caught early, as they often necessitate emergency responses, hotfixes, data remediation, and damage control with stakeholders. Try data architectures like FITT (Functional, Idempotent, Tested, Two-Stage) to implement this with less hassle.

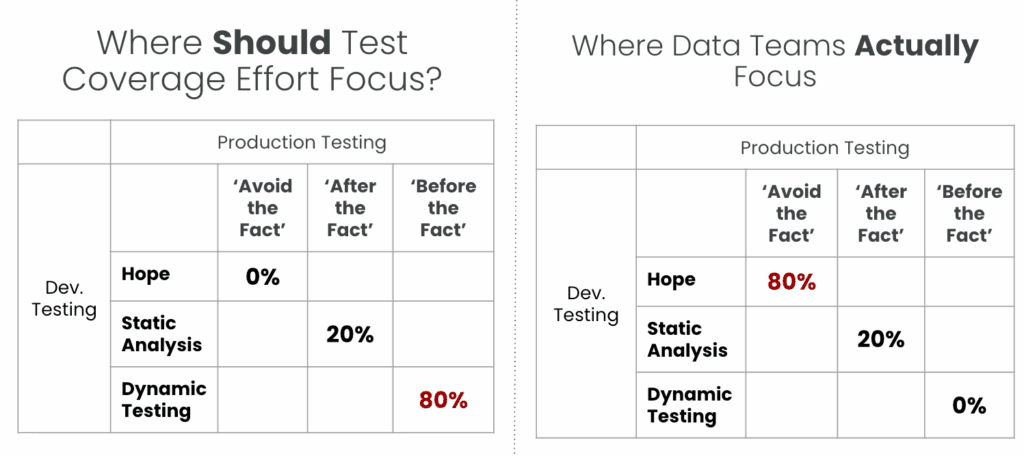

The Reality Gap: Where Teams Actually Focus

Despite the clear economic benefits of comprehensive testing, a stark reality gap exists between optimal testing practices and where data teams actually focus their efforts. Based on industry observations and DataKitchen’s research, approximately eighty percent of data teams rely primarily on hope-based testing in development, with no automated validation before deploying changes to production. The remaining twenty percent might employ some static analysis tools, such as SQL linters, but dynamic testing that validates actual data transformations remains virtually nonexistent in most organizations.

On the production side, the situation is equally concerning. Most teams operate in what can be called “avoid the fact” mode, essentially waiting for customers or business users to report problems. Some organizations have implemented “after the fact” monitoring, continuously polling databases and checking data quality metrics; however, this approach still allows problems to reach production before they are detected. Only a small minority has implemented true “before the fact” testing that catches issues during the production process itself.

This misallocation of testing effort creates a vicious cycle. Teams spend the majority of their time fighting fires in production, leaving little bandwidth for implementing proper testing practices. The lack of testing leads to more production issues, which consume more time, further reducing the capacity for improvement. Breaking this cycle requires a deliberate shift in priorities and a recognition that investing in testing infrastructure pays dividends through reduced operational burden.

Implementation Strategies for Comprehensive Test Coverage

Implementing effective shift-left and shift-down testing requires more than just adding a few validation checks to existing pipelines. It demands a systematic approach to test coverage that addresses multiple dimensions of data quality and system reliability.

For shift-left implementation, teams should begin by mapping their entire data pipeline and identifying critical quality checkpoints. At each checkpoint, implement tests for the inputs, transformations, and outputs at that stage. These tests should be automated and integrated into the pipeline orchestration, with the capability to stop processing if critical issues are found.

Input validation serves as the first line of defense, encompassing schema validation to ensure data conforms to expected formats, referential integrity checks to verify foreign key relationships, and business rule validation to confirm data values fall within acceptable ranges. For instance, a retail analytics pipeline might check that product prices are positive, order dates are not in the future, and customer IDs match existing records.
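The retail example can be sketched as a single validation function. The field names, the `validate_order` helper, and the assumption that `order_date` is already a `date` object are all illustrative choices, not a prescribed schema.

```python
from datetime import date

REQUIRED_FIELDS = {"order_id", "customer_id", "price", "order_date"}


def validate_order(
    order: dict, known_customer_ids: set[str], today: date
) -> list[str]:
    """Return a list of validation errors; an empty list means the row passes."""
    # Schema check: stop early if required fields are absent.
    missing = REQUIRED_FIELDS - set(order)
    if missing:
        return [f"missing fields: {sorted(missing)}"]

    errors = []
    # Business rule: prices must be positive numbers.
    if not isinstance(order["price"], (int, float)) or order["price"] <= 0:
        errors.append("price must be a positive number")
    # Business rule: orders cannot be dated in the future.
    if order["order_date"] > today:
        errors.append("order_date is in the future")
    # Referential integrity: the customer must already exist.
    if order["customer_id"] not in known_customer_ids:
        errors.append("unknown customer_id")
    return errors
```

Passing `today` in as a parameter, rather than calling `date.today()` inside the function, keeps the check deterministic and easy to test.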

Validation of transformations occurs after each processing step. This involves row count reconciliation to prevent data loss, distribution checks to detect anomalies, and deterministic testing to ensure known inputs produce expected outputs. A financial data pipeline might verify that debits and credits balance, currency conversions are accurate, and moving averages align with expected results.
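A minimal sketch of these three transformation checks follows. The function names and record layout are hypothetical, and `Decimal` is used for ledger amounts on the assumption that exact equality matters for balancing.

```python
from decimal import Decimal


def reconcile_row_counts(rows_in: int, rows_out: int, rows_rejected: int = 0) -> bool:
    """Every input row must be accounted for as either output or rejected."""
    return rows_in == rows_out + rows_rejected


def ledger_balances(entries: list[dict]) -> bool:
    """Total debits must equal total credits."""
    total_debits = sum(
        (e["amount"] for e in entries if e["side"] == "debit"), Decimal("0")
    )
    total_credits = sum(
        (e["amount"] for e in entries if e["side"] == "credit"), Decimal("0")
    )
    return total_debits == total_credits


def moving_average(values: list[float], window: int) -> list[float]:
    """Simple trailing moving average, the transformation under test."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


# Deterministic test: a known input must produce the expected output.
assert moving_average([1, 2, 3, 4, 5], 3) == [2.0, 3.0, 4.0]
```

The deterministic test is the cheapest of the three to maintain, since it needs only a tiny hand-computed fixture rather than access to real data.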

Output validation acts as the final quality gate before data reaches users. It includes consistency checks across metrics, monitoring of thresholds for key performance indicators, and anomaly detection for unusual patterns that indicate errors. For example, a marketing analytics system might ensure segment counts sum correctly, conversion rates stay within historical ranges, and no impossible values appear in calculated data.
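The marketing example might be sketched as follows. The three-standard-deviation threshold is an assumed default rather than a recommendation, and the function names are hypothetical.

```python
from statistics import mean, stdev


def segments_sum_to_total(segment_counts: dict[str, int], total: int) -> bool:
    """Consistency check: segment counts must add up to the overall count."""
    return sum(segment_counts.values()) == total


def within_historical_range(
    value: float, history: list[float], max_sigmas: float = 3.0
) -> bool:
    """Threshold check: value must lie within `max_sigmas` standard deviations
    of the historical mean. Requires at least two historical points."""
    return abs(value - mean(history)) <= max_sigmas * stdev(history)


def is_valid_rate(rate: float) -> bool:
    """Impossible-value check: a conversion rate must be between 0 and 1."""
    return 0.0 <= rate <= 1.0


history = [0.040, 0.042, 0.038, 0.041, 0.039]
print(within_historical_range(0.041, history))  # typical day
print(within_historical_range(0.090, history))  # suspicious jump
```

A mean-and-standard-deviation band is only one option. Metrics with strong seasonality or skew may need a different baseline, such as the same weekday in prior weeks.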

For shift-down deployment, teams must set up development and testing environments that accurately replicate production systems, including the same tools, data volumes, performance characteristics, and security measures. Without realistic environments, issues that only appear under production conditions might go unnoticed.

The data strategy is especially important for shift-down testing. Production data can’t be used directly due to privacy and security concerns, but synthetic data may lack real-world complexity. Effective teams employ data masking and sampling that preserve statistical properties and edge cases while safeguarding sensitive information. They maintain libraries of test datasets that represent various scenarios, including normal operations, peak loads, and known problematic patterns.
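As a rough sketch of masking and sampling, the helpers below pseudonymize a sensitive value with a salted hash and draw a stratified sample that keeps at least one row from every category, so rare edge cases survive. The names are hypothetical, and salted hashing is pseudonymization rather than full anonymization, so it may not satisfy every privacy requirement on its own.

```python
import hashlib
import random
from collections import defaultdict


def mask_value(value: str, salt: str) -> str:
    """Replace a sensitive value with a stable token.
    The same input always maps to the same token, so joins still work."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:16]


def stratified_sample(
    rows: list[dict], key: str, fraction: float, seed: int = 0
) -> list[dict]:
    """Sample `fraction` of each group defined by `key`, keeping at least
    one row per group. Assumes 0 < fraction <= 1."""
    rng = random.Random(seed)  # fixed seed makes the test dataset reproducible
    groups: dict = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)

    sample = []
    for members in groups.values():
        k = min(len(members), max(1, round(len(members) * fraction)))
        sample.extend(rng.sample(members, k))
    return sample
```

With ten `normal` rows and a single `refund` row, a 20 percent sample returns two normal rows and still includes the refund, which a plain random sample would often miss.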

Cultural and Organizational Considerations

Technical solutions alone cannot solve the data quality challenge. The successful implementation of shift-left and shift-down testing requires cultural and organizational changes that prioritize quality as a shared responsibility, rather than an afterthought. Leadership must recognize that testing is not overhead but rather an investment in operational efficiency and business trust. This entails allocating time and resources for test development, maintaining the testing infrastructure, and continually improving quality processes. It means celebrating both prevented issues and resolved ones, recognizing that the absence of production failures represents successful quality engineering rather than luck.

Teams need to adopt a quality-first mindset where writing tests becomes as natural as writing transformation code.

This cultural shift often requires education and training, as many data professionals lack exposure to systematic testing practices. Organizations should invest in training programs that teach testing methodologies, provide hands-on experience with testing tools, and share best practices across teams to enhance overall testing effectiveness.

The organizational structure should support quality initiatives by clearly assigning ownership and accountability. While everyone shares responsibility for quality, dedicated roles such as data quality engineers or analytics reliability engineers can provide specialized expertise and drive systematic improvements. These specialists work across teams to establish standards, develop reusable testing frameworks, and promote best practices.

Metrics and incentives should align with quality goals. Rather than measuring success solely by feature delivery or project completion, organizations should track metrics like test coverage percentages, mean time to detection for issues, and the ratio of issues caught in development versus production. These metrics provide visibility into quality improvements and help justify continued investment in testing infrastructure.
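Two of these metrics are straightforward to compute from an issue log. The sketch below assumes a hypothetical record layout with `found_in`, `introduced_at`, and `detected_at` fields, and it reports the share of issues caught in development rather than a raw ratio, so the result stays between 0 and 1.

```python
from datetime import datetime, timedelta


def share_caught_in_development(issues: list[dict]) -> float:
    """Fraction of all issues that were caught before production."""
    caught_early = sum(1 for i in issues if i["found_in"] == "development")
    return caught_early / len(issues)


def mean_time_to_detection(issues: list[dict]) -> timedelta:
    """Average delay between an issue being introduced and being detected."""
    delays = [i["detected_at"] - i["introduced_at"] for i in issues]
    return sum(delays, timedelta(0)) / len(delays)


t0 = datetime(2024, 1, 1, 9, 0)
issues = [
    {"found_in": "development", "introduced_at": t0, "detected_at": t0 + timedelta(hours=1)},
    {"found_in": "development", "introduced_at": t0, "detected_at": t0 + timedelta(hours=3)},
    {"found_in": "production", "introduced_at": t0, "detected_at": t0 + timedelta(hours=2)},
]
print(share_caught_in_development(issues))
print(mean_time_to_detection(issues))
```

Tracked over time, a rising share and a falling detection delay are the visible evidence that shift-left and shift-down investments are working.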

Tools and Technologies for Shifting Down and Left

The evolution of data engineering tools has brought sophisticated testing capabilities within reach of teams at all scales. Modern orchestration platforms, such as Dagster and Apache Airflow, support integrated testing workflows where quality checks become first-class citizens in pipeline definitions. Transformation tools like dbt include built-in testing frameworks that make it easy to define and execute data quality tests alongside transformation logic.

Specialized data quality platforms have emerged to address the unique challenges of testing data pipelines. Open-source tools like DataKitchen’s DataOps Data Quality TestGen automate the creation of data quality tests, reducing the manual effort required to achieve comprehensive coverage. These tools can profile existing data to suggest relevant tests, automatically generate test code, and integrate with existing orchestration and monitoring systems.

Commercial data observability platforms offer additional capabilities for teams that are ready to invest in enterprise-grade solutions. These platforms offer features such as automated anomaly detection, data lineage tracking for impact analysis, and sophisticated alerting systems that route issues to the relevant teams. While powerful, these tools work best when combined with systematic shift-left and shift-down practices rather than serving as a replacement for proper testing.

The key to successful tool adoption lies in selecting technologies that integrate smoothly with existing workflows rather than requiring wholesale process changes. Teams should start with tools that provide immediate value with minimal disruption, then gradually expand their testing toolkit as practices mature. A pragmatic approach might begin with simple SQL-based tests in existing transformation tools, then add specialized data quality tools as test coverage grows, and finally implement comprehensive observability platforms once testing practices are well-established.

Implementing shift-left and shift-down testing is not a one-time project, but an ongoing journey of continuous improvement. Organizations require mechanisms to assess the effectiveness of their testing practices and pinpoint areas for improvement.

Regular retrospectives and post-incident reviews offer opportunities to identify testing gaps and areas for improvement. When production issues occur despite testing efforts, teams should analyze why existing tests failed to catch the problem and implement new tests to prevent recurrence. This creates a feedback loop where each incident strengthens the testing framework, gradually building comprehensive coverage through iterative improvement.

The Path Forward

The shift from reactive firefighting to proactive quality engineering marks a fundamental change in how organizations handle data and analytics systems. By shifting left to identify data issues early in the pipeline and shifting down to stop code defects from reaching production, teams can significantly reduce the cost and impact of quality problems while boosting the reliability and trustworthiness of their analytics.

The journey toward comprehensive test coverage requires technical investment, cultural transformation (such as adopting the Andon Cord practice), and organizational commitment. However, the alternative, continuing to operate in a mode where quality issues regularly disrupt business operations and weaken stakeholder trust, becomes increasingly unsustainable in a world where data drives critical decisions.

Organizations that successfully implement shift-left and shift-down testing will gain a significant competitive advantage. They will spend less time fixing problems in production and more time focusing on delivering value. They will rely on trustworthy data for making decisions, rather than relying on analytics that might be incorrect. They will build systems that scale reliably rather than becoming more fragile as complexity grows.

The tools, techniques, and knowledge needed to implement comprehensive testing are available today. What remains is for data engineering teams to embrace these practices, tailor them to their specific contexts, and commit to the ongoing effort necessary to maintain high-quality data systems. Although the investment may seem hefty, the costs of operating without proper testing grow higher with each production incident, each wrong business decision, and each loss of stakeholder trust.

The manufacturing industry learned these lessons decades ago, and software development has spent the last twenty years catching up. The time has come for data engineering to adopt the same quality-first mindset, implementing testing practices that will turn data pipelines from fragile hope chains into robust systems worthy of the critical business decisions they support. The way forward is clear: shift left, shift down, and embed quality into every part of our data and analytics systems.