On 24 January 2023, Gartner released the article “5 Ways to Enhance Your Data Engineering Practices,” by Robert Thanaraj, Ehtisham Zaidi, and two co-authors.

In the article, Gartner suggests that to be successful, data engineering teams must overcome two crucial challenges.

- How to optimize data team productivity: teams should avoid adding more bodies whenever there is more work to be done. How do you scale an organization without hiring an army of hard-to-find data engineering talent? Or, as one of our customers put it, “How do I increase the total amount of team insight generated without continually adding more staff (and cost)?”

- Staff turnover, stress, and unhappiness. This picture of data team morale is consistent with DataKitchen’s own research. We surveyed 600 data engineers, including 100 managers, to understand how they are faring and how they feel about their work. The top-line result was that 97% of data engineers are experiencing burnout.

Overview of Gartner’s data engineering enhancements article

To set the stage for Gartner’s recommendations, let’s consider a new Data Engineering Manager, Marcus, who faces a whole host of challenges in his new role:

Marcus has a problem. His company just decided that it needs to get its act together with data. A few months ago, he took charge of a small, 12-person team of data engineers, data scientists, and analysts. It has not been going well. All data engineering work has been done by hand, with each person working hard to service development requests while also handling production tasks. New work is not getting done, and data errors are common. To add fuel to the fire, one of the most talented people in the group left a few weeks ago. As a result, a less senior team member was made responsible for modifying a production pipeline. With detailed knowledge of the system gone from the company, neither the modification nor the system was adequately validated. The update was late, and it then produced bad data for two weeks before the problem was finally identified.

What is Gartner’s advice for a new data engineering lead like Marcus? Let’s look at the article’s key recommendations.

- Adopt DataOps Practices: “Successful data engineering teams are cross-functional and adopt DataOps practices.”

- Focus on Customer Value First: “Organizations that focus on business value, as opposed to technological enhancements …. are more efficient in prioritizing data delivery demands.”

- Release New Data Engineering Work Often With Low Risk: “Testing and release processes are heavily manual tasks… automate these processes.”

- Make Trusted Data Products with Reusable Modules: “Many organizations are operating monolithic data systems and processes that massively slow their data delivery time.”

- Create a Path To Production For Self-Service: “… business users explore data through self-service data preparation, few have established gatekeeping processes to deliver these workloads to production.”

The first element of Gartner’s advice is simple: don’t respond to more work by hiring a bunch more people onto your team.

“Because adding more data engineers in response to increasing data requests is not a sustainable solution, organizations must start investing in DataOps practices to streamline and scale data delivery processes.” This is the data-team equivalent of the advice Fred Brooks gave software teams in his classic, “The Mythical Man-Month.” Brooks’s law (for data): “Adding data engineer personpower to a late data project makes it later.”
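Brooks’s argument rests partly on communication overhead. In “The Mythical Man-Month” he observes that when every part of a task must be coordinated with every other part, the coordination effort grows with the number of pairwise communication paths among the n people involved. Applying that count to Marcus’s 12-person team (the 18-person figure is purely illustrative):

$$
C(n) = \frac{n(n-1)}{2}, \qquad C(12) = \frac{12 \times 11}{2} = 66, \qquad C(18) = \frac{18 \times 17}{2} = 153
$$

Growing the team by half (12 to 18 people) more than doubles the number of paths (66 to 153), which is one reason extra headcount adds coordination cost faster than it adds capacity.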

Shouldn’t Marcus consider upgrading his technology? A better ETL tool? A new database? Some other hot tool? His team is already on Azure and uses Azure Data Factory (ADF), Synapse, Power BI, and several powerful Azure data science tools. Gartner is explicit that teams should “focus on business value, as opposed to technological enhancements.” Marcus will not fix his challenges by helping his team write SQL faster. Productivity does not come through the fingers of each data engineer; it comes from building a system around those engineers that lets them run production with minimal errors and move work into production quickly and with low risk, so they can focus on making their customers successful.

Marcus has inherited a team in which individual ‘heroes’ built data analytics as a set of side projects without consistency or management. He sees the chance to make a difference in this new role. Still, there is little testing and no consistent deployment process, and everyone is busy ‘keeping the lights on’ by constantly chasing down errors in production systems. Those errors have caused the company’s leaders to lose confidence in the data products his team produces. His team often delivers late and manages 70 disjointed, independent weekly jobs to ingest, transform, visualize, and deliver results to his customers.

The second element of Gartner’s advice is to focus on your team’s day-to-day development work to increase reusability, quality, and delivery speed.

Focus the team on delivering value to the customer in small increments. Learn, improve, and iterate quickly, with customer feedback and low risk. Focus on code and pattern reuse and on DataOps automation to scale. If he is to take Gartner’s advice to heart, Marcus will have to add a set of requirements to his team’s daily data engineering work. In addition to describing the customer’s needs, each user story (or functional specification) will include requirements like these:

- Update & publish changes to data engineering code/config within an hour without disrupting operations and without errors.

- Find opportunities to automate and refactor. Data is obviously essential to data engineering teams, but the code (or tool configuration) that acts on data is equally important, and that code creates complexity. Build analytic data systems from modular, reusable components. Make those components idempotent on data, so that rerunning them on the same input produces the same result. And replace any manual steps in deployment or testing with scripted automation.

- Define “Done” for a project as running in production and delivering value to your customer. Done does not mean ‘it works on my machine.’ Done does not mean ‘I followed our project SDLC to the letter.’ You are building data products that live and breathe for years, and they must continually improve.

- Automatically observe production runs to satisfy your customer’s needs (timeliness, quality, etc.) and ensure the data products are trusted. Discover data and system errors in production before users do.

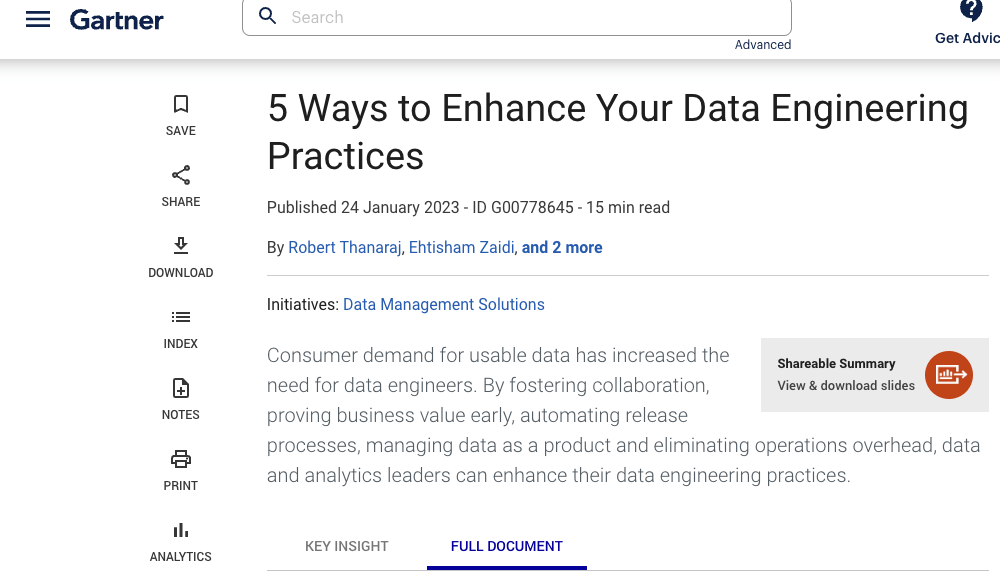

- Don’t throw your work over the wall to production operations. Have data engineers either ‘build and run’ their own systems or integrate operations teams throughout the process.
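The requirement above to build components that are “idempotent on data” can be illustrated with a small sketch. It assumes a hypothetical `daily_sales` table and a hypothetical `load_partition` helper (neither comes from Gartner or DataKitchen) and uses Python’s built-in `sqlite3` module to show one common pattern: each run deletes and reloads a whole date partition inside a single transaction, so a retried or repeated job leaves the table in the same state instead of duplicating rows.

```python
import sqlite3


def create_table(conn: sqlite3.Connection) -> None:
    # Hypothetical target table, logically partitioned by sale_date.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS daily_sales ("
        " sale_date TEXT NOT NULL,"
        " store_id INTEGER NOT NULL,"
        " amount REAL NOT NULL)"
    )


def load_partition(conn: sqlite3.Connection, sale_date: str,
                   rows: list[tuple[int, float]]) -> None:
    """Replace one date partition; rerunning with the same input is safe."""
    # 'with conn' commits on success and rolls back on an exception,
    # so a failed run never leaves a half-loaded partition behind.
    with conn:
        conn.execute("DELETE FROM daily_sales WHERE sale_date = ?", (sale_date,))
        conn.executemany(
            "INSERT INTO daily_sales (sale_date, store_id, amount) VALUES (?, ?, ?)",
            [(sale_date, store_id, amount) for store_id, amount in rows],
        )


def row_count(conn: sqlite3.Connection, sale_date: str) -> int:
    # Count the rows currently stored for one partition.
    (n,) = conn.execute(
        "SELECT COUNT(*) FROM daily_sales WHERE sale_date = ?", (sale_date,)
    ).fetchone()
    return n


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_table(conn)
    batch = [(1, 120.0), (2, 75.5)]
    # Running the same load twice (for example, after a retry) does not duplicate rows.
    load_partition(conn, "2023-01-24", batch)
    load_partition(conn, "2023-01-24", batch)
    print(row_count(conn, "2023-01-24"))  # 2
```

Delete-then-insert is only one way to get idempotency; a warehouse `MERGE` or partition overwrite achieves the same effect, and the right choice depends on the platform your team uses.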

If you are a data architect (or you play one), you may already have creative ideas about addressing these requirements. Often, you must change what it means to deliver value to your customer. When you architect for flexibility, quality, rapid deployment, and real-time data monitoring (in addition to your customer requirements), you move towards a DataOps-centric data engineering practice.

The final element of Gartner’s advice is to provide a path to production for self-service users.

When Gartner advises your team to put “Customer Value First,” what does that mean? Data engineering teams often deliver to one or more self-service teams in a hub-and-spoke or data enablement organizational model. Sometimes this means sh** flows downhill from the self-service teams to the data engineering team: your team gets all the blame and none of the glory for delivering business value. What if you took another perspective? Self-service is a great way to discover the most valuable business opportunities in data analytics, and self-service tools are a great way to prove that value to the business quickly. How can your team make the path to production for those nuggets quick, cost-effective, and sustainable?

Some of these self-service projects will eventually ‘earn the right’ to move into production. The data engineering team’s job is to find ways to wrap those valuable ideas in a DataOps blanket with automated testing, end-to-end observability, and scripted automation. As Gartner states, “promoting recurring data preparation workloads to production through necessary gatekeeping protocols” provides both safety and efficiency. Remember, the whole point of Agile-based processes is to maximize the work you don’t have to do, and self-service saves your team from wasting cycles guessing at business customers’ requirements.
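One way to picture the “gatekeeping protocols” Gartner mentions is a set of automated checks that a self-service workload must pass before it is promoted to production. The sketch below rests on assumptions of our own: the candidate dataset is a list of Python dictionaries, and the check builders (`not_null`, `unique`, `min_rows`) and the `promotion_gate` function are hypothetical names, not part of any Gartner or DataKitchen API. A real gate would usually run inside a CI/CD pipeline against the actual warehouse tables.

```python
from typing import Callable

Row = dict[str, object]
# A check inspects the rows and returns (passed, human-readable message).
Check = Callable[[list[Row]], tuple[bool, str]]


def not_null(column: str) -> Check:
    def check(rows: list[Row]) -> tuple[bool, str]:
        missing = sum(1 for r in rows if r.get(column) is None)
        return missing == 0, f"not_null({column}): {missing} missing"
    return check


def unique(column: str) -> Check:
    def check(rows: list[Row]) -> tuple[bool, str]:
        # Assumes column values are hashable (ints, strings, None, ...).
        values = [r.get(column) for r in rows]
        dupes = len(values) - len(set(values))
        return dupes == 0, f"unique({column}): {dupes} duplicates"
    return check


def min_rows(n: int) -> Check:
    def check(rows: list[Row]) -> tuple[bool, str]:
        return len(rows) >= n, f"min_rows({n}): got {len(rows)}"
    return check


def promotion_gate(rows: list[Row], checks: list[Check]) -> tuple[bool, list[str]]:
    """Run every check; approve promotion only if all of them pass."""
    failures = []
    for check in checks:
        passed, message = check(rows)
        if not passed:
            failures.append(message)
    return not failures, failures


if __name__ == "__main__":
    candidate = [
        {"customer_id": 1, "region": "EMEA"},
        {"customer_id": 2, "region": None},
        {"customer_id": 2, "region": "APAC"},
    ]
    approved, failures = promotion_gate(
        candidate, [not_null("region"), unique("customer_id"), min_rows(1)]
    )
    print(approved)  # False
    print(failures)
```

Running all checks rather than stopping at the first failure gives the self-service author a complete list of what to fix before the next promotion attempt.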

Bonus: DataKitchen’s advice is to start with DataOps Observability.

Over the years, we have helped many data engineering teams adopt DataOps practices. After all this experience, we have concluded that the best “first step” for any team is enabling a DataOps Observability capability. Of all the sources of wasted time and bottlenecks that Gartner discusses, the biggest is allowing errors into production. This weakness erodes trust, causes massive headaches and re-work, and creates fear in the development teams.

Too many teams have fragile, unmonitored production systems, so users are the ones who find problems with the data or reports. Eliminating these errors through end-to-end DataOps Observability frees the team to make the development process changes Gartner recommends (cross-functional collaboration, value prioritization, process automation, modularity, reusability, self-service operations focus).

Marcus sees that implementing DataOps practices is his way out. He saw a talk by DataKitchen at a conference five years back that clarified why. However, before investing the time needed to enable more automated DataOps processes, he has to make that time available by reducing the effort his team spends chasing production problems and refactoring bad engineering. Automating the observation of pipeline technology, data quality, and SLAs with a DataOps Observability capability will remove that burden from his team and enable Marcus to improve his team’s productivity – and customer experience – through better DataOps practices.
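To make “automating the observation of pipeline technology, data quality, and SLAs” more concrete, here is a minimal sketch of a check that could run after each of Marcus’s weekly jobs. The `RunRecord` fields, the thresholds, and the `observe_run` function are hypothetical illustrations, not DataKitchen’s product API; in practice the run metadata would come from the orchestrator’s logs, and alerts would be routed to a paging or chat tool rather than returned as strings.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class RunRecord:
    job_name: str
    status: str            # e.g., "succeeded" or "failed", as reported by the orchestrator
    finished_at: datetime
    row_count: int


def observe_run(run: RunRecord, deadline: datetime,
                min_rows: int, max_rows: int) -> list[str]:
    """Return alert messages; an empty list means the run looks healthy."""
    alerts = []
    # Pipeline technology: did the job itself succeed?
    if run.status != "succeeded":
        alerts.append(f"{run.job_name}: run status is {run.status}")
    # SLA / timeliness: did the data land before the customer needs it?
    if run.finished_at > deadline:
        late_by = run.finished_at - deadline
        alerts.append(f"{run.job_name}: missed SLA by {late_by}")
    # Data quality: is the output volume within the expected range?
    if not (min_rows <= run.row_count <= max_rows):
        alerts.append(
            f"{run.job_name}: row_count {run.row_count} outside [{min_rows}, {max_rows}]"
        )
    return alerts


if __name__ == "__main__":
    deadline = datetime(2023, 1, 24, 6, 0)
    run = RunRecord("weekly_ingest", "succeeded",
                    finished_at=deadline + timedelta(minutes=45), row_count=0)
    for alert in observe_run(run, deadline, min_rows=1_000, max_rows=50_000):
        print(alert)
```

Because every job reports the same kind of record, the same function can be applied across all 70 jobs, which is what lets the team find errors before their customers do.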

Summary: 10x your data engineering game.

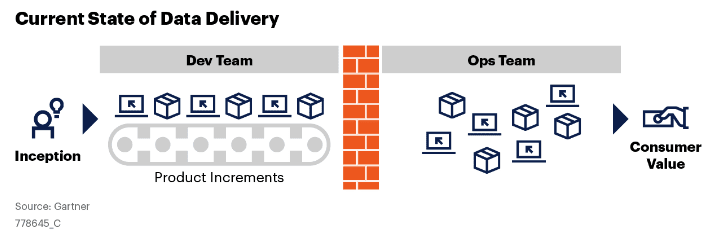

What is the likely benefit if a team enacts the tactics Gartner recommends? Gartner included a Strategic Planning Assumption in the document that estimates the value: “By 2025, data engineering teams guided by DataOps practices and tools will be ten times more productive than teams that do not use DataOps.” This aligns with what DataKitchen has experienced with customers using our DataOps Automation and DataOps Observability products.

We see the 10x productivity improvement coming from three key enablers. The first is reducing errors in production with automated end-to-end observability. The second is decreasing the cycle time and risk of deploying to production with automated testing. The third is automation that enables governed, secure, self-serve development environments. When these enablers are implemented, for example through DataKitchen products, teams work faster, produce higher-quality results, and are happier.

Learn More

- Implement DataOps Data Engineering yourself. See our white paper, “Seven Steps to Implement DataOps.”

- Our recent Blog post: “Plumbing Wisdom for Data Pipelines.”

- Blog post: “How to Succeed as a DataOps Engineer.”