Part 1: Defining the Problems

This is the first post in DataKitchen’s four-part series on DataOps Observability. Observability is a methodology for providing visibility into every journey that data takes from source to customer value, across every tool, environment, data store, team, and customer, so that problems are detected and addressed immediately. DataKitchen has released the first version of its Observability product, which implements the concepts described in this series.

DataOps Industry Challenges

Jason is the CDO for Company X, responsible for six teams of data professionals across several locations. The breadth of his teams’ work and the technologies they use present a significant challenge to his main goal: to deliver new and useful analytics solutions to the business. He has 1,000 data pipelines running, with 10,000 users relying on the insights they produce, and no way to know if they are working properly. His teams are also overworked maintaining these systems and fighting fires, so they cannot deliver on new requests.

Like Jason, you invest significantly in your data and the infrastructure and tools your teams use to create value. Do you know for sure that it’s all working properly, or do you hope and pray that a source change, code fix, or new integration won’t break things? If something does break, can you find and fix the problem quickly, or does your team spend days diagnosing the issue? Do you fear that phone call or email from an angry customer who finds the problem before you do? How can you be confident that nothing will go wrong and your customers will continue to trust your deliverables?

DataOps Observability can help you ensure that your complex data pipelines and processes are accurate and deliver as designed. Observability also validates that your data transformations, models, and reports are performing as expected. This solution sits on top of your existing infrastructure, without replacing staff or systems, to monitor your data operations. And it can alert you to problems before anyone else sees them.

DataOps Observability is a logical first step in implementing DataOps in your organization. It tackles the problems you are facing right now, and prepares you for a future of full DataOps automation.

Errors Happen; Do You React or Prevent?

Sure enough, Jason gets the call he dreads, but it’s worse than ever! This call is from the CEO (his boss’s boss), and it’s only 7:30 a.m. He breaks into a sweat and answers the phone. Through the yelling, he learns that a compliance report sent to critical business partners was empty! He makes promises and cancels the rest of his day. With 26 of his best engineers on a call, they begin troubleshooting. At 1:00 p.m., the ad hoc team has the root cause: a change to a source file passed a blank field through the pipeline. At 2:45 p.m., they have a fix implemented on one machine, but it will take six weeks to deploy that fix to the production pipeline. At 3:00 p.m., he takes a deep breath and calls the CEO to break the news.

Sound familiar? When was the last time this happened to you? Today’s data and analytic systems are complex, made up of any number of disparate tools and data stores. Crises happen, and errors are inevitable. They cause huge embarrassment and hurt business performance.

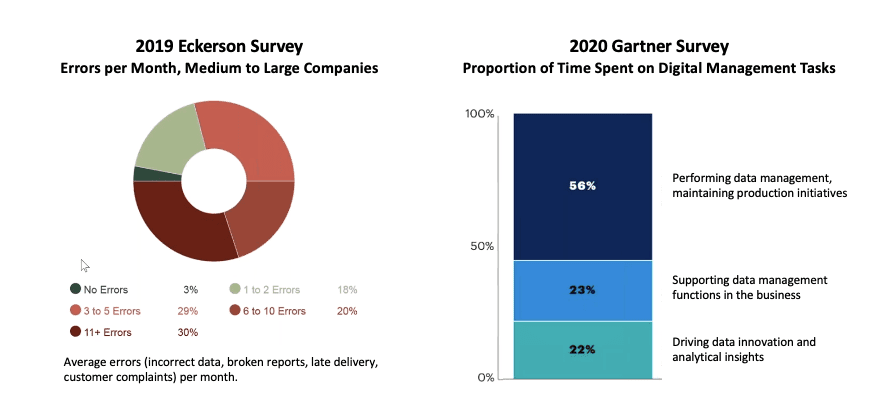

Jason’s experience is not a unique one. A 2019 DataKitchen/Eckerson survey found that 79% of companies have more than three data-related errors in their pipelines per month. A 2020 Gartner survey revealed that 56% of engineer time is spent on operational tasks and addressing errors, not on innovations and customer requests.

Many companies are stuck in a culture of “hope and pray” that their changes and integrations won’t break anything and a practice of “firefighting” when things inevitably go wrong. They wait for customers to find problems. They blindly trust their providers to deliver good, consistent data on time. They interrupt the daily work of their best minds to chase and fix a single error in a specific pipeline, all the while not knowing if any of the other thousands of pipelines are failing.

It’s a culture that drains productivity and erodes customers’ trust in the data.

Bad data reaches the customer because companies haven’t invested enough, or at all, in testing, automation, and monitoring. They have no way to catch errors early. And with engineers jumping around to put out the fires, their already demanding workloads are deprioritized. Customer delivery dates are missed, new features cannot be integrated, and innovations stop coming. As customer confidence in the data and analytics decreases, so does customer satisfaction.
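To make "catch errors early" concrete, here is a minimal sketch of the kind of automated test that could have stopped a blank field like the one in Jason's story before it reached a report. It assumes each batch arrives as a list of dictionaries and that the required field names are known in advance. The function names and the `partner_id` and `amount` fields are hypothetical, chosen only for illustration, and are not part of any DataKitchen product.

```python
from typing import Iterable, Mapping


def find_blank_fields(
    rows: Iterable[Mapping[str, object]], required: Iterable[str]
) -> list[tuple[int, str]]:
    """Return (row_index, field_name) pairs where a required field is missing or blank."""
    required = list(required)
    problems: list[tuple[int, str]] = []
    for i, row in enumerate(rows):
        for field in required:
            value = row.get(field)
            # Treat a missing key, None, or a whitespace-only string as blank.
            if value is None or (isinstance(value, str) and not value.strip()):
                problems.append((i, field))
    return problems


def check_batch(rows: Iterable[Mapping[str, object]], required: Iterable[str]) -> None:
    """Fail loudly instead of publishing an empty or incomplete batch downstream."""
    rows = list(rows)
    if not rows:
        raise ValueError("batch is empty; refusing to publish")
    problems = find_blank_fields(rows, required)
    if problems:
        row, field = problems[0]
        raise ValueError(
            f"{len(problems)} blank required value(s); first at row {row}, field {field!r}"
        )


if __name__ == "__main__":
    # Hypothetical partner records; the second one has a blank partner_id.
    batch = [
        {"partner_id": "P1", "amount": "100"},
        {"partner_id": "", "amount": "250"},
    ]
    print(find_blank_fields(batch, ["partner_id", "amount"]))  # [(1, 'partner_id')]
    try:
        check_batch(batch, ["partner_id", "amount"])
    except ValueError as err:
        print(f"check failed: {err}")
```

In practice, a check like this would run at each pipeline stage and raise an alert rather than only an exception. The point of the sketch is simply that the error is caught before the customer sees it.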

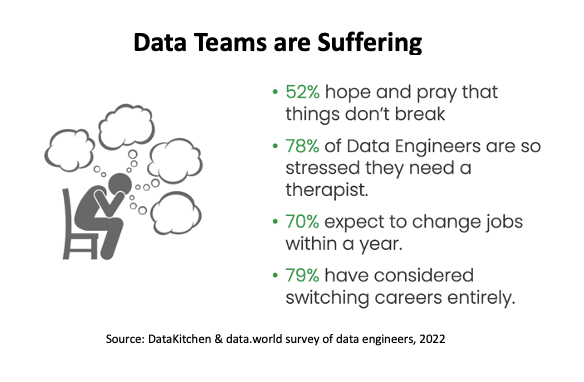

Another result is rampant frustration within data teams. A survey of data engineers conducted by DataKitchen in 2022 revealed some shocking statistics. Data engineers are the people building pipelines, as well as doing data processing and data production. And they are suffering: 78% feel they need to see a therapist, and a similar number have considered quitting or switching careers. The industry faces a shortage of data professionals, caused both by a limited supply of new people and by an exodus of people leaving the field because the work is so stressful.

Why Haven’t They Solved It?

Jason follows the progress of the production fix very closely and updates the CEO at every stage. Naturally, the CEO wants to know why it takes so long to resolve the issue. “It’s just code; it’s what you guys do every day! Can’t you patch it or something?” When the fix is fully tested and deployed to the production pipeline, Jason has time to reflect. He’ll conduct a post-mortem with his team on the specific issue, but he wants to find a solution to the bigger problem. Why has the company never addressed this kind of risk? What can he introduce into his data infrastructure and development cycle to ensure these things don’t happen again?

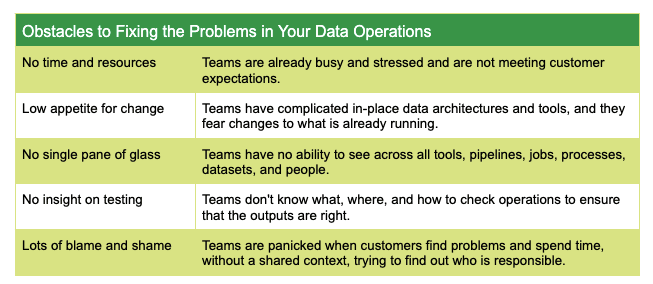

You’re just too busy. You’ll get to it next year…right? The situation is bad, your team is stressed, and things are breaking left and right. So why haven’t your company and many others solved these problems? There are several reasons.

Teams are obviously very busy. They have backlogs full of customer requests, and they go to work every day knowing that they won’t and can’t meet customer expectations. The work that they are able to complete may sit for a while because any change to the complicated architecture or fragile pipelines may break something. When a change finally deploys, the teams have no way to see the whole landscape to ensure everything continues to run smoothly. Even if they could look at everything across their complex data operations, they wouldn’t know what to check, or where, for dependencies, failures, and data inconsistencies.

And then there is the sheer number of teams and data processes involved. When something goes wrong, there is a lot of finger-pointing and blame to go around. Each team knows its small part of the landscape, but there is no shared context of the larger picture (its infrastructure and problem areas) to address the issues adequately and efficiently.

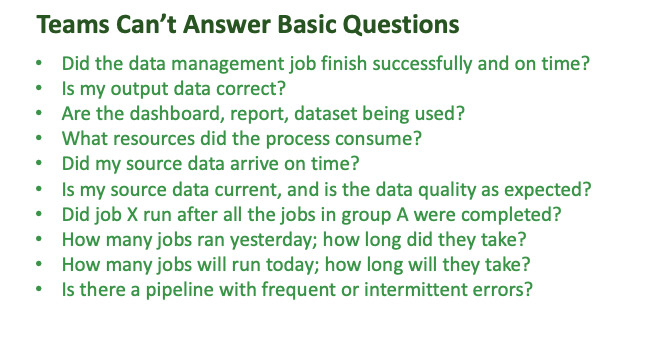

It’s a big risk if your teams can’t answer basic questions about production processes or if the answers are found in pockets of your organization with teams who have no way to communicate broadly. Do they know if the data is going to arrive on time? Do they know if integrations with other datasets are right? Without a single source of truth about what’s running, how can you pinpoint the failures? How can you find problems before your customers do?

There’s a similar risk when teams do not understand the status of development processes and deployments to production. Do your teams know how and when deployments are happening? Do they know what processes and environments are affected by a deployment? Do they know what changes are going into production? Not knowing these answers makes it very hard to ensure that everything is going well and even harder to find problems when they occur.

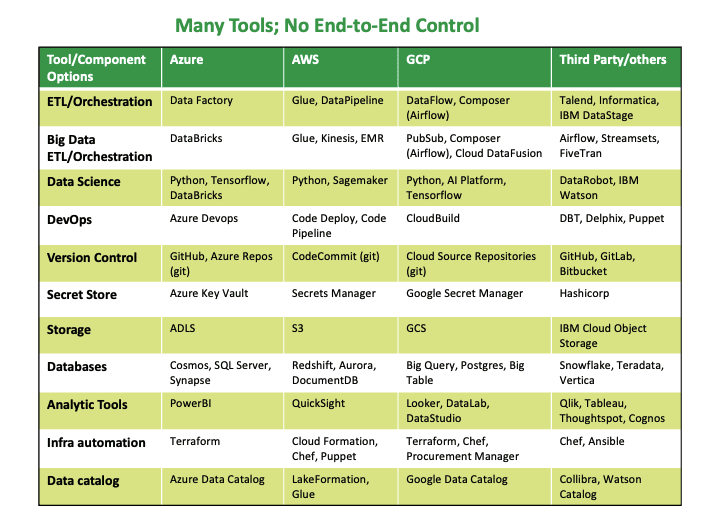

The industry is replete with data tools, automation tools, and IT monitoring tools. But none of these tools fully addresses the problem. You have to monitor your entire data estate and all the reasons why pipelines may succeed or fail, not just server performance, load testing, and tool and transaction testing. A complete Observability solution is required.

This is where DataOps Observability fills a gap in the industry.

____________

Now that you understand the problems that DataOps Observability addresses, you can learn how data journeys bring observability to all your stakeholders, pipelines, tools, and data processes across your organization in part two of our series, Introducing Data Journeys.

_____________