Below is our final post (5 of 5) on combining data mesh with DataOps to foster innovation while addressing the challenges of a decentralized data mesh architecture. We see a DataOps process hub like the DataKitchen Platform playing a central supporting role in successfully implementing a data mesh. DataOps excels at the type of workflow automation that can coordinate interdependent domains, manage order-of-operations issues and handle inter-domain communication. This post explores the DataOps-enabled data mesh in more depth.

It would be incredibly inefficient to build a data mesh without automation. DataOps focuses on automating data analytics workflows to enable rapid innovation with low error rates. It also engenders collaboration across complex sets of people, technology, and environments. DataOps produces clear measurement and monitoring of the end-to-end analytics pipelines starting with data sources. While data mesh concerns itself with architecture and team alignment, DataOps automates workflows that simplify data mesh development and operations. DataOps is also an ideal platform upon which to build the shared infrastructure needed by the data mesh domains. For example, DataOps provides a way to instantiate self-service development environments. It would be a waste of effort for each domain to create and maintain that capability independently.

A data mesh implemented on a DataOps process hub, like the DataKitchen Platform, can avoid the bottlenecks characteristic of large, monolithic enterprise data architectures. One can implement DataOps on its own without data mesh, but data mesh is a powerful organizing principle for architecture design and an excellent fit for DataOps-enabled organizations. DataOps is the scaffolding and connective tissue that helps you construct and connect a data mesh.

A discussion of DataOps moves the focus away from organization and domains and considers one of the most important questions facing data organizations, a question that rarely gets asked: “How do you build a data factory?” The data factory takes inputs in the form of raw data and produces outputs in the form of charts, graphs and views. The data factory encompasses all of the domains in a system architecture. Most enterprises rush to create analytics before considering the workflows that will improve and monitor analytics throughout their deployment lifecycle. It’s no wonder that data teams are drowning in technical debt.

Before you can run your data factory, you have to build your data factory. Before you build your factory, you would do well to design mechanisms that create and manage your data factory. DataOps is the factory that builds, maintains and monitors data mesh domains.

Architecture, uptime, response time, key performance parameters – these are challenging problems, and so they tend to take up all the oxygen in the room. Take a broader view. Architect your data factory so that your data scientists lead the industry in cycle time. Design your data analytics workflows with tests at every stage of processing so that errors are virtually zero in number. Doing so will give you the agility that your data organization needs to cope with new analytics requirements. Agile analytics will help your data teams realize the full benefits of an application and data architecture divided into domains.
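To make "tests at every stage of processing" concrete, here is a minimal Python sketch. It is not DataKitchen code; the stage names, the checks and the `run_pipeline` helper are all hypothetical and exist only to illustrate the idea that each stage's output is checked before the next stage runs.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]
Stage = Callable[[List[Row]], List[Row]]
Check = Callable[[List[Row]], bool]


class StageTestFailure(Exception):
    """Raised when the output of a stage fails one of its checks."""


def run_pipeline(rows: List[Row], stages: List[Tuple[str, Stage, List[Check]]]) -> List[Row]:
    """Run stages in order; test each stage's output and stop at the first failure."""
    for name, stage, checks in stages:
        rows = stage(rows)
        for check in checks:
            if not check(rows):
                raise StageTestFailure(f"{name}: {check.__name__} failed")
    return rows


# Hypothetical stages for illustration only.
def ingest(rows: List[Row]) -> List[Row]:
    # Drop records that arrived without an amount.
    return [r for r in rows if r.get("amount") is not None]


def transform(rows: List[Row]) -> List[Row]:
    # Convert each amount to USD using the rate carried on the record.
    return [{**r, "amount_usd": r["amount"] * r["fx_rate"]} for r in rows]


# Hypothetical checks for illustration only.
def not_empty(rows: List[Row]) -> bool:
    return len(rows) > 0


def amounts_non_negative(rows: List[Row]) -> bool:
    return all(r["amount_usd"] >= 0 for r in rows)


STAGES = [
    ("ingest", ingest, [not_empty]),
    ("transform", transform, [not_empty, amounts_non_negative]),
]
```

Because a failure names both the stage and the check, an error is localized to the step that produced it rather than surfacing later in a chart or report.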

Efficient workflows are an essential component of a successful data team initiative. One common problem is that code changes or new data sets may break existing code. Your domain DAG (directed acyclic graph) is ingesting data and then transforming, modeling and visualizing it. It’s hard enough to test within a single domain, but imagine testing with other domains that use different teams and toolchains, managed in other locations. How do you allow a local change to a domain without sacrificing global governance and control? That’s important to do, not only within a single domain but between a group of interdependent domains as well.
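One way to reason about the reach of a local change is to walk the inter-domain dependency graph and list every domain downstream of the one that changed. The sketch below assumes a hypothetical set of domains and a hypothetical `CONSUMERS` map; it is an illustration of the idea, not a description of any product feature.

```python
from collections import deque
from typing import Dict, List

# Hypothetical dependencies: each domain maps to the domains that consume its output.
CONSUMERS: Dict[str, List[str]] = {
    "sales": ["finance", "marketing"],
    "finance": ["executive_reporting"],
    "marketing": ["executive_reporting"],
    "executive_reporting": [],
}


def domains_to_retest(changed: str, consumers: Dict[str, List[str]]) -> List[str]:
    """Return the changed domain plus every downstream domain, in breadth-first order."""
    seen = [changed]
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for consumer in consumers.get(current, []):
            if consumer not in seen:
                seen.append(consumer)
                queue.append(consumer)
    return seen
```

Under these assumptions, a change to `sales` would call for retesting `sales`, `finance`, `marketing` and `executive_reporting`, while a change to `finance` would touch only `finance` and `executive_reporting`.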

With a DataOps process hub, like the DataKitchen Platform, testing is performed in an environment that mirrors the system. DataKitchen supports an intelligent, test-informed, system-wide production orchestration (meta-orchestration) that spans toolchains. Unlike orchestration tools such as Airflow, Azure Data Factory or Control-M, DataKitchen can natively connect to the complex chain of data engineering, science, analytics, self-service, governance and database tools, and meta-orchestrates a hierarchy of DAGs. Meta-orchestration in a world of heterogeneous tools is a critical component of successfully rolling out a data mesh.
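The idea of a hierarchy of DAGs can be sketched in a few lines of Python using the standard library's `graphlib`. This is a conceptual illustration only, not the DataKitchen implementation: the domain names, step names and the `meta_orchestrate` function are hypothetical, and a real meta-orchestrator would call out to each domain's own toolchain rather than to a local callback.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

# Hypothetical lower-level DAGs: within each domain, a step maps to the steps it depends on.
DOMAIN_DAGS: Dict[str, Dict[str, Set[str]]] = {
    "sales": {"ingest": set(), "transform": {"ingest"}, "serve": {"transform"}},
    "finance": {"ingest": set(), "model": {"ingest"}, "serve": {"model"}},
}

# Hypothetical top-level DAG: a domain maps to the domains it depends on.
META_DAG: Dict[str, Set[str]] = {"sales": set(), "finance": {"sales"}}


def meta_orchestrate(
    meta_dag: Dict[str, Set[str]],
    domain_dags: Dict[str, Dict[str, Set[str]]],
    run_step: Callable[[str, str], None],
) -> List[str]:
    """Run domains in dependency order and, inside each domain, its steps in dependency order."""
    executed = []
    for domain in TopologicalSorter(meta_dag).static_order():
        for step in TopologicalSorter(domain_dags[domain]).static_order():
            run_step(domain, step)  # in practice, a call into that domain's tooling
            executed.append(f"{domain}.{step}")
    return executed
```

The top-level graph handles order of operations between domains, while each domain keeps ownership of its own internal graph.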

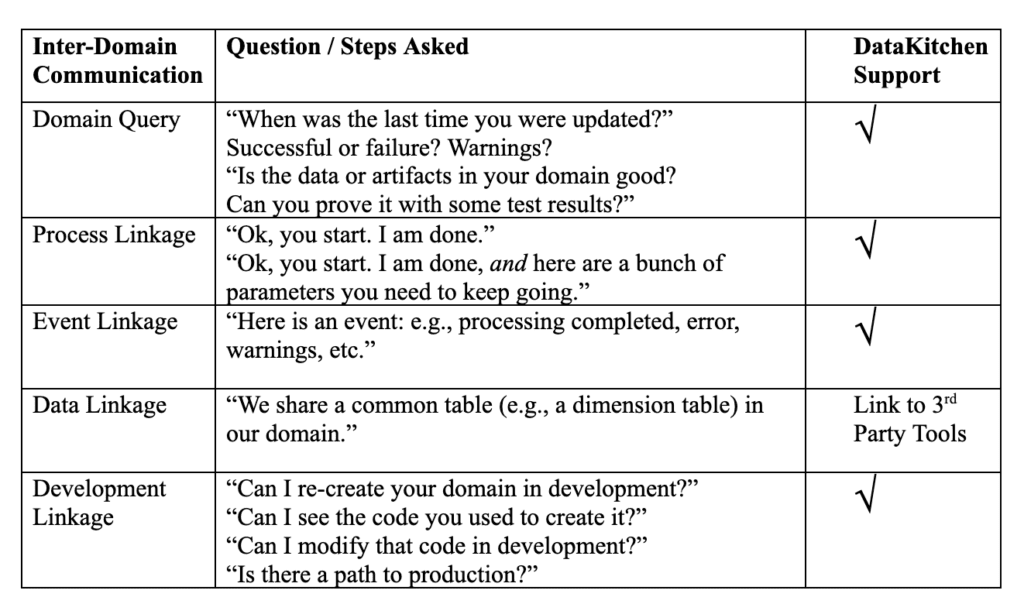

The DataKitchen Platform natively provides URL access to nearly all the interfaces that are required for inter-domain communication.

Table 1: DataKitchen Platform support for inter-domain communication.

The capability to execute domain queries comes from DataKitchen order runs. Process queries come from calling an order run or running a Recipe (orchestration pipeline). Event linkage is natively supported, and development linkage stems from Kitchens (on-demand development sandboxes). DataKitchen supports data linkage by integrating with tools that access and store data – there are plenty of great ones.

DataKitchen groups recipes into components called ingredients. Ingredients are composable units that can enable domains to change independently. They can also be orchestrated together within Kitchens.

DataKitchen can help you address the critical tasks of developing, deploying, and monitoring analytics related to a domain.

- Recipes – Orchestrate the whole pipeline (i.e., ingest, process/curate, serve, etc.) inside a domain. DataKitchen Recipes can serve as a master DAG as well as lower-level DAGs nested inside other DAGs.

- Monitoring Tests – Make sure the data (from suppliers and to customers) is trustworthy. This aids in diagnostics (i.e., detection and localization of an issue). In the DataKitchen context, monitoring and functional tests use the same code.

- Variations – Execute data pipelines with specific parameters. Deliver the correct data product for the consumer because one size does not fit all. Variations enable the data team to create different versions of their domain to handle development, production, “canary” versions or any other change. Variations unlock a great degree of agility and enable people to be highly productive.

- Kitchen Wizard – Provide on-demand infrastructure to the data teams to prevent delays. One concern related to domain teams is the potential duplication of effort with respect to horizontal infrastructure. DataKitchen can be used to automate shared services. For example, DataKitchen provides a mechanism to create self-service development sandboxes, so the individual teams do not have to develop and support this capability.

- Kitchens/Recipes/Functional Tests – Iterate to support the “product-oriented” approach. Building the factory that creates analytics with minimal cycle time is a critical enabler for the customer focus that is essential for successful domain teams.
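As a rough illustration of two ideas from the list above, running one pipeline with different parameter sets and reusing the same test code in development and production, consider the following Python sketch. It does not use the DataKitchen API; the `Variation` class, the `VARIATIONS` table, the file names and `run_recipe` are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Variation:
    """Hypothetical parameter set that selects how one pipeline definition runs."""
    name: str
    source: str
    row_limit: Optional[int]  # None means process every row
    publish: bool


# Hypothetical variations of a single pipeline.
VARIATIONS: Dict[str, Variation] = {
    "dev": Variation("dev", "sample_orders.csv", 100, False),
    "production": Variation("production", "orders.csv", None, True),
}


def row_count_ok(rows: List[dict]) -> bool:
    """One check used as a functional test in dev and as a monitoring test in production."""
    return len(rows) > 0


def run_recipe(variation: Variation, rows: List[dict]) -> dict:
    """Run the same pipeline logic; only the parameters differ between variations."""
    selected = rows if variation.row_limit is None else rows[: variation.row_limit]
    return {
        "variation": variation.name,
        "source": variation.source,
        "rows_processed": len(selected),
        "test_passed": row_count_ok(selected),
        "published": variation.publish and row_count_ok(selected),
    }
```

With 250 input rows, the `dev` variation in this sketch would process 100 rows and publish nothing, while `production` would process all 250 and publish only if the shared check passes.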

Conclusion

Data mesh is a powerful new paradigm that deals with the complexity of giant, monolithic data systems. As an organizing principle, it focuses on data, architecture and teams, and less on the operational processes that are critically important for agile, error-free analytics. As part of your data mesh strategy, DataOps assists with the process and workflow aspects of data mesh. DataOps automates shared services, preventing duplication of effort among teams. DataOps also addresses some of the complexity associated with domain inter-dependencies and enables the data organization to strike the right balance between central control/governance and local domain independence.

This completes our blog series on the synergy between DataOps and data mesh. If you missed any part of this series, you can return to the first post here: “What is a Data Mesh?”