Part 4: Reviewing the Benefits

This is the final post in DataKitchen’s four-part series on DataOps Observability. Observability is a methodology for providing visibility of every journey that data takes from source to customer value across every tool, environment, data store, team, and customer so that problems are detected and addressed immediately. DataKitchen has released a version of its Observability product, which implements the concepts described in this series. (Part 1) (Part 2) (Part 3)

DataOps Observability Benefits Summary

Jason formed a small team and implemented DataKitchen DataOps Observability. The team built a data journey and monitored a subset of pipelines as a proof of concept. When he presented some initial data to a group of stakeholders, his audience was surprised at the errors revealed, given that only the critical, customer-facing errors had garnered their attention to date. Jason showed them how DataOps Observability could monitor his pipelines, alert him to problems, prompt early fixes, and ultimately prevent many of these errors.

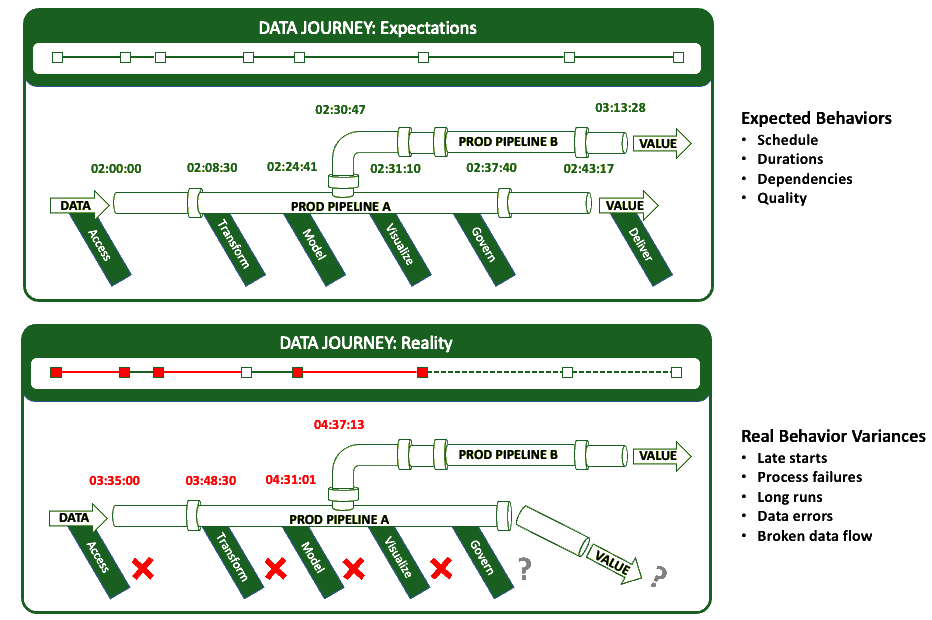

DataOps Observability is your mission control for every journey from data source to customer value, from any development environment into production, across every tool, every team, every environment, and every customer so that problems are detected, localized, and understood immediately.

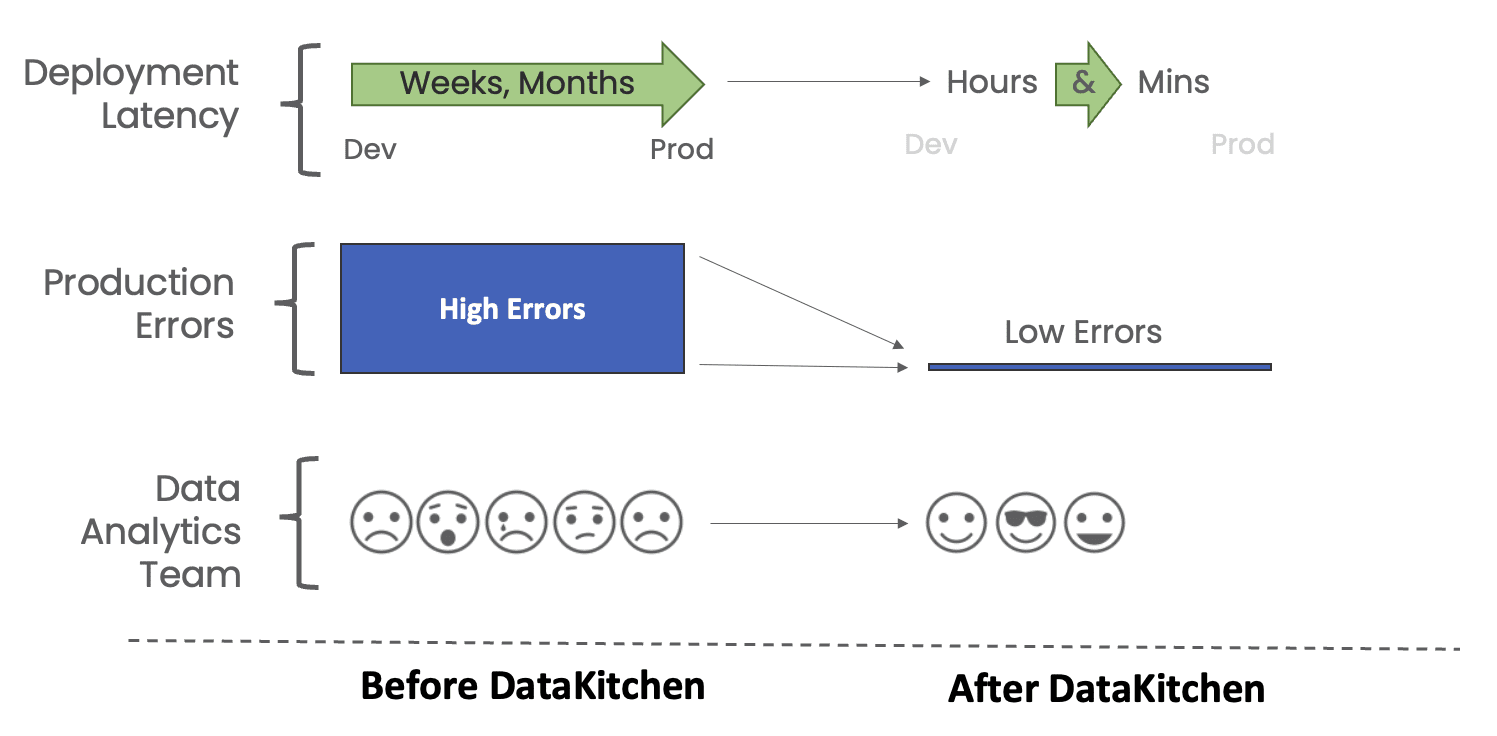

In a world of complexity, failure, and frustration, data and analytics teams need to deliver insight to their customers with no errors and a high rate of change. As in Jason’s experience, the stress and embarrassment of breaking things crowd out the ability to create new insights. In a 2021 survey of 600 data professionals, responses suggested an overwhelming majority are calling for relief. In the survey, 97% reported experiencing burnout, 91% reported frequent requests for analytics with unrealistic or unreasonable expectations, and 87% reported getting blamed when things go wrong.

You don’t have to live with these problems. DataOps Observability provides critical features and benefits.

- Data journeys allow monitoring of every data process from source to customer value.

- Production expectations, including data and tool testing, reduce embarrassing errors to zero.

- Development data and tool testing increase the delivery rate and lower the risk of deploying new analytic insights.

- Historical dashboards enable you to find the root causes of issues.

- An intuitive, role-based user interface allows stakeholders, managers, data engineers, data scientists, analysts, and your business customers to be on the same page.

- Simple connections and an open API enable fast integrations without replacing existing tools.
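To make the idea of a production expectation concrete, here is a minimal sketch in Python. It is not DataKitchen's API: the `Expectation` and `RunEvent` classes, the `check` function, and the `load_orders` step name are all hypothetical, chosen only to show how one step in a data journey might be compared against what was expected of it.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Expectation:
    """Hypothetical expectation for one step in a data journey."""
    step: str
    min_rows: int
    max_duration: timedelta


@dataclass
class RunEvent:
    """Hypothetical record of what a step actually did in production."""
    step: str
    rows: int
    started: datetime
    finished: datetime


def check(expectation: Expectation, event: RunEvent) -> list[str]:
    """Return human-readable violations; an empty list means the run met expectations."""
    problems = []
    if event.rows < expectation.min_rows:
        problems.append(
            f"{event.step}: expected at least {expectation.min_rows} rows, got {event.rows}"
        )
    duration = event.finished - event.started
    if duration > expectation.max_duration:
        problems.append(
            f"{event.step}: ran for {duration}, limit is {expectation.max_duration}"
        )
    return problems


if __name__ == "__main__":
    # Illustrative values only: a nightly load that delivered too few rows and ran long.
    expectation = Expectation("load_orders", min_rows=100, max_duration=timedelta(minutes=10))
    event = RunEvent(
        "load_orders",
        rows=10,
        started=datetime(2023, 1, 1, 2, 0),
        finished=datetime(2023, 1, 1, 2, 30),
    )
    for problem in check(expectation, event):
        print("ALERT:", problem)
```

In a sketch like this, the alert fires when the step finishes, before a customer sees the short or late data, which is the point of setting expectations on production runs.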

To produce rapid, trusted customer insight, you need to start by reducing your team’s hassles and embarrassment while decreasing the time it takes to develop and deliver. You can lower your error rates and achieve your goals by monitoring all data journeys. Do not replace your tools or infrastructure; use the DataKitchen DataOps Observability product on top of those tools. When you find a problem, use the DataKitchen DataOps Automation product to permanently fix it by automating the testing, deployment, and orchestration of all your chosen technologies.

Spend less time worrying about what may go wrong and gain more time to create by observing the entire data journey and taking early action to stay on track.

In part one of this series, you learned about the problems that DataOps Observability addresses, and in part two, you saw how data journeys can help everyone visualize operations across an entire data estate. In part three, you examined the components of effective data journeys. This final part offered a summary of DataOps Observability benefits, perhaps ready-made for a company presentation! (Part 1) (Part 2) (Part 3)