How Data Quality Leaders Can Gain Influence And Avoid The Tragedy of the Commons

Data quality has long been essential for organizations striving for data-driven decision-making. Despite the best efforts of data teams, poor data quality remains a persistent challenge, leading to distrust in analytics, inefficiencies in operations, and costly errors. Many organizations struggle with incomplete, inconsistent, or outdated data, making it difficult to derive reliable insights. Furthermore, data quality leaders often face the challenge of limited influence within their organizations, making it hard to enforce necessary changes. Without proper visibility and control over data processes, these leaders are left addressing the same recurring problems without meaningful long-term solutions.

Addressing these issues requires a systematic approach that identifies and corrects errors, prevents them from occurring, and gives data quality leaders the influence they need to effect change. This article covers three ideas: the ecological idea of the ‘Tragedy Of The Commons’ as a metaphor for the persistent problem of data quality, how data quality leaders can apply Dale Carnegie’s 100-year-old ideas on how to influence people, and how to wrap this improvement process in DataOps iterative improvement.

Data Quality Is An Example Of The ‘Tragedy Of The Commons’

Data quality issues often stem from fragmented ownership, leading to neglect. Organizations treat data as a shared resource, but without clear responsibility, errors accumulate, creating a “Tragedy of the Commons.” The tragedy is that if many people enjoy unfettered access to a finite, valuable resource, they will tend to overuse it and may end up wrecking its value. According to industry surveys, 73% of data practitioners do not trust their data, 60% of data projects fail due to poor data quality, and billions of dollars are lost annually due to data errors. Data owners often focus only on immediate, high-priority issues while missing the systemic challenges of data quality, making it difficult to shift towards long-term improvements. They overgraze their standard data fields, leaving a bare, denuded data landscape.

A shared pasture is overgrazed because each person acts in their short-term interest rather than considering the collective good. Similarly, in data management, different teams use and create or modify data for their specific needs but often do not take responsibility for maintaining its quality for others’ use. This results in degraded data integrity over time, with no single entity accountable for ensuring its reliability.

Misaligned incentives exacerbate this lack of ownership. Business teams prioritize speed and efficiency, often bypassing best practices to meet immediate goals. On the other hand, data engineers and analysts are responsible for maintaining data accuracy but struggle to get buy-in from stakeholders, who do not prioritize data quality until it becomes a crisis.

Compounding the issue, data governance policies tend to be unclear or poorly enforced. While organizations might have data quality guidelines, the absence of robust enforcement mechanisms and accountability means these policies fail to function effectively. Consequently, data quality professionals face a challenging situation—they are tasked with maintaining high data integrity, yet they lack the authority to implement necessary changes.

The Influence Factor: Overcoming Organizational Resistance With Persuasion

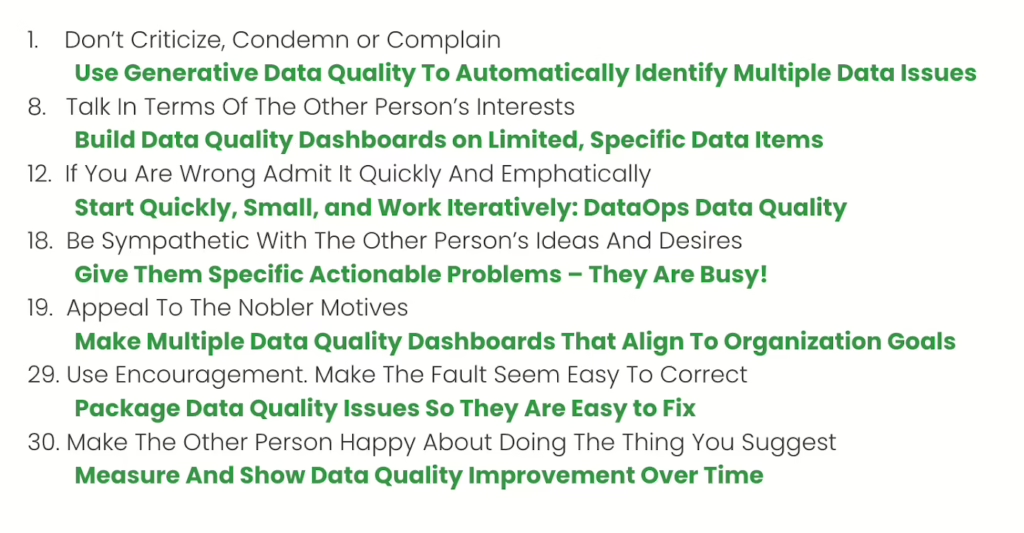

Data quality leaders often have influence but little power—they can identify problems but lack the authority to enforce change. Let’s see how they can take inspiration from Dale Carnegie’s principles on persuasion and leadership. In How to Win Friends and Influence People, Carnegie emphasizes that people are more likely to respond positively when approached with understanding rather than criticism. This is particularly relevant in data quality, where leaders often struggle to gain support for improvements. One of Carnegie’s key principles is, “Don’t criticize, condemn, or complain.” Data professionals frequently encounter resistance when they approach teams with a list of problems rather than solutions. Instead of pointing fingers at errors in reports or data pipelines, they should frame data quality challenges as opportunities for collective improvement, highlighting the benefits of cleaner data for business decisions.

Another essential Carnegie principle is to “Talk in terms of the other person’s interests.” Different stakeholders view data through different lenses. A finance leader is concerned with accurate financial reporting, while a marketing executive is more interested in precise customer segmentation. Data quality leaders must tailor their messaging accordingly, demonstrating how improvements align with each team’s objectives. They can foster greater collaboration and commitment across departments by presenting data quality as an enabler of business success rather than a technical burden.

Another key principle is, “If you are wrong, admit it quickly and emphatically.” Given the complexity of data systems and the number of people handling them, data errors are inevitable. Data quality professionals should acknowledge data issues when they arise rather than attempting to downplay or obscure them. Transparency fosters trust among stakeholders and speeds up corrective actions. Instead of hiding or defending flaws, the team builds credibility by addressing issues openly and committing to resolutions.

Furthermore, Carnegie’s advice to “Use encouragement and make the fault seem easy to correct” is especially relevant. Many data professionals struggle to get buy-in from engineers and business teams when addressing data issues. Instead of overwhelming teams with exhaustive reports filled with errors, they should present issues in a way that makes the solution appear straightforward. This could involve breaking down problems into small, actionable steps and providing clear guidance on resolving them efficiently.

Finally, Carnegie’s principle of “Make the other person happy about doing what you suggest” applies directly to securing collaboration across teams. Data quality improvements should benefit all stakeholders, whether they reduce inefficiencies for engineers, improve forecasting for business leaders, or ensure compliance for regulatory teams. By demonstrating how better data quality leads to smoother workflows, fewer operational disruptions, and more reliable insights, data professionals can motivate others to take ownership of improvements rather than see them as burdens.

A DataOps Approach To Data Quality: Iterative & Impactful

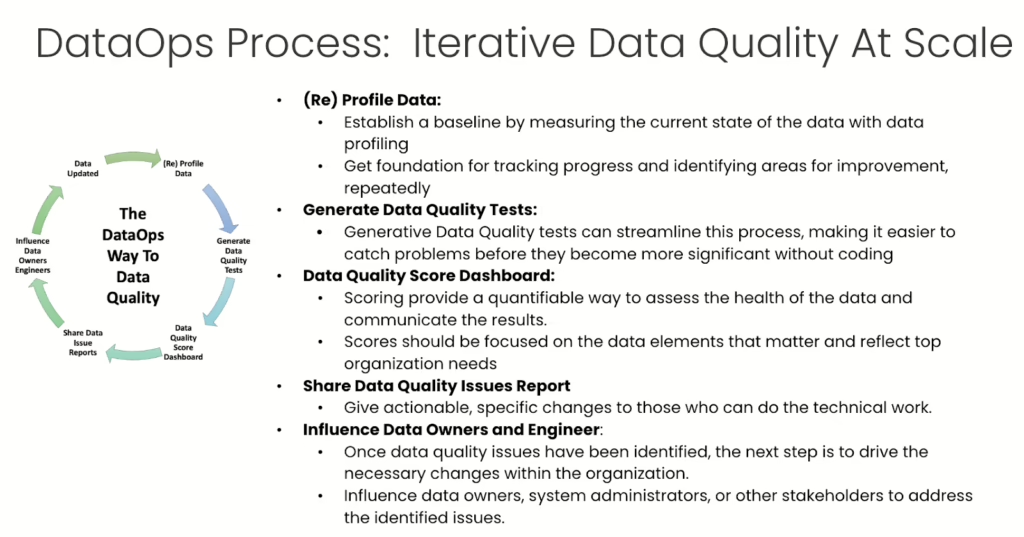

Traditional data quality initiatives often fail due to rigid, time-consuming processes. A better option is an agile, iterative approach that enables teams to start small and act quickly, establishing a working quality process within hours. Rather than spending months manually coding data quality rules, teams can use AI-driven test generation, which significantly reduces effort while maintaining high accuracy. Refining and iterating the tests based on real-world feedback facilitates continuous improvement instead of a static, one-time assessment. Automating data quality insights provides clear and actionable reports that inform decision-makers. By tracking data quality scores over time, leaders can measure progress and demonstrate the impact of their initiatives. This approach ensures that data teams concentrate on small, high-impact data items, enabling them to gain momentum and quickly showcase the value of quality improvements. The key to success is not attempting to boil the ocean but selecting specific, meaningful data elements tied to business priorities and iterating toward better standards.
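To make the idea of tracking data quality scores over time concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the column names (`customer_id`, `signup_date`), the two rules, and the helper names (`QualityTest`, `pass_rates`, `overall_score`, `score_history`) are all hypothetical, and the unweighted average used for the overall score is a simplification. It does not represent how any particular tool computes its scores.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Optional

# A row is a plain dict of column name -> raw string value (or None).
Row = dict[str, Optional[str]]


@dataclass(frozen=True)
class QualityTest:
    """One hypothetical data quality rule applied to a single column."""
    name: str
    column: str
    check: Callable[[Optional[str]], bool]


def not_blank(value: Optional[str]) -> bool:
    """Pass when the value is present and not just whitespace."""
    return value is not None and value.strip() != ""


def is_iso_date(value: Optional[str]) -> bool:
    """Pass when the value parses as an ISO date such as 2024-01-05."""
    if value is None:
        return False
    try:
        date.fromisoformat(value)
        return True
    except ValueError:
        return False


def pass_rates(rows: list[Row], tests: list[QualityTest]) -> dict[str, float]:
    """Return the fraction of rows (0.0 to 1.0) that pass each test."""
    if not rows:
        raise ValueError("cannot score an empty batch")
    return {
        t.name: sum(1 for row in rows if t.check(row.get(t.column))) / len(rows)
        for t in tests
    }


def overall_score(rates: dict[str, float]) -> float:
    """Unweighted mean of the per-test pass rates (a simplifying assumption)."""
    return sum(rates.values()) / len(rates)


def score_history(
    batches: list[tuple[str, list[Row]]], tests: list[QualityTest]
) -> list[tuple[str, float]]:
    """Score each labeled batch in order so progress can be compared over time."""
    return [(label, overall_score(pass_rates(rows, tests))) for label, rows in batches]


if __name__ == "__main__":
    # Start small: two rules on two business-critical columns (assumed names).
    tests = [
        QualityTest("customer_id_present", "customer_id", not_blank),
        QualityTest("signup_date_iso", "signup_date", is_iso_date),
    ]
    week_1 = [
        {"customer_id": "C1", "signup_date": "2024-01-05"},
        {"customer_id": "", "signup_date": "2024-01-06"},
        {"customer_id": "C3", "signup_date": "06/01/2024"},
        {"customer_id": None, "signup_date": None},
    ]
    week_2 = [
        {"customer_id": "C1", "signup_date": "2024-01-05"},
        {"customer_id": "C2", "signup_date": "2024-01-06"},
        {"customer_id": "C3", "signup_date": "2024-01-06"},
        {"customer_id": "C4", "signup_date": None},
    ]
    for label, score in score_history([("week 1", week_1), ("week 2", week_2)], tests):
        print(f"{label}: {score:.1%}")
```

Keeping each rule as a small, named check makes it easy to add or refine one test per iteration, and the per-run score gives a simple number to share with stakeholders when showing progress.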

The Path Forward: Building Influence Through Rapid, Focused Iterations to Improve Data Quality

Better tools and influence strategies are key to overcoming the ‘Tragedy of the Commons’ in data quality. Data quality professionals must find ways to communicate the importance of clean, reliable data in ways that resonate with different stakeholders.

One practical approach is to use Dale Carnegie’s principles of persuasion. For instance, instead of criticizing teams for poor data practices, data leaders should frame their message around how better data benefits everyone. Using language that aligns with different stakeholders’ interests—such as compliance for legal teams, accuracy for finance, and speed for marketing—helps build collaboration and overcome resistance.

Another strategy is to make data quality improvements feel manageable. If teams see data issues as insurmountable, they are less likely to act. Data leaders can gain incremental buy-in by breaking down problems into smaller, more actionable fixes and demonstrating quick wins. Encouragement, rather than blame, goes a long way in fostering a culture where data quality is seen as a shared responsibility instead of a burden.

Ultimately, the solution to data quality challenges lies both in technology and in data leaders’ ability to navigate organizational dynamics, build influence, and advocate for systemic changes. By understanding the behavioral and structural barriers to data quality, they can position themselves as essential to shaping data governance and organizational strategy.

Open Source And Further Information

DataKitchen has built an open-source tool, DataOps Data Quality TestGen, specifically to address the ‘Tragedy Of The Commons’ in data quality. The tool provides AI-driven insights that help you apply Dale Carnegie’s ideas on how to influence people, along with easy-to-use, full-featured data quality testing and built-in DataOps iterative improvement.

- Install DataOps Data Quality TestGen Open Source: https://info.datakitchen.io/install-dataops-data-quality-testgen-today

- Data Quality and Observability Free Certification: https://info.datakitchen.io/data-observability-and-data-quality-testing-certification

- DataOps Data Quality White Paper: https://info.datakitchen.io/white-paper-data-quality-the-dataops-way

- Webinar: Data Quality Scorecards: https://info.datakitchen.io/webinar-form-2025-02-actionable-automated-agile-data-quality-scorecards