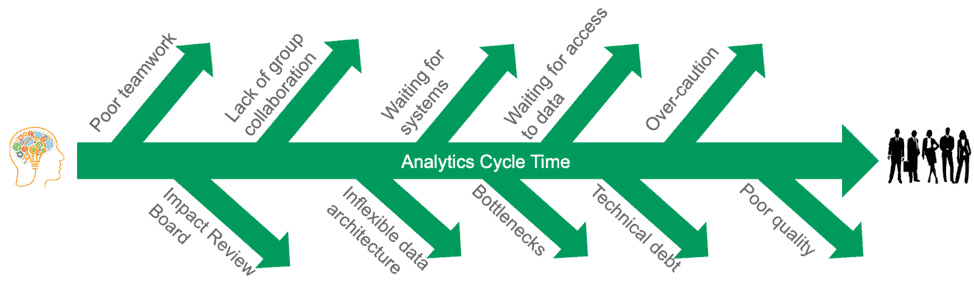

Cloud computing does NOT always deliver increased agility. Migrating from an on-prem database to a cloud database may produce cost, scalability, flexibility, and maintenance benefits. However, the cloud initiative will not deliver agility if the data scientists, analysts and engineers are constantly yanked from development projects in order to fix broken reports and manage data errors. If the impact review board requires four weeks to approve a change, that is four weeks whether the analytics run on-prem or in the cloud. Companies that migrate inefficient, error-prone workflow processes to a cloud toolchain will likely be disappointed when the results underwhelm.

Figure 1: Inefficient and error-prone workflows will remain inefficient and error-prone even if migrated to a cloud-based toolchain.

A cloud migration project itself can benefit from a DataOps approach. DataOps is a methodology that applies Agile Development, DevOps and lean manufacturing to analytics to maximize business agility. DataKitchen provides a DataOps Platform that incorporates these principles and automates workflows alongside your on-prem or cloud toolchain. It can help your data organization virtually eliminate errors, minimize cycle time, and enable seamless collaboration of data team members and their stakeholders. DataKitchen is particularly strong in managing data pipelines that span multiple data centers. It can also effectively modularize a toolchain so data pipelines can be migrated one processing stage or tool at a time. DataKitchen enables enterprises to get the most from a hybrid cloud or multi-cloud initiative.

Mitigate Risk with Parallel Data Pipelines

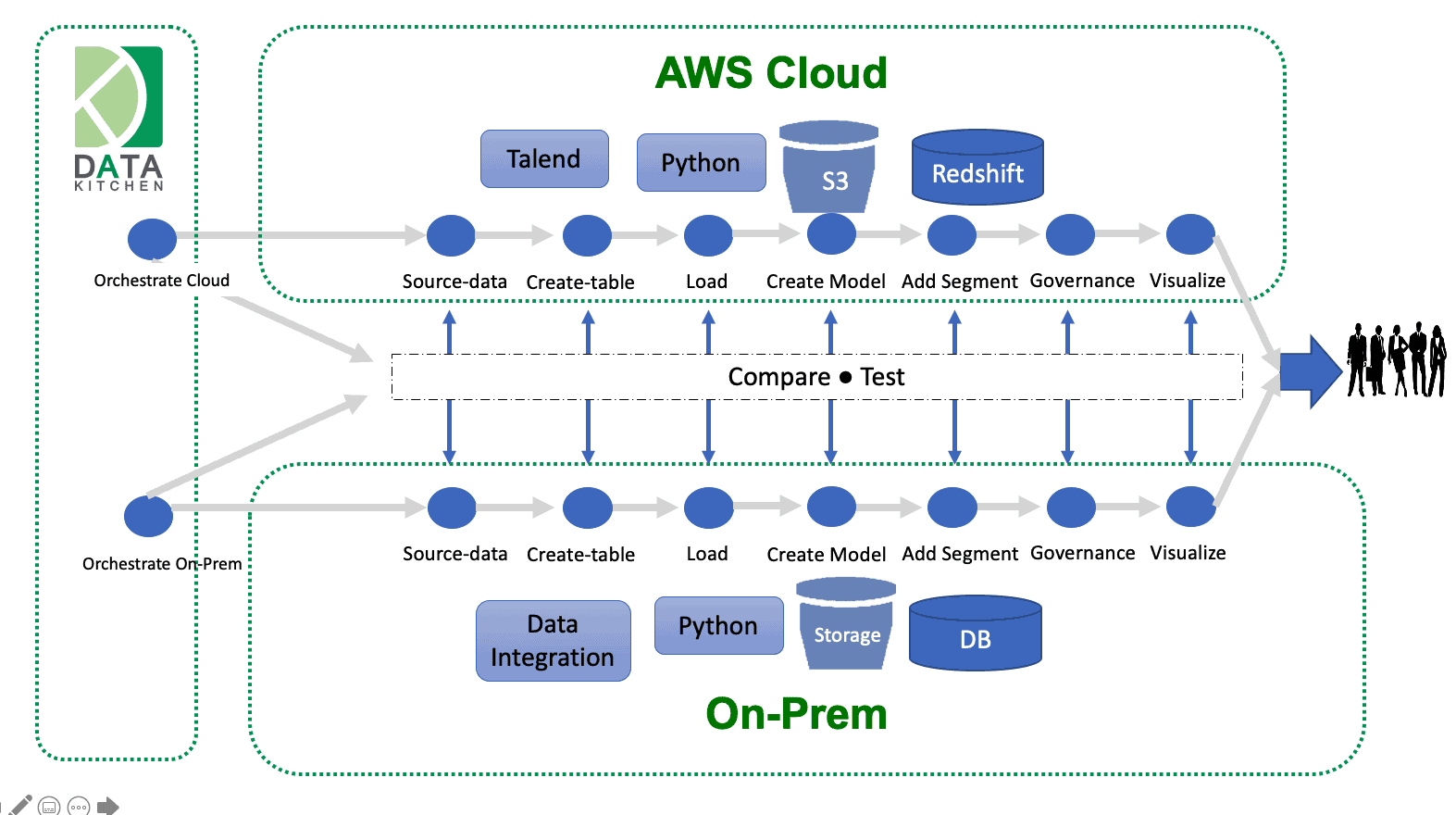

Enterprises may mitigate risk by instantiating cloud data operations while running their legacy on-prem data pipelines in parallel, comparing results after each processing operation. Analytics often embed business logic that is hard to recreate from scratch, so a rebuilt pipeline can silently diverge from the original. DataOps testing helps the team find those discrepancies and address them before they appear in your critical analytics. DataKitchen brings transparency into on-prem and cloud data pipelines, giving the data team a unified view of all of your end-to-end pipelines: the data analytics version of “a single pane of glass.”

With parallel cloud and on-prem data pipelines, the implementation team can decide whether to cut over to cloud operations one processing stage at a time or all at once, depending on a project’s use case and risk profile.

Figure 2: Enterprises may mitigate risk by running cloud and legacy on-prem data pipelines in parallel, comparing results after each processing operation.
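As a rough illustration of comparing results after each processing operation, the sketch below runs a legacy pipeline and its cloud counterpart in lockstep and reports the stages whose outputs differ. It is not DataKitchen's API. The stage functions, the `fingerprint` helper and the sample orders are all hypothetical, and a real comparison would run against the actual data stores rather than in-memory lists.

```python
import hashlib
from typing import Callable

Row = dict[str, object]
Stage = Callable[[list[Row]], list[Row]]


def fingerprint(rows: list[Row]) -> tuple[int, int]:
    """Return (row count, order-independent checksum) for one stage's output."""
    total = 0
    for row in rows:
        digest = hashlib.sha256(repr(sorted(row.items())).encode()).digest()
        # Summing per-row digests makes the checksum independent of row order.
        total = (total + int.from_bytes(digest[:8], "big")) % 2**64
    return len(rows), total


def compare_stage_by_stage(
    data: list[Row],
    legacy_stages: list[tuple[str, Stage]],
    cloud_stages: list[tuple[str, Stage]],
) -> list[str]:
    """Run both pipelines in parallel and name every stage whose outputs differ."""
    mismatches = []
    legacy_rows, cloud_rows = data, data
    for (name, legacy_stage), (_, cloud_stage) in zip(legacy_stages, cloud_stages):
        legacy_rows = legacy_stage(legacy_rows)
        cloud_rows = cloud_stage(cloud_rows)
        if fingerprint(legacy_rows) != fingerprint(cloud_rows):
            mismatches.append(name)
    return mismatches


# Hypothetical stages: the cloud rewrite misses a legacy rule that drops refunds.
def drop_null_amounts(rows: list[Row]) -> list[Row]:
    return [r for r in rows if r["amount"] is not None]


def legacy_filter(rows: list[Row]) -> list[Row]:
    return [r for r in rows if r["amount"] >= 0]  # embedded business logic


def cloud_filter(rows: list[Row]) -> list[Row]:
    return list(rows)  # the refund rule was not recreated


orders = [
    {"id": 1, "amount": 20.0},
    {"id": 2, "amount": None},
    {"id": 3, "amount": -5.0},
]
mismatches = compare_stage_by_stage(
    orders,
    [("clean", drop_null_amounts), ("filter", legacy_filter)],
    [("clean", drop_null_amounts), ("filter", cloud_filter)],
)
print(mismatches)  # ['filter']
```

Checking after every stage, rather than only at the end, points directly at the stage where the embedded business logic was lost.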

Migrate with Confidence

DataOps testing helps ensure that a migration proceeds robustly. In one case that we encountered, a company moved its data operations to the cloud only to hear reports from users that the data looked wrong. They found that their 6-billion-row database had only partly transferred. DataOps prescribes testing inputs, outputs and business logic at each stage of processing. It verifies code and configuration files by automating impact review. It also verifies streaming data with data tests, including location balance, historical balance and statistical process control. In this case, a simple location balance test, such as a row-count test, would have easily identified the missing data. The data team would have received an alert and remediated the issue before it affected user reports.
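The three kinds of data test named above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not DataKitchen's implementation: the function names, the 10% tolerance and the three-sigma limit are hypothetical choices, and the transferred row count is invented for the example, since the case above only says the database was partly transferred.

```python
from statistics import mean, stdev


def location_balance(source_rows: int, target_rows: int) -> bool:
    """Row counts must match between the source and the migrated target."""
    return source_rows == target_rows


def historical_balance(current: float, previous: float, tolerance: float = 0.10) -> bool:
    """The current value must stay within a fractional tolerance of the previous run."""
    if previous == 0:
        return current == 0
    return abs(current - previous) / abs(previous) <= tolerance


def within_control_limits(value: float, history: list[float], sigmas: float = 3.0) -> bool:
    """SPC-style check: value lies within mean +/- sigmas * stdev of past runs.

    Requires at least two historical points, because stdev needs them.
    """
    return abs(value - mean(history)) <= sigmas * stdev(history)


# Hypothetical figures: 6 billion rows at the source, fewer at the target.
results = {
    "location_balance": location_balance(6_000_000_000, 4_100_000_000),
    "historical_balance": historical_balance(current=1050.0, previous=1000.0),
    "control_limits": within_control_limits(130.0, [100.0, 102.0, 98.0, 101.0, 99.0]),
}
failed = [name for name, passed in results.items() if not passed]
print(failed)  # ['location_balance', 'control_limits']
```

In practice, a failed test would raise an alert or halt the pipeline before the data reaches user reports.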

Keep Teams Coordinated

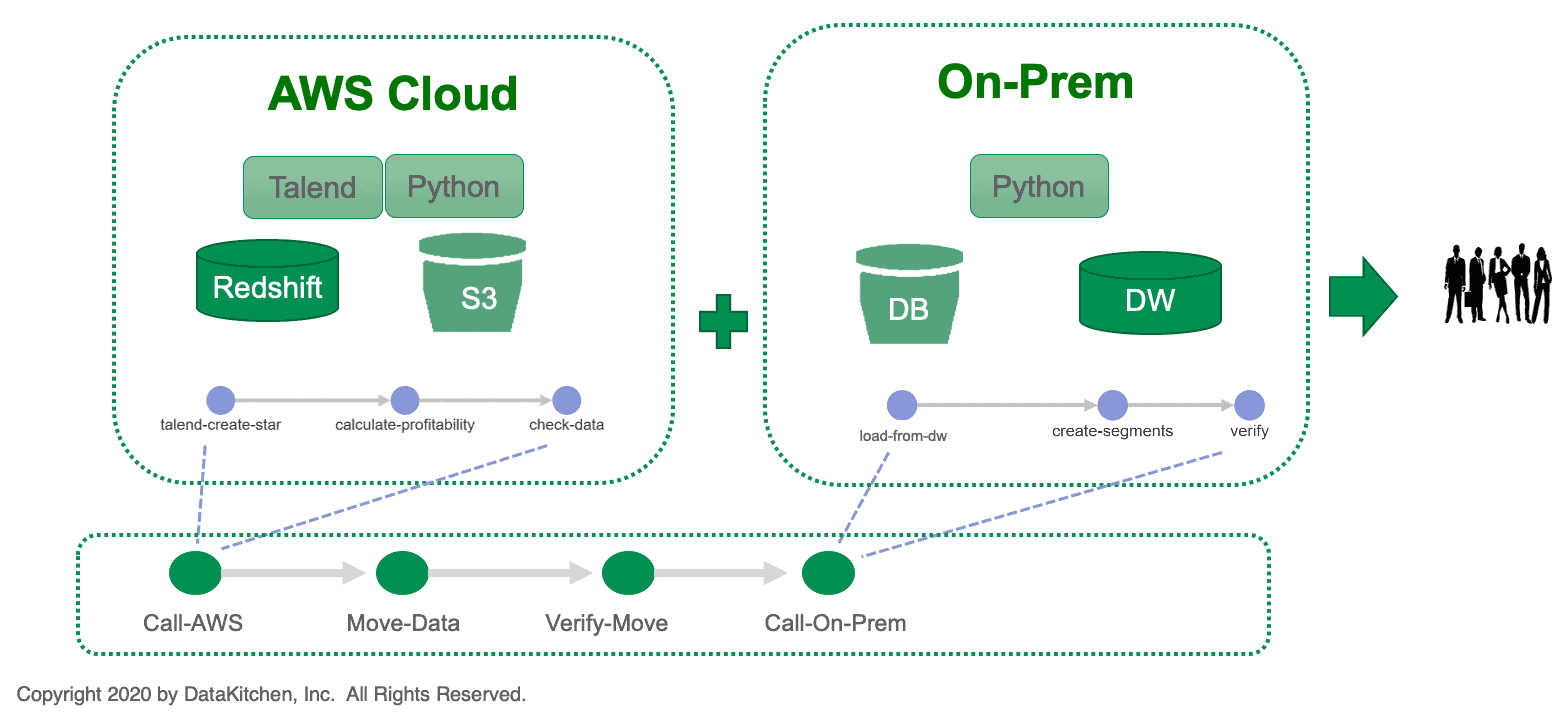

DataKitchen automates workflows that enable your data teams to collaborate more efficiently. If you have an on-prem team and a cloud team that need to stay coordinated, the groups will be able to continue making changes without interfering with each other’s productivity. Imagine that you are delivering a dashboard to the CEO. Some of it comes from the on-prem team and some from the cloud team. The teams may be in different locations with minimal communication flowing back and forth. These two teams want to work independently, but their work has to come together seamlessly.

Task coordination occurs intrinsically when teams use DataKitchen, enabling the groups to strike the right balance between centralization and freedom. Figure 3 shows a cloud team and an on-prem team each managing their own data pipelines. DataKitchen enables each team to manage its local data pipeline and toolchain. The output of each group merges together under the control of a top-level pipeline. The model also applies to multi-cloud implementations.

Figure 3: DataKitchen enables teams using different toolchains to stay coordinated.
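The shape of this arrangement can be sketched as a top-level function that runs each team's pipeline and merges the outputs. This illustrates the pattern only and does not show how DataKitchen implements it. The pipeline functions, metric names and values are hypothetical.

```python
from typing import Callable

Pipeline = Callable[[], dict[str, float]]


def on_prem_pipeline() -> dict[str, float]:
    """Owned by the on-prem team, which is free to change its internals."""
    return {"revenue": 1200.0}


def cloud_pipeline() -> dict[str, float]:
    """Owned by the cloud team, built on its own toolchain."""
    return {"web_sessions": 5400.0}


def top_level_pipeline(team_pipelines: dict[str, Pipeline]) -> dict[str, float]:
    """Run each team's pipeline and merge the outputs into one dashboard dataset."""
    dashboard: dict[str, float] = {}
    for team, run in team_pipelines.items():
        output = run()
        overlap = dashboard.keys() & output.keys()
        if overlap:
            # Two teams publishing the same metric is a coordination error.
            raise ValueError(f"{team} redefines metrics: {sorted(overlap)}")
        dashboard.update(output)
    return dashboard


dashboard = top_level_pipeline({"on_prem": on_prem_pipeline, "cloud": cloud_pipeline})
print(dashboard)  # {'revenue': 1200.0, 'web_sessions': 5400.0}
```

Each team changes only its own function, and the top-level pipeline is the single place where the contract between the teams is enforced.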

Development and Test Environment Agility

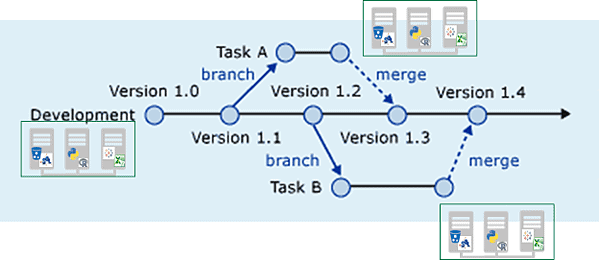

Analysts need a controlled environment for their experiments, and data engineers need a place to develop outside of production. Instead of talking to the hyper-dominant database salesperson for more licenses or going through a long procurement process to buy new hardware, teams can turn on compute, storage and software tools in the cloud with an API command. You can create on-the-fly development and testing environments that accurately reflect production and have test data that is accurate, secure, and scrubbed. A key feature that enables agility is the ability to spin up hardware and software infrastructure on demand. A common misconception is that on-demand infrastructure is all that you need. Don’t be misled by the hype! Cloud infrastructure is an essential ingredient of agility, but to maximize agility you need to implement the ideas in the DataOps Manifesto, backed by a technical platform like DataKitchen.

Figure 4: DataKitchen development environments tightly couple to the branches and merges of a source control tree so you can manage all your parallel analytics development efforts.

Conclusion

Companies choose to migrate applications to the cloud in order to be more agile, but there is more to business agility than on-demand infrastructure. Business agility derives from agile workflows. In data analytics this means implementing DataOps to reduce errors, minimize cycle time and improve collaboration within the data organization. DataKitchen works alongside your toolchain and automates your workflows to bring the benefits of DataOps to your analytics teams. With robust and efficient workflows, you’ll maximize your company’s business agility, whether on-prem, in the cloud, or a mix of both.