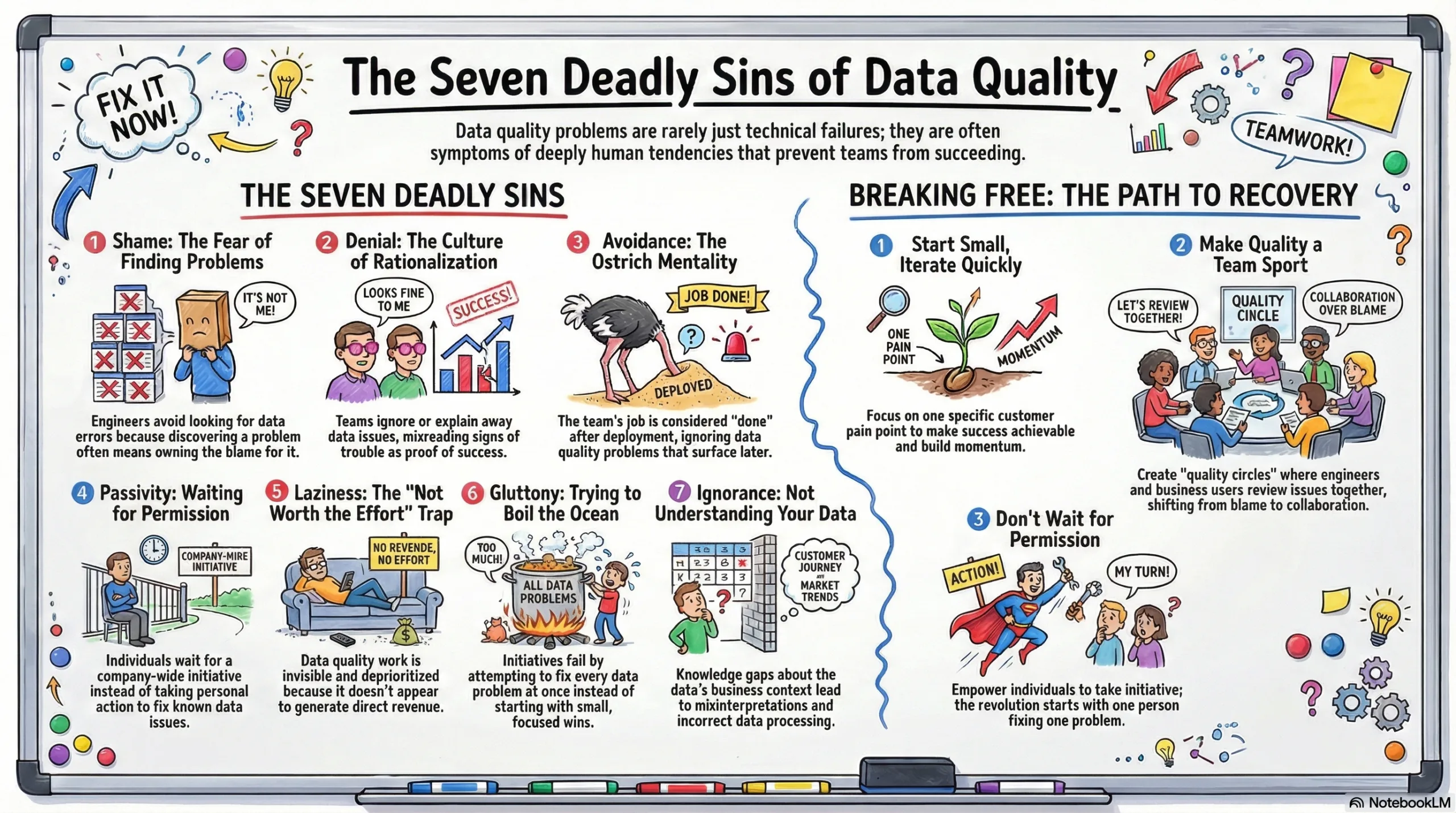

Data quality problems don’t just appear out of nowhere. They fester in organizational cultures where dysfunction becomes normalized, where warning signs are ignored, and where the path of least resistance leads to ever-growing data debt. After years of working with data teams across industries, we’ve identified seven behavioral patterns that consistently undermine data quality initiatives. Like the classical deadly sins, these aren’t simply technical failures—they’re deeply human tendencies that prevent teams from achieving the data quality their organizations desperately need.

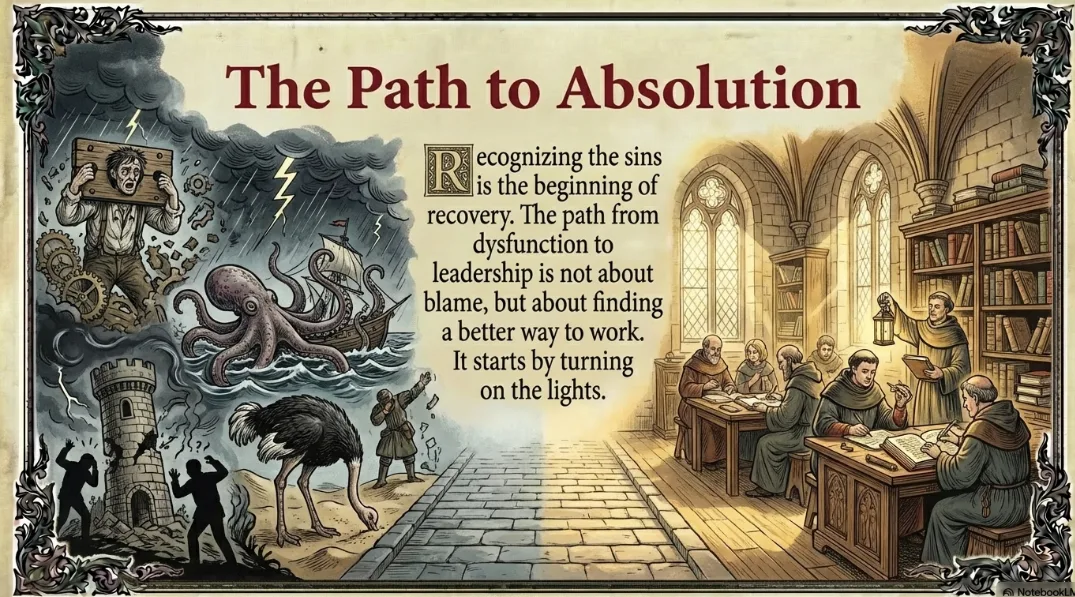

Understanding these sins is the first step toward overcoming them. Each represents a common trap that even the most well-intentioned data teams fall into. The good news? Recognition is the beginning of recovery, and there are practical paths forward for teams willing to confront these challenges head-on.

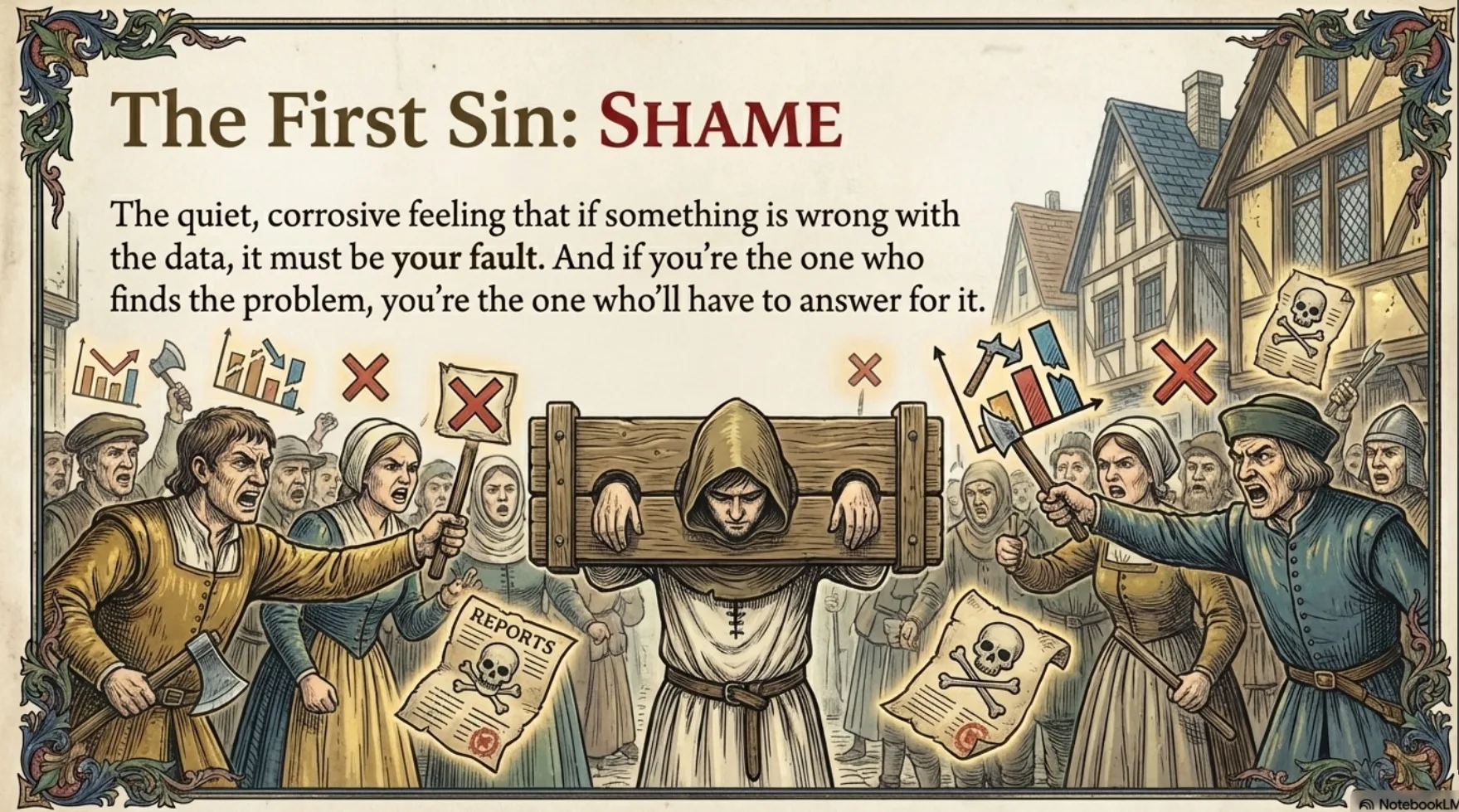

1. Shame: The Fear of Finding Problems

Here’s something we don’t talk about enough in data engineering: shame. The quiet, corrosive feeling that if something is wrong with the data, it must be your fault. And if you’re the one who finds the problem, you’re the one who’ll have to answer for it. As explored in The Data Errors Shame Game, this shame creates a strange and painful paradox: the very people best positioned to catch data quality issues often develop an unspoken preference not to look too closely. Not because they don’t care, but because they’ve learned that discovering a problem means owning the blame for it.

When data quality responsibility lives entirely within the engineering team, every error feels personal. Engineers are expected to anticipate problems before they happen, catch them instantly when they do, and fix them without anyone noticing there was ever an issue. Over time, this creates a defensive posture: if I don’t find the error, I can’t be blamed for it. The antidote to shame is shared visibility. When data engineers and business users look at quality problems together, the dynamic shifts from “what did you miss?” to “what should we tackle first?” Building quality circles where stakeholders regularly review data issues together dissolves the isolation that makes shame thrive.

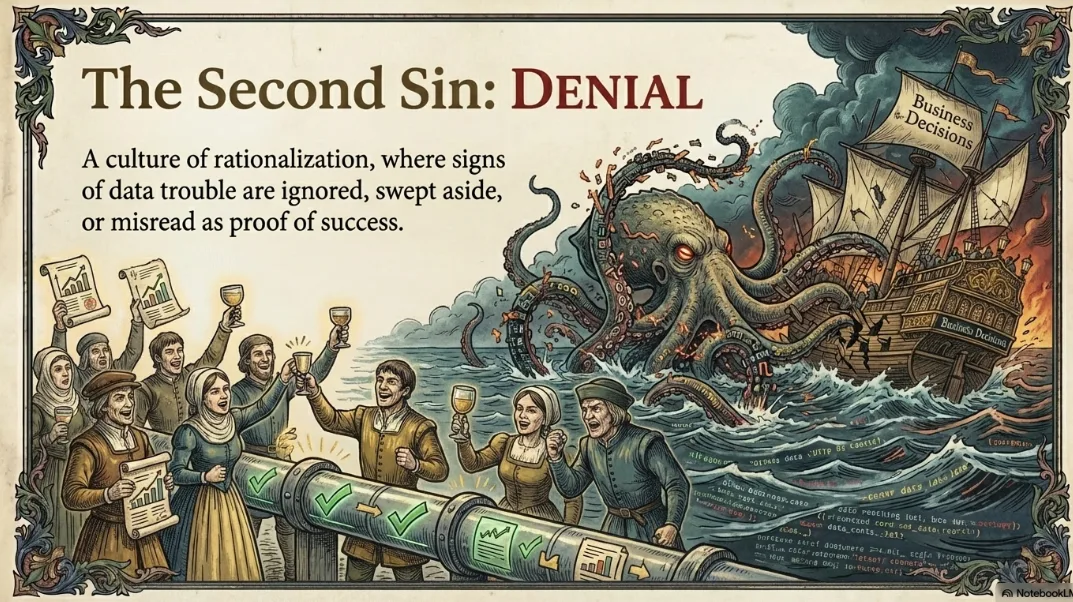

2. Denial: The Culture of Rationalization

In many organizations, data quality problems fester in the shadows: ignored, rationalized, or swept aside with confident-sounding statements that mask deeper dysfunction. As described in Is Your Team in Denial about Data Quality?, teams often build a culture of rationalization where signs of trouble are misread as proof of success. A pipeline ran “all green”? That doesn’t mean the data inside was correct. “I haven’t heard a single complaint”? That usually means users have stopped expecting anything better or have worked around the data team entirely.

The woman on the phone saying “The users know about that—they have a workaround!” might be trying to help, but she’s normalizing failure. Workarounds don’t fix broken data; they institutionalize it. Confidence is not a substitute for verification—manual processes, however well-intentioned, are rarely repeatable, scalable, or trustworthy. Breaking the cycle of denial starts with playing “Data Quality Denial Bingo” with your team: printing out common rationalizations and checking off every statement team members have heard—or said themselves—in the past month. Use the laughter and mild embarrassment that follows as a gateway to having a serious conversation about your data quality practices.

3. Avoidance: The Ostrich Mentality

Your team has gone full ostrich. Heads buried deep in the sand, convinced that if they can’t see the production data errors, those errors must not exist. The pipeline is deployed. The tests passed. Job done, right? As examined in The Ostrich Problem: Your Data Team Thinks Their Job Ends at Deployment, this “deploy and forget” mindset is one of the most common, and most corrosive, patterns in data engineering teams. The team sees their job as done once pipeline code is in production. They don’t feel responsible for data quality problems that pop up after deployment.

The frustrating part? Your team isn’t lazy or incompetent—they’re just overwhelmed with development work and genuinely don’t realize how bad things have gotten downstream. The ostrich approach feels safe when you’re already buried in sprint work. The fix starts with gathering the facts: survey end customers about their trust in the data, count recent data errors from tickets and Slack threads, and use tools to score your data quality. Share the results with your team. Most engineers don’t want to ship bad data; they just don’t have visibility into what’s happening after deployment. Turn on the lights, and the ostriches will lift their heads.

4. Passivity: Waiting for Permission

Picture this: You’re sitting in yet another meeting where someone asks, “Can we trust this data?” and the room falls silent. If you’re nodding along, you’ve identified yourself as the perfect candidate to become your organization’s data quality champion. As we discussed in The Data Quality Revolution Starts with You, data quality transformation doesn’t begin with company-wide initiatives or sweeping organizational changes. It starts with a single empowered individual who decides that enough is enough—and that person could be you, whether you’re a data engineer debugging pipeline issues at 2 AM or a data leader watching your team struggle.

The magic happens when you stop waiting for permission and start leveraging your personal influence. You already have more power than you think. You know which datasets cause the most headaches, which stakeholders ask the toughest questions, and which processes break down most frequently. This insider knowledge is your superpower. Instead of attempting to fix every data problem in your organization, focus on a specific end customer with a specific pain point. Choose one customer, understand their world, and make their life measurably better. When you transform someone’s work life by fixing their data headaches, you’re recruiting a champion who will advocate for your initiatives.

5. Laziness: The “Not Worth the Effort” Trap

Data quality work is largely invisible. When everything is running smoothly, no one notices the effort behind it. But everyone notices the moment something breaks—when a report is wrong, or a dashboard fails. As discussed in Why Data Quality Isn’t Worth The Effort, this creates a dynamic where data teams are expected to prevent problems without being seen, making it hard to advocate for the time and resources required to do the job well. Data quality efforts are often deprioritized because they don’t directly generate revenue.

Data environments are constantly changing: new data sources are introduced, pipelines are updated, and business logic evolves. These changes often occur without proper cross-team communication, leaving data engineers and analysts to discover issues only after they’ve caused damage. The result is teams stuck playing defense, constantly reacting to problems rather than proactively preventing them. The key insight is that automation doesn’t have to be overwhelming. Tools that automatically generate tests based on the structure and behavior of the data itself can dramatically simplify the process, shifting data quality from a reactive chore to a proactive, integrated part of the development process.

6. Gluttony: Trying to Boil the Ocean

Here’s where most data quality initiatives go wrong: they try to boil the ocean. Improving data quality can feel overwhelming—there are so many things to fix and so many processes to improve. Where do you even start? As outlined in A DataOps Way To Data Quality, the answer lies in starting small, iterating quickly, and treating every improvement as a test or experiment. Instead of attempting to fix every data problem in your organization at once, focus on a specific pain point with a specific customer.

This laser focus does two things: it makes success achievable, and it creates visible wins that build momentum for bigger changes. Think of it like renovating a house: you wouldn’t tear down every wall at once. Instead, you’d start with one room, perfect the process, then apply those lessons to the next space. DataOps principles provide the roadmap: launch a simple data quality check, measure its impact, learn from what works, then iterate. This approach feels less intimidating than traditional “big bang” implementations and delivers results faster.
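What might “a simple data quality check” look like in practice? Here is a minimal sketch in plain Python, assuming one customer’s problem table has been loaded as a list of dictionaries. The `CheckResult` class, the `check_not_null` function, and the sample `orders` rows are all hypothetical names invented for illustration, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    rows_checked: int
    rows_failed: int

    @property
    def failure_rate(self) -> float:
        # Guard against empty batches so the rate is always defined.
        return self.rows_failed / self.rows_checked if self.rows_checked else 0.0


def check_not_null(rows: list[dict], column: str) -> CheckResult:
    """Count rows where `column` is missing, None, or an empty string."""
    failed = sum(1 for row in rows if row.get(column) in (None, ""))
    return CheckResult(f"not_null:{column}", len(rows), failed)


# Hypothetical sample batch for one customer's problem table.
orders = [
    {"order_id": 1, "customer_email": "a@example.com"},
    {"order_id": 2, "customer_email": None},
    {"order_id": 3, "customer_email": ""},
    {"order_id": 4, "customer_email": "d@example.com"},
]

result = check_not_null(orders, "customer_email")
print(f"{result.name}: {result.rows_failed}/{result.rows_checked} failed "
      f"({result.failure_rate:.0%})")
```

Recording the failure rate on every run gives you the “measure its impact” number: if it drops after a fix, you have a visible win to share with that customer.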

7. Ignorance: Not Understanding Your Data

The most fundamental sin is simply not understanding the data you’re responsible for. As explored in Data Quality When You Don’t Understand the Data, knowledge gaps—a lack of comprehensive understanding of the data being handled and the business context it serves—can lead to misinterpretations and incorrect data processing. This knowledge deficit can stem from insufficient training, high turnover rates, or poor knowledge transfer practices. When knowledge is concentrated among a few individuals rather than distributed across the team, it creates risky dependencies.

Poor documentation exacerbates this issue, making it difficult for new team members to get up to speed or for others to fill in during absences. In fast-paced environments, teams often prioritize immediate deliverables over long-term quality improvements. This lack of time for collaboration and learning can lead to shortcuts that compromise data quality. The path forward involves building institutional knowledge through data profiling, creating documentation that travels with the data, and establishing quality circles where cross-functional teams regularly discuss data issues and share context.

Acceptance And Recovery

Understanding these sins is critical because recognition is the first step toward recovery. These behaviors explain why, despite the availability of tools and methodologies, data quality remains a persistent struggle for many organizations.

- They Prevent Proactivity: These sins trap teams in a reactive “firefighting” mode, where they spend significant time (up to 40%) responding to defects rather than building new value.

- They Erode Trust: When these sins lead to poor data quality, they undermine customer trust and confidence in decision-making, potentially costing organizations millions annually.

- They Mask Structural Issues: Sins like Denial and Ignorance hide the fact that technical teams and domain experts often operate in silos, speaking different languages and failing to share context.

To solidify this concept, think of your data infrastructure like a high-performance sports car.

If the mechanics (data engineers) are afraid to open the hood because they might be blamed for what they find (Shame), or if they ignore a rattling noise because “the radio still works” (Denial), the car will eventually break down on the highway. If they believe their job ends the moment the car leaves the garage (Avoidance) or refuse to perform maintenance because no one applauds an oil change (Laziness), the engine will fail. The Seven Sins are the bad habits of the mechanics that guarantee the car will crash, regardless of how expensive or well-designed the vehicle originally was.

Breaking Free from the Seven Sins

The path from data quality dysfunction to data quality leadership isn’t as long as you think. It starts with one person making one small improvement for one specific customer. From there, it’s about building momentum, measuring impact, and expanding your influence one success at a time. The tools are available—including open-source options that remove budget barriers—the methodologies are proven, and the need is obvious.

Getting your team to overcome these seven deadly sins isn’t about shame or blame—it’s about showing them there’s a better way. When they can spot data problems before customers do, when the same issues stop breaking things week after week, and when those dreaded stakeholder calls turn into the occasional “hey, nice work”—suddenly, caring about data quality doesn’t feel like a burden anymore. It feels like the thing that makes their jobs less stressful. Your organization’s data quality transformation is waiting for someone to take the first step.

WANT TO LEARN MORE? Join the webinar: https://info.datakitchen.io/webinar-seven-deadly-sins-of-data-quality

Frequently Asked Questions (TL;DR)

What Are The Seven Sins Of Data Quality And Where Can I Learn More?

- Shame (background: shame game blog https://datakitchen.io/the-data-errors-shame-game-why-data-engineers-avoid-harsh-truths/ )

- Denial (background: denial game blog https://datakitchen.io/is-your-team-in-denial-of-data-quality/ )

- Avoidance (background: ostrich blog https://datakitchen.io/the-ostrich-problem-your-data-team-thinks-their-job-ends-at-deployment/ )

- Passivity (background: it starts with you blog https://datakitchen.io/the-data-quality-revolution-starts-with-you/ )

- Laziness (background: Uncle Chip 1 blog & video https://datakitchen.io/why-data-quality-isnt-worth-the-effort/ )

- Gluttony (background: start small, the DataOps way to data quality https://info.datakitchen.io/white-paper-data-quality-the-dataops-way )

- Ignorance (background: Uncle Chip 3 blog & video https://datakitchen.io/data-quality-when-you-dont-understand-the-data-data-quality-coffee-with-uncle-chip-3/ )

How Does DataOps Data Quality TestGen Help?

DataKitchen’s DataOps TestGen is explicitly designed to counter the “Seven Deadly Sins of Data Quality” not just by fixing technical bugs, but by addressing the behavioral and cultural root causes of these sins. By automating the heavy lifting of testing and profiling, it removes the barriers that allow these dysfunctions to thrive.

Here is how TestGen addresses each specific sin:

1. Curing Ignorance (Not Understanding Your Data)

Ignorance creates a catch-22: teams feel they cannot test data they do not understand, so they do nothing.

How TestGen Helps: It does not require you to know the data before testing it. TestGen begins with a profiling step that reads your data column by column to learn its shape, content, and structure.

The Mechanism: It uses this profile to infer rules, building a baseline of what “healthy” data looks like. It automatically flags 27 kinds of hygiene problems and potential PII (Personally Identifiable Information) without the user needing to write a single line of SQL or Python. This effectively “turns on the lights” for teams who are operating in the dark.
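To make the idea of column-by-column profiling concrete, here is a minimal sketch in plain Python. It is not TestGen’s implementation; it only illustrates the general technique under simple assumptions (rows held in memory as dictionaries, hashable values). The names `profile_column` and `profile_table` and the sample rows are hypothetical.

```python
from collections import Counter


def profile_column(values: list) -> dict:
    """Summarize one column: null rate, distinct count, dominant type, range."""
    non_null = [v for v in values if v is not None]
    type_counts = Counter(type(v).__name__ for v in non_null)
    # bool is a subclass of int in Python, so exclude it from numeric stats.
    numeric = [v for v in non_null
               if isinstance(v, (int, float)) and not isinstance(v, bool)]
    return {
        "row_count": len(values),
        "null_rate": 1 - len(non_null) / len(values) if values else 0.0,
        "distinct_count": len(set(non_null)),  # assumes hashable values
        "dominant_type": type_counts.most_common(1)[0][0] if type_counts else None,
        "min": min(numeric) if numeric else None,
        "max": max(numeric) if numeric else None,
    }


def profile_table(rows: list[dict]) -> dict:
    """Profile every column that appears in any row."""
    columns = sorted({key for row in rows for key in row})
    return {col: profile_column([row.get(col) for row in rows]) for col in columns}


# Hypothetical sample rows standing in for a real table.
rows = [
    {"id": 1, "age": 34, "country": "US"},
    {"id": 2, "age": None, "country": "US"},
    {"id": 3, "age": 51, "country": "DE"},
    {"id": 4, "age": 29, "country": None},
]

profile = profile_table(rows)
for column, stats in profile.items():
    print(column, stats)
```

Even a profile this small answers questions a new team member would otherwise have to ask someone: which columns have gaps, which look like keys, and what range of values counts as normal.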

2. Overcoming Laziness (The “Not Worth the Effort” Trap)

Teams often deprioritize data quality because writing tests is tedious, invisible work that doesn’t generate revenue.

How TestGen Helps: It automates the most labor-intensive part of the process: writing the tests.

The Mechanism: TestGen can instantly generate hundreds of quality checks based on the data’s structure. By automatically producing 80% of the necessary test coverage (such as null checks, value ranges, and unique constraints), it transforms a “reactive chore” into a manageable, proactive process. It renders the excuse “we don’t have time” invalid.
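The general idea of generating tests from the data itself can be sketched in a few lines of plain Python. This is an illustration only, not how TestGen works internally: the functions `infer_rules` and `run_rules`, the three rule names, and the sample batches are hypothetical, and the inference heuristics are deliberately naive. Inferred rules like these are candidates for review, not guarantees.

```python
def infer_rules(baseline: list[dict]) -> list[tuple]:
    """Derive (rule, column, params) tuples from a trusted baseline batch."""
    rules = []
    columns = sorted({key for row in baseline for key in row})
    for col in columns:
        values = [row.get(col) for row in baseline]
        non_null = [v for v in values if v is not None]
        if not non_null:
            continue  # nothing to learn from an all-null column
        if len(non_null) == len(values):
            rules.append(("not_null", col, None))
        if len(set(non_null)) == len(values):
            # Looks like a key; a range rule here would flag every new id.
            rules.append(("unique", col, None))
        elif all(isinstance(v, (int, float)) and not isinstance(v, bool)
                 for v in non_null):
            rules.append(("in_range", col, (min(non_null), max(non_null))))
    return rules


def run_rules(rules: list[tuple], batch: list[dict]) -> dict:
    """Return the number of failing rows per rule for a new batch."""
    failures = {}
    for rule, col, params in rules:
        values = [row.get(col) for row in batch]
        non_null = [v for v in values if v is not None]
        if rule == "not_null":
            bad = len(values) - len(non_null)
        elif rule == "unique":
            bad = len(non_null) - len(set(non_null))
        else:  # in_range
            low, high = params
            bad = sum(1 for v in non_null if not low <= v <= high)
        failures[f"{rule}:{col}"] = bad
    return failures


# Hypothetical baseline and new batch.
baseline = [
    {"id": 1, "amount": 10.0, "status": "paid"},
    {"id": 2, "amount": 25.5, "status": "paid"},
    {"id": 3, "amount": 10.0, "status": "open"},
]
new_batch = [
    {"id": 4, "amount": 12.0, "status": "paid"},
    {"id": 4, "amount": -3.0, "status": None},
    {"id": 5, "amount": None, "status": "open"},
]

rules = infer_rules(baseline)
failures = run_rules(rules, new_batch)
for name, count in failures.items():
    print(f"{name}: {count} failing row(s)")
```

Nobody wrote a test by hand here: the null, uniqueness, and range checks all came from the baseline. A real tool would use far richer heuristics, but the shift is the same, from writing tests to reviewing them.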

3. Breaking Denial (The Culture of Rationalization)

Teams often rationalize problems (“users have a workaround”) or rely on “wishful thinking” because they lack concrete proof of failure.

How TestGen Helps: It replaces opinions and gut feelings with cold, hard evidence.

The Mechanism: In less than an hour, TestGen can generate a data quality dashboard that provides real numbers and error counts. This creates an objective source of truth that forces the team to acknowledge the reality of the data’s condition, making it impossible to misread signs of trouble as proof of success.

4. Stopping Avoidance (The Ostrich Mentality)

The “deploy and forget” mindset occurs when engineers bury their heads in the sand, assuming that if they don’t look for production errors, those errors don’t exist.

How TestGen Helps: It makes the invisible visible, forcing the “ostrich heads” out of the sand.

The Mechanism: By automating production monitoring, TestGen ensures that data quality is measured continuously after deployment. It shifts the team from a reactive “firefighting” mode (which can consume up to 40% of their time) to a proactive mode where they catch issues before customers do.

5. Eliminating Shame (The Fear of Finding Problems)

Engineers often avoid looking for errors because they fear being blamed for them, or they fear being asked why they “missed” a test.

How TestGen Helps: It shifts the dynamic from individual blame to collaborative quality.

The Mechanism: Because the tool generates the tests automatically, engineers are not shamed for “missing” a test they couldn’t have predicted. Furthermore, TestGen provides shareable dashboards that allow business stakeholders to see the quality status directly. This removes the engineer from the lonely, uncomfortable role of being the sole messenger of bad news and enables the team to prioritize fixes together.

6. Defeating Passivity (Waiting for Permission)

Individuals often wait for a massive company-wide initiative or budget approval before addressing data quality.

How TestGen Helps: It lets the “empowered individual” act immediately.

The Mechanism: As a free, open-source tool that runs locally or on-premises, TestGen removes the barriers of budget approvals and procurement processes. A single engineer can download it, connect it to a dataset, and demonstrate value in minutes, effectively starting a “revolution” without needing permission from executives.

7. Avoiding Gluttony (Boiling the Ocean)

Initiatives fail when teams try to fix every data problem in the organization at once.

How TestGen Helps: It facilitates the DataOps approach of starting small and iterating.

The Mechanism: While TestGen can assess thousands of tables, it encourages users to start with a “minimum viable” quality process. Users are urged to pick a few problem tables, generate a baseline, and get a process working (“70% right”) immediately rather than waiting for a perfect solution. This prevents the paralysis that comes from trying to fix the entire data estate simultaneously.