What Is a Data Journey? What Is DataOps Observability?

Most Commonly Asked Questions

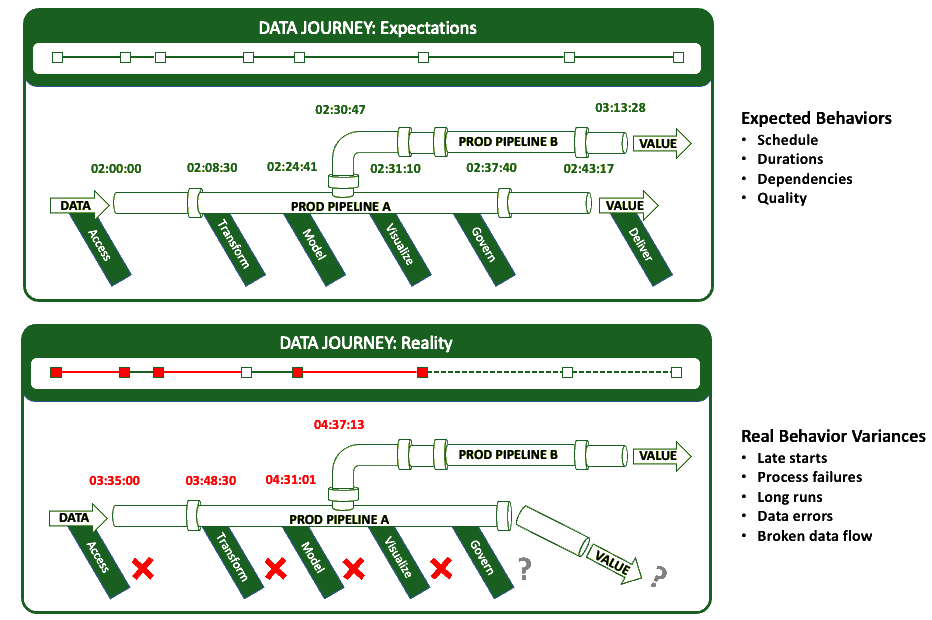

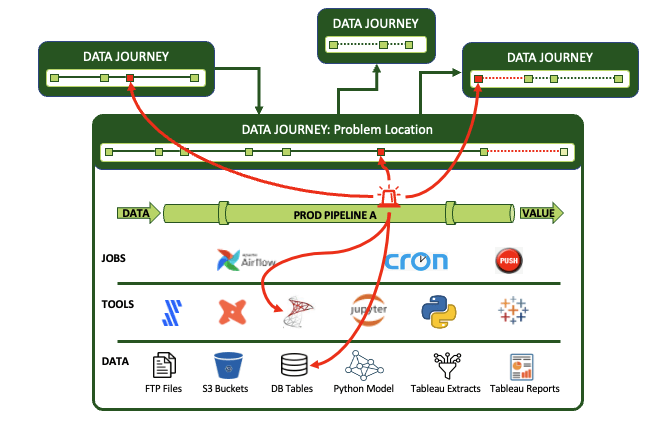

A Data Journey represents all the complexity in your data estate: data, tools, hardware, timeliness, etc., so you can monitor and set expectations. A DataOps Observability product monitors, tests, alerts, and analyzes your data estate in real-time. It provides a view of every Data Journey from data source to customer value, from any team development environment into production, across every tool, team, environment, and customer so that problems are detected, localized, and understood immediately.

Why Observe Your Data Journey?

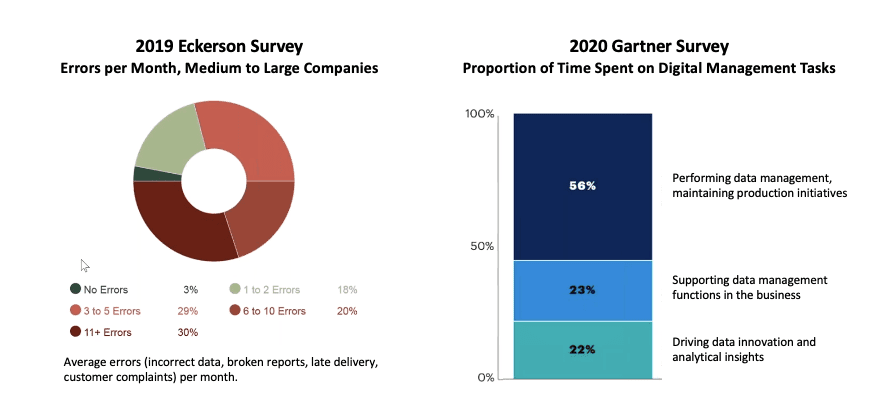

Problem #1: Many tools and pipelines – too many errors and delays:

- No enterprise-wide visibility into hundreds or thousands of tools, pipelines, and data sets

- No end-to-end quality control

- Hard to diagnose issues

- Errors in data and elsewhere create distractions that limit new insight development.

Problem #2: Data and analytic teams face friction in fixing these problems:

- Very Busy: teams are already busy and stressed, and they know they are not meeting their customers’ expectations.

- Low Change Appetite: Teams have complicated in-place data architectures and tools (which may include other logging and observability technologies). They fear changing what is already running.

- No single pane of glass: no ability to see across all tools, pipelines, data sets, and teams in one place.

- Teams don’t know where or how to check for data or artifact problems.

- Teams experience a lot of blame and shame because they lack the shared context to see and diagnose problems in real time.

What problem does observing your Data Journey solve?

Many data teams are stuck “hoping and praying” that their latest data feeds, system changes, and integrations won’t break anything. They wait for customers to find problems. They blindly trust their providers to deliver good data quickly without changing data structures. They interrupt the daily work of their best minds to chase and fix a single error in a specific pipeline. They do not know whether the other thousands of data pipelines and tasks are failing.

It’s a culture of productivity drains, which results in customers losing trust in the data. Another result is rampant frustration within data teams. A survey of data engineers conducted by DataKitchen in 2022 revealed some shocking statistics: 78% feel they need to see a therapist, and a similar number have considered quitting or switching careers. The industry is experiencing a shortage of people who do data work due to a supply problem and the exodus of people leaving the field because it’s so stressful.

Teams lack visibility across the entire data journey from source to value, and they cannot diagnose data, hardware, code, or software problems or analyze their business impact.

What is a Data Journey?

The data journey is about observing what you have done, not changing your existing data estate. Data Journeys track and monitor all levels of the data stack, from data to tools to code to tests, across all critical dimensions. A Data Journey supplies real-time statuses and alerts on start times, processing durations, test results, and infrastructure events, among other metrics. With this information, you can know if everything ran on time and without errors and immediately identify the parts that didn’t. Journeys provide context for the many complex elements of a pipeline.
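To make the idea concrete, here is a minimal Python sketch of checking one run of a Data Journey against expectations for start times, processing durations, and test results. The class and function names (`StepExpectation`, `StepEvent`, `check_journey`) and the sample schedule are hypothetical illustrations, not DataKitchen's API; a real observability product would collect these events from the tools themselves.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class StepExpectation:
    """Hypothetical expectation for one step of a Data Journey."""
    name: str
    latest_start: datetime    # the step should have started by this time
    max_duration: timedelta   # the step should finish within this duration


@dataclass
class StepEvent:
    """Hypothetical run-time event reported by a tool for one step."""
    name: str
    started: datetime
    finished: Optional[datetime] = None  # None means the step has not finished
    tests_failed: int = 0


def check_journey(expectations: List[StepExpectation],
                  events: List[StepEvent],
                  now: datetime) -> List[str]:
    """Return a list of problems; an empty list means everything ran on time without errors."""
    by_name = {event.name: event for event in events}
    problems = []
    for exp in expectations:
        event = by_name.get(exp.name)
        if event is None:
            if now > exp.latest_start:
                problems.append(f"{exp.name}: did not start by {exp.latest_start:%H:%M}")
            continue
        if event.started > exp.latest_start:
            problems.append(f"{exp.name}: started late at {event.started:%H:%M}")
        # Unfinished steps are measured against the current time.
        end = event.finished or now
        if end - event.started > exp.max_duration:
            problems.append(f"{exp.name}: exceeded expected duration of {exp.max_duration}")
        if event.tests_failed:
            problems.append(f"{exp.name}: {event.tests_failed} test(s) failed")
    return problems


# Example run: one step on time, one late with failing tests, one missing.
day = datetime(2024, 1, 15)
expectations = [
    StepExpectation("ingest", day.replace(hour=1), timedelta(minutes=30)),
    StepExpectation("transform", day.replace(hour=2), timedelta(hours=1)),
    StepExpectation("publish_report", day.replace(hour=4), timedelta(minutes=15)),
]
events = [
    StepEvent("ingest", day.replace(hour=0, minute=55), day.replace(hour=1, minute=10)),
    StepEvent("transform", day.replace(hour=2, minute=20), day.replace(hour=3), tests_failed=2),
]
for problem in check_journey(expectations, events, now=day.replace(hour=5)):
    print(problem)
# transform: started late at 02:20
# transform: 2 test(s) failed
# publish_report: did not start by 04:00
```

The point of the sketch is that the answer is a short list of the parts that did not go as expected, rather than a raw log of everything that ran.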

Learn more about the principles and ideas in a Data Journey at the following:

- Data Journey Manifesto https://datajourneymanifesto.org/

- Why the Data Journey Manifesto? https://datakitchen.io/why-the-data-journey-manifesto/

- Five Pillars of Data Journeys https://datakitchen.io/introducing-the-five-pillars-of-data-journeys/

- Data Journey First DataOps https://datakitchen.io/data-journey-first-dataops/

- The Terms and Conditions of a Data Contract are Data Tests https://datakitchen.io/the-terms-and-conditions-of-a-data-contract-are-data-tests/

- “You Complete Me,” said Data Lineage to Data Journeys. https://datakitchen.io/you-complete-me-said-data-lineage-to-dataops-observability/

- Two Downs Make Two Ups: The Only Success Metrics That Matter For Your Data & Analytics Team: https://datakitchen.io/two-downs-make-two-ups-the-only-success-metrics-that-matter-for-your-data-analytics-team/

- Webinar: Data Journey: The Missing Piece: https://info.datakitchen.io/datakitchen-on-demanddata-journey-is-the-missing-piece

Why is the ‘Modern Data Stack’ so complicated?

The industry has valuable data, automation, and data science / analytic tools. But none of these tools fully addresses the core problem: data teams must monitor the entire data estate and understand why pipelines succeed or fail across all these technologies and related data. Making matters more difficult, this is a $60-billion-a-year industry with hundreds of vendors.

How does ‘DataOps Observability’ differ from ‘Data Lineage’?

Data lineage answers the question, “Where is this data coming from, and where is it going?” It is a way to describe the data assets in an organization, helping data users understand where data came from and, together with a data catalog, what specific data tables or files contain.

Data lineage does not answer other key questions, however. For example, it cannot answer: “Can I trust this data?” “If not, what happened during the data journey that caused the problem?” “Has this data been updated with the most recent files?” Understanding your data journey and building a system to monitor that complex series of steps is an active, action-oriented way of improving the results you deliver to your customers. DataOps Data Journey Observability is ‘run-time lineage.’

Think of it this way: if your house is on fire, you don’t want to go to town hall and get the blueprints of your home to better understand how the fire could spread. You want smoke detectors in every room so you are alerted quickly and can avoid damage. Data lineage is the blueprint of the house; a Data Journey is the set of smoke detectors sending you signals in real time. Ideally, you want both run-time observability and data lineage.
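Combining the two views is what lets you localize a problem. The sketch below is an illustrative assumption, not a description of any product: a static lineage graph (the blueprint) is joined with run-time statuses (the smoke detectors), and a breadth-first walk upstream from a failing dataset returns the failed datasets whose own inputs are healthy, which are the most likely places the problem started. The dataset names and the `root_causes` function are hypothetical.

```python
from collections import deque
from typing import Dict, List

# Hypothetical static lineage: each dataset lists the upstream datasets it is built from.
LINEAGE: Dict[str, List[str]] = {
    "raw_orders": [],
    "raw_customers": [],
    "clean_orders": ["raw_orders"],
    "customer_orders": ["clean_orders", "raw_customers"],
    "revenue_dashboard": ["customer_orders"],
}


def root_causes(lineage: Dict[str, List[str]],
                status: Dict[str, str],
                symptom: str) -> List[str]:
    """Walk upstream from a failing dataset and return the failed datasets
    whose own inputs are all healthy, i.e. the likely origins of the failure."""
    seen = set()
    queue = deque([symptom])
    causes = set()
    while queue:
        node = queue.popleft()
        if node in seen:
            continue
        seen.add(node)
        if status.get(node) != "failed":
            continue  # only failed datasets are traced further upstream
        failed_inputs = [p for p in lineage.get(node, []) if status.get(p) == "failed"]
        if failed_inputs:
            queue.extend(failed_inputs)
        else:
            causes.add(node)
    return sorted(causes)


# Run-time statuses observed for today's run (hypothetical).
status = {
    "raw_orders": "ok",
    "raw_customers": "ok",
    "clean_orders": "failed",
    "customer_orders": "failed",
    "revenue_dashboard": "failed",
}
print(root_causes(LINEAGE, status, "revenue_dashboard"))  # ['clean_orders']
```

Lineage alone says the dashboard depends on four upstream datasets; run-time status alone shows three red lights. Together they point at `clean_orders`.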

Why should I care about errors and bottlenecks in the Data Journey?

You have a significant investment in your data and the infrastructure and tools your teams use to create value. Do you know it’s all working correctly, or do you hope and pray that a source data change, code fix, or new integration won’t break things? If something does break, can you find the problem efficiently and quickly, or does your team spend days diagnosing the issue? Do you fear that phone call or email from an angry customer who finds the problem before you do? How can you be confident that nothing will go wrong and that your customers will continue to trust your deliverables? Do you dread the first work email of the morning and the problems it could bring?

Don’t current IT Application Performance Monitoring tools do this already?

Seeing across all the journeys that data travels, and up and down the tech and data stack, requires a meta-structure that goes beyond typical application performance monitoring and IT infrastructure monitoring software products. While quite valuable, these solutions all produce lagging indicators. For example, you may know that you are approaching limits on disk space, but you can’t say whether the data on that disk is correct. You may know that a particular process has completed, but you can’t see whether it completed on time or with the correct output. You can’t quality-control your data integrations or reports with only partial information.

Since data errors happen more frequently than resource failures, data journeys provide crucial additional context for pipeline jobs and tools and the products they produce. They observe and collect information, then synthesize it into coherent views, alerts, and analytics for people to predict, prevent, and react to problems.

What are the components of a solution for DataOps Observability?

- Represent the Complete Data Journey: Be able to monitor every step in the data journey in-depth across tools, data, and infrastructure and over complex organizational boundaries.

- Production Expectations, Data and Tool Testing, and Alerts: Be able to set time, quality control, and process step order rules/expectations with proactive (but not too noisy) push notifications.

- Development Data and Tool Testing: validate your entire data journey in the development process. Enable team members to ‘pull the pain forward’ to increase the delivery rate and lower the risk of deploying new insight.

- Historical Dashboards and Root Cause Analysis: store data over time about what happened in every data journey so you can learn from your mistakes and improve.

- A User Interface Specific for Every Role: an easy-to-understand, role-based UI allows everyone on the team (IT, managers, data engineers, data scientists, analysts, and your business customers) to be on the same page.

- Simple integrations and an open API: a solution should include pre-built, fast, easy integrations and an open API to enable rapid implementations without replacing existing tools.

- Cost Monitoring and End-User Usage Tracking: Be able to include specific cost items (e.g., server costs) as part of your data journey. Likewise, be able to monitor usage data for tables and reports. This data can help tell if a data journey’s costs outweigh its benefits.

- Start Fast with Low Effort: start quickly by understanding your data journeys and creating a set of data tests and expectations that can identify where things are going wrong. DataOps Observability should be able to automatically generate a base set of data tests and expectations so that teams can get value quickly.
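As a rough illustration of the “start fast” idea, the sketch below profiles a historical sample of one numeric column and turns that profile into baseline threshold tests. The min/max/null-rate approach, the `tolerance` margin, and the function names are assumptions chosen for illustration; they do not describe how DataKitchen’s products generate tests.

```python
from typing import Callable, Dict, List, Optional

Values = List[Optional[float]]  # None represents a null


def profile_column(values: Values) -> Dict[str, float]:
    """Summarize historical values (assumes at least one non-null value)."""
    present = [v for v in values if v is not None]
    return {
        "null_rate": 1 - len(present) / len(values),
        "min": min(present),
        "max": max(present),
    }


def generate_tests(profile: Dict[str, float],
                   tolerance: float = 0.1) -> Callable[[Values], List[str]]:
    """Turn a profile into baseline tests, widened by a tolerance margin."""
    span = profile["max"] - profile["min"]
    low = profile["min"] - tolerance * span
    high = profile["max"] + tolerance * span
    max_null_rate = profile["null_rate"] + tolerance

    def run_tests(values: Values) -> List[str]:
        present = [v for v in values if v is not None]
        failures = []
        null_rate = 1 - len(present) / len(values)
        if null_rate > max_null_rate:
            failures.append(f"null rate {null_rate:.0%} exceeds {max_null_rate:.0%}")
        out_of_range = [v for v in present if not low <= v <= high]
        if out_of_range:
            failures.append(f"{len(out_of_range)} value(s) outside [{low:g}, {high:g}]")
        return failures

    return run_tests


history = [10, 12, 11, None, 13, 14, 12, 10, 11, 12]
check_amounts = generate_tests(profile_column(history))
print(check_amounts([11, 12, 13, 10]))              # []
print(check_amounts([11, None, None, 13, 250, 12]))  # flags the null rate and the outlier
```

Generated tests like these are only a starting point: a team would review the thresholds and tighten or relax them as it learns what “normal” looks like.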

Who cares about DataOps Observability?

First, data and analytic teams and their leaders (CDOs, Directors of Data Engineering / Architecture / Enablement / Science). Second, small data teams that develop and support customer-facing data and analytic systems. Third, data teams that care about delivering insight to their customers with no errors and a high rate of change. Finally, any group that wants to work with less embarrassment and hassle and have more time to create original insight.

How does this compare with Data Quality?

Many enterprises have few process controls on data flowing through their data factory. “Hoping for the best” is not an effective manufacturing strategy. You want to catch errors as early in your process as possible. Relying upon customers or business users to catch the mistakes will gradually erode trust in analytics and the data team.

A sole focus on source data quality does not fix all problems. In governance, people sometimes perform manual data quality assessments. These labor-intensive data quality evaluations are done periodically, providing a snapshot of quality at a particular time. DataOps Observability, which focuses on lowering the rate of errors, ensures continuous testing and improvement in data integrity. DataOps Observability works 24×7 to validate the correctness of your data and analytics journey.

Quality = Data Quality + Process Quality

Our source data is in excellent shape, so we don’t need DataOps Observability, right?

It’s important to check data, but errors can also stem from problems in your workflows, your end-to-end toolchain, or the configuration and code acting upon data. For example, “good” data delivered late (a missed SLA) can also be a big problem. The DataOps Observability approach takes a bird’s-eye view of your data factory and attacks errors on all fronts.
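The “Quality = Data Quality + Process Quality” idea can be sketched in a few lines of Python: a delivery counts as good only when its data tests pass and it arrives before its SLA deadline. The `Delivery` record, the `assess` function, and the sample deadline are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict


@dataclass
class Delivery:
    """Hypothetical record of one dataset arriving in the data factory."""
    dataset: str
    arrived_at: datetime
    data_tests_passed: bool


def assess(delivery: Delivery, sla_deadline: datetime) -> Dict[str, bool]:
    """Score data quality and process quality separately, then combine them."""
    data_ok = delivery.data_tests_passed
    process_ok = delivery.arrived_at <= sla_deadline  # on time counts as process quality
    return {"data_quality": data_ok, "process_quality": process_ok,
            "overall": data_ok and process_ok}


# "Good" data that arrives 90 minutes after an 8:00 SLA still fails overall.
late_but_correct = Delivery("daily_sales", datetime(2024, 1, 15, 9, 30), True)
print(assess(late_but_correct, sla_deadline=datetime(2024, 1, 15, 8, 0)))
# {'data_quality': True, 'process_quality': False, 'overall': False}
```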

We do a lot of manual QC checks in development, so we don’t need DataOps Observability.

Avoid manual tests. Manual testing is performed step-by-step by a person. Manual testing creates bottlenecks in your process flow. Manual testing is expensive as it requires someone to create an environment and run tests one at a time. It can also be prone to human error. Automated testing is a significant pillar in DataOps Observability. Build automated testing into the release and deployment workflow. Testing proves that analytics code is ready to be promoted to production. Most of the tests used to validate code during the analytics development phase are promoted into production with the analytics to verify and validate the operation of the data journey.
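One way to picture promoting tests along with the code is a single automated suite that runs in two places: as a gate in the development and release workflow, and on every production run. The sketch below is a hypothetical Python illustration; the check names, the row format, and the `gate` actions are assumptions, and a real setup would typically hook into a CI system and an alerting tool.

```python
from typing import Callable, Dict, List, Optional

Row = Dict[str, float]
Check = Callable[[List[Row]], Optional[str]]  # returns an error message or None


def check_no_duplicate_ids(rows: List[Row]) -> Optional[str]:
    ids = [row["order_id"] for row in rows]
    return "duplicate order_id values" if len(ids) != len(set(ids)) else None


def check_amounts_non_negative(rows: List[Row]) -> Optional[str]:
    return "negative amount found" if any(row["amount"] < 0 for row in rows) else None


# The same suite is shared by development and production.
SUITE: List[Check] = [check_no_duplicate_ids, check_amounts_non_negative]


def run_suite(rows: List[Row], suite: List[Check] = SUITE) -> List[str]:
    """Run every check and collect all failures rather than stopping at the first."""
    failures = []
    for check in suite:
        message = check(rows)
        if message is not None:
            failures.append(f"{check.__name__}: {message}")
    return failures


def gate(rows: List[Row], stage: str) -> str:
    """In development a failure blocks deployment; in production it raises an alert."""
    if not run_suite(rows):
        return "pass"
    return "block deployment" if stage == "development" else "alert on-call team"


sample = [{"order_id": 1, "amount": 25.0}, {"order_id": 1, "amount": -5.0}]
print(run_suite(sample))
print(gate(sample, "development"))  # block deployment
print(gate(sample, "production"))   # alert on-call team
```

Because the suite is defined once, every check written during development automatically becomes a production check when the analytics are promoted.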

How is DataOps Observability different from Data Observability?

Data Observability tools test data in the database. This is a fine thing, and DataKitchen has been promoting the idea of data tests for many years (see our DataOps Automation Product!). However, you need to correlate those test results with other critical elements of the data journey – that is what DataOps Observability does.
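A minimal sketch of that correlation, assuming test results and job-run events are available as simple records: for a failed data test, find the most recent job run that wrote the tested table before the test ran, so the failure is seen alongside its upstream context. The record fields and the `correlate` function are hypothetical, not the API of any Data Observability or DataOps Observability product.

```python
from datetime import datetime
from typing import Any, Dict, List

Record = Dict[str, Any]

# Hypothetical records collected from two different tools.
test_results: List[Record] = [
    {"table": "orders", "test": "row_count_min", "passed": False,
     "at": datetime(2024, 1, 15, 6, 5)},
]
job_runs: List[Record] = [
    {"job": "load_orders", "writes": "orders", "status": "success",
     "schema_changed": False, "finished": datetime(2024, 1, 14, 5, 45)},
    {"job": "load_orders", "writes": "orders", "status": "success",
     "schema_changed": True, "finished": datetime(2024, 1, 15, 5, 50)},
]


def correlate(test: Record, runs: List[Record]) -> Record:
    """Attach the latest job run that wrote the tested table before the test ran."""
    candidates = [run for run in runs
                  if run["writes"] == test["table"] and run["finished"] <= test["at"]]
    latest = max(candidates, key=lambda run: run["finished"], default=None)
    return {**test, "upstream_run": latest}


for result in test_results:
    if result["passed"]:
        continue
    run = correlate(result, job_runs)["upstream_run"]
    if run is None:
        print(f"{result['test']} failed on {result['table']}; no upstream run found")
    else:
        print(f"{result['test']} failed on {result['table']}; "
              f"upstream {run['job']} reported {run['status']} "
              f"with schema_changed={run['schema_changed']}")
```

Either signal alone is hard to act on: the job reports success, and the database test only says the row count is low. Seen together, the schema change is an obvious first place to look.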

What Is DataOps?

DataOps is a collection of technical practices, workflows, cultural norms, and architectural patterns that enable:

- Rapid innovation and experimentation that deliver new insights to customers with increasing velocity

- Extremely high data quality and very low error rates

- Collaboration across complex arrays of people, technology, and environments

- Clear measurement, monitoring, and transparency of results

Why Data Journey First DataOps?

When your data team is in crisis from errors in production, complaining customers, and uncaring data providers, we all wish we could be as unshaken as the Buddha. Our recent survey showed that 97% of data engineers report experiencing burnout in their day-to-day jobs. Perhaps we could just chill out in those stressful situations and “let go,” as the Buddha suggests. The spiritual benefits of letting go may be profound, but finding and fixing the problem at its root is, as Samuel Florman writes, “existential joy.” Finding problems before your customers know they exist improves your team’s happiness, productivity, customer trust, and customer data success.

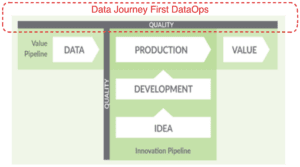

Given the complicated distributed systems we use to get value from data and the diversity of data, we need a simplifying framework. That idea is the Data Journey. In an era where data drives innovation and growth, it’s paramount that data leaders and engineers understand and monitor the five pillars of a Data Journey. The key to success is the capability to understand and monitor the health, status, and performance of your data, data tools, pipelines, and infrastructure, at both a macro and a micro level. Failures on the Data Journey cost organizations millions of dollars.

Data Journey First DataOps requires a deep and continuous understanding of your production data estate. It provides a dynamic understanding of how your data flows, transforms, gets enriched, and is consumed. It allows you to trust through active verification. By observing Data Journeys, you can detect problems early, streamline your processes, and reduce embarrassing errors in production.

Where can I learn more about DataOps Observability?

You’ve come to the right place! A great place to start is to read The DataOps Cookbook. Other useful resources include:

- [Conference] MIT CDO Session on DataOps Observability

- [Blog] DataOps Observability

- [Learn More] What is DataOps?

- [Product] DataKitchen DataOps Observability

- DataOps Observability: Taming the Chaos: https://info.datakitchen.io/white-paper-dataops-observability-taming-chaos

- DataOps Observability: Technical Product Overview https://info.datakitchen.io/white-paper-dataops-observability-technical-overview

- Documentation

- [Product] DataKitchen DataOps TestGen

- DataOps TestGen: ‘Mystery Box Full Of Data Errors’: https://info.datakitchen.io/white-paper-dataops-testgen-white-paper

- DataOps TestGen: Technical Product Overview: https://info.datakitchen.io/white-paper-dataops-testgen-technical-overview

- Documentation

Sign the DataOps Manifesto

Join the 10,000+ others who have committed to developing and delivering analytics in a better way.

Start Improving Your Data Quality Validation and DataOps Today!