Blog

Webinar: A Guide to the Six Types of Data Quality Dashboards

In an exciting webinar, we discuss the six major types of Data Quality Dashboards.

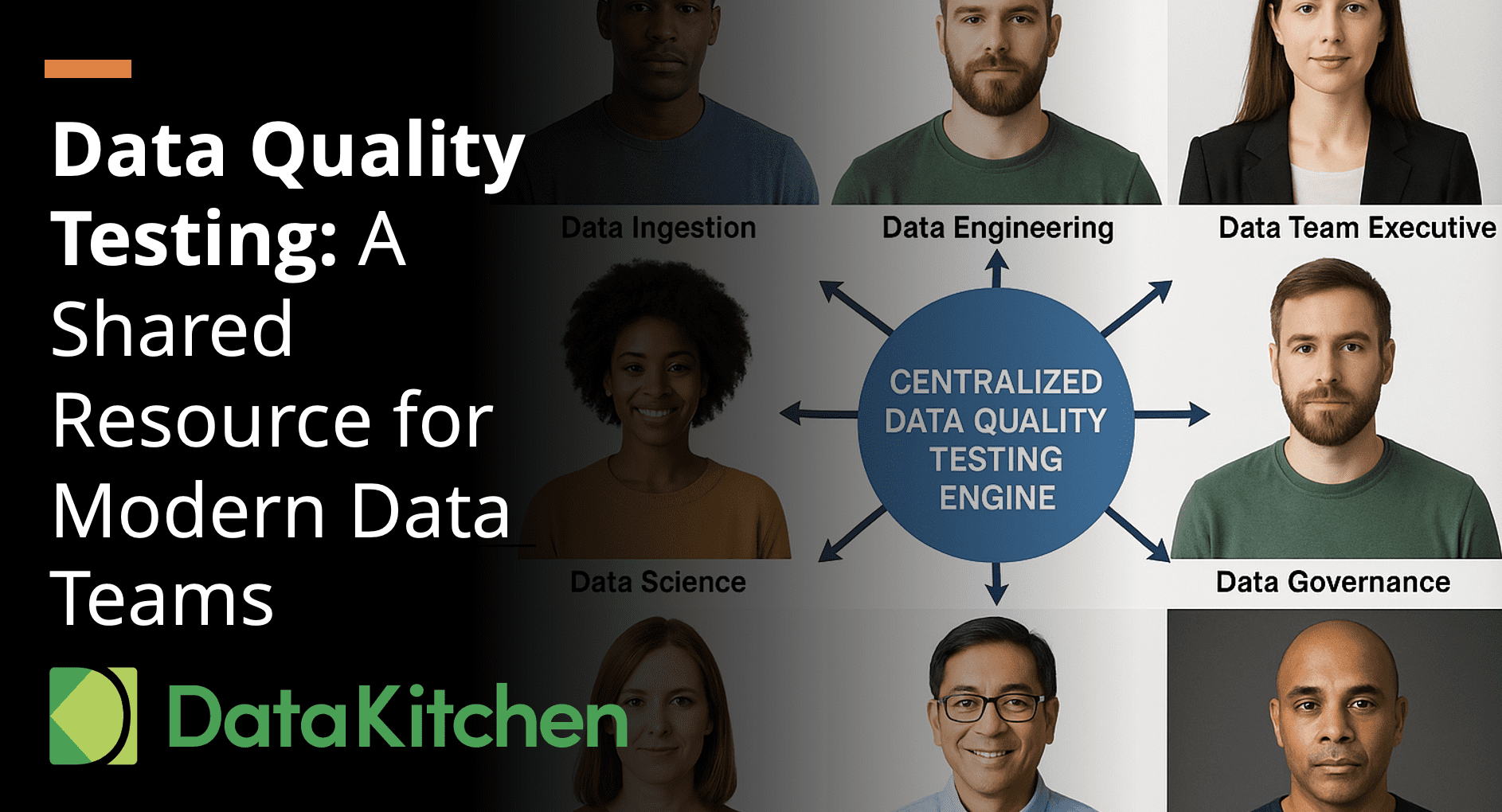

Data Quality Testing: A Shared Resource for Modern Data Teams

Data quality is not a problem any single role can solve in isolation. The complexity and scale of modern data ecosystems necessitate a collaborative approach, where quality testing serves as a shared infrastructure across all data and analytics roles.

Infographic – 6 Dimensions of DataOps Maturity

Curious about what DataOps capabilities your organization already has or how you stack up? DataKitchen published a white paper Launch Your DataOps Journey with the DataOps Maturity Model (which you can download here) to guide you on benchmarking your org's DataOps...

Infographic – 7 Steps to Implement DataOps

So you've learned about DataOps and want to begin implementing it? Look no further than DataKitchen's white paper The Seven Steps to Implement DataOps (which you can download here)! We've taken the seven steps to implement DataOps and included them in the...

Forrester: DataOps for the Intelligent Edge of Business – Further Reading Recommendations

In Forrester’s recent report, DataOps for the Intelligent Edge of Business, Michele Goetz, et al., describe how data teams are facing challenges “about data to support the return on investment and experience.” In fact, “no amount of investment in new big data systems,...

Infographic – 6 Steps to An Enterprise DataOps Transformation

Many adages could be applied to implementing a successful, sustainable DataOps program at your organization, such as "Rome wasn't built in a day" or "Don't boil the ocean." To help you approach introducing DataOps into your organization, we at DataKitchen broke down...

Finding an Executive Sponsor for Your DataOps Initiative

DataOps revolutionizes how data-analytics work gets done. Like many other “big ideas,” it sometimes faces resistance from within the organization. For most organizations, data is a means to an end. The organization’s primary focus is on its mission, whether that is a...

Six Top DataOps Trends for 2021

Since the term was coined, DataOps has expanded the way that people think about data analytics teams and their potential. 2020 was a huge year in DataOps industry acceptance. Media mentions of DataOps are on track to increase 52% over the prior year. To date in 2020,...

6 Highly Recommendable Gift Ideas for Your Data Nerd

So you’ve got a special data nerd in your life - congrats! They’re great to keep around for mental math, household finances, and lots of Star Trek jokes. We’re kidding - the data nerd is not a monolith. They come in all shapes, sizes, and nerd varieties. We’ve got...

What Is DataOps? Most Commonly Asked Questions

As DataOps continues to gain exposure, people are encountering the term for the first time. Below is our list of the most common questions that we hear about DataOps. What is DataOps? DataOps is a collection of technical practices, workflows, cultural norms, and...

Why DevOps Tools Fail at DataOps

Implementing DataOps requires a combination of new methods and automation that augment an enterprise’s existing toolchain. The fastest and most effective way to realize the benefits of DataOps is to adopt an off-the-shelf DataOps Platform. Some organizations try to...

How Celgene Built a Billion-Dollar Product Launch Success with DataOps

Improving Teamwork in Data Analytics with DataOps

Without DataOps, a Bad System Overwhelms Good People. When enterprises invite us in to talk to them about DataOps, we generally encounter dedicated and competent people struggling with conflicting goals/priorities, weak process design, insufficient resources, clashing...

Prove Your Team’s Awesomeness with DataOps Process Analytics

Do you deserve a promotion? You may think to yourself that your work is exceptional. Could you prove it? As a Chief Data Officer (CDO) or Chief Analytics Officer (CAO), you serve as an advocate for the benefits of data-driven decision making. Yet, many CDOs are...