🔥 TLDR: What You’ll Learn in This Article

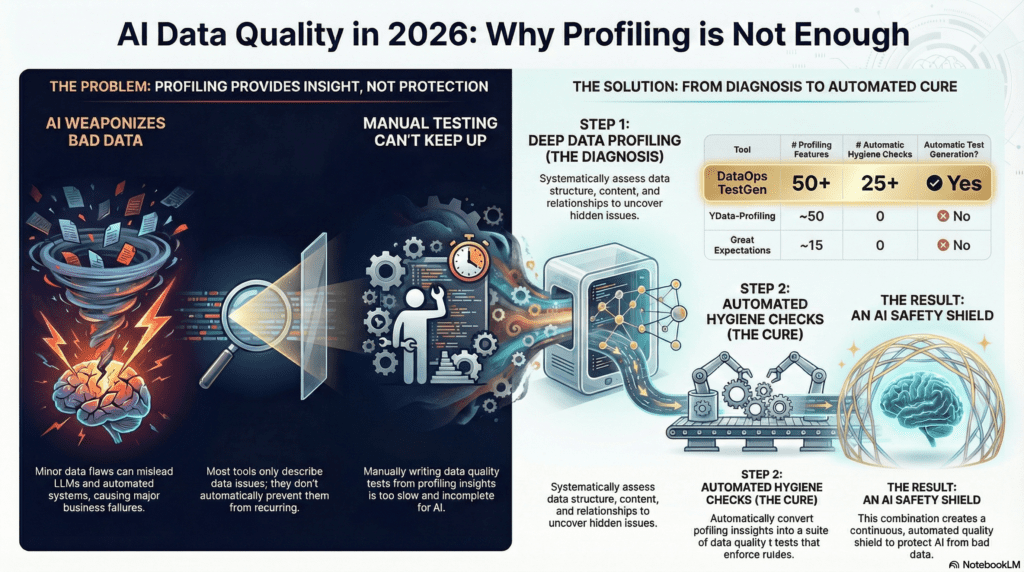

- AI has fundamentally changed the stakes: bad data no longer just breaks dashboards—it can actively mislead your LLMs and automated decision systems.

- Data profiling has re-emerged as the most essential first step in protecting AI-driven organizations from data-induced failures.

- Most open-source profiling tools stop at describing data; almost none automatically convert profiling insights into actionable data hygiene checks.

- DataOps Data Quality TestGen is the only open-source solution that combines deep profiling with automated AI-generated data hygiene tests.

- The comparison table reveals significant capability gaps across the open-source landscape—especially in hygiene automation.

- Profiling without hygiene checks is now a form of technical debt that directly increases AI risk.

- AI safety is now data quality, and data quality must become autonomous.

The 2026 Reality: When Bad Data Turns AI Against You

The world did not ease into AI transformation—it sprinted past you at full speed. One moment you were managing dashboards and debugging pipelines; the next, your LLM was confidently telling the CEO that revenue was up 40 percent when sales were actually in a freefall. Your analytics engineers were still vibe-coding SQL transforms at midnight, hoping today wouldn’t be the day they accidentally broke every downstream model. Your predictive systems—once the pride of your data science program—were degrading faster than you could retrain them. And your BI reports? Once a source of clarity, now a running joke. No one trusts them; worse, no one trusts you.

Welcome to 2026, where bad data doesn’t simply cause inconvenience; it weaponizes AI against your business. The cruel irony is that the AI revolution demands perfect data quality at the exact moment when data volume, velocity, and complexity have made traditional testing unsustainable. Your pipelines now feed enormous AI systems that retrain every hour, make decisions in milliseconds, and ingest dozens of data sources your team barely understands. Yet your quality processes still rely on manually written SQL tests, crafted as if it were still 2015: slow, brittle, incomplete, and hopelessly unable to keep up.

In this world, data quality is no longer a governance checkbox or a back-office headache. It is AI safety at enterprise scale, and without an evolved approach, your organization is flying blind into a storm.

What Is Data Profiling? And Why AI Has Made It Urgent Again

Data profiling is no longer a nice-to-have, nor is it simply the first step of a data project. It has become the indispensable diagnostic layer that determines whether your AI systems are being fed truth or poison.

In any data-driven organization, the journey to actionable insight begins not with analysis, but with understanding. Before data can be modeled, visualized, or trusted to inform decisions—especially decisions made autonomously by AI—its fundamental characteristics must be assessed. Data profiling is this foundational process: the systematic assessment of data to reveal its structure, consistency, and quality. It is the key to unlocking the true nature and condition of your data estate.

Profiling generates essential metadata that reflects the overall health of the data, allowing teams to understand whether the information is complete, consistent, and reliable. Gartner defines profiling as a technology used for “discovering and investigating data quality issues,” a capability that lets organizations trace errors to their origin and certify data as fit for use. By analyzing data types, value distributions, ranges, outliers, and formats, profiling produces a detailed snapshot of the data. That snapshot is especially crucial in AI pipelines, where even tiny anomalies can cascade into dramatic failures.
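
To make that snapshot concrete, here is a minimal sketch of a single-column profile in plain Python. It assumes the column arrives as a list with `None` for missing values; the function names `infer_type` and `profile_column` are illustrative and not taken from any particular profiling tool. Outliers are flagged with the common 1.5 * IQR (Tukey fence) rule, which is one reasonable choice among several.

```python
from statistics import mean, quantiles


def infer_type(values):
    """Return a coarse type label for a list of non-null values."""
    if not values:
        return "empty"
    kinds = {type(v).__name__ for v in values}
    if kinds == {"int"}:
        return "integer"
    if kinds <= {"int", "float"}:
        return "numeric"
    if kinds == {"str"}:
        return "text"
    return "mixed"


def profile_column(values):
    """Summarize completeness, cardinality, type, range, and outliers of one column."""
    non_null = [v for v in values if v is not None]
    profile = {
        "count": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(set(non_null)),
        "type": infer_type(non_null),
    }
    if profile["type"] in ("integer", "numeric"):
        profile["min"] = min(non_null)
        profile["max"] = max(non_null)
        profile["mean"] = mean(non_null)
        if len(non_null) >= 4:
            # Tukey fences: values beyond 1.5 * IQR from the quartiles count as outliers.
            q1, _, q3 = quantiles(non_null, n=4)
            fence = 1.5 * (q3 - q1)
            profile["outliers"] = [v for v in non_null if v < q1 - fence or v > q3 + fence]
    return profile


# Hypothetical order amounts with one missing value and one suspicious spike.
order_amounts = [10, 12, 11, 13, 12, None, 950]
print(profile_column(order_amounts))
```

On this sample the profile reports one null, five distinct values, an integer type, and `950` as the only outlier: exactly the kind of signal that should be investigated before the column reaches a model.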

Core Techniques of Data Profiling

Data profiling is typically organized into three complementary techniques:

Structure Discovery: This technique validates data consistency and format, ensuring that fields conform to expectations. It catches misformatted postal codes, phone numbers containing text, and fields whose values violate schema assumptions. Structure discovery answers the question: Does this data look like what it is supposed to be?
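
A minimal sketch of structure discovery is a set of format rules checked value by value. The two patterns below (a US ZIP code and a loosely defined phone number) and the helper name `find_format_violations` are assumptions chosen for illustration; real rules depend on your locales and schemas.

```python
import re

# Hypothetical format rules; adjust to the locales and schemas you actually hold.
FORMAT_RULES = {
    "zip_code": re.compile(r"\d{5}(-\d{4})?"),       # 12345 or 12345-6789
    "phone": re.compile(r"\+?\d[\d\s().-]{6,}\d"),  # digits plus common separators
}


def find_format_violations(values, rule_name):
    """Return (row_index, value) pairs whose value does not fully match the rule.

    Missing values are skipped here; completeness is a content-discovery concern.
    """
    pattern = FORMAT_RULES[rule_name]
    return [
        (i, v)
        for i, v in enumerate(values)
        if v is not None and not pattern.fullmatch(str(v))
    ]


zips = ["02139", "2139", "10001-1234", "ABCDE", None]
phones = ["617-555-0100", "call me", "+1 (212) 555-0199"]
print(find_format_violations(zips, "zip_code"))  # rows 1 and 3 are flagged
print(find_format_violations(phones, "phone"))   # row 1 is flagged
```

Using `fullmatch` rather than `search` matters here: a partial match would let a value like `02139abc` pass as a ZIP code.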

Content Discovery: Content discovery looks deeply inside the values themselves—examining patterns, missingness, anomalies, and inconsistencies. It uncovers nulls, misspellings, invalid values, and systemic errors. It answers the question: What is actually inside this data, and does it make sense?
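
Content discovery can be sketched by counting true nulls, placeholder strings that disguise missingness, and the "shape" of each remaining value. Masking uppercase letters to `A`, lowercase letters to `a`, and digits to `9` is a common profiling technique for surfacing inconsistent formats. The placeholder list and the function names below are assumptions for illustration.

```python
from collections import Counter

# Hypothetical strings that usually mean "missing" even though they are not NULL.
PLACEHOLDERS = {"", "-", "N/A", "NULL", "NONE", "UNKNOWN"}


def value_pattern(text):
    """Mask a value to its shape: uppercase -> A, lowercase -> a, digit -> 9."""
    out = []
    for ch in text:
        if ch.isdigit():
            out.append("9")
        elif ch.isalpha():
            out.append("A" if ch.isupper() else "a")
        else:
            out.append(ch)
    return "".join(out)


def discover_content(values):
    """Count nulls, disguised nulls, and the distribution of value shapes."""
    nulls = sum(1 for v in values if v is None)
    present = [str(v) for v in values if v is not None]
    is_placeholder = [v.strip().upper() in PLACEHOLDERS for v in present]
    real = [v for v, flag in zip(present, is_placeholder) if not flag]
    return {
        "nulls": nulls,
        "placeholders": sum(is_placeholder),
        "patterns": Counter(value_pattern(v) for v in real),
    }


states = ["MA", "NY", "ny", "N/A", None, "Mass.", "CA"]
print(discover_content(states))
```

Here the dominant `AA` shape suggests a two-letter code, so `ny` (shape `aa`) and `Mass.` (shape `Aaaa.`) stand out as cleanup candidates, while `N/A` is counted as missing rather than as a real value.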

Relationship Discovery: This technique analyzes connections between tables and fields. By studying dependencies, relationships, and correlations, profiling helps establish referential integrity and prepares data for integration into a cohesive, trustworthy system. It answers: How do these datasets relate, and can those relationships be trusted?
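
Two basic relationship checks are whether a column can serve as a key (no nulls, no duplicates) and what share of a child table's foreign-key values actually exist in the parent table. The sketch below assumes both columns fit in memory as lists; the table and column names in the example (`customers.id`, `orders.customer_id`) are hypothetical.

```python
def is_candidate_key(values):
    """A column can act as a key only if it has no nulls and no duplicates."""
    return None not in values and len(set(values)) == len(values)


def check_foreign_key(child_values, parent_keys):
    """Measure how well child values resolve against a parent key column."""
    parent = set(parent_keys)
    non_null = [v for v in child_values if v is not None]
    # Distinct orphan values, in first-seen order.
    orphans = list(dict.fromkeys(v for v in non_null if v not in parent))
    matched = sum(1 for v in non_null if v in parent)
    return {
        "null_refs": len(child_values) - len(non_null),
        "orphans": orphans,
        "match_rate": matched / len(non_null) if non_null else 1.0,
    }


customer_ids = [1, 2, 3]                 # customers.id
order_customer_ids = [1, 1, 2, 4, None]  # orders.customer_id
print(is_candidate_key(customer_ids))
print(check_foreign_key(order_customer_ids, customer_ids))
```

In this example `customers.id` qualifies as a key, but one order references a missing customer (`4`) and one has no reference at all, so only 75% of the non-null references resolve.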

Strategic Benefits of Profiling

When executed effectively, data profiling delivers significant organizational benefits that extend far beyond technical assessment:

- It enhances governance and compliance by exposing risks early.

- It builds trust in analytics and AI by ensuring data is complete and interpretable.

- It reduces operational cost by catching errors before they propagate downstream.

- It creates an organized data landscape that maps sources, relationships, and lineage.

Profiling is not merely an analytical step—it is the first serious act of data hygiene. And in a world where AI continuously and silently consumes data, hygiene is everything. But profiling alone is no longer enough.

Why Traditional Profiling Tools Are Falling Short

The open-source ecosystem provides several excellent profiling tools. YData-Profiling can generate a stunning, comprehensive report with a single line of code. Great Expectations offers rudimentary expectation suggestions. Deequ excels at profiling at Spark scale. DataCleaner, OpenRefine, Aggregate Profiler, Metabase, and even Apache Griffin each deliver value in their domains.

But profiling tools share one fatal flaw: they stop at insight.

They describe the data, but they do not enforce its quality.

They identify problems, but they do not prevent them.

They produce visibility, but not safety.

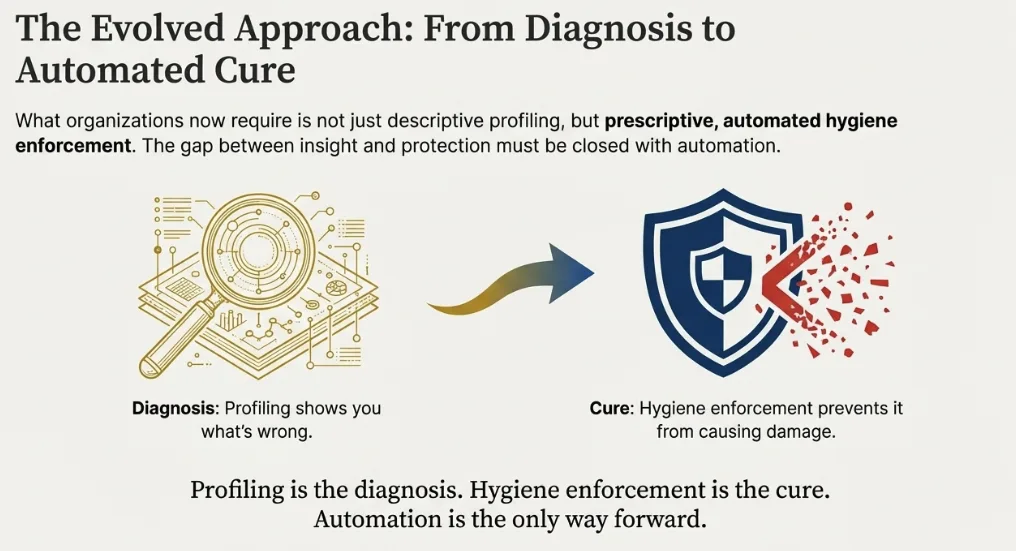

In the AI era, this gap has become intolerable. When an LLM misreads malformed data, it doesn’t ask for clarification—it hallucinates. When a model sees unexpected categories, it does not ask for help—it degrades silently. What organizations now require is not descriptive profiling, but prescriptive, automated hygiene enforcement.
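
The difference between describing and enforcing shows up clearly on a single categorical column. The sketch below learns the set of observed values from a profiled baseline and then turns that description into a check that flags unseen categories in a new batch instead of letting them flow silently into a model. The function names and the `channel` column are hypothetical, and a real system would also need a way to approve legitimately new values.

```python
from collections import Counter


def learn_domain(baseline_values):
    """Descriptive step: record which categories the profile actually observed."""
    return frozenset(v for v in baseline_values if v is not None)


def unexpected_categories(batch_values, domain):
    """Prescriptive step: count values in a new batch that the profile never saw."""
    return Counter(v for v in batch_values if v is not None and v not in domain)


channel_domain = learn_domain(["web", "store", "phone", "web"])
new_batch = ["web", "app", "store", "APP"]
surprises = unexpected_categories(new_batch, channel_domain)
if surprises:
    print(f"Blocking batch: unseen channel values {dict(surprises)}")
```

Note that `app` and `APP` are reported separately: the check does not guess whether they are the same category, which is precisely the kind of ambiguity a model would otherwise absorb without comment.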

This is where the landscape changes.

The Comparison: Profiling Depth vs. Hygiene Automation

Profiling shows you what’s wrong.

Hygiene automation prevents it from causing damage.

AI needs both.

The Breakthrough: Automated Data Hygiene Testing From Profiling Signals

DataOps Data Quality TestGen is the only open-source tool that directly links profiling to action. TestGen doesn’t merely generate metadata—it uses that metadata to automatically create over 120 data quality tests and apply 25+ hygiene issue checks, including missingness, null anomalies, duplicates, type mismatches, pattern violations, value-range issues, cardinality surprises, referential failures, and distribution shifts.
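
The core idea of deriving tests from profiling results instead of writing them by hand can be sketched in a few dozen lines. This is not TestGen's implementation: the `Check` type, the four check kinds, and the `max_categories` threshold are hypothetical simplifications. They show how observed statistics (no nulls, all values distinct, a min/max range, a small set of categories) can become enforceable rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Check:
    column: str
    kind: str  # "not_null", "unique", "range", or "accepted_values"
    params: tuple = ()


def derive_checks(column, values, max_categories=5):
    """Turn what one profiling pass observed into candidate checks."""
    non_null = [v for v in values if v is not None]
    distinct = set(non_null)
    checks = []
    if values and len(non_null) == len(values):
        checks.append(Check(column, "not_null"))
    if non_null and len(distinct) == len(non_null):
        checks.append(Check(column, "unique"))
    numeric = all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in non_null)
    if non_null and numeric:
        checks.append(Check(column, "range", (min(non_null), max(non_null))))
    elif 0 < len(distinct) <= max_categories:
        checks.append(Check(column, "accepted_values", tuple(sorted(distinct, key=str))))
    return checks


def run_check(check, values):
    """Return the offending values for one check; an empty list means it passed."""
    if check.kind == "not_null":
        return [v for v in values if v is None]
    non_null = [v for v in values if v is not None]
    if check.kind == "unique":
        seen, dupes = set(), []
        for v in non_null:
            if v in seen:
                dupes.append(v)
            seen.add(v)
        return dupes
    if check.kind == "range":
        low, high = check.params
        return [v for v in non_null if not low <= v <= high]
    if check.kind == "accepted_values":
        return [v for v in non_null if v not in check.params]
    raise ValueError(f"unknown check kind: {check.kind}")


# Profile a (hypothetical) baseline, then enforce the derived checks on a new batch.
baseline = {
    "status": ["paid", "paid", "refunded", "shipped"],
    "amount": [10.0, 25.5, 10.0, 40.0],
}
new_batch = {"status": ["paid", "chargeback", None], "amount": [12.0, -5.0, 41.0]}

for column, values in baseline.items():
    for check in derive_checks(column, values):
        failures = run_check(check, new_batch[column])
        print(column, check.kind, "PASS" if not failures else f"FAIL {failures}")
```

No rule in this example was written by hand, yet the new batch fails on a null status, an unseen `chargeback` status, and two out-of-range amounts. A production generator would need smarter heuristics than these, such as tolerances around observed ranges and minimum sample sizes before inferring uniqueness.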

TestGen is the first open-source engine that turns profiling into predictive protection.

It creates a continuous, automated quality shield around your entire data estate—one that does not require humans to write rules, maintain YAML, or anticipate every way data can fail. In effect, it elevates profiling from passive understanding to proactive AI safety.
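
Distribution shift, one of the hygiene categories named earlier, is a good example of a check that only makes sense when profiling runs continuously: each new batch is compared against a profiled baseline. A minimal sketch uses the population stability index (PSI) over a categorical column. PSI is a widely used drift statistic swapped in here for illustration, not necessarily what TestGen computes, and the 0.25 alert threshold is a common rule of thumb rather than a standard.

```python
import math
from collections import Counter


def category_shares(values, categories, floor=1e-4):
    """Share of each category, floored so absent categories do not produce log(0)."""
    counts = Counter(values)
    total = len(values)
    return {c: max(counts[c] / total, floor) for c in categories}


def population_stability_index(baseline, current):
    """PSI = sum over categories of (actual - expected) * ln(actual / expected)."""
    categories = set(baseline) | set(current)
    expected = category_shares(baseline, categories)
    actual = category_shares(current, categories)
    return sum(
        (actual[c] - expected[c]) * math.log(actual[c] / expected[c])
        for c in categories
    )


PSI_ALERT = 0.25  # rule-of-thumb threshold for a significant shift (assumption)

baseline = ["web"] * 50 + ["store"] * 50
today = ["web"] * 80 + ["store"] * 20
psi = population_stability_index(baseline, today)
print(f"PSI = {psi:.3f}", "ALERT" if psi > PSI_ALERT else "ok")
```

A 50/50 split drifting to 80/20 gives a PSI of roughly 0.42, well above the alert line, even though every individual value is perfectly valid. Numeric columns would first be bucketed, for example into baseline deciles, before applying the same formula.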

Data leaders must now treat data quality as a continuously running, always-on AI safety system. That means embracing deep profiling across the entire enterprise, shifting from descriptive analysis to prescriptive hygiene checks, and eliminating manual quality processes wherever possible. Traditional testing cannot scale to the shape or speed of AI-driven data ecosystems.

Profiling is the diagnosis. Hygiene enforcement is the cure. Automation is the only way forward.

Final Word: Data Must Be Understood & Clean Before It Is Powerful

In 2026, data quality has become a matter of AI integrity, decision integrity, and business integrity. Open-source profiling tools remain essential for visibility, but visibility without action is no longer enough. Only DataOps Data Quality TestGen turns profiling into automated enforcement, creating the guardrails required to operate safely in an AI-driven world.

Profiling is insight. Hygiene is protection. DataOps Data Quality TestGen is both.