Business analysts often find themselves in a no-win situation with constraints imposed from all sides. Their business unit colleagues ask an endless stream of urgent questions that require analytic insights. Business analysts must rapidly deliver value and simultaneously manage fragile and error-prone analytics production pipelines. Data tables from IT and other data sources require a large amount of repetitive, manual work to be used in analytics. In business analytics, fire-fighting and stress are common. Instead of throwing people and budgets at problems, DataOps offers a way to utilize automation to systematize analytics workflows. DataOps consolidates processes and workflows into a process hub that curates and manages the workflows that drive the creation of analytics. A DataOps process hub offers a way for business analytics teams to cope with fast-paced requirements without expanding staff or sacrificing quality.

Analytics Hub and Spoke

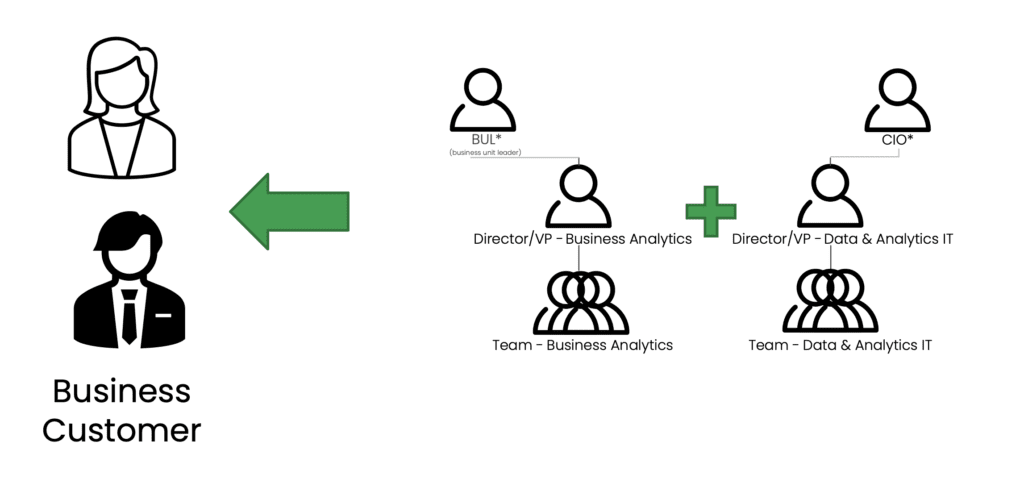

The data analytics function in large enterprises is generally distributed across departments and roles. Teams under the CDO and CAO are sometimes separate from the CIO. For example, teams working under the VP/Directors of Data Analytics may be tasked with accessing data, building databases, integrating data, and producing reports. Data scientists derive insights from data while business analysts work closely with and tend to the data needs of business units. Business analysts sometimes perform data science, but usually, they integrate and visualize data and create reports and dashboards from data supplied by other groups. Figure 1 below shows how contributions from two teams – an IT team on the right and a business analytics (BA) team on the left – must combine to create the analytics that serve business unit users. Some organizations use the metaphor of a “hub and spoke.” A centralized data or IT team maintains data platforms utilized by self-service users spread across the organization. The effort of both of these teams has to combine seamlessly to serve business customers.

Figure 1: Data analytics challenge – distributed teams must deliver value in collaboration.

If you work in the data industry, you’ve likely encountered this pattern of self-service, business unit teams and centralized data and analytic IT teams. In this post, we will focus on the business analytic teams, working directly for lines of business, and their unique challenges. The business analyst’s goal is to create original insight for their customer, but they spend far too much time engaging in repetitive manual tasks.

The business analyst is embedded in the business unit. They are collocated with business customers and identify with their objectives and challenges. The business analyst is on the front lines of the business unit’s data usage. If anything goes wrong in data analytics, the business analyst is the first to know. They see the data errors, the production errors, the broken reports and the inaccurate dashboards. As a result, they deal with stress, firefighting and heroism firsthand.

Business Analytic Challenges

Business analytic teams have ongoing deliverables – a dashboard, a PowerPoint, or a model that they refresh and renew. Business analysts live under constant pressure to deliver. Business analysts must quickly update their analytics production deliverables as business conditions change. Customers and market forces drive deadlines and timeframes for analytics deliverables regardless of the level of effort required. It may take six weeks to add a new schema, but the VP may say she needs it for this Friday’s strategy summit. If the IT or data engineering team can’t respond with an enabling data platform in the required time frame, the business analyst does the necessary data work themselves. This ad hoc data engineering work often means coping with numerous data tables and diverse data sets using Alteryx, SQL, Excel or similar tools.

BA teams often do not receive a data platform tailored to their needs. They are given a bunch of raw tables, flat files and other data. Out of perhaps hundreds of tables, they have to pull together the ten tables that matter to a particular question. Tools like Alteryx help a little, but business analysts still perform an enormous amount of manual work. When the project scope and schedule are fixed, managers have to add more staff to keep up with the workload.

Sometimes BA teams turn to IT, which may have its drawbacks. Some IT teams are fantastic. They focus on making their customer, the business analyst teams, successful. Another kind of IT team is “less helpful.” These IT partners are unfortunately all too common in data-driven organizations. They prepare artifacts and throw them over the wall to the business analytics team. They are less oriented toward delivering customer value and more focused on servicing their internal process or internal software development lifecycle. These groups tend to be more insulated from their customer’s actual needs, so they take many months to deliver, and when a project does get done, it usually requires rework. A business analyst team receiving deliverables from an IT organization like that doesn’t trust what they receive and ends up having to QA it thoroughly, which also involves a lot of effort.

We’ve talked to business analytic teams extensively about their challenges during our years working in the data analytics industry. Business analyst teams want to serve their internal customers better and faster but often live with a perpetual feeling of failure. An analyst may work hard to bring original insights to their customer, but it’s common for a business colleague to react to analytics with a dozen follow-up questions. A successful analytics deliverable just generates more questions and more work. Imagine working in a field where a “job well done” generates an exponential amount of additional work. It’s hard to feel good about yourself when you leave a meeting with a list of action items, having shown off a new deliverable. Many business analysts feel like failures.

Many business analytic teams feel that the responsibility for delivering insights to the business rests on their shoulders and they are not adequately supported by IT, the gatekeepers of data. Analysts feel like IT is cutting them off at the knees. As a result, analytic teams are always behind the curve and very reactive with business customers. Business analytic teams field an endless stream of questions from marketing and salespeople and they can’t get ahead. They need high-quality data in an answer-ready format to address many scenarios with minimal keyboarding. What they are getting from IT and other data sources is, in reality, poor-quality data in a format that requires manual customization. They’re spending 80% of their time performing tasks that are necessary but far removed from the insight generation and innovation that their business customers need.

DataOps Process Hub

DataOps provides a methodology and a paradigm to understand and address these challenges. There’s a recent trend toward people creating data lake or data warehouse patterns and calling it data enablement or a data hub. DataOps expands upon this approach by focusing on the processes and workflows that create data enablement and business analytics. DataOps is effectively a “process hub” that creates, manages and monitors the data hub.

The BA team needs to take thousands of files or tables and synthesize them into the ten tables that matter the most for a particular business question. How do you get that to happen as quickly and robustly as possible? How do you enable the analytics team to update existing analytics with a schema change or additional data in a day or an hour instead of weeks or months?

The key to enabling rapid, iterative development is a process hub, which complements and automates the data hub. A process hub, in essence, automates every possible workflow related to the data hub. A robust process hub is expressly designed to enable the data team to minimize the time spent maintaining working analytics and improve and update existing analytics with the least possible effort. Many large enterprises allow consultants and employees to keep tribal knowledge about the data architecture in their heads. The process hub instead captures these informal workflows as code that can be executed on demand. Tests that verify and validate data flowing through the data pipelines are executed continuously. An impact review test suite executes before new analytics are deployed. Production analytics are revision controlled, and new analytics are tested and deployed seamlessly. When people rotate in and out of roles in the data organization, all of the intellectual property related to business analytics is retained (in Domino models, SQL, reports, tests, scripts, etc.) to be shared, reused, curated and continuously improved.
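To make the idea of tests that verify and validate data flowing through a pipeline more concrete, here is a minimal Python sketch. The table layout, the column names (`customer_id`, `revenue`) and the three checks are hypothetical and chosen only for illustration; a real process hub would define checks that match its own data.

```python
from typing import Callable, Optional

Row = dict
# A check inspects a table (a list of rows) and returns an error message,
# or None when the data passes.
Check = Callable[[list], Optional[str]]


def min_row_count(minimum: int) -> Check:
    """Fail when the table has fewer rows than expected (e.g. a truncated load)."""
    def check(rows: list) -> Optional[str]:
        if len(rows) < minimum:
            return f"expected at least {minimum} rows, got {len(rows)}"
        return None
    return check


def no_missing(column: str) -> Check:
    """Fail when any row has no value for the given column."""
    def check(rows: list) -> Optional[str]:
        missing = sum(1 for row in rows if row.get(column) is None)
        if missing:
            return f"{missing} row(s) missing '{column}'"
        return None
    return check


def non_negative(column: str) -> Check:
    """Fail when a numeric column contains negative values."""
    def check(rows: list) -> Optional[str]:
        negative = sum(
            1 for row in rows
            if isinstance(row.get(column), (int, float)) and row[column] < 0
        )
        if negative:
            return f"{negative} negative value(s) in '{column}'"
        return None
    return check


def run_checks(rows: list, checks: list) -> list:
    """Run every check and collect failure messages; an empty list means the data passed."""
    failures = []
    for check in checks:
        message = check(rows)
        if message is not None:
            failures.append(message)
    return failures


# Hypothetical sample data with two deliberate problems.
sales = [
    {"customer_id": "C1", "revenue": 120.0},
    {"customer_id": None, "revenue": 75.5},
    {"customer_id": "C3", "revenue": -10.0},
]
sales_checks = [min_row_count(1), no_missing("customer_id"), non_negative("revenue")]
failures = run_checks(sales, sales_checks)
for message in failures:
    print("DATA TEST FAILED:", message)
```

Because each check is an ordinary function kept under revision control, the same suite can run on every data refresh and again before new analytics are deployed.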

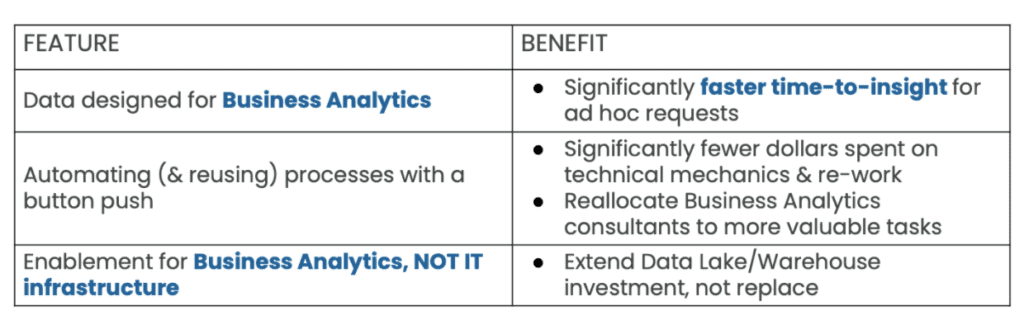

Table 1: Process hub features and benefits

We can summarize the benefits of the process hub as follows:

- Significantly faster time-to-insight for ad hoc requests – The business analytics team gets more immediate insight because the process hub provides them with a data representation that they both control and can easily modify for ad hoc requests.

- Less rework and maintenance – Automation enables the team to reallocate time previously spent on technical mechanics and rework to more valuable tasks, such as responding to customer inquiries.

- Enablement for business analytics – The focus of the process hub is to extend, not replace, a data hub (i.e., IT-created infrastructure such as a data lake/warehouse). The process hub tailors the generic data hub to the custom, evolving needs of the business analytics team.

The process hub effectively frees your organization’s team of highly trained analysts from performing repetitive, manual tasks. The process hub changes the operating model of how business analytics teams work. Sometimes it helps to speak in concrete terms, so let’s briefly discuss the roles required on a business analytics team that uses a process hub.

Process Hub Roles and Responsibilities

Business Analyst / Data Scientist – Whatever the title, this person works with data to create innovative insights for customers in the business unit. This person is chartered with presenting data in a way that makes sense to their non-technical, non-analytic business colleagues. The work product could be a chart, graph, model or dashboard. This role typically already exists, but the process hub enables this person to stay productively focused on the value-added aspects of working with data. Two other roles make the business analyst successful.

Analytics Engineer – This “data doer” bridges the gap between what IT delivers and what business analytics requires. They take the data in an IT data hub and build data representations that business analysts need to do their work. If the Analytics Engineer performs this task manually, productivity has only advanced incrementally. However, with the help of a process hub and the DataOps Engineer, the work of the Analytics Engineer spurs an order of magnitude improvement in business analyst productivity.

DataOps Engineer – Knowing that the business analyst will always have follow-up questions and keep iterating on analytics, we need data representations that analysts can quickly and robustly modify. The DataOps Engineer creates the technical environment, including automated workflows and testing, so that the Analytics Engineer and Business Analyst stay focused on their customers. The DataOps Engineer creates and manages the DataOps process hub that makes the invisible visible. Processes and workflows that used to be stored in people’s heads are instantiated as code. DataOps Engineering is also about collaboration through shared abstraction. In practice, this means taking valuable ‘nuggets’ of code that everyone creates (e.g., ETL, SQL, Python, XML, Tableau workbooks, etc.) and integrating them into a shared system: inserting nuggets into pipelines, creating tests, running the data factory, automating deployments, working across people/teams, measuring success and enabling self-service.

The DataOps process hub is a shared abstraction that enables scalable, fast, iterative development. Another way to think about it is simply as automation of end-to-end lifecycle processes and workflows: production orchestration, production data monitoring/testing, self-service environments, development regression and functional tests, test data automation, deployment automation, shared components, and process measurement.
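One way to picture "inserting nuggets into pipelines" and "creating tests" is the small Python sketch below. The `Step` and `Pipeline` classes, the step names and the sample rows are all hypothetical; they stand in for whatever orchestration tooling a team actually uses and are not any particular product's API.

```python
from dataclasses import dataclass, field
from typing import Callable

Table = list  # a table is modeled as a list of row dictionaries


@dataclass
class Step:
    """One 'nugget' of work plus the test that guards its output."""
    name: str
    transform: Callable[[Table], Table]
    test: Callable[[Table], bool]


@dataclass
class Pipeline:
    steps: list = field(default_factory=list)

    def add(self, name: str, transform: Callable[[Table], Table],
            test: Callable[[Table], bool]) -> "Pipeline":
        """Register a step; returning self allows calls to be chained."""
        self.steps.append(Step(name, transform, test))
        return self

    def run(self, data: Table) -> Table:
        """Run steps in order and stop as soon as a step's test fails."""
        for step in self.steps:
            data = step.transform(data)
            if not step.test(data):
                raise ValueError(f"test failed after step '{step.name}'")
        return data


# Two hypothetical nuggets contributed by different team members.
def drop_incomplete(rows: Table) -> Table:
    return [row for row in rows if row.get("region") is not None]


def add_margin(rows: Table) -> Table:
    return [{**row, "margin": row["revenue"] - row["cost"]} for row in rows]


pipeline = (
    Pipeline()
    .add("drop_incomplete", drop_incomplete, lambda rows: len(rows) > 0)
    .add("add_margin", add_margin, lambda rows: all("margin" in row for row in rows))
)

raw = [
    {"region": "East", "revenue": 100, "cost": 60},
    {"region": None, "revenue": 50, "cost": 20},
]
result = pipeline.run(raw)
print(result)
```

Pairing every transformation with a test means a bad intermediate table stops the run at the step that produced it, rather than surfacing later as a broken report.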

Stop Firefighting

The DataOps process hub helps make workflows and processes visible and tangible. An analytics engineer, a visualization analyst and a data scientist all have a place where they see their work come together. The process hub helps them resolve issues, automate processes and improve workflows. DataOps is fundamentally about automation, and analytics engineering focuses on creating valuable datasets that make analysts successful. A process hub helps the team stop moving from one emergency to the next. A lot of business analytic teams are constantly firefighting. DataOps is about automating tasks, so you aren’t repeating manual processes. DataOps also focuses on abstracting and generalizing operations, so you aren’t managing multiple versions of the same code or artifacts.

DataOps Engineering is about designing the system that creates analytics more robustly and efficiently. DataOps advances these aims by:

- Easing the path to production and ensuring scalability.

- Streamlining processes for automation, efficiency and reuse.

- Moving assumptions, business rules and code upstream.

- Improving reliability, consistency and efficiency.

- Building out a flexible framework.

- Curating and managing production processes.

- Building out a production toolkit.

- Creating shortcuts for future tasks.

- Collaborating instead of blindly adhering to policies and procedures.

It’s prudent to assume that you will never completely avoid data emergencies. DataOps scales your processes to make the next urgent deadline easier to meet.

Managing a DataOps Team

A lot of industries talk about bringing data together in data hubs. DataOps adds a process hub to consolidate data workflows. The process hub augments the data hub to centralize the people that act upon data in one place. Managing and curating the processes acting upon data is as important as the data itself. The process hub enables process scalability, productivity and project success. From a macro perspective, the process hub enables the business analytic teams to gain leverage to be more successful.

From the manager’s unique perspective, the process hub helps team and organization leaders manage data development and operations in a disciplined, consistent and repeatable manner. Are processes working? Are business customers happy with what the team is producing? Is the team fully productive? Can people move seamlessly between teams and projects? Can decentralized staff, consultants and vendors work together effectively? How can the teams gain consistency? How can the teams ensure collaboration? How does the team take a long-term view and not create technical debt? How do the teams curate reusable components (or shortcuts) that span projects? How can the organization simplify, abstract and generalize data patterns? The process hub helps answer these questions by collecting metrics on all the processes instantiated as code.
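As a rough illustration of collecting metrics on processes instantiated as code, the Python sketch below records the outcome and duration of every run of a process. The `tracked` decorator, the in-memory `RUN_LOG` store and the `refresh_dashboard` function are hypothetical names used only for this example; a real process hub would likely persist such metrics rather than hold them in memory.

```python
import time
from collections import defaultdict
from functools import wraps

# Hypothetical in-memory store: process name -> list of run records.
RUN_LOG = defaultdict(list)


def tracked(name: str):
    """Decorator that records success/failure and duration for each run."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            ok = False
            try:
                result = func(*args, **kwargs)
                ok = True
                return result
            finally:
                # Runs whether the process succeeded or raised an exception.
                RUN_LOG[name].append(
                    {"ok": ok, "seconds": time.perf_counter() - start}
                )
        return wrapper
    return decorator


def summarize(name: str) -> dict:
    """Summarize run count, success rate and average duration for one process."""
    runs = RUN_LOG.get(name, [])
    if not runs:
        return {"runs": 0, "success_rate": None, "avg_seconds": None}
    return {
        "runs": len(runs),
        "success_rate": sum(1 for run in runs if run["ok"]) / len(runs),
        "avg_seconds": sum(run["seconds"] for run in runs) / len(runs),
    }


@tracked("refresh_dashboard")
def refresh_dashboard(rows: list) -> int:
    """Stand-in for a real workflow; fails when there is no data."""
    if not rows:
        raise ValueError("no data to refresh")
    return len(rows)


refresh_dashboard([1, 2, 3])      # a successful run
try:
    refresh_dashboard([])         # a failed run, still recorded
except ValueError:
    pass

summary = summarize("refresh_dashboard")
print(summary["runs"], summary["success_rate"])
```

Summaries like this give a manager a starting point for questions such as "Are processes working?" without relying on anecdotes.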

Managers are frequently asked to produce “more” deliverables with “less” budget. This zero-sum dilemma comes down to increasing productivity so that a business analytics team can accomplish more with the same level of resources. The only reasonable response to this challenge is to focus on automation, quality and process efficiency. Put your processes in one place. Improve them. Curate, manage and automate them. We’ve seen organizations attain incredible productivity gains using a process hub – on the order of ten times more productivity. These impressive gains stem from spending more time focused on customer requirements and working in a more Agile way.

Conclusion

DataOps is fundamentally a philosophy and methodology of “business-processes-as-code.” A process hub keeps you from continuously throwing additional staff at problems. It utilizes automation to make BA teams more agile and responsive to the rapid-fire requests from business units. With a DataOps process hub, managers of BA teams can oversee a “well-oiled machine” of analysts iterating efficiently on analytics to address evolving business challenges.