Your team has gone full ostrich. Heads buried deep in the sand, convinced that if they can’t see the production data errors, those errors must not exist. The pipeline is deployed. The tests passed. Job done, right?

Meanwhile, you’re the one fielding angry Slack messages from business users who found garbage in their reports, and you’re starting to feel like the only person who actually cares about what happens after code hits production. The frustrating part? Your team isn’t lazy or incompetent—they’re just overwhelmed with development work and genuinely don’t realize how bad things have gotten downstream. The ostrich approach feels safe when you’re already buried in sprint work.

You can coax those heads out of the sand without a big confrontation or a pile of extra work. The “deploy and forget” ostrich mindset is one of the most common, and most corrosive, patterns in data engineering teams. The good news? It’s fixable, and it doesn’t require a massive cultural overhaul or months of extra effort. It starts with making the invisible impossible to ignore.

Your team sees its job as done once the pipeline code is in production and has passed unit and manual testing. They don’t feel responsible for data quality problems that pop up after deployment, whether in the staged data, the dimensional marts, or wherever else the data is used. Finding that bad data, which can show up every hour or every day, is just “not their job” in their eyes.

Your customers are getting hit with data errors, but the team either doesn’t realize how big a deal it is or doesn’t seem to care. They basically check out as soon as their deployment hits production. Since they’re already swamped with development work, they push back hard against taking on anything extra. Meanwhile, customers keep calling with questions and concerns about the data, which eats into team time too. And adding data quality tests to the data production process? No one has the time or the interest.

So what can you do to start a wave of change?

You’re not going to win this argument with opinions or gut feelings. Your team will push back, insist everything is fine, and point to their passing unit tests as proof. The only way to get those ostrich heads above ground is with cold, hard evidence they can’t ignore.

Step one: Gather the facts that prove there’s a problem.

Start by talking to the people who actually use the data every day. Send a quick survey to your end customers: nothing fancy, just a few pointed questions. Do they trust the data? Have they built their own shadow QA processes to work around it? (Spoiler: they probably have, and they’re not happy about it.) Next, do some archaeology on your own side. Count the data errors from the past month. Dig through tickets, bug reports, and Slack threads. Ask your team directly; you might be surprised what they remember once you start asking.
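If your tickets, bug reports, and Slack threads can be exported as simple records, a few lines of Python turn that archaeology into numbers you can put in front of the team. This sketch assumes a hypothetical `ErrorReport` record with `opened`, `source`, and `table` fields; adapt it to whatever your tracker actually exports.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ErrorReport:
    """One data error found in a ticket, bug report, or Slack thread (hypothetical fields)."""
    opened: date
    source: str  # e.g. "ticket", "bug", "slack"
    table: str   # the table or report where the bad data showed up


def summarize_last_month(reports, today):
    """Count reports from the last 30 days: total, by source, and by table."""
    cutoff = today - timedelta(days=30)
    recent = [r for r in reports if r.opened >= cutoff]
    by_source = Counter(r.source for r in recent)
    by_table = Counter(r.table for r in recent)
    return len(recent), by_source, by_table


if __name__ == "__main__":
    reports = [
        ErrorReport(date(2024, 5, 2), "ticket", "dim_customer"),
        ErrorReport(date(2024, 5, 9), "slack", "fact_orders"),
        ErrorReport(date(2024, 5, 20), "ticket", "fact_orders"),
        ErrorReport(date(2024, 3, 1), "bug", "dim_product"),  # older than 30 days, excluded
    ]
    total, by_source, by_table = summarize_last_month(reports, today=date(2024, 5, 28))
    print(f"{total} data errors in the last 30 days")
    print("By source:", dict(by_source))
    print("Worst tables:", by_table.most_common(3))
```

The per-table breakdown is often more persuasive than the total, because the tables at the top of that list are the obvious candidates for a small first experiment.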

Then go a step further and actually score your data quality. Using an open-source tool like TestGen, you can profile your data, get instant data hygiene test results, and have a data quality dashboard up and running in less than an hour—no massive implementation project, no endless configuration—just real numbers showing the current state of things.
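To make “score your data quality” concrete, here is a minimal sketch of the idea in plain Python: profile a table, turn a few hygiene checks into pass/fail results, and report the share that pass. This is not how TestGen computes its scores; the checks, the 5% null threshold, and the sample rows are hypothetical.

```python
# A minimal data hygiene score. NOT TestGen's scoring method; the checks,
# threshold, and sample rows are hypothetical illustrations.

DEMO_CUSTOMERS = [
    {"id": 1, "email": "a@example.com", "country": "US"},
    {"id": 2, "email": "", "country": "US"},               # missing email
    {"id": 2, "email": "c@example.com", "country": "FR"},  # duplicate id
    {"id": 4, "email": "d@example.com", "country": "DE"},
]


def null_rates(rows, columns):
    """Profiling step: fraction of missing (None or empty-string) values per column."""
    if not rows:
        return {c: 0.0 for c in columns}
    n = len(rows)
    return {c: sum(1 for r in rows if r.get(c) in (None, "")) / n for c in columns}


def hygiene_checks(rows, key, columns, max_null_rate=0.05):
    """Run simple hygiene checks and return {check_name: passed}."""
    results = {}
    for col, rate in null_rates(rows, columns).items():
        results[f"{col} null rate <= {max_null_rate:.0%}"] = rate <= max_null_rate
    keys = [r.get(key) for r in rows]
    results[f"{key} is unique"] = len(keys) == len(set(keys))
    return results


def hygiene_score(results):
    """Score out of 100: the percentage of checks that passed."""
    return 100 * sum(results.values()) / len(results)


if __name__ == "__main__":
    results = hygiene_checks(DEMO_CUSTOMERS, key="id", columns=["email", "country"])
    for name, passed in results.items():
        print("PASS" if passed else "FAIL", name)
    print(f"Hygiene score: {hygiene_score(results):.0f}/100")
```

A single number like this is crude, but it gives the team a baseline to compare against after the first round of fixes.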

Once you’ve got all of this—the survey results, the error history, the quality scores, the hygiene test results—share it with your team. Lay it all out. I’m willing to bet they’ll be genuinely surprised. Most engineers don’t want to ship bad data; they just don’t have visibility into what’s happening after deployment. This is your chance to turn on the lights.

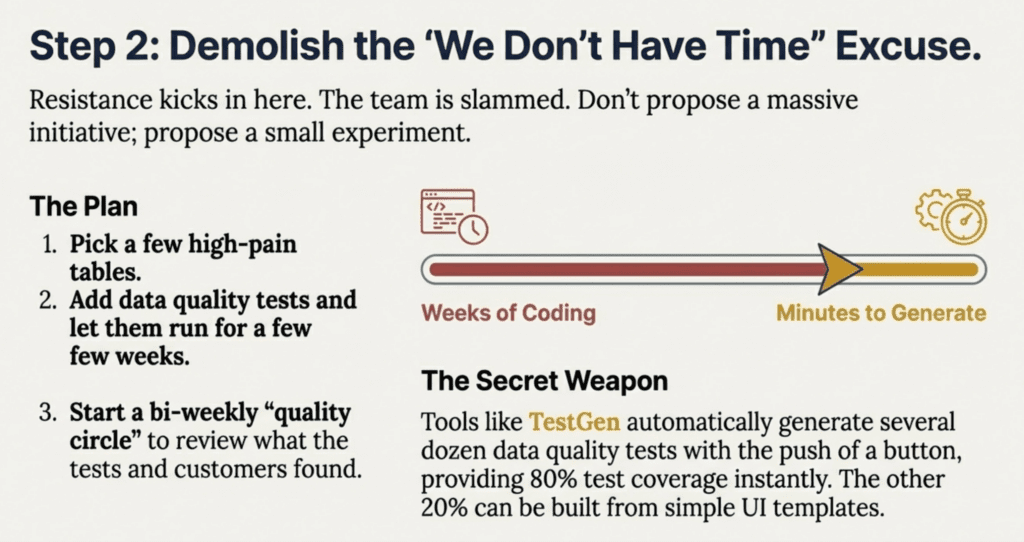

Step two: Show them that adding data quality tests isn’t the time sink they think it is.

Here’s where the resistance usually kicks in. Your team is already slammed with feature work, and the last thing they want is another “initiative” eating into their sprints. Fair enough. So don’t make it a massive initiative; make it a small experiment.

Pick a handful of tables that have been causing the most pain. Add some data quality tests and let them run for a few weeks. Watch what they catch. Then start a quality circle—a short meeting every two weeks where you review production errors together. Look at what the tests found, what customers reported, and ask a simple question: Can we create a permanent fix so this doesn’t keep happening?
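Here is a rough sketch of what “add some data quality tests” can look like for one problem table, assuming plain SQL checks run from Python against an in-memory SQLite database. The `orders` and `customers` tables, the test names, and the bad rows are hypothetical; each query counts problems, so zero means the test passes.

```python
import sqlite3

# Hypothetical data quality tests for a problem table. Each query returns a
# count of problems (offending rows, or duplicated keys for uniqueness).
TESTS = {
    "orders.order_id is unique": """
        SELECT COUNT(*) FROM (
            SELECT order_id FROM orders GROUP BY order_id HAVING COUNT(*) > 1
        )""",
    "orders.customer_id is not null":
        "SELECT COUNT(*) FROM orders WHERE customer_id IS NULL",
    "orders.amount is non-negative":
        "SELECT COUNT(*) FROM orders WHERE amount < 0",
    "orders.customer_id exists in customers": """
        SELECT COUNT(*) FROM orders o
        LEFT JOIN customers c ON o.customer_id = c.customer_id
        WHERE o.customer_id IS NOT NULL AND c.customer_id IS NULL""",
}


def run_tests(conn, tests):
    """Run every test and return {test_name: problem_count}."""
    return {name: conn.execute(sql).fetchone()[0] for name, sql in tests.items()}


def build_demo_db():
    """Create an in-memory database with a few deliberately bad rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (customer_id INTEGER PRIMARY KEY);
        CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL);
        INSERT INTO customers VALUES (1), (2);
        INSERT INTO orders VALUES
            (10, 1, 25.0),
            (11, 2, 5.0),
            (11, NULL, 12.5),  -- duplicate order_id and missing customer_id
            (12, 3, 8.0);      -- customer 3 does not exist
    """)
    return conn


if __name__ == "__main__":
    for name, problems in run_tests(build_demo_db(), TESTS).items():
        print(f"{'PASS' if problems == 0 else 'FAIL'}  {name}  ({problems} problems)")
```

Failing tests like these are exactly what a quality circle can review: each one points at a concrete question about whether a permanent upstream fix is possible.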

Here’s the secret weapon: tools like TestGen automatically generate several dozen data quality tests with the push of a button. That’s not an exaggeration. TestGen handles roughly 80% of the test coverage your team needs without anyone writing custom code. The remaining 20%, the tests unique to your business logic, can be built from simple templates right in the TestGen UI, often with input from the business partners who know the data best. The “we don’t have time” excuse evaporates quickly when you can generate meaningful test coverage in minutes instead of weeks.
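To make push-button test generation less magical, here is a rough sketch of the general idea behind profile-driven generation: profile each column, then propose tests that the current data already satisfies, so a future load that breaks them gets flagged. This is not TestGen’s algorithm or API; the three rules (not null, unique, non-negative) and the SQLite `orders` table are hypothetical stand-ins.

```python
import sqlite3


def profile_column(conn, table, column):
    """Profile one column: row count, non-null count, distinct count, minimum."""
    total, non_null, distinct, min_value = conn.execute(
        f"SELECT COUNT(*), COUNT({column}), COUNT(DISTINCT {column}), MIN({column}) FROM {table}"
    ).fetchone()
    return {"total": total, "non_null": non_null, "distinct": distinct, "min": min_value}


def generate_tests(conn, table):
    """Propose tests the current data already passes (hypothetical rules).

    Identifiers are interpolated into SQL, so use only trusted table/column names.
    """
    tests = {}
    columns = [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]
    for col in columns:
        p = profile_column(conn, table, col)
        if p["total"] == 0:
            continue  # nothing to learn from an empty table
        if p["non_null"] == p["total"]:
            tests[f"{table}.{col} is not null"] = f"SELECT COUNT(*) FROM {table} WHERE {col} IS NULL"
        if p["distinct"] == p["total"]:
            tests[f"{table}.{col} is unique"] = (
                f"SELECT COUNT(*) FROM (SELECT {col} FROM {table} "
                f"GROUP BY {col} HAVING COUNT(*) > 1)"
            )
        if isinstance(p["min"], (int, float)) and p["min"] >= 0:
            tests[f"{table}.{col} is non-negative"] = f"SELECT COUNT(*) FROM {table} WHERE {col} < 0"
    return tests


def run_tests(conn, tests):
    """Return {test_name: problem_count}; 0 means the test passes."""
    return {name: conn.execute(sql).fetchone()[0] for name, sql in tests.items()}


def build_demo_db():
    """Create an in-memory table whose current data is clean."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL, status TEXT);
        INSERT INTO orders VALUES
            (1, 100, 20.0, 'shipped'),
            (2, 100, 35.5, 'pending'),
            (3, 101, 20.0, 'shipped');
    """)
    return conn


if __name__ == "__main__":
    conn = build_demo_db()
    tests = generate_tests(conn, "orders")
    print(f"Generated {len(tests)} tests:", *tests, sep="\n  ")
    # Simulate a bad load arriving after the tests were generated.
    conn.execute("INSERT INTO orders VALUES (3, NULL, -1.0, 'lost')")
    for name, problems in run_tests(conn, tests).items():
        print(f"{'PASS' if problems == 0 else 'FAIL'}  {name}")
```

Rules like these only capture what the data looks like today, which is why the business-specific tests still need a human (and often a business partner) to define them.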

Step three: Show them that production data quality testing isn’t a burden—it’s a gift.

This is where the mindset shift happens. A 2022 survey by Monte Carlo and Wakefield Research found that data engineers spend 40% of their time, two full days per week, evaluating or checking data quality. That’s not a typo. Two days of every workweek go to chasing down bad data instead of building new things. Worse, 74% of data engineers said stakeholders are usually the first to surface data quality issues, meaning most teams are stuck in reactive mode, responding to complaints rather than catching problems before anyone notices.

The math is simple: teams that catch data errors proactively in production spend less time in firefighting mode. An IDC study found that the time developers spent on software defects fell by 53% after quality improvements, with better test data quality cited as the main cause. That reclaimed time is what lets your team get back to the work they actually want to do.

And the business case extends beyond engineering productivity. Gartner research shows that poor data quality costs organizations an average of $12.9 million per year, and Gartner also warns that poor data quality practices sow customer distrust. According to Accenture, only one-third of executives trust their data enough to derive value from it. When your stakeholders can’t trust the numbers, they stop using them.

The flip side? Organizations that invest in data quality see real results. McKinsey research shows that companies with data governance programs report 15-20% higher operational efficiency. In one case study published by CastorDoc, an e-commerce retailer that implemented a data quality initiative saw a 20% reduction in customer churn and a 300% ROI within the first year.

That’s the real pitch: caring about production data quality doesn’t add to your team’s workload. It’s the thing that finally gets them off the treadmill.

Conclusion

Getting your team to pull their heads out of the sand isn’t about shame or blame—it’s about showing them there’s a better way. When they can spot data problems before customers do, when the same issues stop breaking things week after week, and when those dreaded stakeholder calls turn into the occasional “hey, nice work”—suddenly, caring about production quality doesn’t feel like a burden anymore. It feels like the thing that makes their jobs less stressful.

Start small, gather the evidence, and let the results do the convincing. Your team didn’t get into data engineering to disappoint people. They just need a reason to look up.

Frequently Asked Questions: “What to do when your team doesn’t care about production data errors”

What evidence do you have that “Teams that run data quality tests in production spend less time responding to customer requests and more time on development work”?

The evidence here is strong:

A 2022 survey by Monte Carlo and Wakefield Research of 300+ data professionals found that data engineers spend 40% of their time, equivalent to two days per week, evaluating or checking data quality. Additionally, more than half of respondents ranked building or fixing pipelines as the task that takes up the most time in their day. (Sources: VentureBeat, Business Wire)

The Harvard Business Review reports that 50% of a data practitioner’s time is spent identifying, troubleshooting, and fixing data quality, integrity, and reliability issues. Forrester found that 40% of a data analyst’s time is spent vetting and validating analytics for data quality issues. (Source: Monte Carlo)

Research from Thoughtworks suggests that data teams spend 30-40% of their time on data quality issues, a significant amount of time they could spend on revenue-generating activities like creating better products and features. (Source: Thoughtworks)

According to an IDC study, the time developers spent on software defects was reduced by 53% after implementing quality improvements. The primary cause cited was improved test data quality. (Source: LinkedIn)

The same Monte Carlo survey found that 74% of data engineers say data quality issues are surfaced first by stakeholders, meaning most teams are reactive rather than proactive, constantly responding to complaints rather than building new things. (Source: Business Wire)

A DataKitchen survey found a workforce that feels taxed beyond reasonable expectations and is calling for relief. In fact, 79% of data engineers have considered leaving the industry entirely. (Source: DataKitchen)

What evidence do you have for “Organizations that run data quality tests in production report increased customer trust and greater customer satisfaction”?

Gartner research director Mei Yang Selvage says that poor data quality practices “undermine digital initiatives, weaken their competitive standing and sow customer distrust.” Conversely, “innovative organizations like Airbnb and Amazon are using good quality data to allow them to know who their customers are, where they are, and what they like.” (Source: Gartner)

According to the Gartner report “Measuring the Business Value of Data Quality,” organizations continue to experience “customer satisfaction issues” when data quality goes unmanaged. The impact on customer satisfaction undermines a company’s reputation, as customers can share their negative experiences on social media. (Source: Anodot)

A case study from CastorDoc describes Company XYZ, an e-commerce retailer that implemented a data quality improvement initiative, resulting in a 20% reduction in customer churn, a 15% increase in revenue from personalized marketing campaigns, and a 1% increase in customer satisfaction. The ROI on the data quality initiative was calculated at 300% within the first year. (Source: CastorDoc)

Accenture found (2019) that only one-third of executives trust their data sufficiently to derive value from it, highlighting how widespread the data trust problem really is. (Source: Agile Data)

McKinsey research shows that companies with data governance programs report 15-20% higher operational efficiency. Data quality also directly affects customer trust and brand reputation. (Source: Integrate.io)

TL;DR: What are the five key points of this article?

The “deploy and forget” mindset is a visibility problem, not a laziness problem. Teams aren’t ignoring production data errors because they don’t care—they simply don’t see what’s happening downstream after deployment. The fix starts with making the invisible visible through surveys, error counts, and data quality dashboards.

Evidence beats arguments. You won’t convince your team there’s a problem with opinions or gut feelings. Cold, hard data—customer surveys, ticket counts, data quality scores—is what gets ostrich heads above ground.

Data quality testing doesn’t have to be a time sink. Tools like TestGen can auto-generate 80% of the needed test coverage in minutes, not weeks. The “we don’t have time” objection evaporates when you can run a small experiment on a few problem tables rather than launch a massive initiative.

Proactive testing reduces workload; it doesn’t add to it. Data engineers currently spend 40% of their time firefighting bad data. Teams that catch errors before stakeholders do spend less time in reactive mode and more time on work they actually enjoy.

The ROI is tangible and measurable. Poor data quality costs organizations $12.9M per year on average (Gartner), while companies with data governance programs report 15-20% higher operational efficiency (McKinsey), and one published case study reported a 20% reduction in customer churn.

How can teams effectively transition from a reactive to a proactive data culture?

Make the problem undeniable. Gather evidence your team can’t ignore: survey end users about their trust in the data, count recent data errors from tickets and Slack threads, and use a tool like TestGen to score your data quality and stand up a dashboard in under an hour. Share the results. Most engineers will be surprised.

Start small and prove it’s not a time sink. Pick a few problem tables, add data quality tests, and run them for a few weeks. TestGen automatically generates dozens of tests with the push of a button—delivering 80% test coverage without custom code. The remaining 20% can be built from simple templates. Start a “quality circle” every two weeks to review what the tests catch and create permanent fixes.

Let the results shift the mindset. When the team sees they’re catching issues before stakeholders complain—and spending less time firefighting—production data quality stops feeling like extra work and becomes the thing that makes their jobs easier. The key is evidence first, small experiments second, and letting the wins speak for themselves.

How can I start today? How can automated tools reduce the burden of data quality test generation?

Install DataOps Data Quality TestGen. TestGen is 100% open source, so please install it on-prem and use it in production. If it works for you, our reasonably priced enterprise version adds support for multiple users and database connections. Here’s how to get started:

First, you can install DataOps Data Quality TestGen on your computer. It installs with demo data, and you can do a 10-minute quickstart walkthrough.

Second, our Data Quality and Observability Certification is available and free.

Third, our Slack channel is a great place to get support.